Silicon Valley Power Plays Turn Into Cooking Contests? Zuckerberg Once Cooked Soup to Recruit, OpenAI Says the Calm Is Just for Show

When the Clam and the Snipe Fight, the Fisherman Profits

Sometimes I wish we, as users, could be that fisherman: the harder model developers fight, the faster we get to use better models.

---

Timeline of “Red Alerts”

- December 22, 2022 – Three weeks after ChatGPT’s launch, Google became the first tech giant to issue a “red alert” about the threat posed by OpenAI.

- Yesterday – Just two weeks after Google launched Gemini 3 (which saw rapid adoption), OpenAI issued its first “red alert.”

Initially, many thought OpenAI was overreacting. Comments like “pride goes before a fall” and “wins and losses are part of warfare” circulated. Upon reflection, this alert might be aimed more at investors: if OpenAI loses its lead, its profitability timeline (currently projected for 2030) could stretch even further.

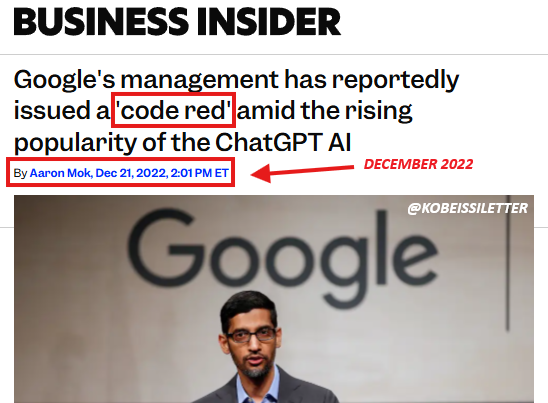

▲ Gemini app downloads are quickly catching up to ChatGPT.

---

OpenAI’s Countermeasures

Leaked plans reveal:

- A new reasoning model launching next week that reportedly outperforms Gemini 3 on internal benchmarks.

- A counteroffensive model codenamed “Garlic.”

But realistically:

- OpenAI will release models superior to Gemini 3.

- Google has Gemini 4 and Gemini 5 in the pipeline.

---

The Silicon Valley AI Drama

This past year:

- Early Year: DeepSeek R1’s surprise debut disrupted the industry.

- Mid-Year: Zuckerberg’s aggressive talent acquisition spree with astronomical salaries.

- Year-End: The battle returned to core model performance.

---

Mark Chen on the AI “War”

OpenAI’s Head of Research, Mark Chen, shared surreal anecdotes in a podcast interview:

- Zuckerberg personally delivered soup to researchers in an attempt to recruit them.

- Beyond gossip, Chen discussed Gemini 3, scaling debates, DeepSeek R1's influence, compute allocation, and AGI timelines.

Mark Chen’s Profile:

- Math competition veteran

- MIT graduate

- Former Wall Street high-frequency trader

- Joined OpenAI in 2018 to work with Ilya Sutskever

Chen says he currently works until 1 or 2 AM every day and has had no social life for the past two weeks.

---

Key Highlights from Chen’s Interview

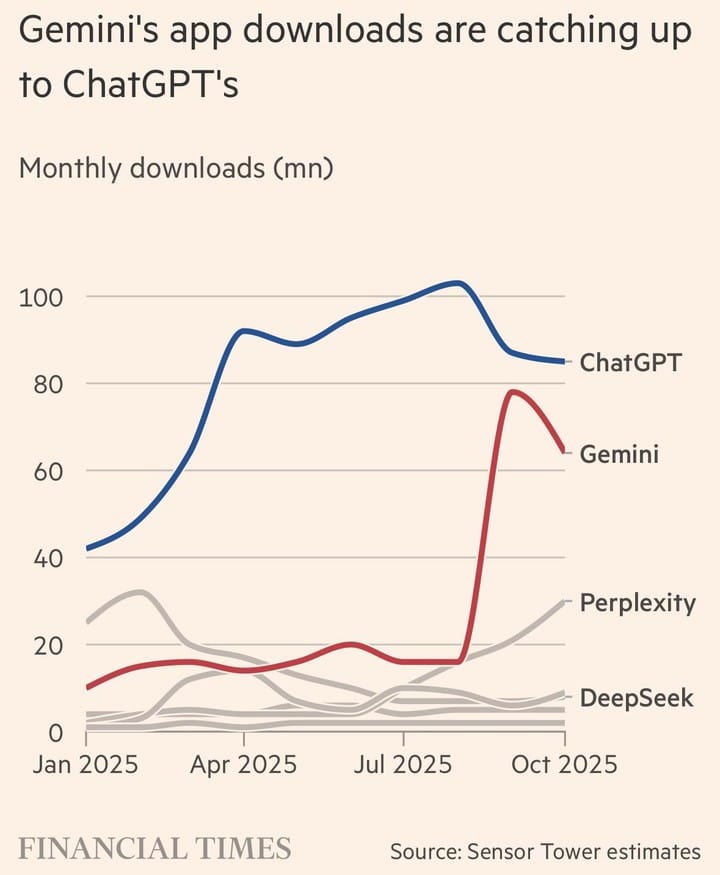

On Gemini 3: “We’re Really Not Worried.”

- Acknowledged Gemini 3 as a good model and noted Google’s progress.

- On SWE-bench, Gemini 3 still lags in data efficiency.

▲ Gemini 3.0 Pro’s SWE-bench score is 0.1 percentage points lower than GPT‑5.1’s.

Quote from Chen:

> Part of Sam’s job is to inject urgency and speed. As managers, we constantly infuse urgency into the organization.

---

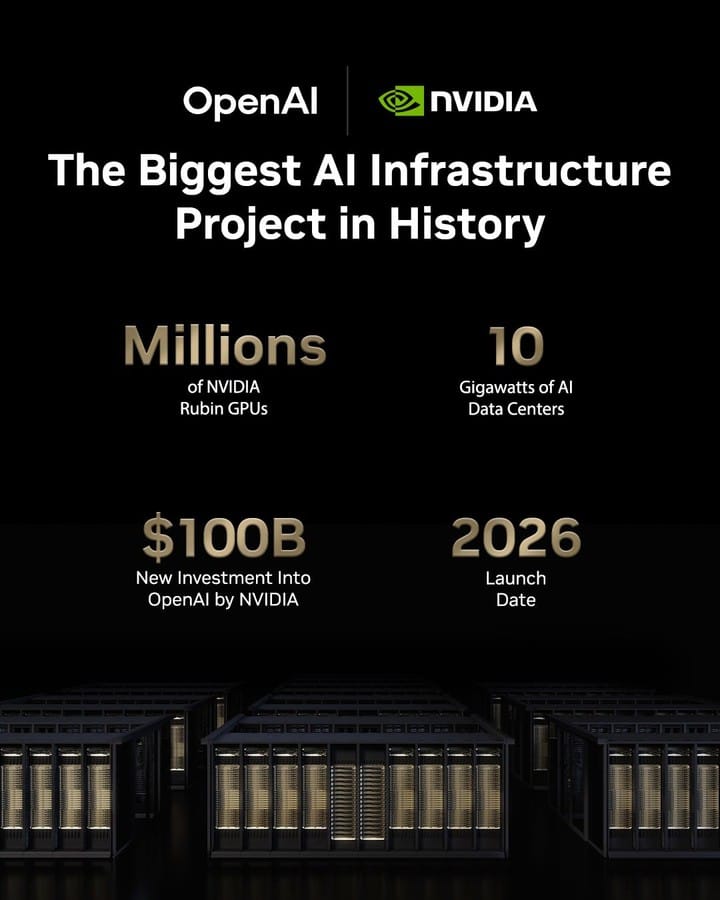

Compute Allocation Strategy

- Biggest challenge: Compute allocation across ~300 ongoing projects.

- GPUs prioritized by strategic importance, not just public benchmarks.

▲ NVIDIA and OpenAI’s million-GPU collaboration

Most GPU capacity is not used for marquee releases, but for experiments exploring next-gen AI paradigms.

Short-term benchmark wins aren’t the main goal — paradigm shifts are.

---

Benchmarks & Mathematical Intuition

Chen tests models with private math questions:

- Example: Create a random number generator modulo 42 using generators modulo smaller primes.
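The interview summary does not give Chen's intended answer, so the following is only a sketch of one standard approach. Since 42 = 2 × 3 × 7, the Chinese Remainder Theorem maps each triple of residues modulo 2, 3, and 7 to exactly one residue modulo 42. Assuming the three smaller generators are independent and uniform, the combined output is uniform as well. The names `crt_coefficients`, `crt_combine`, and `rand_mod_42` are hypothetical, and `random.randrange` stands in for the smaller generators.

```python
import random
from itertools import product

PRIMES = (2, 3, 7)   # 42 = 2 * 3 * 7
MODULUS = 42


def crt_coefficients(primes=PRIMES, modulus=MODULUS):
    """Return one coefficient per prime p that is 1 mod p and 0 mod the others."""
    coeffs = []
    for p in primes:
        m_p = modulus // p            # product of the other primes
        inv = pow(m_p, -1, p)         # modular inverse (Python 3.8+)
        coeffs.append(m_p * inv % modulus)
    return coeffs


COEFFS = crt_coefficients()           # [21, 28, 36]


def crt_combine(residues):
    """Map residues (r2, r3, r7) to the unique x in [0, 42) matching all three."""
    return sum(c * r for c, r in zip(COEFFS, residues)) % MODULUS


def rand_mod_42(rng=random):
    """Draw one value modulo 42 from independent draws modulo 2, 3, and 7."""
    return crt_combine([rng.randrange(p) for p in PRIMES])


# Exhaustive check: the 2 * 3 * 7 = 42 residue triples hit every value exactly once.
all_outputs = sorted(crt_combine(t) for t in product(*(range(p) for p in PRIMES)))
assert all_outputs == list(range(MODULUS))
```

Because the mapping is a bijection, no rejection sampling is needed; a modulus with a repeated prime factor would require a different construction.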

On DeepSeek:

- Both Gemini 3 and DeepSeek’s open-source models added pressure.

- OpenAI remains committed to its own roadmap.

---

Scaling Law Debate

Context

- Scaling laws: Model performance is expected to improve predictably as compute grows, so every 10× increase in compute should yield a significant leap.

- Critics claim GPT‑5 didn’t show the expected jump, implying scaling stagnation.
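As a rough illustration of the scaling claim, and not something taken from the interview, scaling laws are often written as a power law in training compute. Here $L$ is the model's loss, $C$ is compute, and $a$ and $\alpha$ are positive fitted constants; all four symbols are assumptions introduced only for this sketch.

```latex
L(C) = a\,C^{-\alpha},
\qquad
\frac{L(10C)}{L(C)} = 10^{-\alpha}
```

Under this form, every 10× increase in compute multiplies the loss by the same constant factor $10^{-\alpha}$. The "scaling is dead" argument amounts to saying that observed gains have fallen short of that predicted factor.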

Chen’s Position

- Disagrees completely with “Scaling is dead.”

- Attributes the regression in GPT‑5 pretraining to a shift in focus toward reasoning, not to a failure of scaling.

- Compute demand remains extremely high — even 10× more would be fully consumed in weeks.

---

Protecting Talent & Belief

- Meta’s high-cost recruitment drive targeted half of Chen’s team, yet none of them left OpenAI.

- Reason: Belief in OpenAI achieving AGI.

Retention lesson:

> Protect core talent, not everyone — focus on key roles critical to mission success.

Chen once slept in the office for a month to safeguard the research team.

▲ Researchers recruited by Meta for its superintelligence lab

---

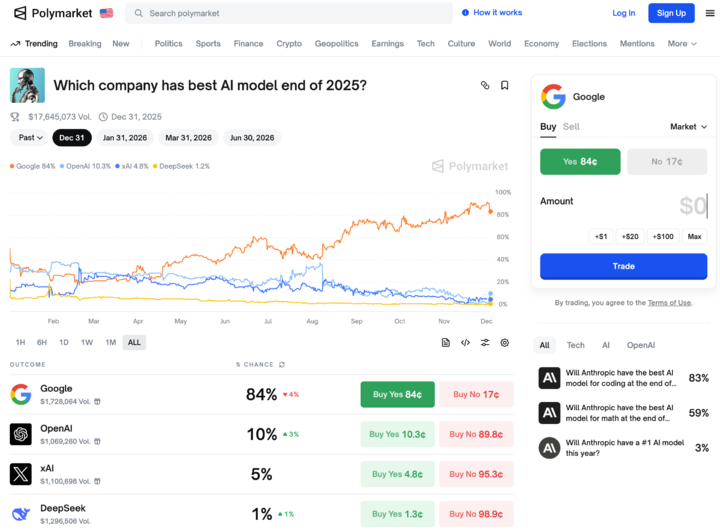

OpenAI’s AGI Milestones

- Within 1 year: Transform research, with AI acting as an efficient research assistant.

- Within 2.5 years: Achieve end-to-end research automation — humans supply ideas, AI executes the entire loop.

Goal: Workers shift from coding experiments to managing AI interns.

▲ Prediction market: Google currently most likely to top AI model rankings before end of 2025.

---

Creator Empowerment in the AI Era

Platforms like AiToEarn (official site) are bringing corporate-level AI capabilities to individuals:

- AI generation tools

- Cross-platform publishing

- Analytics

- AI model rankings

Supported channels: Douyin, Kwai, WeChat, Bilibili, Xiaohongshu (Rednote), Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X (Twitter).

Why it matters:

As AI battles intensify, tools enabling creators to generate, distribute, and monetize quickly will be essential.

---

Bottom Line:

The Silicon Valley AI war, from “chicken soup” recruitment games to million-GPU strategies, is far from over. For users, the fight means faster innovation. For creators, leveraging the right AI tools could be just as critical as the underlying models.