Stanford’s New Paper: Fine-Tuning is Dead, Long Live Autonomous In-Context Learning

Farewell to Traditional Fine-Tuning: Introducing ACE

A new study from Stanford University, SambaNova Systems, and the University of California, Berkeley demonstrates a way to improve large language model (LLM) performance without adjusting a single model weight.

The method, called Agentic Context Engineering (ACE), relies on context engineering rather than retraining. It evolves its own contextual prompts autonomously through repeated cycles of generation, reflection, and curation, forming a self-improving system.

---

What is ACE?

ACE optimizes both:

- Offline context (e.g., system prompts)

- Online context (e.g., agent memory)

In the paper's experiments, this lets ACE outperform strong baselines on both multi-step agent tasks and domain-specific financial benchmarks.

---

The Problem with Traditional Context Adaptation

Many AI tools today improve performance through “context adaptation”: adding instructions, strategies, or examples to a model's inputs rather than retraining the model itself. While effective, existing context adaptation methods suffer from two major flaws:

1. Brevity Bias

Prompt optimizers tend to favor short, generic instructions, so critical domain-specific details get omitted.

> Example: “Process financial data” without specifying “verify values in XBRL format” can cause serious errors.

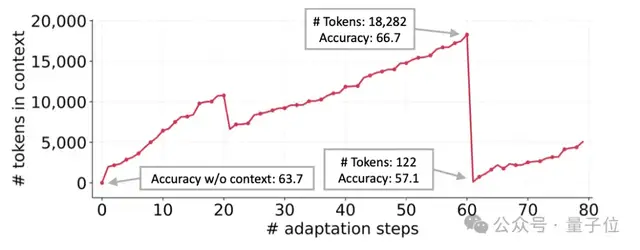

2. Context Collapse

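When an LLM is asked to rewrite the entire accumulated context at each step, it tends to compress it into a much shorter summary, and previously accumulated knowledge is lost.

> Example: In one reported run, a detailed 18,000-token context (66.7% accuracy) was compressed to 122 tokens in a single rewrite, and accuracy dropped to 57.1%.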
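A toy model makes the difference concrete. Assume a hypothetical `monolithic_rewrite` that, like an LLM pushed toward brevity, keeps only a fixed budget of items every time it rewrites the whole context, and compare it with a hypothetical `delta_update` that only appends new lessons. Both functions and the budget are illustrative assumptions, not code from the paper.

```python
from typing import List


def monolithic_rewrite(context: List[str], new_lesson: str, budget: int) -> List[str]:
    """Hypothetical stand-in for an LLM asked to rewrite the whole context briefly.

    It keeps only the `budget` most recent items, so older lessons silently disappear.
    """
    merged = context + [new_lesson]
    return merged[-budget:]


def delta_update(context: List[str], new_lesson: str) -> List[str]:
    """Incremental update: append the new lesson and leave existing entries untouched."""
    if new_lesson in context:
        return context  # skip exact duplicates
    return context + [new_lesson]


lessons = [f"lesson {i}" for i in range(1, 7)]

rewritten: List[str] = []
incremental: List[str] = []
for lesson in lessons:
    rewritten = monolithic_rewrite(rewritten, lesson, budget=2)
    incremental = delta_update(incremental, lesson)

print(rewritten)         # ['lesson 5', 'lesson 6']
print(len(incremental))  # 6
```

After six rounds the rewritten context remembers only the last two lessons, while the incrementally updated one still holds all six.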

---

ACE’s Dynamic Context Evolution

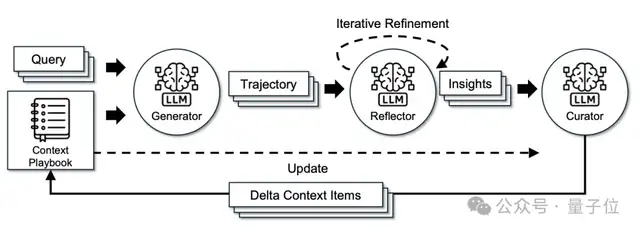

ACE treats context not as a static compressed document, but as an operational manual that evolves over time. It accumulates, refines, and organizes strategies using three specialized roles:

- Generator – Produces reasoning trajectories.

- Reflector – Extracts and refines insights from successes and failures.

- Curator – Integrates these insights into structured, incremental context updates.
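One way to picture the context these roles maintain is as a list of itemized entries rather than a single block of prose. The sketch below assumes each entry carries an ID plus helpful/harmful counters that feedback can update independently; the `Bullet` and `Playbook` classes and their field names are hypothetical, chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Bullet:
    """One itemized strategy in the evolving context (hypothetical schema)."""
    bullet_id: str
    text: str
    helpful: int = 0
    harmful: int = 0


@dataclass
class Playbook:
    """Context stored as separate entries so each one can be updated on its own."""
    bullets: Dict[str, Bullet] = field(default_factory=dict)
    next_id: int = 1

    def add(self, text: str) -> str:
        # Assign a fresh ID so later feedback can target this exact entry.
        bullet_id = f"b{self.next_id}"
        self.next_id += 1
        self.bullets[bullet_id] = Bullet(bullet_id, text)
        return bullet_id

    def record_feedback(self, bullet_id: str, was_helpful: bool) -> None:
        # Feedback only touches the counters of one entry; nothing else is rewritten.
        bullet = self.bullets[bullet_id]
        if was_helpful:
            bullet.helpful += 1
        else:
            bullet.harmful += 1

    def render(self) -> str:
        # Serialize the entries into the text that would be placed in the prompt.
        return "\n".join(
            f"[{b.bullet_id}] (+{b.helpful}/-{b.harmful}) {b.text}"
            for b in self.bullets.values()
        )


pb = Playbook()
first = pb.add("Verify that financial values are in XBRL format before computing.")
pb.record_feedback(first, was_helpful=True)
print(pb.render())
# [b1] (+1/-0) Verify that financial values are in XBRL format before computing.
```

Keeping entries separate is what allows a single strategy to be reinforced or flagged without regenerating the rest of the context.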

How the Cycle Works:

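- Generate – Produce reasoning trajectories on new queries, surfacing effective strategies and recurring pitfalls.

- Reflect – Critique those trajectories to distill concrete lessons, optionally refining them over several iterations.

- Curate – Turn the lessons into compact, well-structured delta entries and merge them into the existing context using lightweight, deterministic (non-LLM) logic.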

Because these updates are localized, multiple increments can be merged in parallel, enabling batch adaptation at scale.
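The cycle can be sketched as follows, under stated assumptions: the three LLM roles are replaced by deterministic stubs so the example runs as-is, the context is a dict of entries keyed by ID, and the names `generate`, `reflect`, `curate`, `merge`, and `adapt_batch` are all hypothetical. What it illustrates is the structure described above: the Curator emits small delta operations, and a plain (non-LLM) merge applies them, so deltas from a batch of queries can be computed independently and merged at the end.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List

Context = Dict[str, str]  # entry ID -> strategy text


@dataclass(frozen=True)
class Delta:
    """One 'add entry' operation proposed by the Curator (hypothetical format)."""
    entry_id: str
    text: str


def generate(query: str, context: Context) -> str:
    # Stub Generator: a real system would call an LLM with the context in its prompt.
    return f"trajectory for {query} using {len(context)} entries"


def reflect(query: str, trajectory: str) -> List[str]:
    # Stub Reflector: a real system would have an LLM critique `trajectory`.
    return [f"lesson learned from {query}"]


def curate(lessons: List[str], context: Context) -> List[Delta]:
    # Stub Curator: wrap new lessons as deltas. IDs are content hashes, so the
    # same lesson found by two parallel workers maps to the same entry.
    known = set(context.values())
    return [
        Delta(hashlib.sha256(lesson.encode("utf-8")).hexdigest()[:8], lesson)
        for lesson in lessons
        if lesson not in known
    ]


def merge(context: Context, deltas: List[Delta]) -> Context:
    # Deterministic, non-LLM merge: add entries, never rewrite existing ones.
    merged = dict(context)
    for delta in deltas:
        merged.setdefault(delta.entry_id, delta.text)
    return merged


def adapt_batch(context: Context, queries: List[str]) -> Context:
    # Every query reads the same snapshot of `context`, so these iterations are
    # independent and could run in parallel; only the final merge is sequential.
    deltas: List[Delta] = []
    for query in queries:
        trajectory = generate(query, context)
        lessons = reflect(query, trajectory)
        deltas.extend(curate(lessons, context))
    return merge(context, deltas)


ctx: Context = {}
ctx = adapt_batch(ctx, ["q1", "q2"])
ctx = adapt_batch(ctx, ["q2", "q3"])  # q2's lesson already exists and is skipped
print(len(ctx))  # 3
```

Because `merge` only adds entries keyed by content hash, applying the same deltas twice yields the same context, which is what makes batched, parallel updates safe in this sketch.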

---

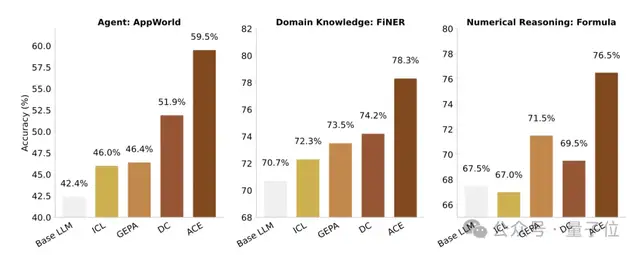

Experimental Results

ACE outperforms:

- Base LLM (no adaptation)

- ICL (few-shot demos)

- GEPA (prompt optimization)

- Dynamic Cheatsheet (adaptive memory prompts)

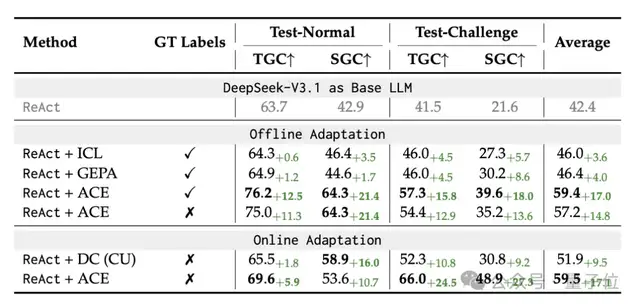

In Agent Tasks

Using AppWorld, a suite of agent tasks covering API comprehension, code generation, and environment interaction:

- In the offline setting, ReAct+ACE outperforms ReAct+ICL by 12.3%

- In the offline setting, ReAct+ACE outperforms ReAct+GEPA by 11.9%

In the online setting, ACE outperforms prior adaptive methods such as Dynamic Cheatsheet by 7.6% on average.

---

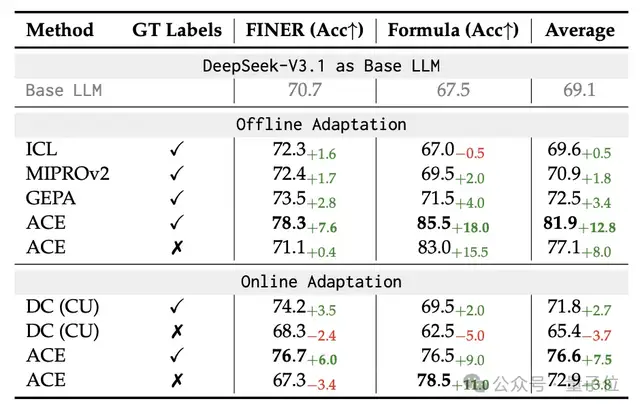

In Financial Analysis Tasks

Benchmarks:

- FiNER – Labels tokens in XBRL financial documents with one of 139 fine-grained entity types.

- Formula – Extracts values from XBRL financial reports and performs computations to answer financial queries.

Offline Setting:

When given ground-truth answers from the training split, ACE outperformed ICL, MIPROv2, and GEPA by 10.9% on average.

---

Efficiency Gains

ACE substantially reduces:

- Adaptation latency

- Number of rollouts

- Token input and generation costs

Examples:

- In AppWorld offline tasks (vs. GEPA):

  - Latency ↓ 82.3%

  - Rollouts ↓ 75.1%

- In FiNER online tasks (vs. Dynamic Cheatsheet):

  - Latency ↓ 91.5%

  - Token cost ↓ 83.6%
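A reduction of r percent means ACE needs only (1 - r) of the baseline's time or cost, and the equivalent "N times faster or cheaper" factor is 1 / (1 - r). The helper below (a hypothetical name; it is plain arithmetic on the figures reported above, not code from the paper) performs that conversion.

```python
def speedup_from_reduction(reduction: float) -> float:
    """Convert a fractional reduction (e.g. 0.823 for 82.3%) into an 'N times' factor."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


# Reported reductions, copied from the list above.
for label, reduction in [
    ("AppWorld offline latency vs. GEPA", 0.823),
    ("AppWorld offline rollouts vs. GEPA", 0.751),
    ("FiNER online latency vs. Dynamic Cheatsheet", 0.915),
    ("FiNER online token cost vs. Dynamic Cheatsheet", 0.836),
]:
    print(f"{label}: {speedup_from_reduction(reduction):.1f}x")
```

Read this way, the reported reductions correspond to roughly 5.6x lower latency and 4.0x fewer rollouts than GEPA on AppWorld, and 11.8x lower latency and 6.1x lower token cost than Dynamic Cheatsheet on FiNER.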

---

The Researchers Behind ACE

Qizheng Zhang

- Ph.D. student, Stanford University (Computer Science)

- Bachelor’s in Math, CS, and Statistics — University of Chicago

- Research with Junchen Jiang and Ravi Netravali on network system design

- Internships at Argonne National Lab (MCS Division) and Microsoft Research

Changran Hu

- Bachelor’s — Tsinghua University

- Master’s — UC Berkeley

- Co-founded AI music generation company DeepMusic at age 20

- Raised USD 10 million, collaborated with top Chinese pop singers

- Former Applied Scientist Intern at Microsoft, later Technical Lead at SambaNova Systems

---

Broader Implications

The findings suggest context evolution may replace fine-tuning in many use cases, especially in fast-changing environments where prompts need constant adaptation.


---

Key Takeaway:

Instead of repeatedly fine-tuning model weights, ACE lets a system refine itself by evolving its context; in the paper's benchmarks, this delivered higher accuracy, faster adaptation, and lower cost.
