Student Faces 6 Rejections in 3 Years, So Andrew Ng Built a Reviewer Agent Himself

Doing Research Isn't Easy

The Struggle of Academic Publishing

Imagine being rejected six times in three years, with each round of feedback taking half a year.

When machine learning pioneer Andrew Ng learned about a student's streak of bad luck, he personally created a free AI-powered academic paper review agent to speed up the process.

---

AI Review Performance

Trained on ICLR 2025 review data and tested against a benchmark set, the AI review system achieved a correlation coefficient of 0.42 with human reviews — slightly higher than the 0.41 correlation observed between human reviewers themselves.

> Meaning: The consistency between AI reviews and human reviews is now roughly on par with human-to-human review consistency.

As one commenter put it: "Better to get rejected in minutes than wait six months!"

Advantage: Early rejection enables faster revision and resubmission.

---

Problem With Traditional Peer Review

Peer review often takes months per feedback round. Comments typically focus on acceptance/rejection rather than actionable suggestions for improvement.

Andrew Ng’s AI paper review agent directly addresses this gap.

---

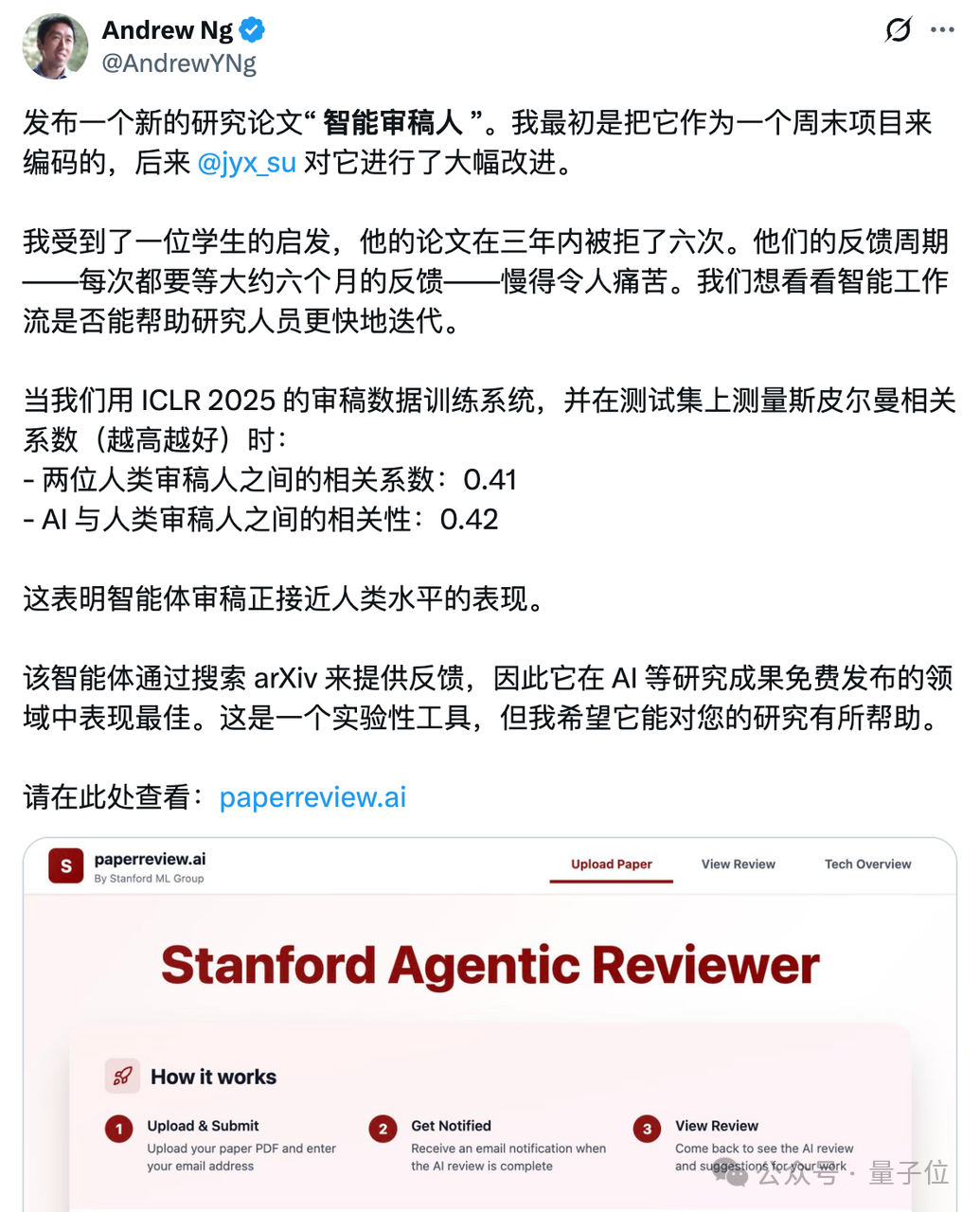

How the AI "Peer Review" Process Works

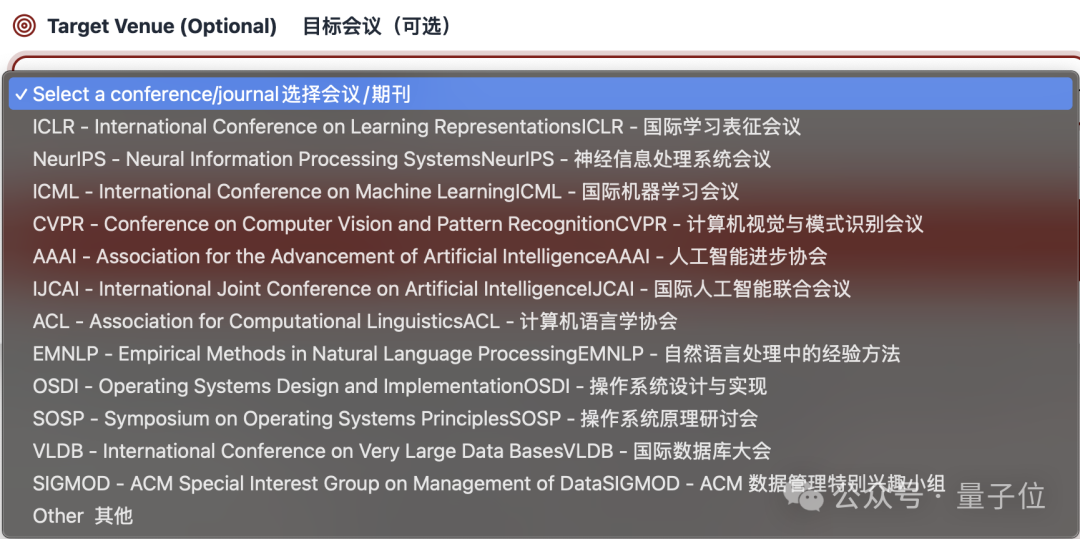

- Target Venue Selection
  - When submitting, select your target journal or conference.
  - The AI adapts its assessment style to match that venue’s review standards.

- Document Processing
  - Converts your PDF to Markdown.
  - Verifies it is an academic paper.
  - Automatically extracts keywords such as:
    - Experimental standards used
    - Topic similarities with existing work

- Research Matching
  - Searches latest related work on arXiv.
  - Selects most relevant papers and generates summaries.

- Review Generation
  - Uses your submission and related summaries to produce a full review following a structured template.
  - Includes specific, actionable revision suggestions.

- Scoring & Ranking
  - Trained to mimic ICLR 2025 reviews.
  - Scores across seven dimensions:
    - Originality
    - Importance of the research question
    - Strength of evidence
    - ...and more
  - Outputs a final score (1–10).
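The stages above can be sketched as a small pipeline. This is only an illustration under stated assumptions, not the agent's actual code: every function name is hypothetical, the arXiv search is replaced by keyword overlap against a local list of abstracts, the per-dimension scores are passed in by hand where the real agent would generate them, and averaging them into the final score is an assumption, since the article does not say how the dimensions are combined.

```python
from dataclasses import dataclass


@dataclass
class Review:
    venue: str
    related_work: list
    suggestions: list
    dimension_scores: dict
    final_score: float


def convert_pdf_to_markdown(pdf_text):
    # Placeholder: a real system would run a PDF parser here.
    return pdf_text.strip()


def looks_like_paper(markdown):
    # Crude stand-in for the "is this an academic paper?" check.
    lowered = markdown.lower()
    return "abstract" in lowered and "references" in lowered


def extract_keywords(markdown, vocabulary):
    # Placeholder extraction: keep the known terms that appear in the text.
    lowered = markdown.lower()
    return [term for term in vocabulary if term in lowered]


def find_related(keywords, corpus, top_k=2):
    # Stand-in for the arXiv search: rank local abstracts by keyword overlap.
    def overlap(abstract):
        return sum(1 for k in keywords if k in abstract.lower())

    ranked = sorted(corpus, key=overlap, reverse=True)
    return [a for a in ranked[:top_k] if overlap(a) > 0]


def summarize(abstract, max_words=12):
    # Placeholder summary: the first few words of the abstract.
    return " ".join(abstract.split()[:max_words])


def combine_scores(dimension_scores):
    # ASSUMPTION: plain average, clamped to the 1-10 range.
    mean = sum(dimension_scores.values()) / len(dimension_scores)
    return max(1.0, min(10.0, mean))


def review_paper(pdf_text, venue, vocabulary, corpus, dimension_scores):
    markdown = convert_pdf_to_markdown(pdf_text)
    if not looks_like_paper(markdown):
        raise ValueError("input does not look like an academic paper")
    keywords = extract_keywords(markdown, vocabulary)
    related = [summarize(a) for a in find_related(keywords, corpus)]
    # The venue is only recorded here; the real agent adapts its style to it.
    suggestions = [f"Compare against: {s}" for s in related]
    return Review(venue, related, suggestions, dimension_scores,
                  combine_scores(dimension_scores))


# Made-up inputs for illustration only.
paper = "Abstract\nWe study contrastive learning for retrieval.\nReferences\n[1] ..."
corpus = [
    "Contrastive learning improves dense retrieval benchmarks.",
    "A survey of protein folding methods.",
]
scores = {"originality": 6, "importance": 7, "evidence": 5}
review = review_paper(paper, "ICLR", ["contrastive learning", "retrieval"],
                      corpus, scores)
print(review.final_score, review.suggestions)
```

Keeping each stage as its own function means any stub can later be swapped for a real PDF parser, search client, or language model call without touching the rest.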

---

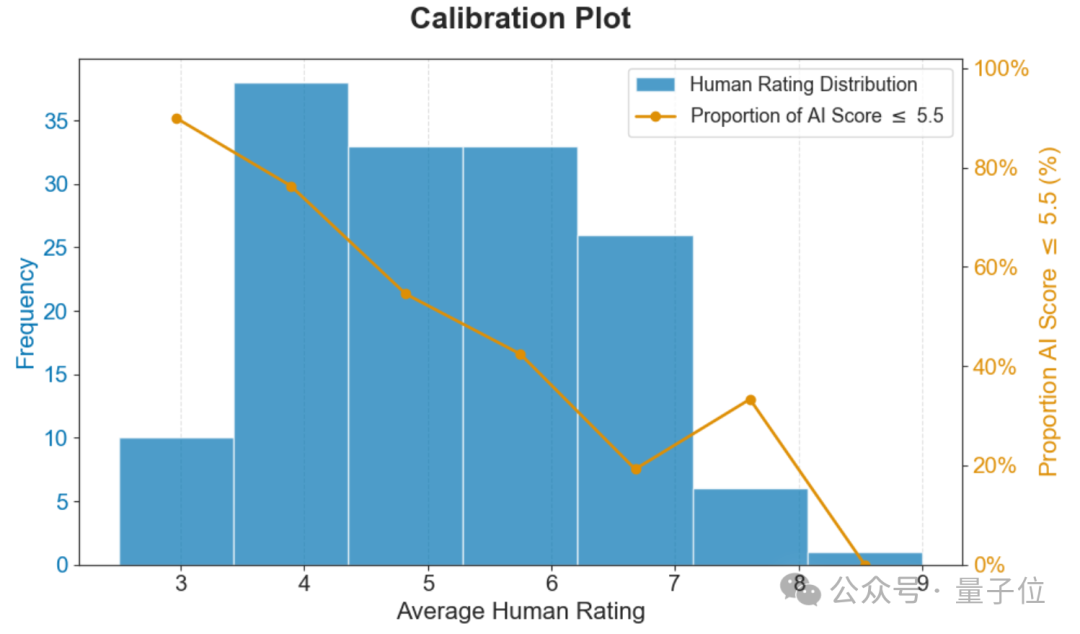

AI vs. Human Review Accuracy

- Correlation with human reviewers:
  - AI: 0.42
  - Human-to-human: 0.41

- Acceptance outcome prediction accuracy:
  - Humans: 0.84
  - AI: 0.75
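To make the correlation figures more concrete, here is a minimal sketch of how agreement between two sets of review scores can be measured. It uses the Pearson coefficient as an assumption, since the article does not say which correlation was reported, and the scores below are invented for illustration, not taken from the benchmark.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for the same five papers (NOT data from the article).
human_a = [3, 5, 6, 8, 4]
human_b = [4, 4, 7, 6, 5]
ai = [4, 5, 5, 7, 6]

human_vs_human = pearson(human_a, human_b)
ai_vs_human = pearson(ai, human_a)
print(f"human vs. human: {human_vs_human:.2f}")
print(f"AI vs. human:    {ai_vs_human:.2f}")
```

A value near 1 means the two reviewers rank papers almost identically, and a value near 0 means their scores are unrelated. Two similar coefficients, as reported above, suggest the AI disagrees with a human about as often as two humans disagree with each other.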

Calibration chart notes:

- Blue bars: Human score distribution (mostly between 4–7).

- Orange line: Proportion of AI scores ≤ 5.5 per human score range.

- Observation: As human scores rise, AI scores are less likely to be low — showing the AI follows human scoring trends.
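The quantity behind the orange line can be computed along these lines. This is a sketch under assumptions: the function name is hypothetical, human scores are grouped into integer-wide ranges (the article does not give the actual bin edges), and the score pairs are invented for illustration.

```python
from collections import defaultdict


def low_ai_share_by_human_bin(pairs, threshold=5.5):
    """Map each human-score bin to the share of AI scores <= threshold."""
    counts = defaultdict(lambda: [0, 0])  # bin -> [low_count, total]
    for human, ai in pairs:
        bin_key = int(human)  # ASSUMPTION: integer-wide bins, e.g. 4.0-4.9 -> 4
        counts[bin_key][1] += 1
        if ai <= threshold:
            counts[bin_key][0] += 1
    return {b: low / total for b, (low, total) in sorted(counts.items())}


# Hypothetical (human score, AI score) pairs, NOT data from the article.
pairs = [
    (4.0, 4.5), (4.5, 5.0),
    (5.0, 5.5), (5.5, 6.0),
    (6.0, 5.0), (6.5, 6.5),
    (7.0, 7.0), (7.5, 6.0),
]
shares = low_ai_share_by_human_bin(pairs)
for human_bin, share in shares.items():
    print(f"human score {human_bin}: {share:.0%} of AI scores <= 5.5")
```

With these made-up pairs the share falls as the human score rises, which is the pattern the chart describes.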

---

Limitations

- The agent draws its related work mainly from arXiv, which may skew reviews, particularly for research that is not well represented there.

- Review processing is fast but not instant.

> Example: We tried uploading a paper — status: please wait…

> Good news: It didn’t reject within minutes 🐶.

---

Who Built It?

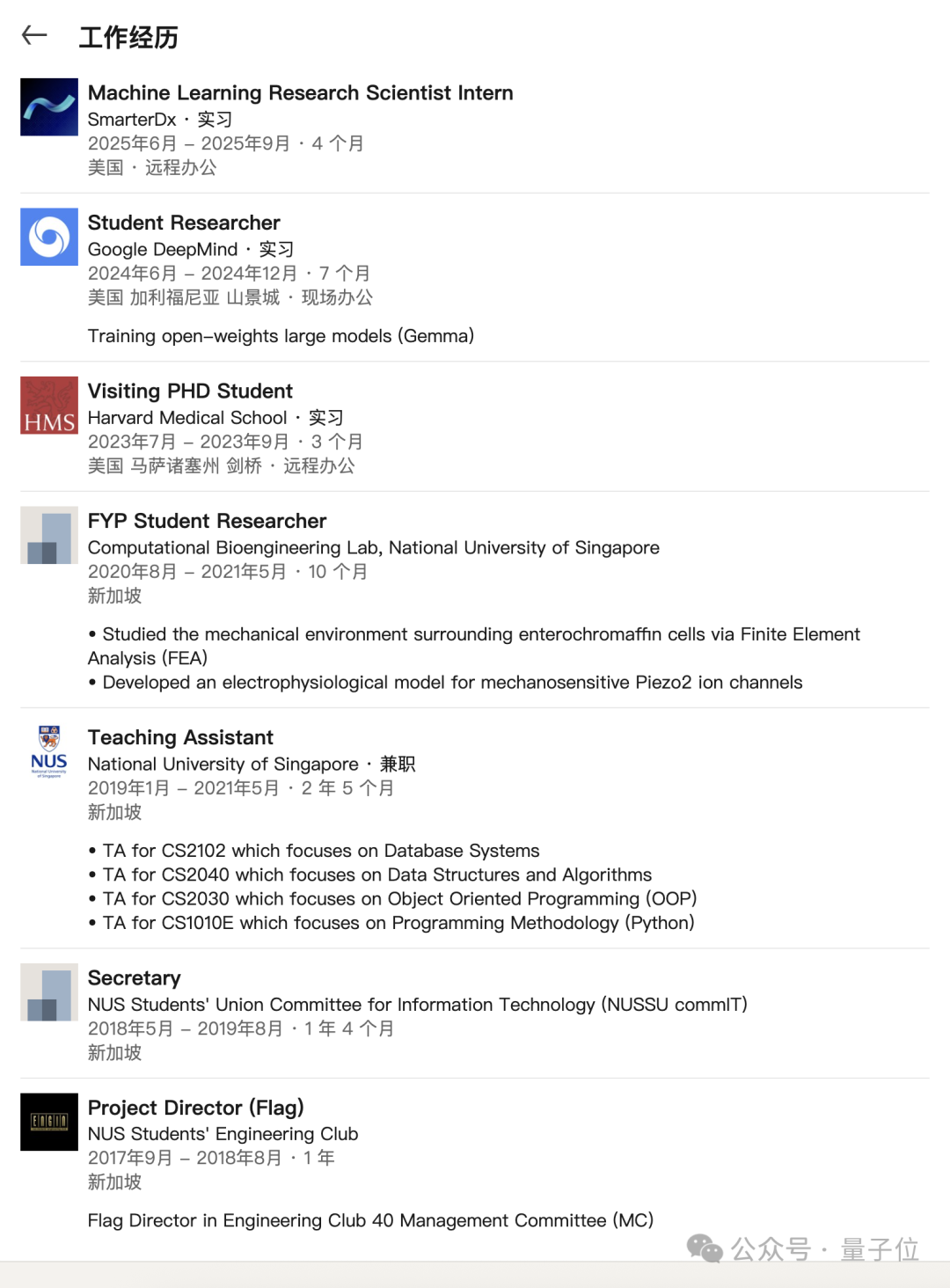

- Developed further by Stanford PhD student Yixing Jiang.

- Jiang also interned at Google DeepMind for seven months.

---

Try It Yourself

If you’re a researcher in need, it’s worth testing this free AI review system — maybe your next submission gets accepted!

🔗 Experience it here: https://paperreview.ai/

---

Bigger Picture: AI in Academic Publishing

Tools like this demonstrate how AI can streamline scholarly workflows:

- Research review

- Paper writing assistance

- Cross-platform dissemination

- Result tracking and analysis

For researchers looking to integrate AI into multi-platform content publishing (Douyin, Bilibili, YouTube, X/Twitter), check out the AiToEarn official website:

- Connects AI content generation with publishing, analytics, and ranking

- Helps researchers and creators monetize AI-driven creativity worldwide

---

References

[1] https://x.com/AndrewYNg/status/1993001922773893273?s=20
