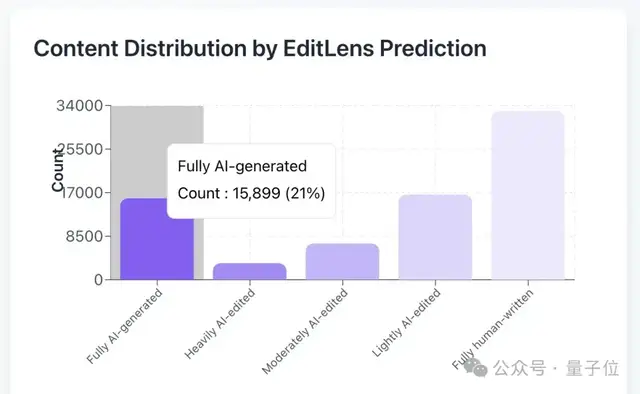

Surprisingly, 21% of ICLR 2026 Reviews Were Fully AI-Generated

An analysis from Pangram Lab finds that roughly one in five peer reviews submitted to ICLR 2026 was entirely AI-generated, raising serious questions about authenticity in academic evaluation.

---

The Discovery

Graham Neubig, an AI researcher at CMU, suspected that some of the reviews he received had an “AI-flavored” style:

- Overly lengthy

- Filled with symbols

- Requests for analytical methods that are uncommon in AI/ML research

Unable to verify this on his own, Neubig posted a $50 bounty for a systematic analysis:

> “I’ll offer $50 to the first person who does this.”

---

Pangram Lab Steps In

Pangram Lab specializes in detecting AI-generated text. Their findings were striking:

- 75,800 reviews analyzed

- 15,899 reviews (21%) highly likely to be fully AI-generated

- Numerous papers also showed predominantly AI-written content

---

Data Collection and Preprocessing

Pangram collected the full set of ICLR 2026 submissions and reviews from OpenReview:

- 19,490 paper submissions

- 75,800 reviews

Handling PDF Challenges

PDFs with formulas, charts, and tables can disrupt text parsing. Standard parsers like PyMuPDF performed poorly. Pangram’s solution:

- Convert PDFs using Mistral OCR to Markdown

- Convert Markdown to plain text

- Minimize formatting noise for cleaner analysis
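The second step above (Markdown to plain text) can be sketched as follows. This is a minimal illustration, not Pangram's actual code: the function name `markdown_to_plain_text` and the specific regex rules are assumptions, and the OCR step is left out because it depends on an external service.

```python
import re


def markdown_to_plain_text(markdown: str) -> str:
    """Strip common Markdown syntax, keeping only the readable text.

    Hypothetical helper for illustration; a real pipeline would likely
    use a proper Markdown parser instead of regular expressions.
    """
    text = markdown
    # Drop fenced code blocks entirely (three backticks ... three backticks).
    text = re.sub(r"`{3}.*?`{3}", "", text, flags=re.DOTALL)
    # Drop images: ![alt](url)
    text = re.sub(r"!\[[^\]]*\]\([^)]*\)", "", text)
    # Keep link text, drop the URL: [text](url) -> text
    text = re.sub(r"\[([^\]]+)\]\([^)]*\)", r"\1", text)
    # Remove heading markers at the start of a line.
    text = re.sub(r"^[ \t]{0,3}#{1,6}[ \t]*", "", text, flags=re.MULTILINE)
    # Remove bullet markers at the start of a line.
    text = re.sub(r"^[ \t]*[-*+][ \t]+", "", text, flags=re.MULTILINE)
    # Remove emphasis and inline-code markers.
    text = re.sub(r"(\*\*|__|\*|`)", "", text)
    # Collapse runs of three or more newlines into one blank line.
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()
```

Regexes like these will miss edge cases such as tables and nested lists, so they are only a stand-in for whatever conversion Pangram used.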

---

Detection Models

Paper Body Analysis

- The pipeline splits each paper into paragraphs or semantic segments

- It classifies each segment as human-written or AI-written

- It aggregates the segment results into paper-level categories: human-dominant, mixed-authoring, almost entirely AI-generated, extreme outlier
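The split, classify, aggregate flow described above might look roughly like the sketch below. Everything in it is an assumption made for illustration: the blank-line segmentation, the `is_ai` callback standing in for the real classifier, and the 0.25 / 0.75 thresholds are hypothetical and do not come from Pangram. The "extreme outlier" label is omitted because the text does not define it.

```python
from typing import Callable, List


def split_into_segments(text: str) -> List[str]:
    """Split on blank lines; a simple stand-in for real segmentation."""
    return [part.strip() for part in text.split("\n\n") if part.strip()]


def ai_fraction(text: str, is_ai: Callable[[str], bool]) -> float:
    """Return the share of segments that the classifier flags as AI-written."""
    segments = split_into_segments(text)
    if not segments:
        return 0.0
    flagged = sum(1 for segment in segments if is_ai(segment))
    return flagged / len(segments)


def categorize(fraction: float) -> str:
    """Map an AI fraction to a label. Thresholds are hypothetical."""
    if fraction < 0.25:
        return "human-dominant"
    if fraction < 0.75:
        return "mixed-authoring"
    return "almost entirely AI-generated"


def toy_classifier(segment: str) -> bool:
    """Toy rule for demonstration only; not a real AI-text detector."""
    return "delve" in segment.lower()
```

Calling `categorize(ai_fraction(paper_text, toy_classifier))` gives one label per paper. In a real system the callback would wrap a trained detection model, and the cut-offs would be calibrated on labeled data.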

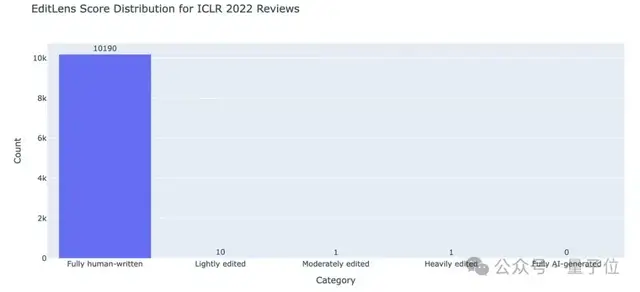

Model Validation: When tested on ICLR and NeurIPS papers from before 2022, the model reported a 0% AI likelihood.

Review Analysis via EditLens

Levels of AI involvement:

- Fully human-written

- AI-polished

- Moderate AI editing

- Heavy AI involvement

- Fully AI-generated

Accuracy:

- False positive rates as low as 1/100,000 for heavy AI involvement.
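To put that rate in perspective, here is a rough back-of-the-envelope calculation. It assumes, purely for illustration, that the 1/100,000 rate applies uniformly and that all 75,800 reviews were human-written, which is the worst case for false positives. The variable names are made up for this sketch.

```python
# Figures taken from the article.
false_positive_rate = 1 / 100_000
total_reviews = 75_800
flagged_reviews = 15_899

# Assumption: every review is human-written, so each one could be a false positive.
expected_false_positives = false_positive_rate * total_reviews

# Share of the flagged reviews that false positives could explain under that assumption.
share_of_flagged = expected_false_positives / flagged_reviews

print(f"Expected false positives: {expected_false_positives:.3f}")
print(f"Share of flagged reviews: {share_of_flagged:.6%}")
```

Under these assumptions the expected number of false positives is below one review, so misclassification at that rate could not account for the flagged total. The conclusion depends on the quoted rate holding on this particular dataset.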

---

Key Findings

- 15,899 reviews fully AI-generated (21%)

- Over half of reviews involved some AI assistance

- 61% of papers human-written

- 199 papers (1%) fully AI-generated
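The rounded percentages above can be checked against the raw counts reported earlier in the article (75,800 reviews and 19,490 submissions). The helper name `percent` is just for this sketch.

```python
def percent(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


# Counts as reported in the article.
review_share = percent(15_899, 75_800)  # fully AI-generated reviews
paper_share = percent(199, 19_490)      # fully AI-generated papers

print(f"Fully AI-generated reviews: {review_share:.2f}%")
print(f"Fully AI-generated papers: {paper_share:.2f}%")
```

Both values round to the figures quoted in the list, 21% and 1%.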

---

Conference Policy and Ethics

ICLR’s policies require:

- Disclosure of AI use in papers/reviews

- Responsibility remains with human authors/reviewers

- Integrity — no fabrication, falsification, or misleading statements

For reviewers: AI polishing is allowed, but fully AI-generated reviews may violate ethics due to lack of genuine opinion and possible confidentiality breach.

---

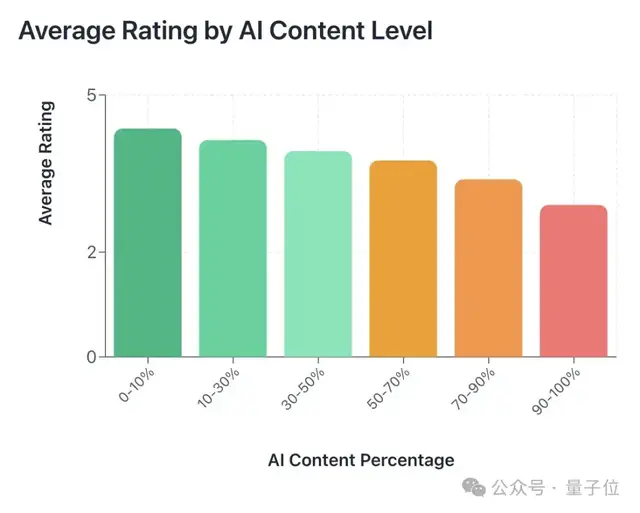

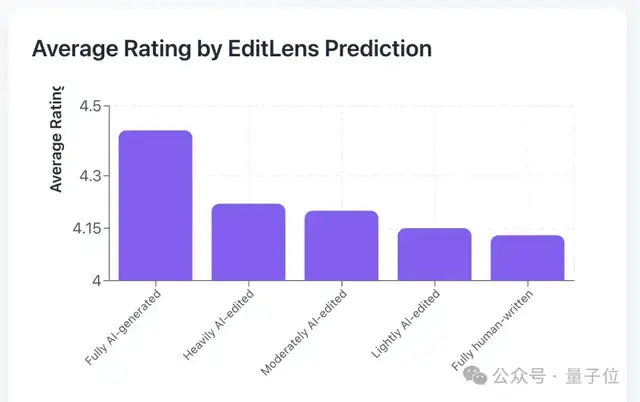

Correlations Between AI Usage and Review Outcomes

- More AI in papers → lower review scores, suggesting AI writing still lags behind original human quality

- More AI in reviews → higher scores given, suggesting AI-assisted reviews tend to be more lenient and friendly

---

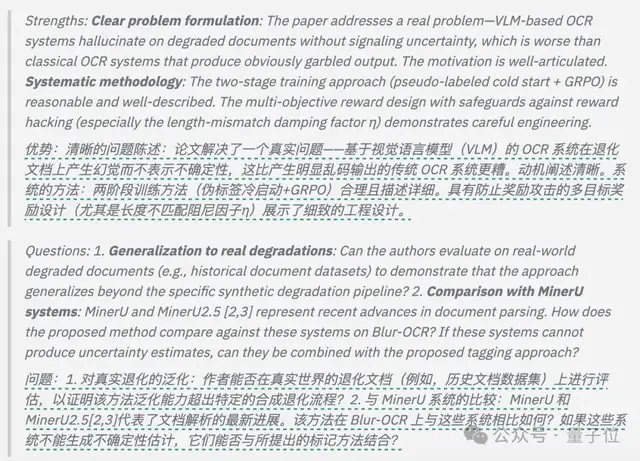

Common Traits of AI-Generated Reviews

- Bold section headings made of 2–3 descriptive words followed by a colon

- Superficial nitpicking

- Requests for work the paper has already done

- Filler text with low information density, producing long but unhelpful reviews

---

The Bigger Question

University of Chicago economist Alex Imas asks:

> Do we want human judgment in peer review?

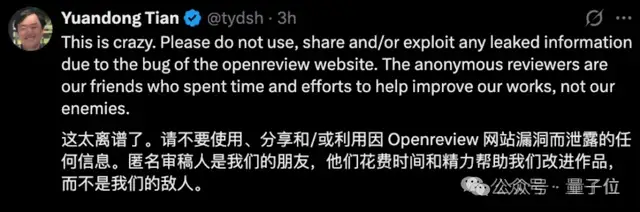

With broken double-blind systems and a wave of AI-generated reviews, trust in the process is at stake.

---

Closing Thoughts

The most urgent challenge: Preserve double-blind review integrity in top-tier conferences.

This is a shared responsibility for the entire academic community.

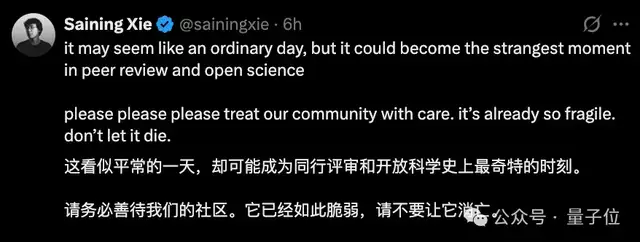

As Xie Saining said:

"Please be kind to our community. It is already so fragile; do not let it perish."

---

References

- https://www.pangram.com/blog/pangram-predicts-21-of-iclr-reviews-are-ai-generated

- https://www.nature.com/articles/d41586-025-03506-6
