SWE-1.5: Cognition AI Launches New Fast Agent Model

Introducing SWE‑1.5: A New Fast Agent Model

Read the full announcement.

---

Overview

On the same day that Cursor released Composer‑1, Windsurf (now part of Cognition) announced SWE‑1.5, its latest frontier-size coding model, which it says offers:

- Hundreds of billions of parameters

- Near state-of-the-art (SOTA) coding performance

- Extreme speed: up to 950 tokens/second, which is 6× faster than Haiku 4.5 and 13× faster than Sonnet 4.5

> Inference is served on Cerebras hardware, a deliberate choice to achieve high-speed serving.
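To put the speed figures above in perspective, here is a rough back-of-the-envelope sketch. It assumes "N× faster" means N times the output throughput, so the baseline speeds it derives are only what the quoted multipliers imply, not measured numbers. The function names and the 2,000-token response size are illustrative choices, not anything from the announcement.

```python
# Back-of-the-envelope check of the quoted speed claims.
# Assumption: "N x faster" means N times the tokens/second throughput.
SWE_15_TOKENS_PER_SEC = 950  # peak figure quoted in the announcement

# Multipliers quoted in the announcement.
SPEEDUP_OVER = {
    "Haiku 4.5": 6,
    "Sonnet 4.5": 13,
}


def implied_baseline(peak_tps: float, speedup: float) -> float:
    """Throughput a model would need for peak_tps to be `speedup` times faster."""
    return peak_tps / speedup


def seconds_for(tokens: int, tps: float) -> float:
    """Time to stream `tokens` output tokens at a steady rate of `tps`."""
    return tokens / tps


if __name__ == "__main__":
    response_tokens = 2000  # hypothetical response length
    fast = seconds_for(response_tokens, SWE_15_TOKENS_PER_SEC)
    print(f"SWE-1.5: {fast:.1f} s for {response_tokens} tokens")
    for model, factor in SPEEDUP_OVER.items():
        tps = implied_baseline(SWE_15_TOKENS_PER_SEC, factor)
        slow = seconds_for(response_tokens, tps)
        print(f"{model}: ~{tps:.0f} tokens/second implied, {slow:.1f} s")
```

Because 950 tokens/second is an "up to" figure, the real gap on any given request may be smaller than this sketch suggests.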

Like Composer‑1, SWE‑1.5:

- Is currently accessible only via Windsurf’s editor

- Has no public API yet

- Is built on a “leading open-source base model” (details undisclosed)

---

First Impressions

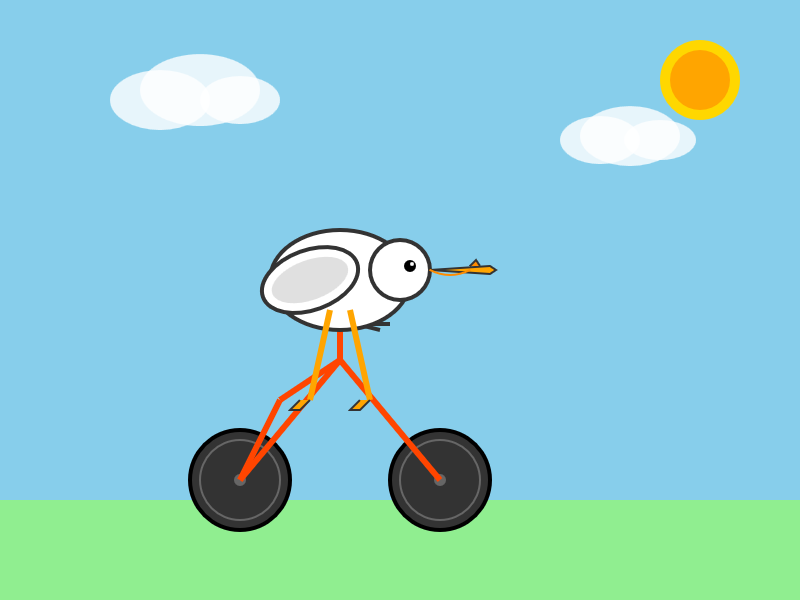

I tested it by asking for an SVG of a pelican riding a bicycle. The output arrived impressively fast, and the responsiveness felt genuinely faster than most coding models I’ve tried.

---

Training Insights

From the official blog post:

> SWE‑1.5 is trained on our state‑of‑the‑art cluster of thousands of GB200 NVL72 chips.

> We believe SWE‑1.5 may be the first public production model trained on the new GB200 generation.

>

> Our RL rollouts require high‑fidelity environments with code execution and web browsing.

> We use our VM hypervisor otterlink to scale Devin to tens of thousands of concurrent machines (see blockdiff).

> This enables high concurrency and aligns training environments with our Devin production environments.

Key similarities to Composer‑1:

- Heavy use of reinforcement learning (RL)

- Massive concurrent sandboxed coding environments

---

The Emerging Trend in Agent‑Based Coding Models

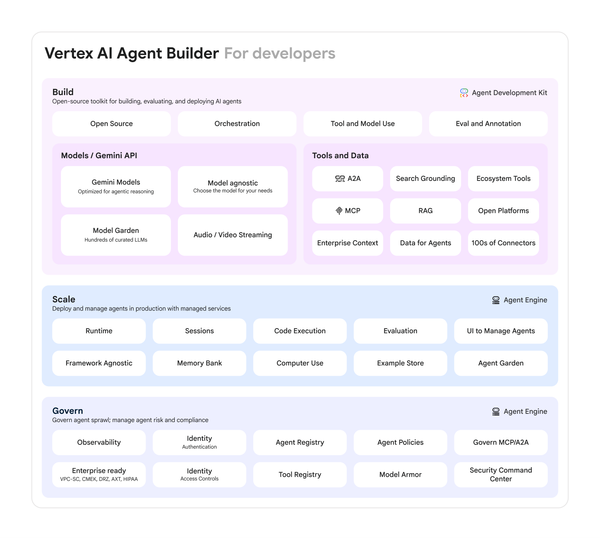

The emerging recipe for building a truly capable agent‑based coding tool appears to be:

- Fine‑tune a custom model with reinforcement learning against your own custom tools.

- Train against very large numbers of concurrent sandboxed environments (tens of thousands of machines in SWE‑1.5’s case).

- Align training infrastructure closely with real deployment settings.

Both Windsurf and Cursor are demonstrating this infrastructure‑heavy, concurrency‑driven approach.
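The concurrency pattern behind this approach can be illustrated in miniature. The sketch below is a toy, not Windsurf’s or Cursor’s actual training code: `run_rollout` is a hypothetical stand-in for one agent episode in a sandbox, and the random reward is a placeholder for a real task score. It only shows the general shape of running many rollouts at once with a cap on how many are in flight.

```python
import asyncio
import random


async def run_rollout(env_id: int, rng: random.Random) -> float:
    """Hypothetical stand-in for one agent episode in a sandboxed environment.

    A real rollout would execute code, browse the web, and score the result;
    here we just yield control once and return a placeholder reward in [0, 1).
    """
    await asyncio.sleep(0)
    return rng.random()


async def collect_rollouts(
    num_envs: int, max_concurrent: int, seed: int = 0
) -> list[float]:
    """Run num_envs rollouts with at most max_concurrent in flight at once."""
    limiter = asyncio.Semaphore(max_concurrent)
    rng = random.Random(seed)

    async def guarded(env_id: int) -> float:
        # The semaphore caps concurrency, much as a fixed pool of VMs would.
        async with limiter:
            return await run_rollout(env_id, rng)

    # gather preserves input order, so rewards[i] belongs to environment i.
    return await asyncio.gather(*(guarded(i) for i in range(num_envs)))


if __name__ == "__main__":
    rewards = asyncio.run(collect_rollouts(num_envs=1000, max_concurrent=100))
    mean = sum(rewards) / len(rewards)
    print(f"collected {len(rewards)} rewards, mean {mean:.3f}")
```

In a production system the hard part is the environments themselves (fast VM startup, isolation, fidelity to deployment), which is what the hypervisor work quoted earlier addresses; the scheduling loop is the easy piece.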

---

Broader Ecosystem Connections

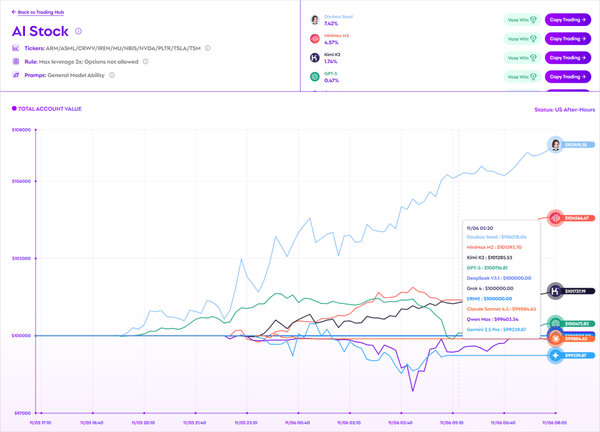

Beyond code generation, similar scaling concepts are emerging in multi‑platform AI content creation.

Open‑source projects such as AiToEarn (see its official site and GitHub repo) enable creators to:

- Generate, publish, and monetize AI content across platforms, including Douyin, Kwai, WeChat, Bilibili, Rednote (Xiaohongshu), Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X (Twitter)

- Integrate analytics and model ranking tools

- Build cross‑platform pipelines for scaling content workflows

Just as large‑scale concurrent environments help train SWE‑1.5, AiToEarn’s distributed publishing system scales creative output. Both approaches suggest that massively parallel infrastructure is becoming key to sustained AI‑driven productivity.

---

In summary, SWE‑1.5 is not only fast and capable but also emblematic of a wider trend: coupling RL training, custom tooling, and high‑concurrency clusters to push the limits of AI agents. This parallels developments in other AI domains, where infrastructure scalability increasingly shapes real-world success.