AI Playbooks

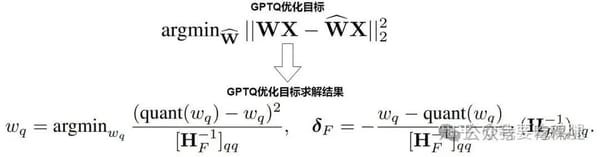

Complete Guide to 4-bit Quantization Algorithms: From GPTQ and AWQ to QLoRA and FlatQuant

Large Model Intelligence|4-Bit Quantization Frontier Introduction: Balancing Compression and Accuracy In our previous discussion on 8-bit quantization (W8A8), pioneers balanced accuracy with efficiency, pointing the way toward slimmer and faster large models. But for enormous models—with parameters in the hundreds of billions to trillions—8-bit remains too large