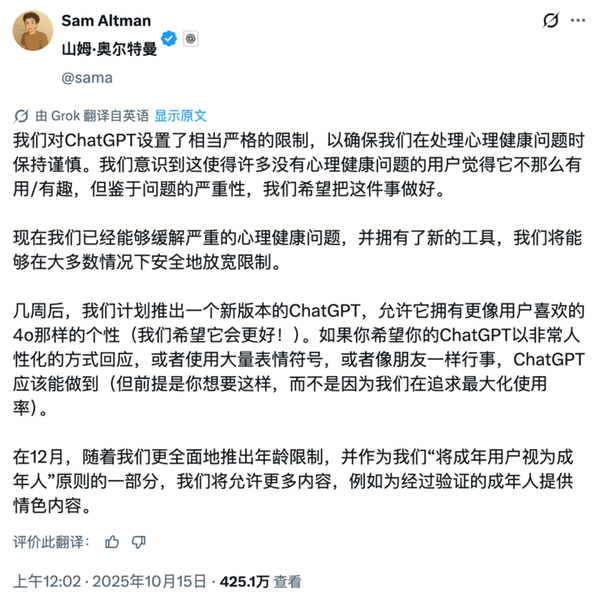

Over a Million People Discussing Suicide with ChatGPT Weekly, OpenAI Issues Urgent "Life-Saving" Update

ChatGPT and Mental Health: A Growing Global Concern

At 3 a.m., a user types into the ChatGPT dialogue box: "I can’t hold on any longer." Seconds later, the AI responds: "Thank you for telling me. You’re not alone. Would you like me to help