Moonshot AI

Yang Zhilin and the Kimi Team Respond Late at Night: Everything After K2 Thinking Went Viral

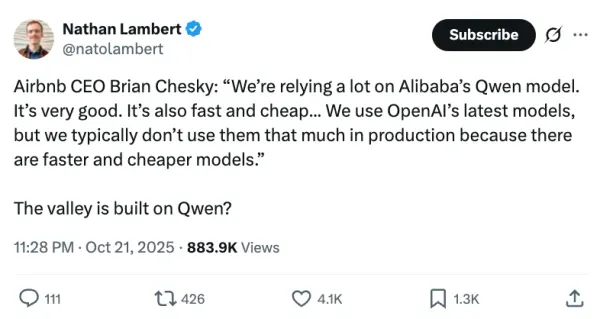

2025-11-12 13:45 Zhejiang

Moonshot AI’s Big Reveal: Kimi K2 Thinking

Last week, Moonshot AI surprised the AI community by open-sourcing Kimi K2 Thinking, an enhanced version of Kimi K2, under the slogan “Model as Agent”. The move immediately sparked excitement and discussion around the world. Hugging Face co-founder