
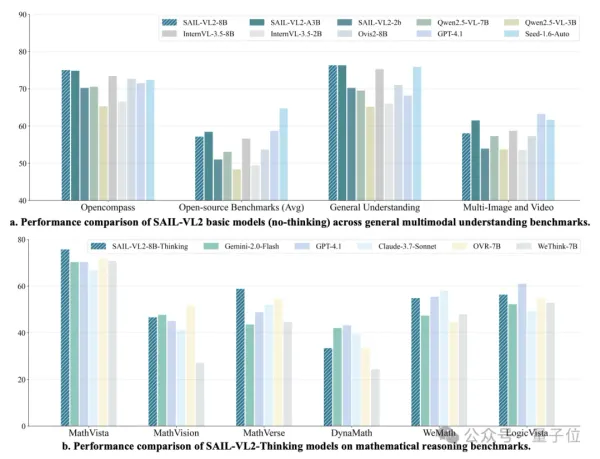

Multimodal AI Models Learn to Reflect and Review: SJTU and Shanghai AI Lab Tackle Complex Multimodal Reasoning

Multimodal AI Breakthrough: MM-HELIX Enables Long-Chain Reflective Reasoning

Multimodal large models are becoming increasingly impressive, yet many users are frustrated by how directly they rush to an answer. Whether generating code, interpreting charts, or answering complex questions, many multimodal large language models (MLLMs) jump straight to a final answer without stopping to reconsider their reasoning. Like an