
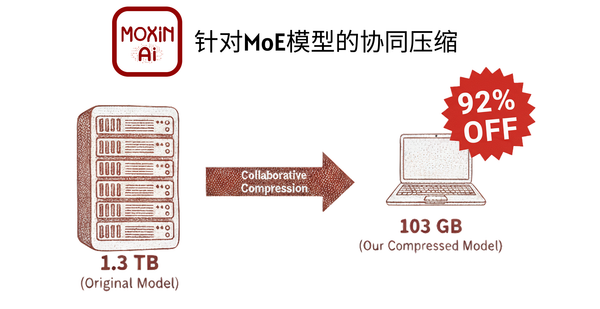

Breaking the Terabyte-Scale Model “Memory Wall”: Collaborative Compression Framework Fits 1.3 TB MoE Model into a 128 GB Laptop

Collaborative Compression: Breaking the Memory Wall for Trillion-Parameter MoE Models

This article introduces the Collaborative Compression framework, which, for the first time, successfully deployed a trillion-parameter Mixture-of-Experts (MoE) model on a consumer-grade PC with 128 GB of RAM and achieved over 5 tokens/second in local inference. Developed by the Moxin