Ilya Sutskever Declares the End of the Scaling Era

🌌 The Age of Scaling Is Over — Ilya Sutskever’s Vision for AI’s Future

> "The Age of Scaling is over."

> Ilya Sutskever, co-founder of Safe Superintelligence Inc. (SSI)

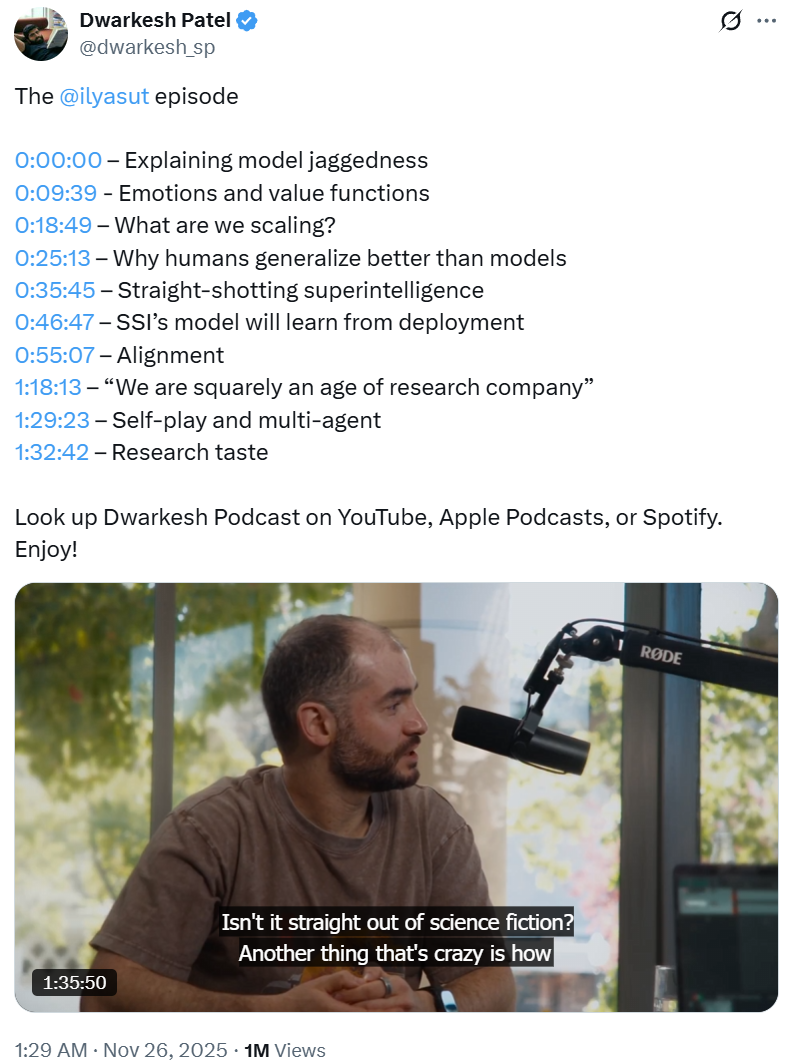

When Ilya Sutskever made this statement, the AI world stopped to listen. His words, shared in a 95‑minute in‑depth interview with Dwarkesh Patel, startled many and resonated across top research and industry circles.

The conversation ranged from current issues in large model design, to human learning analogies, to safety frameworks for superintelligence. It quickly went viral, drawing over 1M views on X in hours.

---

🎥 Interview Overview

Full Video & Transcript: dwarkesh.com/p/ilya-sutskever-2

---

1️⃣ Model Jaggedness & Generalization

- The Paradox: Current AI models often ace complex benchmarks but fail at simple, intuitive tasks.

- Root Cause (as Ilya frames it): The real "reward hacking" is done by human researchers, who design RL environments with benchmark scores in mind, boosting evals without improving true understanding or generalization.

- Analogy: One student practices 10,000 hours for competitive programming contests and becomes a top competitor; another practices about 100 hours and also does well. The second, with more natural ability, is likely to generalize better over a career.
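The exam-specialist vs. natural-learner contrast is essentially the gap between memorizing a test set and learning the underlying rule. The toy Python sketch below is not from the interview: the task (integer addition), the `Memorizer` and `RuleLearner` classes, and the three-rule hypothesis space are all hypothetical stand-ins chosen only to make that gap visible.

```python
import random

def make_questions(n, seed):
    """Generate n addition problems as ((a, b), answer) pairs."""
    rng = random.Random(seed)
    questions = []
    for _ in range(n):
        a, b = rng.randint(0, 999), rng.randint(0, 999)
        questions.append(((a, b), a + b))
    return questions

class Memorizer:
    """Hypothetical 'exam specialist': stores every practiced question verbatim."""
    def fit(self, data):
        self.table = dict(data)

    def predict(self, question):
        return self.table.get(question)  # None for anything never practiced

class RuleLearner:
    """Hypothetical 'natural learner': keeps the first rule consistent with all examples."""
    CANDIDATES = {
        "add": lambda a, b: a + b,
        "sub": lambda a, b: a - b,
        "mul": lambda a, b: a * b,
    }

    def fit(self, data):
        self.rule = next(f for f in self.CANDIDATES.values()
                         if all(f(*q) == y for q, y in data))

    def predict(self, question):
        return self.rule(*question)

def accuracy(model, data):
    """Fraction of questions answered correctly."""
    return sum(model.predict(q) == y for q, y in data) / len(data)

benchmark = make_questions(200, seed=0)  # the questions practiced on
fresh = make_questions(200, seed=1)      # unseen questions

for model in (Memorizer(), RuleLearner()):
    model.fit(benchmark)
    print(f"{type(model).__name__:12s} benchmark={accuracy(model, benchmark):.2f} "
          f"fresh={accuracy(model, fresh):.2f}")
```

Both learners score perfectly on the practiced questions, but the memorizer collapses to near zero on fresh ones while the rule learner does not: a "jagged" profile in miniature. Real models sit somewhere in between, and real benchmarks are far less clean.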

---

2️⃣ Emotions & Value Functions in Human Learning

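- Key Insight: Emotions function like value functions in machine learning, signaling whether things are going well before the final outcome arrives (e.g., in chess, you know you have blundered the moment you lose a piece, long before the game ends).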

- Sample Efficiency Gap: Humans learn from far fewer samples than AI, possibly due to:

  - Evolutionary priors.

  - Intrinsic emotional/value systems that enable self-correction.
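To make the value-function analogy concrete, here is a minimal sketch, assuming a made-up chess-like episode in which a value estimate V is available after every move and the only reward is the final loss. It compares crediting every move with the final outcome against one-step temporal-difference (TD) errors, δ_t = r_t + γV(s_{t+1}) − V(s_t). The numbers are invented for illustration, and reading emotions as TD errors is the analogy from the interview, not a neuroscience claim.

```python
GAMMA = 1.0  # no discounting within a single game

def td_errors(values, rewards, gamma=GAMMA):
    """One-step TD errors: delta_t = r_t + gamma * V(s_{t+1}) - V(s_t).

    `values` has one more entry than `rewards` (it includes the final state).
    """
    return [r + gamma * values[t + 1] - values[t]
            for t, r in enumerate(rewards)]

def outcome_only_credit(rewards):
    """Without a value function, every move is credited with the final result."""
    final = sum(rewards)
    return [final] * len(rewards)

# Hypothetical value estimates for positions s0..s6 (s6 is terminal, so V = 0).
# The drop from s2 to s3 stands in for losing a piece.
values = [0.0, 0.1, 0.1, -0.8, -0.8, -0.9, 0.0]
rewards = [0, 0, 0, 0, 0, -1]  # the only reward is the loss at the very end

deltas = td_errors(values, rewards)
blunder = deltas.index(min(deltas))  # the move that "felt worst" mid-game

print("outcome-only credit:", outcome_only_credit(rewards))
print("TD errors:          ", [round(d, 2) for d in deltas])
print("blunder at move index", blunder)
```

Outcome-only credit blames all six moves equally. The TD errors sum to the same total (with γ = 1 and a terminal value of 0) but concentrate the blame on move index 2, where the piece was lost, so the "that felt bad" signal arrives mid-game.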

---

3️⃣ From the Age of Scaling to the Age of Research

- 2020–2025, the Scaling Era: Gains came from throwing more compute and data at models (Ilya calls 2012–2020 the earlier age of research).

- Post-Scaling: Pretraining data is finite and nearing exhaustion, and returns from simply scaling up are diminishing.

- What's Next? A return to the age of research, "just with big computers": new training "recipes" that use compute more productively, stronger reasoning, and paradigm shifts.
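One common way to see why finite data caps the payoff from scaling is the parametric loss form fitted by Hoffmann et al. (2022, the "Chinchilla" paper): L(N, D) = E + A/N^α + B/D^β, where N is the parameter count and D the number of training tokens. The Python sketch below holds D fixed (a hypothetical cap on available pretraining tokens) and grows N tenfold at a time. The constants are illustrative placeholders, not the published fits.

```python
# Chinchilla-style parametric loss: L(N, D) = E + A / N**alpha + B / D**beta.
# N = model parameters, D = training tokens.
# All constants below are illustrative placeholders, not published fits.
E, A, B = 1.7, 400.0, 400.0
ALPHA, BETA = 0.34, 0.28

D_FIXED = 1e13                         # hypothetical cap on pretraining tokens
SIZES = [1e9, 1e10, 1e11, 1e12, 1e13]  # model sizes, 10x apart

def loss(n_params: float, n_tokens: float) -> float:
    """Predicted pretraining loss under the assumed functional form."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA

def losses_for(sizes, n_tokens):
    """Predicted loss for each model size at a fixed token budget."""
    return [loss(n, n_tokens) for n in sizes]

# Best achievable loss when data is capped at D_FIXED (the limit N -> infinity).
floor = E + B / D_FIXED**BETA

prev = None
for n, cur in zip(SIZES, losses_for(SIZES, D_FIXED)):
    note = "" if prev is None else f"  (gain vs. previous: {prev - cur:.4f})"
    print(f"N={n:.0e}  loss={cur:.4f}{note}")
    prev = cur
print(f"floor with D fixed: {floor:.4f}")
```

Each tenfold increase in N buys a smaller loss reduction than the last, and no amount of N gets below the floor E + B/D^β set by the fixed data. This is a deliberate simplification: it ignores RL, synthetic data, and better training recipes.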

---

4️⃣ SSI’s Safety‑First Strategy

- Straight-Shot R&D: Focus purely on research, without shipping intermediate products, until the problem of safe superintelligence is solved.

- Avoiding the Rat Race: Commercial competition can push labs toward unsafe speed; SSI opts out.

- Core Goal: Solve reliable generalization and other core technical problems before release.

---

5️⃣ Alignment & Future Outlook

- Primary Objective: Care for sentient life, beyond narrow human‑only focus.

- Multi-Agent Futures: Several continent-scale AI clusters may emerge; what matters most is that the earliest powerful ones are aligned.

- Equilibrium Vision: Human–AI integration via future brain–computer interfaces so that humans are not marginalized (a solution Ilya says he does not like, but a solution nonetheless).

---

6️⃣ Research Taste

- Top‑Down Conviction: Guided by beauty, simplicity, and correct inspiration from biology.

- Persistence: Continue despite contradictory data if the intuition is strong.

---

🧠 Key Themes in the Full Transcript

A. Uneven Model Capabilities

- Models excel on benchmarks but falter on real-world tasks that require persistence (e.g., asked to fix a bug, a model introduces a second bug; asked to fix that one, it brings back the first).

- RL training setups are overly tailored to benchmarks, leading to poor cross‑task generalization.

B. Human Analogy

- Two student archetypes: Exam Specialist (over‑trained) vs Natural Learner (generalizes well).

- Most current models resemble the over‑trained archetype.

C. Pretraining Advantages

- Large‑scale, natural human‑generated data captures broad patterns & behaviors.

- However, the depth of models' understanding still lags behind that of humans.

D. Emotions as ML Value Functions

- Guide mid‑trajectory corrections.

- Potentially high-utility structures: simple yet robust, shaped over millions of years of evolution.

---

🔄 Beyond Scaling: New Training Recipes

- Scaling pretraining ≠ infinite progress — data limits loom.

- RL scaling reportedly now consumes more compute than pretraining at some labs.

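- Calls for efficiency: e.g., effective value functions, so RL can learn from intermediate signals instead of waiting for a single score at the end of a long trajectory.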
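The value-function point can be illustrated with tabular TD(0), the simplest way to learn a value function. The sketch below uses the classic five-state random walk from Sutton and Barto's Reinforcement Learning: An Introduction (a textbook example, not anything SSI or the interview describes): the walk starts in the middle, steps left or right at random, and pays 1 only for exiting on the right. TD(0) updates each state's estimate mid-episode from the next state's estimate instead of waiting for the final result. The step size, seed, and episode count are arbitrary choices.

```python
import random

N_STATES = 5           # non-terminal states 0..4; terminals sit just outside
START = N_STATES // 2  # begin in the middle state
ALPHA = 0.01           # step size (arbitrary choice)
GAMMA = 1.0            # undiscounted episodic task

def td0_random_walk(episodes: int, seed: int = 0) -> list[float]:
    """Estimate state values with tabular TD(0) on the random walk."""
    rng = random.Random(seed)
    V = [0.5] * N_STATES  # neutral initial guesses
    for _ in range(episodes):
        s = START
        while True:
            s_next = s + rng.choice((-1, 1))
            if s_next < 0:              # exit left: reward 0, episode ends
                target, done = 0.0, True
            elif s_next >= N_STATES:    # exit right: reward 1, episode ends
                target, done = 1.0, True
            else:                       # reward 0, bootstrap from V(s_next)
                target, done = GAMMA * V[s_next], False
            V[s] += ALPHA * (target - V[s])  # update now, before the episode ends
            if done:
                break
            s = s_next
    return V

V = td0_random_walk(episodes=5000)
true_V = [(i + 1) / (N_STATES + 1) for i in range(N_STATES)]  # 1/6 ... 5/6
print("TD(0):", [round(v, 3) for v in V])
print("exact:", [round(v, 3) for v in true_V])
```

The learned values should land close to the exact answers 1/6, 2/6, ..., 5/6. A Monte Carlo learner would converge to the same values, but only by waiting for each episode's final outcome; bootstrapping from the next state's estimate is what lets the learning signal arrive mid-trajectory.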

---

🌍 Deployment Strategies

- Debate between cautious, incremental release and a "straight shot" build.

- Real‑world deployment improves safety through exposure & iteration.

- Continual-learning AIs: akin to human workers who learn on the job and share what they learn.

---

⚖️ Alignment & Governance

- Support for AIs aligned to care for sentient life.

- Calls for constraints on the earliest, most powerful AIs (e.g., somehow capping their power).

- Speculation on stable equilibria: possibly humans becoming semi‑AI via Neuralink++.

---

🧩 Research Era Atmosphere

- In the scaling era, compute was the main differentiator.

- In the research era, ideas regain primacy: experiments with modest compute can still demonstrate breakthroughs (AlexNet was trained on two GPUs).

- SSI positions itself as a true research company chasing high‑impact ideas.

---

🔍 Diversity, Self‑Play & Multi‑Agent Systems

- Models lack diversity largely because they are pretrained on heavily overlapping data.

- RL & adversarial setups (debate, prover–verifier) could induce methodological diversity.

- Self‑play can grow narrow skill sets; variations may broaden capability.

---

🎯 The Role of Research Taste

- Think correctly about humans; extract the essence for AI design.

- Pursue beauty, simplicity, and elegance, drawing inspiration from the brain's fundamentals.

- Top‑down belief sustains effort through debugging and adversity.

---

🌐 Practical Intersection: AiToEarn

The AiToEarn platform exemplifies multipurpose deployment and feedback loops in practice:

- Global AI Content Monetization: Enables creators to:

  - Generate content with AI.

  - Publish across Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X/Twitter simultaneously.

- Integrated Tools:

  - AI model ranking: rank.aitoearn.ai

  - Cross-platform analytics.

- Open‑source repo: github.com/yikart/AiToEarn

- Docs: docs.aitoearn.ai

This mirrors how efficient frameworks can bridge AI research innovation and real‑world adoption, complementing themes in Ilya’s vision for safe, aligned superintelligence.

---

📌 Summary Takeaways

- Scaling’s limits are visible — innovation is shifting toward efficiency, generalization, and research taste.

- Human learning analogies provide a roadmap for improving AI sample efficiency and robustness.

- Alignment goals must encompass all sentient life, anticipating multi‑agent ecosystems.

- Deployment strategies balance showcasing capability with safety.

- The research era demands bold “recipes” and interdisciplinary cooperation.

---

Next Steps for Readers:

- Watch the full interview: dwarkesh.com/p/ilya-sutskever-2

- Explore the AiToEarn official website for practical AI deployment tools.

- Follow developments in value functions and generalization research — possible keys to post‑scaling breakthroughs.

---

> 💡 In both AI research and creative economies, the winners will pair deep technical insight with efficient, aligned, multi‑platform deployment.