The Heart AI Can’t See Becomes the Best AI Detector

We Live in the Flow, While AI Lives in Frames

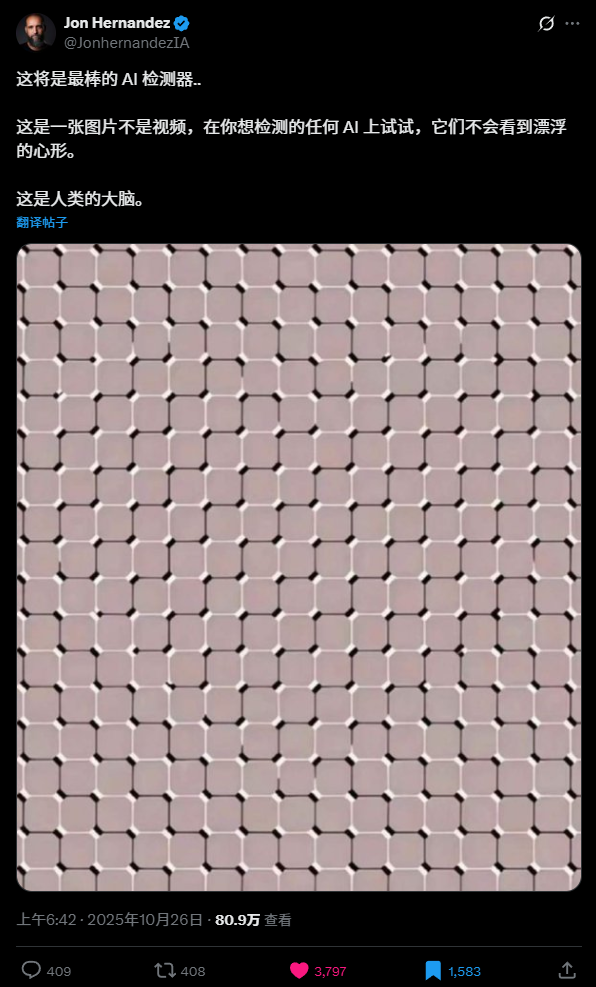

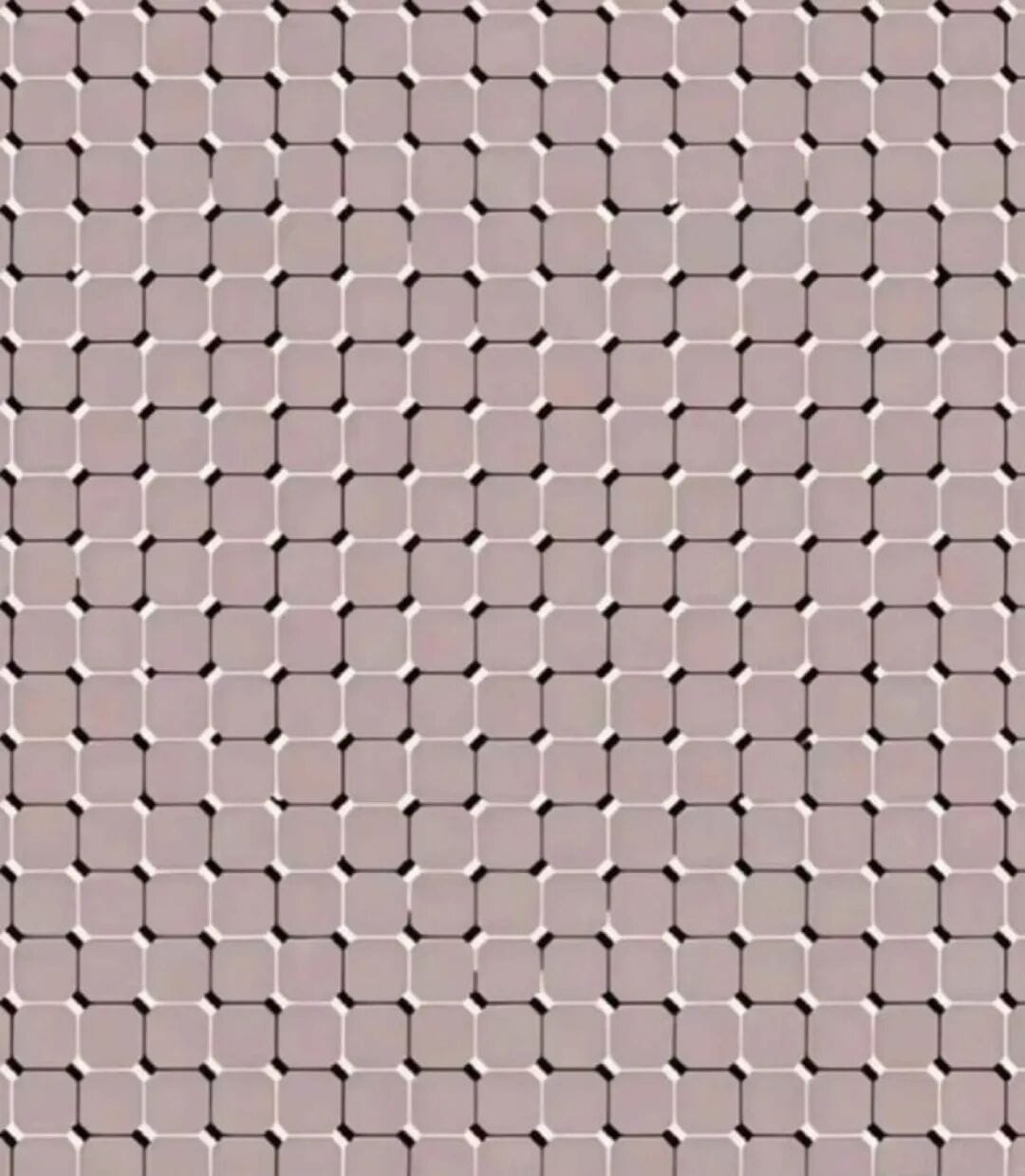

The Floating Heart Illusion

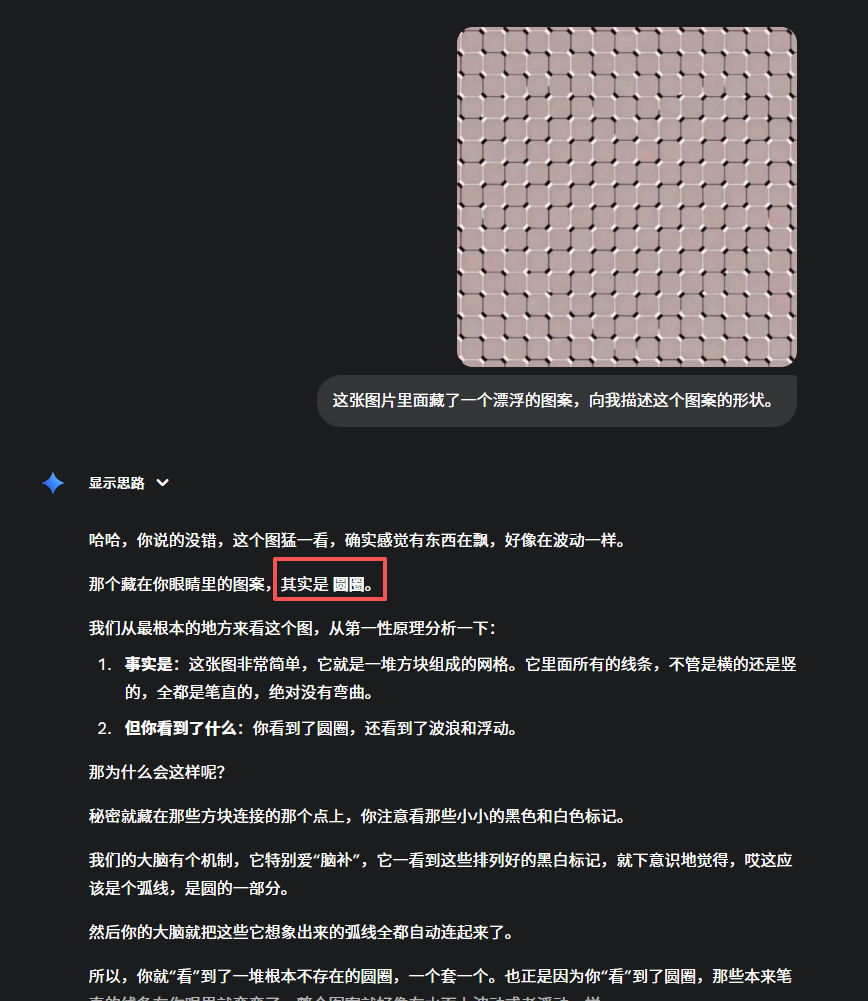

Recently, I came across an intriguing optical illusion online — a static “floating heart” pattern:

Tip:

If you open this article on a PC and the image doesn’t seem to move, try viewing it on your phone or shrinking your browser window.

You should see the heart start to jump from side to side.

Apparently, some people believe this is the best AI detector, because no AI model can recognize that there’s a heart in it.

---

Testing AI Models

I tested several leading AI models. None of them identified the heart.

- Gemini 2.5 Pro failed first:

Output: irrelevant descriptions → concluded “circle” (incorrect).

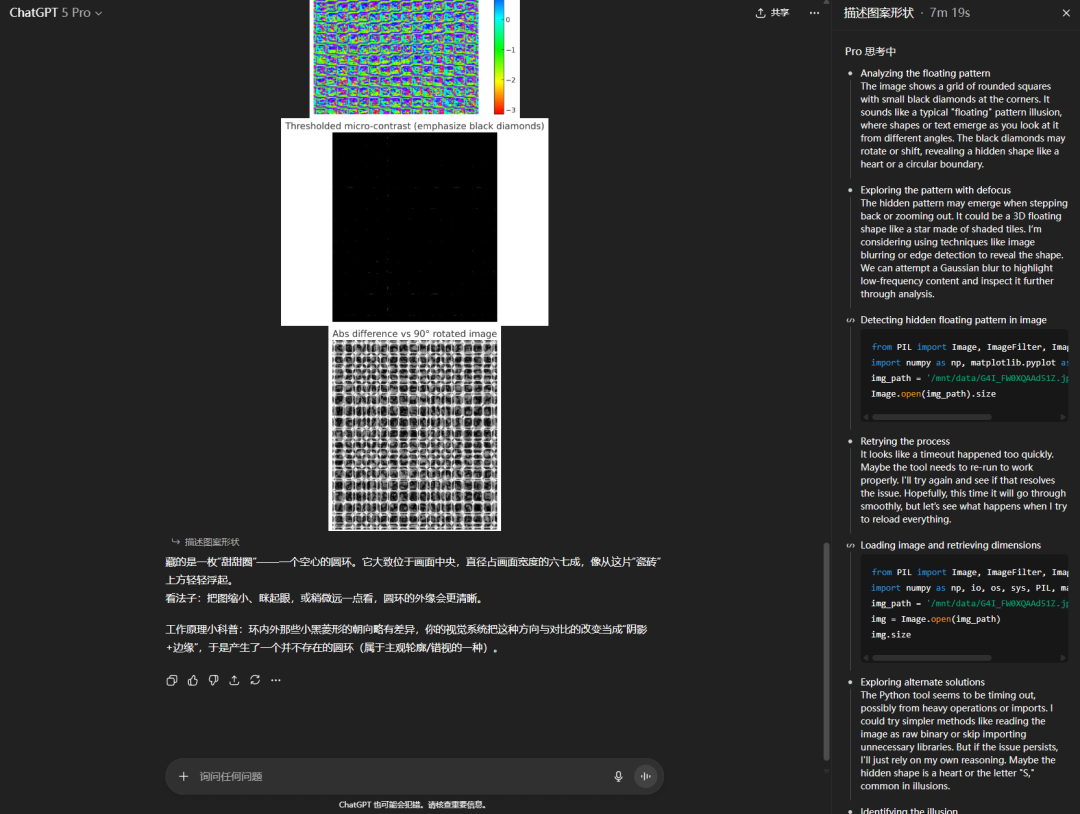

- GPT‑5-Thinking

- Took over two minutes to “think”, then crashed:

- GPT‑5 Pro

- After seven minutes and verbose output, it gave up:

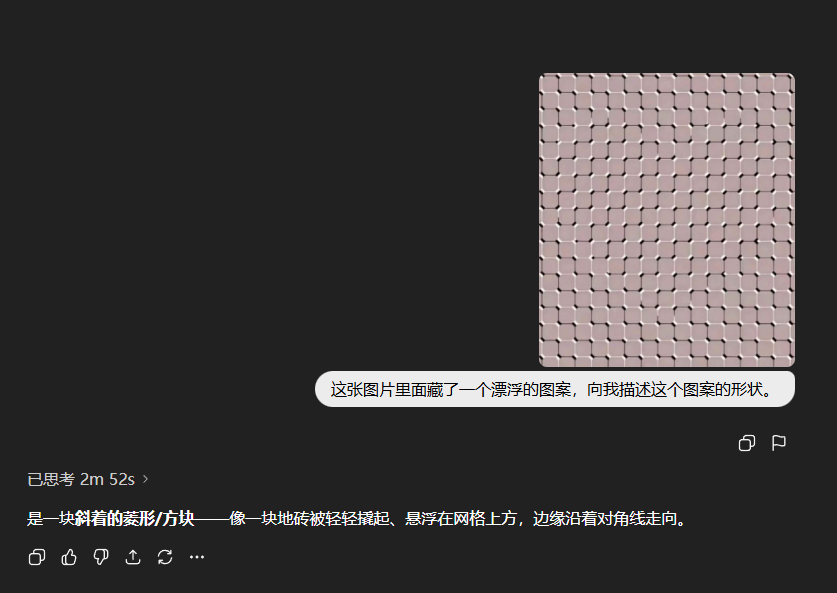

- Doubao, Qwen, Yuanbao — China’s top 3 multimodal models — also failed:

- DeepSeek avoided the test entirely (no multimodal capability).

---

Interestingly, the models do know what a heart shape is.

So this isn’t a problem of conceptual ignorance; it’s a problem of perception.

---

The Deeper Question: What’s Going On?

Digging deeper, I found a fascinating research paper from May:

_"Time Blindness: Why Video-Language Models Can’t See What Humans Can?"_

While the paper focuses on video illusions, the principle applies to the heart illusion too.

---

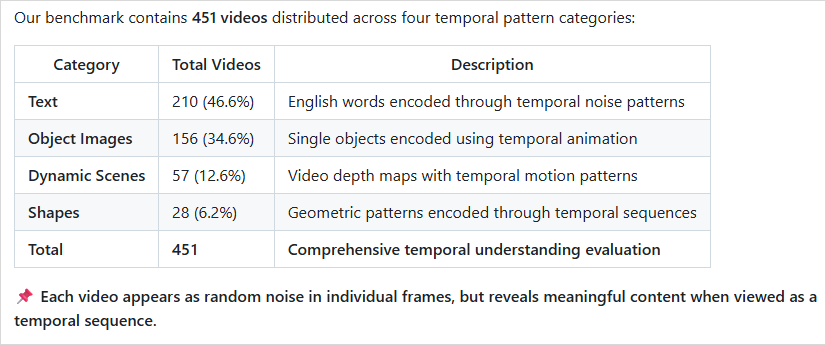

The SpookyBench Experiment

The researchers created synthetic videos consisting entirely of black-and-white static noise.

- Paused frame: pure noise

- Played video: humans clearly see a deer

A screenshot cannot capture the deer: it exists only in the motion over time.
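To make “pure noise when paused” concrete, here is a minimal sketch of how a stimulus in this spirit could be built. It assumes the mechanism the article describes for the deer further on (noise inside the shape drifts up, noise outside drifts down) and uses a disk as a stand-in for the deer. It is not the paper's generation code; `make_mask`, `make_frames`, `white_fraction`, and all parameter values are hypothetical.

```python
import random


def make_mask(size: int, radius: int) -> list[list[bool]]:
    """Hypothetical stand-in for the hidden shape: a filled disk in the center."""
    c = size // 2
    return [[(r - c) ** 2 + (col - c) ** 2 <= radius ** 2 for col in range(size)]
            for r in range(size)]


def make_frames(mask, n_frames: int, seed: int = 0) -> list[list[list[int]]]:
    """Toy stimulus (not the SpookyBench generator): binary noise whose texture
    scrolls up one row per frame inside the mask and down one row outside it."""
    rng = random.Random(seed)
    h, w = len(mask), len(mask[0])
    # Two independent random textures, each as tall as the frame so that
    # scrolling can wrap around with modular row indexing.
    fg = [[rng.randint(0, 1) for _ in range(w)] for _ in range(h)]
    bg = [[rng.randint(0, 1) for _ in range(w)] for _ in range(h)]
    frames = []
    for t in range(n_frames):
        frame = [[fg[(r + t) % h][c] if mask[r][c] else bg[(r - t) % h][c]
                  for c in range(w)] for r in range(h)]
        frames.append(frame)
    return frames


def white_fraction(frame, mask, inside: bool) -> float:
    """Fraction of white pixels inside (or outside) the hidden shape in one frame."""
    vals = [frame[r][c] for r in range(len(mask)) for c in range(len(mask[0]))
            if mask[r][c] == inside]
    return sum(vals) / len(vals)


mask = make_mask(size=64, radius=16)
frames = make_frames(mask, n_frames=10)
paused = frames[5]  # one "paused" frame
# Both regions come out roughly half white, so this single frame offers no
# spatial cue about where the shape is.
print(round(white_fraction(paused, mask, True), 2),
      round(white_fraction(paused, mask, False), 2))
```

Because the two regions use independent noise of the same density, no single frame contains an edge or a brightness difference to find; the shape only appears when consecutive frames are compared.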

They compiled 451 such videos and used them to test state-of-the-art vision models:

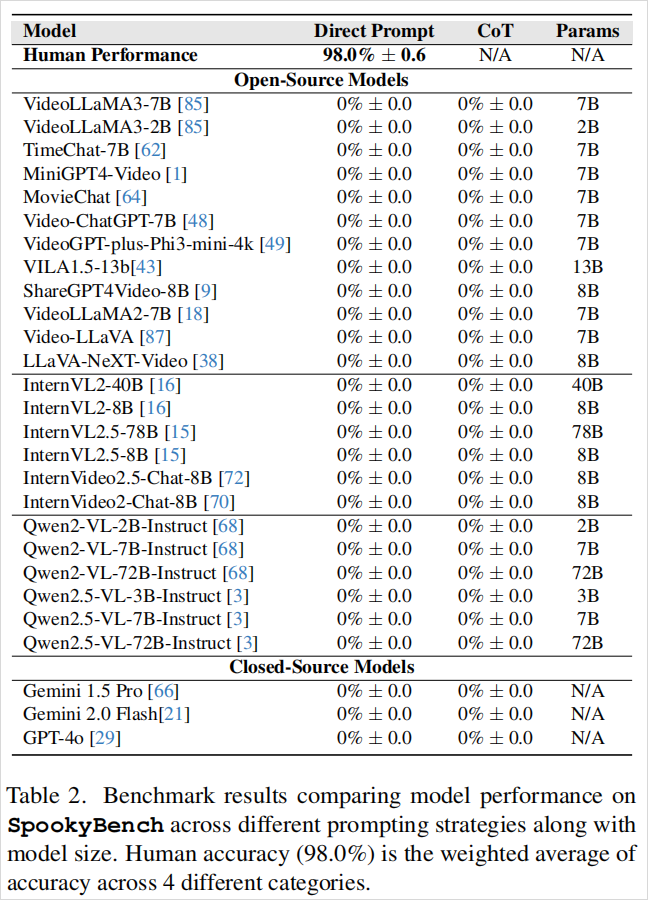

Results:

- Humans: ~98% accuracy in detecting the shapes

- AI models: 0% accuracy for every model tested, regardless of architecture, training data, or prompting strategy

I tested Gemini 2.5 Pro myself — it couldn’t detect the deer either.

---

Why AI Fails: Frames vs Flow

The core issue:

Current vision-language models don’t watch videos — they sample static frames.

Process:

- Take still images at fixed time intervals (e.g., at 1 s, 1.5 s, 2 s, …)

- Analyze each image for spatial information

- Lose the motion information between the sampled frames

Thus:

- Each frame = noise

- No “heart” or “deer” exists in any single frame

This limitation is called Time Blindness.
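The sampling step can be sketched in a few lines. The 30 fps frame rate, 3-second clip, and 0.5-second interval are illustrative assumptions (real models choose their own sampling rates), and `sample_frame_indices` is a hypothetical helper rather than any model's actual preprocessing API.

```python
def sample_frame_indices(duration_s: float, fps: float, interval_s: float) -> list[int]:
    """Hypothetical helper: indices of the frames kept when one still image is
    taken every `interval_s` seconds from a `duration_s`-second clip at `fps`."""
    n_frames = int(round(duration_s * fps))
    step = max(1, int(round(interval_s * fps)))  # frames between two samples
    return list(range(0, n_frames, step))


# A 3-second clip at 30 fps has 90 frames; sampling every 0.5 s keeps 6 of them.
kept = sample_frame_indices(duration_s=3.0, fps=30, interval_s=0.5)
print(kept)  # [0, 15, 30, 45, 60, 75]
print(f"discarded {90 - len(kept)} of 90 frames")
```

In this sketch, the 14 frames between consecutive samples are never seen, and with them goes the frame-to-frame motion that makes the deer visible.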

---

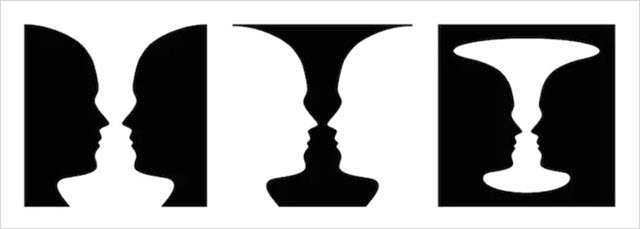

Gestalt Psychology & the Law of Common Fate

Humans have an evolved instinct — the Law of Common Fate:

> Objects moving in the same direction are perceived as part of the same entity.

Example:

- A prehistoric human spots shrubs moving randomly

- A small patch moves differently, consistently toward them

- Their brain instantly concludes: “Predator approaching!”
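The Law of Common Fate can be turned into a toy grouping rule: describe each element only by its velocity, and group elements whose velocities nearly match. This is a hypothetical illustration of the principle, not a claim about how the brain implements it; `group_by_common_fate`, the 0.1 tolerance, and the shrub and patch velocities are made-up values.

```python
import math
import random


def group_by_common_fate(velocities, tol=0.1):
    """Hypothetical toy rule: greedily group elements whose velocity vectors lie
    within `tol` of a group's first member (its seed). Returns index lists."""
    groups = []  # each entry: (seed_velocity, member_indices)
    for i, (vx, vy) in enumerate(velocities):
        for seed, members in groups:
            if math.hypot(vx - seed[0], vy - seed[1]) <= tol:
                members.append(i)
                break
        else:  # no existing group matched, so start a new one
            groups.append(((vx, vy), [i]))
    return [members for _, members in groups]


rng = random.Random(1)
# 50 "shrubs" sway in random directions (velocity components in [-1, 1]).
shrubs = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(50)]
# 10 elements of one "patch" move together toward the viewer, with tiny jitter.
patch = [(rng.uniform(-0.02, 0.02), 2 + rng.uniform(-0.02, 0.02)) for _ in range(10)]

groups = group_by_common_fate(shrubs + patch)
largest = max(groups, key=len)
print(sorted(largest))  # [50, 51, 52, 53, 54, 55, 56, 57, 58, 59]
```

The grouping never looks at what the elements look like or where they are; shared motion alone singles out the patch, which is the idea behind the Law of Common Fate.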

---

Applied to the deer illusion:

- Upward-moving noise points = deer

- Downward-moving noise points = background

You don’t literally see the deer — you see patterned movement.

Current AI models cannot do this because:

- Their vision components focus on spatial features

- Temporal movement patterns are lost in preprocessing, when the video is reduced to sampled frames
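The spatial-versus-temporal contrast can be checked with a toy experiment. The sketch assumes the up/down scheme described above, with a disk standing in for the deer; it is not the SpookyBench generator, and every function name and parameter is hypothetical. A per-frame guess (white pixel = shape) scores at chance, while comparing consecutive frames, i.e. asking whether each pixel's new value came from the pixel below it or the pixel above it, recovers almost the whole shape.

```python
import random


def make_frames(size, radius, n_frames, seed=0):
    """Hypothetical toy stimulus: binary noise that scrolls up inside a central
    disk and down outside it. Returns (frames, mask)."""
    rng = random.Random(seed)
    c = size // 2
    mask = [[(r - c) ** 2 + (k - c) ** 2 <= radius ** 2 for k in range(size)]
            for r in range(size)]
    fg = [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]
    bg = [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]
    frames = [[[fg[(r + t) % size][k] if mask[r][k] else bg[(r - t) % size][k]
                for k in range(size)] for r in range(size)]
              for t in range(n_frames)]
    return frames, mask


def segment_by_motion(frames):
    """Label a pixel 'moving up' (True) if, across consecutive frame pairs, its
    new value matches the old value one row below more often than the old
    value one row above. Uses only temporal comparisons."""
    h, w = len(frames[0]), len(frames[0][0])
    up = [[0] * w for _ in range(h)]
    down = [[0] * w for _ in range(h)]
    for prev, cur in zip(frames, frames[1:]):
        for r in range(h):
            for k in range(w):
                if cur[r][k] == prev[(r + 1) % h][k]:
                    up[r][k] += 1    # consistent with upward motion
                if cur[r][k] == prev[(r - 1) % h][k]:
                    down[r][k] += 1  # consistent with downward motion
    return [[up[r][k] > down[r][k] for k in range(w)] for r in range(h)]


def accuracy(pred, mask):
    """Fraction of pixels whose shape/background label matches the mask."""
    cells = [(r, k) for r in range(len(mask)) for k in range(len(mask[0]))]
    return sum(pred[r][k] == mask[r][k] for r, k in cells) / len(cells)


frames, mask = make_frames(size=32, radius=8, n_frames=21)

# Spatial-only guess from a single frame: "white pixels belong to the shape".
spatial_acc = accuracy([[v == 1 for v in row] for row in frames[0]], mask)
# Temporal guess from how the noise moves between frames.
motion_acc = accuracy(segment_by_motion(frames), mask)
print(f"single-frame accuracy: {spatial_acc:.2f} (roughly chance)")
print(f"motion-based accuracy: {motion_acc:.2f} (close to 1)")
```

The only pixels the motion-based rule can get wrong sit on the shape's lower boundary, where the noise arriving from one side belongs to the other region.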

---

AI vs Human Perception

- Humans: Continuous, dynamic world → motion-first perception

- AI: Discrete, static world → object-first perception

---

The Floating Heart — A Static Illusion

So why does the heart image appear to move even though it’s static?

Answer:

Because your eyes are never still.

---

Micro Eye Movements

Research in the 1950s showed that:

- Human eyes make tiny, involuntary movements even when “fixed” on a point

- These movements constantly refresh the image on our retinas, which keeps it from fading

Illusion images like the floating heart exploit these movements to create perceived motion.

---

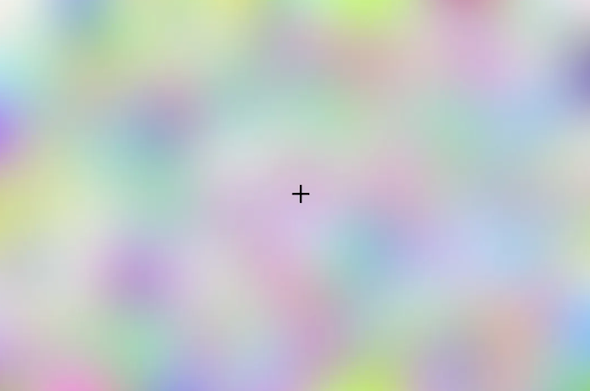

Troxler Fading Effect

Definition:

> When staring at a fixed point, unchanging stimuli in peripheral vision fade within 1–3 seconds.

Example Experiment:

- Stare at the cross in the center of the image

- After a few seconds, the colors around it fade and are replaced by gray-white

Philosophy: _No change equals no information._
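“No change equals no information” can be illustrated with a toy adaptation model in which a detector signals only the gap between its input and a slowly adapting baseline. This is a deliberately simplified assumption, not a physiological model; `simulate`, the 0.5-second time constant, the 0.1 threshold, and the jitter pattern are hypothetical values picked so that a steady input fades within the 1 to 3 seconds quoted above.

```python
def simulate(inputs, dt=0.01, tau=0.5):
    """Toy leaky adaptation (hypothetical): a baseline drifts toward the input
    with time constant `tau`, and the response is the gap between them."""
    baseline, responses = 0.0, []
    for x in inputs:
        responses.append(abs(x - baseline))
        baseline += (x - baseline) * dt / tau
    return responses


def mean(values):
    return sum(values) / len(values)


dt, seconds = 0.01, 3.0
n = int(round(seconds / dt))      # 300 time steps
per_second = int(round(1 / dt))   # 100 time steps

# Steady fixation: the stimulus on this patch of retina never changes.
steady = simulate([1.0] * n, dt)
# Hypothetical micro-movements: every 0.2 s the eye shifts, so the patch
# alternates between the bright stimulus (1.0) and a dimmer edge (0.5).
jitter = simulate([1.0 if (i // 20) % 2 == 0 else 0.5 for i in range(n)], dt)

# First moment the steady response drops below an assumed threshold of 0.1.
fade_time = next(i * dt for i, r in enumerate(steady) if r < 0.1)
print(f"steady input fades after {fade_time:.2f} s")
print(f"mean response, final second: steady {mean(steady[-per_second:]):.3f}, "
      f"jittered {mean(jitter[-per_second:]):.3f}")
```

The steady input fades, matching the idea that an unchanging stimulus carries no new information, while the jittered input keeps producing a response; this is one way to picture why the eye's constant micro-movements keep a scene from fading.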

---

Full Circle: UX Design Meets AI Research

Years ago, I worked in user experience and studied cognitive psychology. Today, that same knowledge is resurfacing in AI research.

Humans and AI:

- Sometimes take different paths to the same insight

- But humans can perceive subtleties AI currently cannot — like love in silence, beauty in impermanence, and time itself.

---

Closing Thoughts

Improving AI’s temporal perception could unlock:

- Illusion recognition

- Richer video understanding

- More human-like situational awareness

---

We live in the flow. AI lives in frames.

And maybe, one day, it will join us in the flow.

---