The Most Expensive Battlefield in AI: The Real Data Center Bill

🚀 $500 Billion for Mars or One Stargate Data Center?

NASA estimates a $500 billion budget to put humans on Mars — enough to:

- Buy 1.36 Alibabas ($367B)

- Acquire 3.5 NBA Leagues ($140B)

- Build 100 Apple Parks ($5B each)

- Purchase 140 billion cups of coffee ($3.5 each)

For OpenAI, that’s just enough for one massive Stargate AI data center.

Industry insiders believe OpenAI’s ambitions could be 10× bigger, while rivals like xAI and Meta are also investing heavily. This raises a key question: Where is all this money going?

We’ll break down AI data center capital expenditures, the supply chain behind them, and the reasons companies are betting trillions — even as critics warn of a bubble.

---

1 — Understanding Trillion-Dollar Investments

Where Does the Money Go?

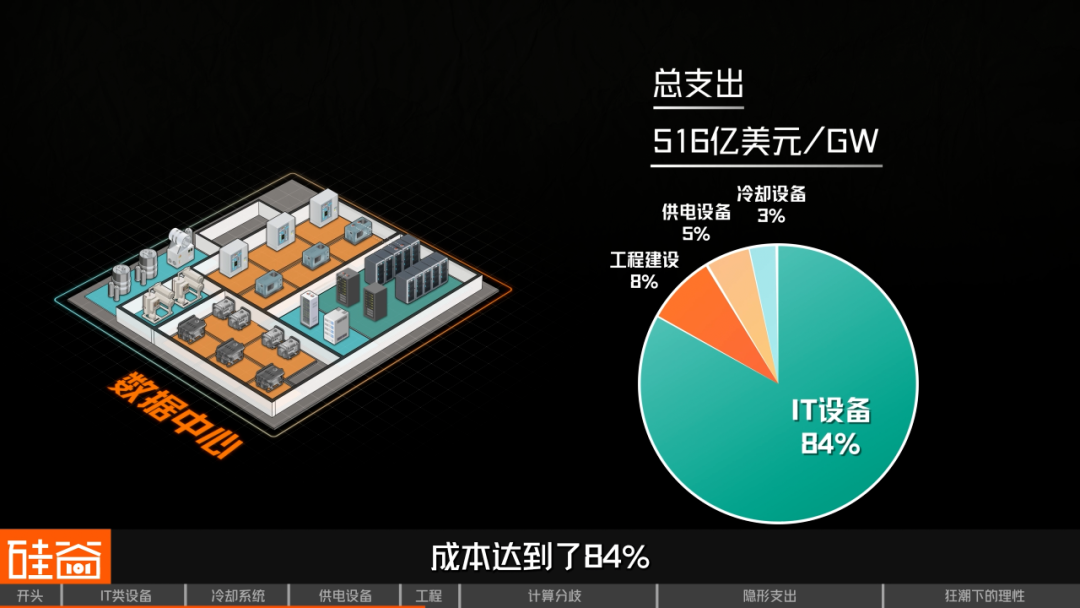

Spending Categories

According to Bank of America’s Oct. 15 analysis, spending per gigawatt (GW) of AI data center capacity falls into four categories:

- IT Equipment

- Power Supply Infrastructure

- Cooling Systems

- Engineering & Construction

---

1.1 IT Equipment — The Giant Share

Core computing hardware includes:

- Servers — CPUs, GPUs, memory, motherboards ($37.5B/GW)

- Networking Equipment — switches, routers ($3.75B/GW)

- Storage — hard drives, flash ($1.9B/GW)

Key Players:

- ODMs: Foxconn Industrial Internet (Industrial Fulian; 46% of the server market), which delivers systems to Oracle, Meta, and Amazon

- OEMs: Dell, Super Micro, and HPE, which serve smaller enterprise customers

- Networking: Arista, Cisco, Huawei, NVIDIA (InfiniBand noted for low latency, no packet loss)

- Storage: Samsung, SK hynix, Micron, Seagate

Total IT Hardware Spend per GW: $43.15B (~84% of total costs)
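As a quick check of the arithmetic, the three line items above can be summed and compared with the overall per-GW total. This is only a sketch: the variable names are illustrative, and the 51.6 figure is the article's own per-GW total from section 1.4.

```python
# Per-GW IT equipment costs from the list above, in billions of USD.
it_costs_b = {"servers": 37.5, "networking": 3.75, "storage": 1.9}
it_total_b = sum(it_costs_b.values())

# Total build cost per GW as quoted in section 1.4 of the article.
TOTAL_PER_GW_B = 51.6

it_share = it_total_b / TOTAL_PER_GW_B
print(f"IT hardware: ${it_total_b:.2f}B/GW ({it_share:.0%} of total)")
```

Servers alone account for most of the IT line, so any change in assumed server pricing moves the whole estimate.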

---

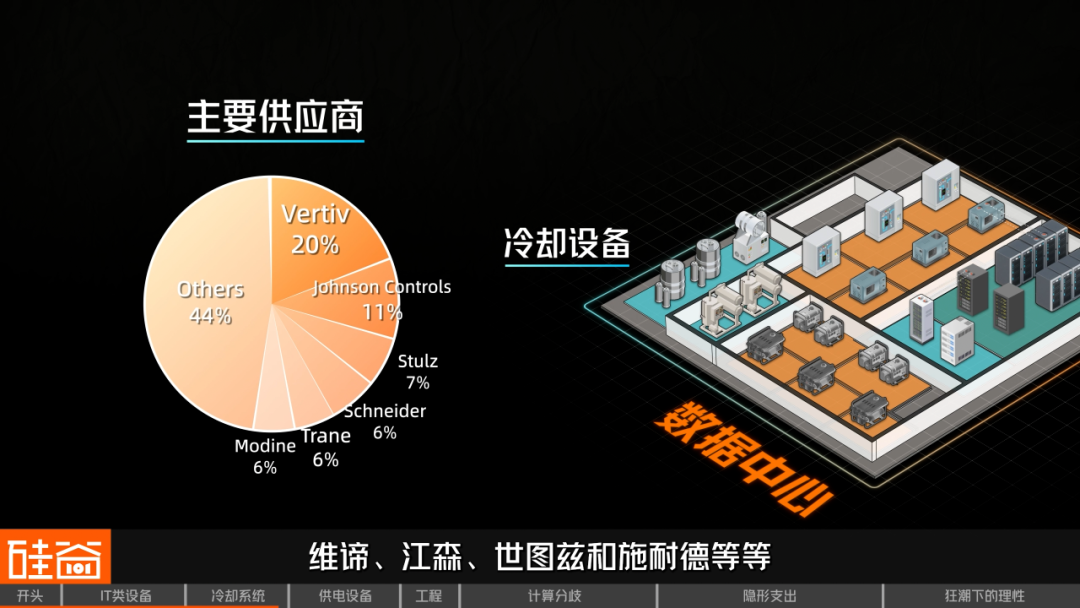

1.2 Cooling Systems — Small Budget, Critical Role

In 2018, a cyberattack on an Atlanta data center compromised its cooling controls, pushing temperatures above 100°F (~37.8°C) and damaging chips.

Though cooling gets just ~3% of budgets, it’s mission-critical — especially for high-density AI GPU clusters.

Liquid Cooling Costs per GW:

- Cooling towers: $90M

- Chillers: $360M

- CDUs: $450M

- CRAHs: $575M

- Total: $1.475B

Key Providers: Vertiv, Johnson Controls, Stulz, Schneider
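The cooling figures are quoted in millions while the rest of the article uses billions, so a small conversion helps confirm both the $1.475B total and the roughly 3% share. The sketch below assumes the article's $51.6B/GW total as the denominator; names are illustrative.

```python
# Liquid-cooling components per GW from the list above, in millions of USD.
cooling_m = {
    "cooling towers": 90,
    "chillers": 360,
    "CDUs": 450,
    "CRAHs": 575,
}

# Convert the sum from millions to billions.
cooling_total_b = sum(cooling_m.values()) / 1000

# Total build cost per GW as quoted in section 1.4 of the article.
TOTAL_PER_GW_B = 51.6
cooling_share = cooling_total_b / TOTAL_PER_GW_B

print(f"Cooling: ${cooling_total_b:.3f}B/GW ({cooling_share:.1%} of total)")
```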

---

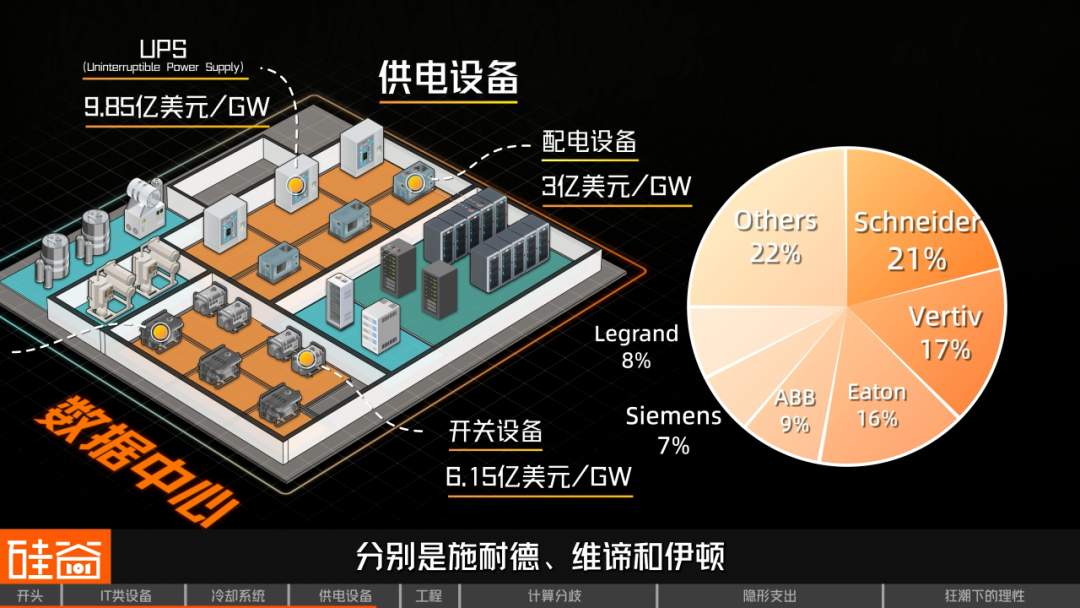

1.3 Power Supply — Reliability is King

Includes:

- Standby Diesel Generators: ~$0.8M/MW (~$800M/GW); redundancy often requires more than 1 GW of generator capacity for a 1 GW load

- Switchgear: $615M/GW

- UPS Systems: $985M/GW

- Distribution Equipment: $300M/GW

Top Vendors: Caterpillar, Cummins, Rolls-Royce, Schneider, Vertiv, Eaton

Total Power Costs per GW: $2.7B (~1/16 of IT spend)
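The generator cost is quoted per MW and the other items per GW, so the units need aligning before they can be added. The sketch below assumes generator capacity of exactly 1 GW, which is what the $2.7B total implies; with extra redundancy the generator line would be higher. Dividing the IT total from section 1.1 by the power total gives a ratio of about 16.

```python
GENERATOR_M_PER_MW = 0.8  # standby diesel generators, $M per MW
MW_PER_GW = 1000

# All values in millions of USD per GW of load.
# Assumption: generator capacity equals the 1 GW load (no extra redundancy).
power_m = {
    "standby generators": GENERATOR_M_PER_MW * MW_PER_GW,
    "switchgear": 615,
    "UPS systems": 985,
    "distribution equipment": 300,
}
power_total_b = sum(power_m.values()) / 1000

IT_TOTAL_B = 43.15  # IT hardware spend per GW from section 1.1
ratio = IT_TOTAL_B / power_total_b

print(f"Power: ${power_total_b:.2f}B/GW, IT spend is {ratio:.1f}x larger")
```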

---

1.4 Engineering & Construction

Building work, equipment installation, and contractor fees add another $4.28B/GW.

Total per 1 GW Data Center: ≈ $51.6B

OpenAI's 10 GW Stargate: ~$516B, roughly in line with its $500B plan.
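The four category totals can be combined into one per-GW breakdown and scaled to 10 GW. This is a minimal sketch using only the figures quoted in sections 1.1 to 1.4; it assumes cost scales linearly with capacity, which the article's 10 GW figure also implies.

```python
# Category totals per GW from sections 1.1 to 1.4, in billions of USD.
per_gw_b = {
    "IT equipment": 43.15,
    "Cooling systems": 1.475,
    "Power supply": 2.7,
    "Engineering & construction": 4.28,
}
total_b = sum(per_gw_b.values())

# Print each category with its cost and share of the total.
for name, cost in per_gw_b.items():
    print(f"{name:<28}{cost:>8.3f}{cost / total_b:>8.1%}")
print(f"{'Total per GW':<28}{total_b:>8.3f}")

# Assumption: cost scales linearly with capacity.
STARGATE_GW = 10
stargate_b = total_b * STARGATE_GW
print(f"{STARGATE_GW} GW build: ~${stargate_b:.0f}B")
```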

---

2 — Why Cost Estimates Differ

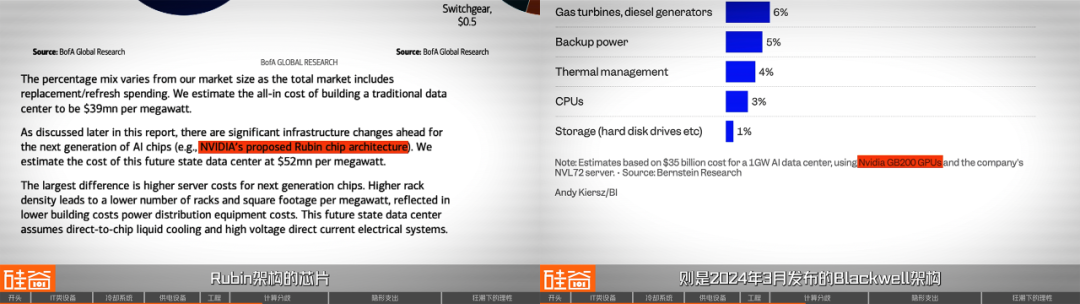

Different institutions give varied per-GW costs:

- Bernstein (Nov. 1): ~$35B/GW, IT spend ~56%

- Barclays (Oct.): ~$50–60B/GW, IT spend 65%–70%

- Morgan Stanley (Aug.): ~$33.5B/GW, IT spend 41%

Main Reasons:

- Different Chip Architectures

  - BoA assumes NVIDIA Rubin (launch: late 2026) → higher prices

  - Bernstein/MS: NVIDIA Blackwell (announced Mar. 2024) → lower GPU costs

  - Difference: up to $20B/GW just for GPUs

- Scope of Cost Calculations

  - BoA focuses on inside the building

  - Bernstein includes entire campus, plus gas turbine generators

Our takeaway: BoA’s numbers may be closest to reality for future hyperscale builds.
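To see how far apart the estimates are in absolute terms, each per-GW total can be multiplied by its quoted IT share. This is a rough sketch: pairing Barclays' low total with its low share (and high with high) is an assumption, and the results cover all IT equipment, not GPUs alone.

```python
# (institution, total low, total high, IT share low, IT share high);
# totals in $B/GW, as quoted in the list above.
estimates = [
    ("Bernstein", 35.0, 35.0, 0.56, 0.56),
    ("Barclays", 50.0, 60.0, 0.65, 0.70),
    ("Morgan Stanley", 33.5, 33.5, 0.41, 0.41),
]

def implied_it_spend(total_low, total_high, share_low, share_high):
    """Return the (low, high) IT spend in $B/GW implied by total and share."""
    return total_low * share_low, total_high * share_high

implied = {name: implied_it_spend(*rest) for name, *rest in estimates}

BOFA_IT_B = 43.15  # Bank of America's IT figure from section 1.1
for name, (low, high) in implied.items():
    print(f"{name}: ${low:.1f}B to ${high:.1f}B per GW "
          f"(BoA is ${BOFA_IT_B - high:.1f}B to ${BOFA_IT_B - low:.1f}B higher)")
```

Under these assumptions the gap between Bank of America and the two lowest estimates exceeds $20B/GW, which is at least consistent with chip pricing being the main driver.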

---

3 — Hidden Costs: Building Power Plants

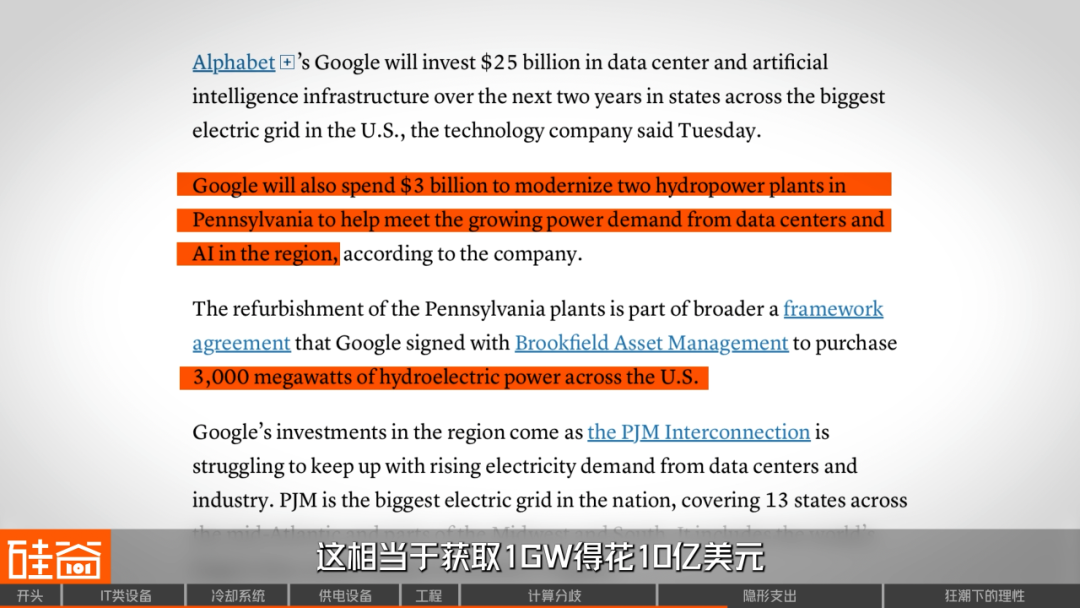

With chronic U.S. electricity shortages, tech giants often build their own plants:

- Google spent $3B refurbishing hydro plants (3 GW capacity)

- Musk’s xAI acquired a power plant for Colossus 2

- Gas turbine order books (e.g., GE Vernova, ticker GEV) are now full for about 3 years

Cost for 10 GW dedicated plant: $12–20B.

Natural gas turbines run continuously and cost far less per kWh than diesel backup generators.
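Expressed per GW, the dedicated plant adds a relatively small amount on top of the data center itself. The sketch below assumes the $12–20B range scales evenly across 10 GW and uses the article's $51.6B/GW data center total for comparison.

```python
PLANT_COST_RANGE_B = (12.0, 20.0)  # cost of a 10 GW dedicated plant, $B
PLANT_GW = 10
DATACENTER_PER_GW_B = 51.6  # per-GW data center total from section 1.4

# Assumption: plant cost is spread evenly across its capacity.
plant_per_gw_b = tuple(cost / PLANT_GW for cost in PLANT_COST_RANGE_B)

# Plant cost as a fraction of the data center cost for the same capacity.
uplift = tuple(cost / DATACENTER_PER_GW_B for cost in plant_per_gw_b)

print(f"Plant: ${plant_per_gw_b[0]:.1f}B to ${plant_per_gw_b[1]:.1f}B per GW")
print(f"Uplift on data center cost: {uplift[0]:.1%} to {uplift[1]:.1%}")
```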

---

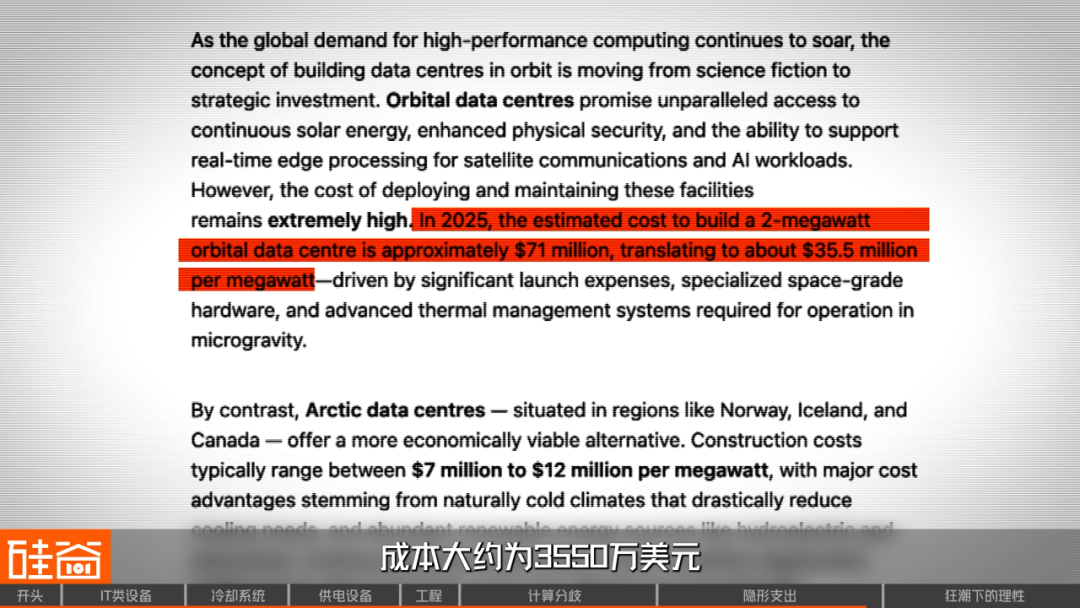

4 — Space-Based Data Centers

Google plans an orbital data center by 2027:

Advantages:

- Up to 8× the solar generation of a comparable ground-based panel

- Continuous power (no night)

- Radiative cooling in vacuum

Cost estimate: ~$35.5M per MW → $35.5B/GW, comparable to Earth-based builds.
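The unit conversion and the "comparable" claim can be checked against the ground-based estimates quoted in sections 1 and 2. This is a sketch only; which ground estimate is the right comparison depends on the scope differences discussed earlier.

```python
SPACE_M_PER_MW = 35.5  # orbital estimate, $M per MW
MW_PER_GW = 1000

# $M/MW -> $M/GW -> $B/GW
space_b_per_gw = SPACE_M_PER_MW * MW_PER_GW / 1000

# Ground-based per-GW totals quoted earlier in the article, in $B.
ground_b_per_gw = {
    "Bank of America": 51.6,
    "Bernstein": 35.0,
    "Morgan Stanley": 33.5,
}

ratios = {name: space_b_per_gw / cost for name, cost in ground_b_per_gw.items()}
for name, ratio in ratios.items():
    print(f"Space vs {name}: {ratio:.2f}x")
```

Under these figures the orbital estimate sits within the range of the ground-based ones: below Bank of America's total and slightly above Bernstein's and Morgan Stanley's.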

---

5 — Overinvestment vs Underinvestment Risk

> "Under-investment is riskier than over-investment." — Ethan Xu, ex-Microsoft

> - Early AGI winners will dominate the market

> - Overbuilt capacity can be repurposed, rented, or sold

Companies will always find uses for idle compute:

- Internal AI tasks (content moderation, service optimization)

- Cost reduction

---

6 — Funding the Trillion-Dollar Boom

Sources:

- Revenue reinvestment

- Debt financing (bond market)

- Private credit (“shadow banking”)

> “AI is part of a global infrastructure boom cycle — funding won’t be a concern as long as AI drives growth.” — Bruce Liu, CEO/CIO Esoterica Capital

---

💬 Final Thoughts

The race is to “reach the future first”. For these companies, the risk of being absent outweighs the risk of overspending, which explains why hyperscalers keep pouring billions into data centers and are even eyeing space.

---

[This article does not constitute investment advice]

Images: sourced online unless noted.
