The Secret to Boosting AI Learning Efficiency by 50×: On‑Policy Distillation

Interpreting Thinking Machines Lab’s Latest Research: On‑Policy Distillation

---

Introduction: Rethinking How Machines Learn

Imagine you’re teaching a student to write an essay.

- Traditional way: Give them ten sample essays and tell them to imitate.

  - → This is imitation learning.

  - Problem: Faced with a new topic, they struggle.

- Alternative: Let them write freely, and guide them during the process with step‑by‑step feedback on logic, tone, or coherence.

This second method mirrors real learning far more closely.

> This is the inspiration behind Thinking Machines Lab’s recent study, On‑Policy Distillation:

> Models learn and optimize themselves along the trajectory of their own actions, with dynamic guidance at every step.

Simple in concept — potentially transformative in practice.

---

1. Who Is Thinking Machines Lab?

- Founded by Mira Murati (former CTO of OpenAI) after leaving the company.

- Joined by John Schulman and Barret Zoph, key figures in the creation of ChatGPT and RLHF breakthroughs.

- Research focus: how to teach models to learn, not just to respond.

Authors: Kevin Lu, John Schulman, Horace He, and collaborators, who bring deep expertise in Reinforcement Learning from Human Feedback (RLHF) and distillation.

Core Question: Is the current method of AI learning fundamentally flawed?

---

2. The Bottleneck: Is AI Just Memorizing?

Training large models typically involves two stages:

- SFT – Supervised Fine‑Tuning

  - Show the model massive volumes of human‑written text.

  - Goal: imitate past answers.

- RLHF – Reinforcement Learning from Human Feedback

  - Encourage human‑preferred responses through exploration.

  - Goal: improve answers via trial and feedback.

Issue: These stages are misaligned.

- SFT: Rote memorization.

- RLHF: Risk‑taking exploration.

- Result: Models oscillate between safe imitation and reckless novelty.

---

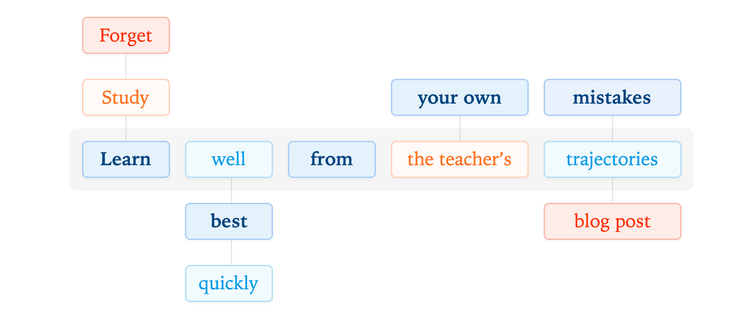

3. The New Method: Learning While Doing

Traditional Distillation

- Teacher model produces a “perfect” answer.

- Student learns to replicate it.
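As a rough illustration of what "replicating" the teacher tends to mean in practice, here is a minimal sketch. It assumes the common setup in which the student is trained to assign high probability to the tokens the teacher wrote. The function name, token strings, and probabilities are hypothetical and are not taken from the study.

```python
import math

def off_policy_loss(teacher_tokens, student_step_probs):
    """Mean negative log-likelihood of the teacher's tokens under the student.

    student_step_probs[i] is the student's (toy) next-token distribution
    at step i, given the prefix the TEACHER wrote.
    """
    nll = [-math.log(probs[tok])
           for tok, probs in zip(teacher_tokens, student_step_probs)]
    return sum(nll) / len(nll)

# Hypothetical teacher answer and made-up student probabilities.
teacher_tokens = ["4", "</s>"]
student_step_probs = [
    {"4": 0.5, "5": 0.5},
    {"</s>": 0.8, "4": 0.2},
]

loss = off_policy_loss(teacher_tokens, student_step_probs)
print(f"imitation loss: {loss:.4f}")
```

Note that the student is only ever scored on prefixes the teacher wrote, so it gets no feedback on the situations its own mistakes would lead it into.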

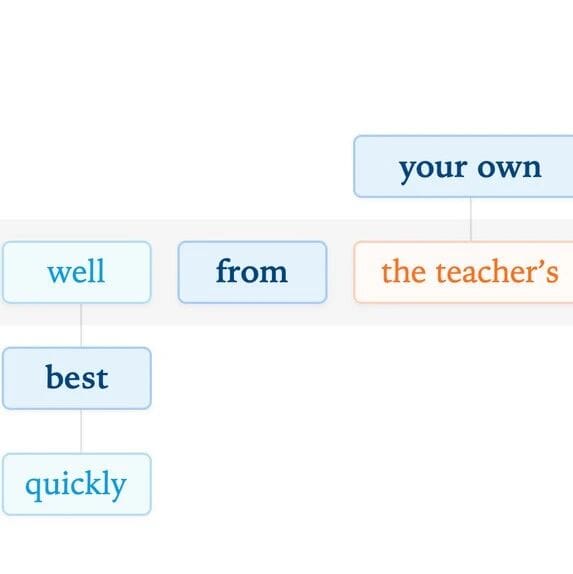

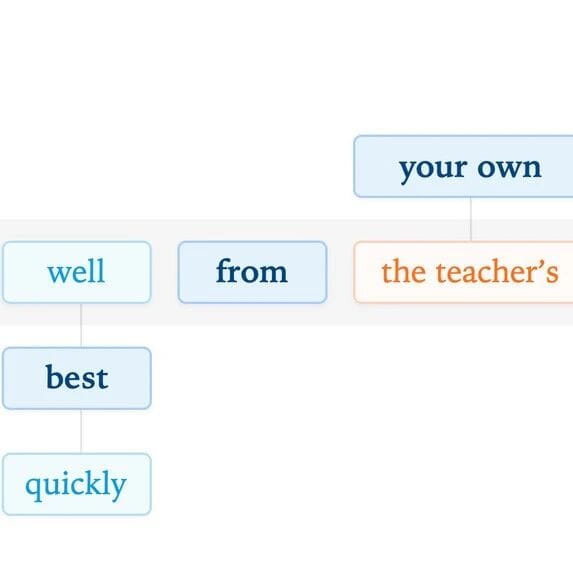

On‑Policy Distillation

- Student generates a response first.

- Teacher scores the student’s response token by token, so every step receives feedback.

- “On‑Policy” = learning along the model’s own generated trajectory, not solely from pre‑written correct answers.

Key concept: Teach the process of reaching an answer, not just the final answer itself.
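The loop described above can be sketched in a few lines. This is a toy under stated assumptions, not the study's implementation: the "models" are hypothetical bigram tables, and the per-token signal is assumed to be the log-probability gap between student and teacher (a single-sample estimate of the reverse KL divergence, one common choice for this kind of distillation). The article itself does not specify the loss.

```python
import math
import random

# Hypothetical toy bigram "models": previous token -> next-token probabilities.
STUDENT = {
    "<s>": {"A": 0.6, "B": 0.4},
    "A": {"A": 0.5, "B": 0.5},
    "B": {"A": 0.5, "B": 0.5},
}
TEACHER = {
    "<s>": {"A": 0.9, "B": 0.1},
    "A": {"A": 0.2, "B": 0.8},
    "B": {"A": 0.8, "B": 0.2},
}

def sample_trajectory(model, length, rng):
    """Sample `length` tokens from `model`, one step at a time."""
    prev, tokens = "<s>", []
    for _ in range(length):
        choices, weights = zip(*model[prev].items())
        prev = rng.choices(choices, weights=weights)[0]
        tokens.append(prev)
    return tokens

def per_token_penalty(tokens, student, teacher):
    """Return log p_student - log p_teacher for each sampled token.

    Assumption: averaged over many student samples, this estimates the
    reverse KL divergence KL(student || teacher) at each step.
    """
    prev, penalties = "<s>", []
    for tok in tokens:
        penalties.append(math.log(student[prev][tok]) - math.log(teacher[prev][tok]))
        prev = tok
    return penalties

rng = random.Random(0)
trajectory = sample_trajectory(STUDENT, length=5, rng=rng)   # the student writes first
penalties = per_token_penalty(trajectory, STUDENT, TEACHER)  # the teacher grades every token

for tok, pen in zip(trajectory, penalties):
    print(f"{tok}: {pen:+.3f}")
```

A positive penalty marks a token the student favors more than the teacher does. In a real training run these values would drive a gradient update on the student; that step is omitted here.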

---

4. Core Innovation: Moving From Rewards to Scoring

RLHF:

- Entire answer generated → then graded once.

- Feedback after completion = slow learning.

On‑Policy Distillation:

- Feedback per token/word — ultra‑granular supervision.

- Like a writing coach commenting live:

  - “Good phrasing 👍”

  - “Logic needs work 👎”

Impact: Models learn faster and refine thinking continuously.
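To make the difference in granularity concrete, here is a small sketch comparing the two feedback shapes on one response. Everything in it is hypothetical: the token sequence, the scores, and the helper names are invented for illustration and do not come from the study.

```python
def sparse_feedback(num_tokens, final_reward):
    """Outcome-style feedback: one score, delivered only at the last token."""
    return [0.0] * (num_tokens - 1) + [final_reward]

def dense_feedback(token_scores):
    """Per-token feedback: every token carries its own score."""
    return list(token_scores)

# Hypothetical solution attempt with an arithmetic slip at index 4.
tokens = ["3", "*", "4", "=", "13", ",", "so", "x", "=", "13"]

# Made-up per-token scores: only the slip is penalized, because the
# later tokens follow consistently from the (wrong) prefix.
teacher_scores = [0.0, 0.0, 0.0, 0.0, -2.0, 0.0, 0.0, 0.0, 0.0, 0.0]

sparse = sparse_feedback(len(tokens), final_reward=-1.0)
dense = dense_feedback(teacher_scores)

# The dense signal points at the token where things went wrong.
worst = min(range(len(dense)), key=lambda i: dense[i])
print("sparse:", sparse)
print("dense: ", dense)
print("most penalized token:", worst, tokens[worst])
```

With the sparse signal, the learner knows only that the answer was wrong and must work out which of the ten tokens caused it. The dense signal identifies the faulty step directly.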

---


5. Results: Faster, More Stable, Cheaper

On the AIME’24 math benchmark, models trained via On‑Policy Distillation reportedly:

- Surpassed RLHF in performance

- Required less computation

- Produced more stable and reproducible outputs

Summary: Moving from “punishment and reward” to “demonstration and correction” transforms training efficiency.

---

6. Why It Matters: A Learning Theory Shift

Historically:

- Feed models massive datasets → expect statistical imitation of humans.

Thinking Machines’ viewpoint:

- True intelligence = reflecting on one’s own actions.

With On‑Policy Distillation:

- Models refine themselves as they work.

- The dream of a self‑improving AI agent becomes more tangible.

Future vision: Your AI assistant adapts over time by learning from daily tasks and experiences — on‑policy learning quietly operating in the background.

---

7. The Takeaway

In AI development:

> Changing our training philosophy may be more important than scaling hardware.

Thinking Machines Lab’s paper urges a shift:

- Not bigger models

- Better learning mechanics

When AI asks itself “Why did I say that?”, we may be witnessing the second awakening of intelligence:

- First: Machines learned to speak

- Second: Machines learned to self‑reflect

---
