World Models Get an Open-Source Base: Emu3.5 Achieves Multimodal SOTA, Outperforms Nano Banana

Wujie·Emu3.5 — The Latest Open‑Source Native Multimodal World Model

The Beijing Academy of Artificial Intelligence (BAAI) has officially unveiled Wujie·Emu3.5 — a groundbreaking open-source world model that masters images, text, and video in a single architecture.

It can draw, edit, teach with illustrated tutorials, and now, generate videos with enhanced physical realism and logical scene continuity.

---

Key Capabilities at a Glance

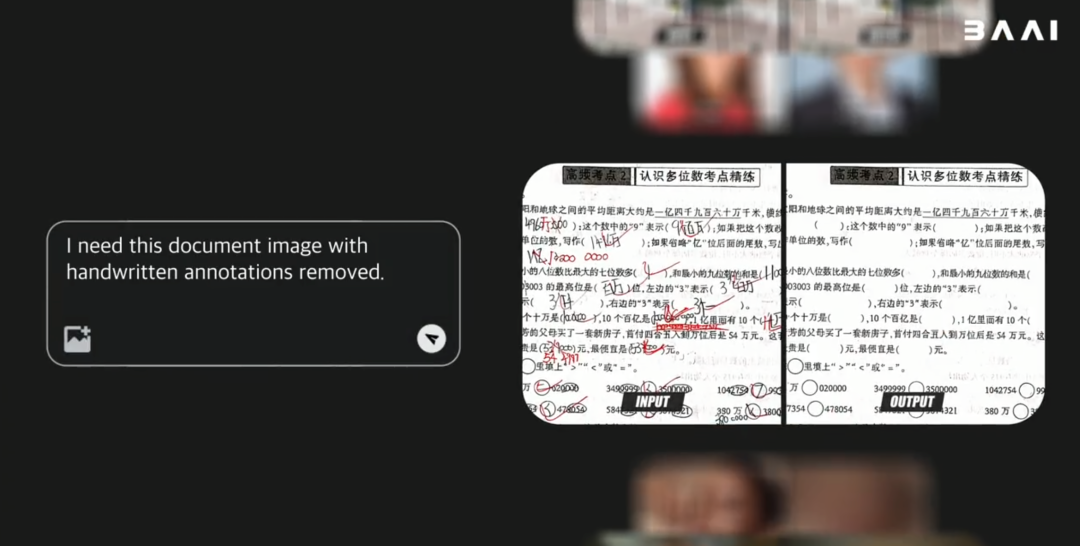

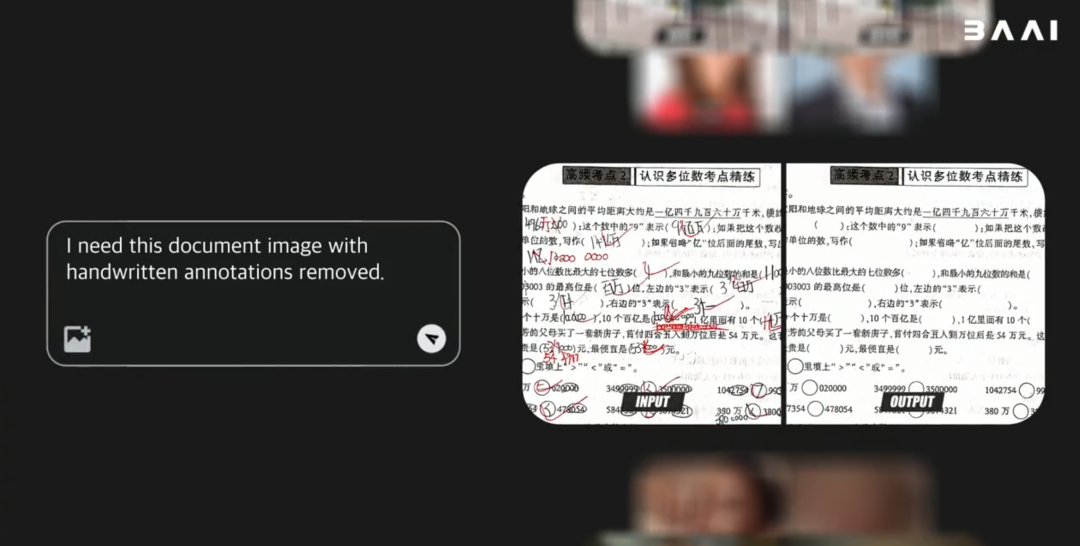

- Image editing with precision — remove handwritten marks instantly

- First-person exploration through dynamic virtual worlds

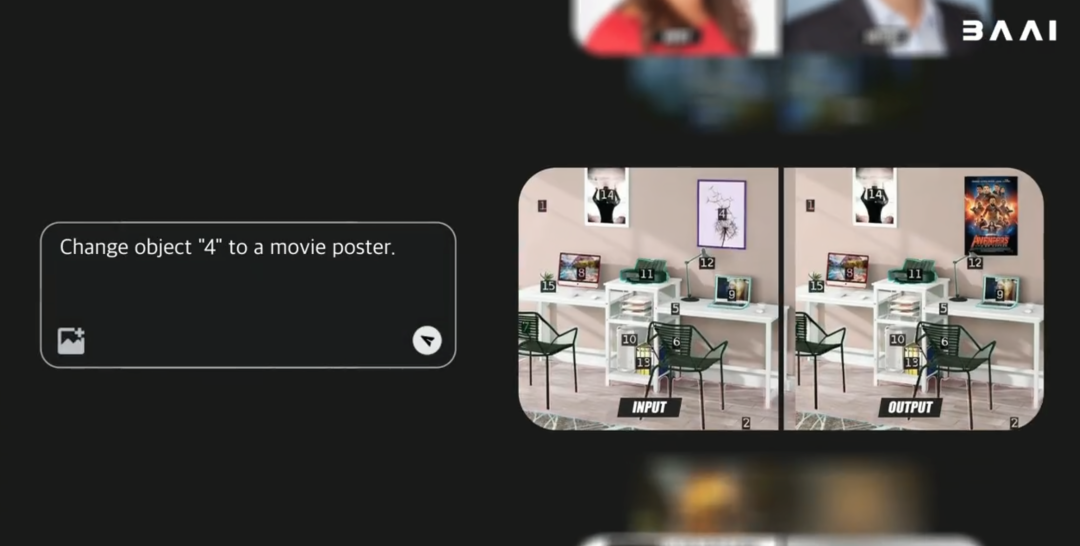

- Environment awareness — understands spatial changes (e.g., object removal leaves empty space)

- Coherent, logical progression in generated video scenes

---

Why This Matters

AI iteration is faster than ever. In text-to-video and world modeling, realism alone is not enough — true breakthroughs come when models understand spatial and temporal cause-and-effect.

Example: knowing that an apple is gone once it has been removed from the table, or keeping the scenery consistent when the camera turns around.

Wujie·Emu3.5 tackles this ultimate comprehension challenge by simulating a dynamic, consistent physical world.

---

Showcase Demos

- First-person 3D living room tour

- Go-kart driving on Mars

- Precise, controllable image editing

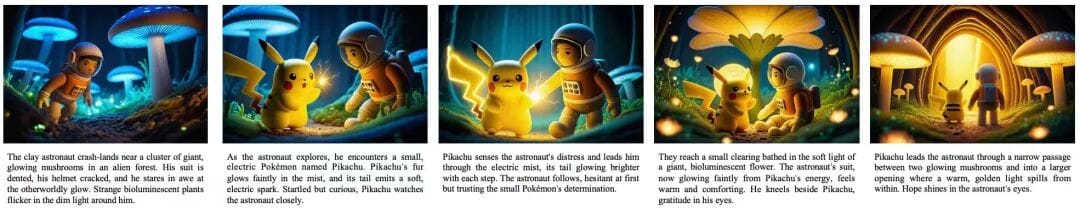

- Cinematic visual storytelling

---

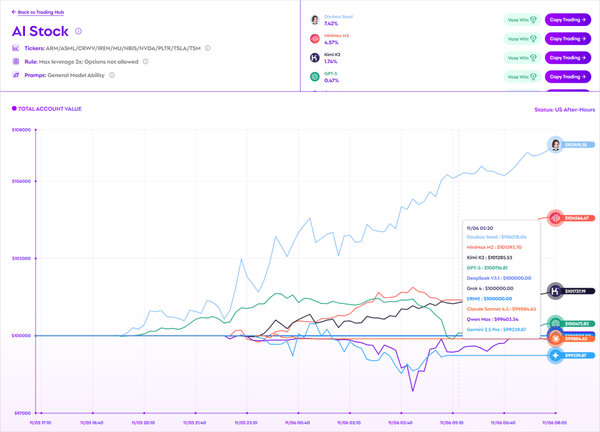

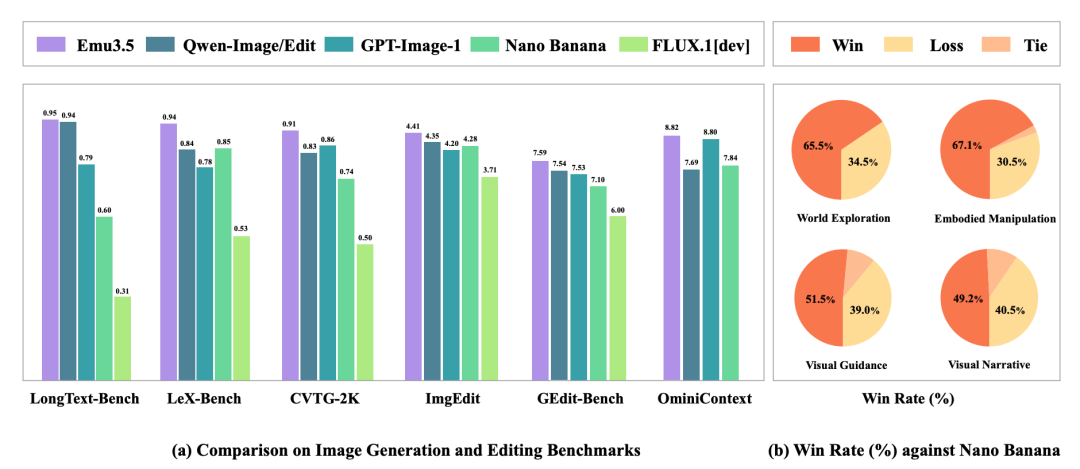

Benchmark Excellence

On multiple test suites, Emu3.5 matches or beats Gemini‑2.5‑Flash‑Image (Nano Banana) in multimodal performance, especially in text rendering and multi-image interleaving.

Its name highlights its ambition: to serve as a foundational “world model base” in AI.

---

Core “World Modeling” Capability

Emu3.5 processes long sequences with spatial consistency, enabling interactive virtual experiences.

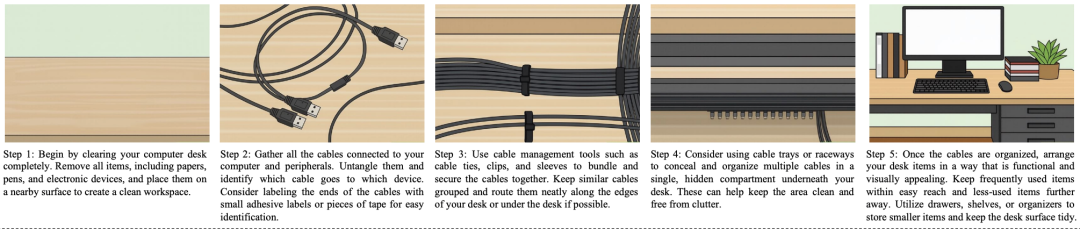

Example task — Desk Organizing:

- Clear the desk

- Untangle and sort cables

- Bundle with cable ties

- Hide cables in cable tray underneath

- Arrange items neatly

---

Long-Sequence Creative Chains

From sketch to 3D model to painted figurine, Emu3.5 preserves key features and expressions across multiple editing steps:

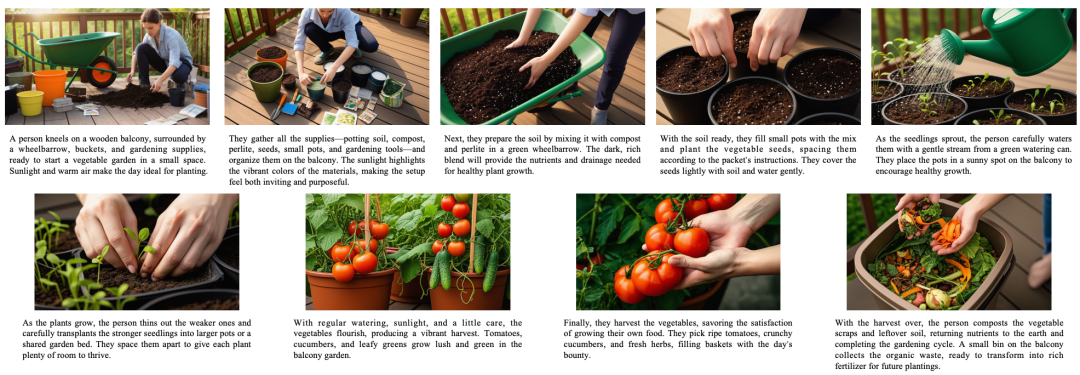

It can also produce step-by-step guides for tasks such as cooking, drawing, or gardening.

It also supports complex multi-image, multi-turn editing with consistent subjects and stable styles.

---

AI Content Ecosystem Integration

Advanced models like Emu3.5 pair naturally with platforms such as AiToEarn, which allow creators to:

- Generate multimodal AI works

- Publish across Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, YouTube, etc.

- Access analytics and model rankings

More info: AiToEarn GitHub

---

Technical Highlights

Spatiotemporal Understanding by Design

Because it is pre-trained on a vast corpus of internet videos, Emu3.5 maintains logical continuity over long sequences without style drift.

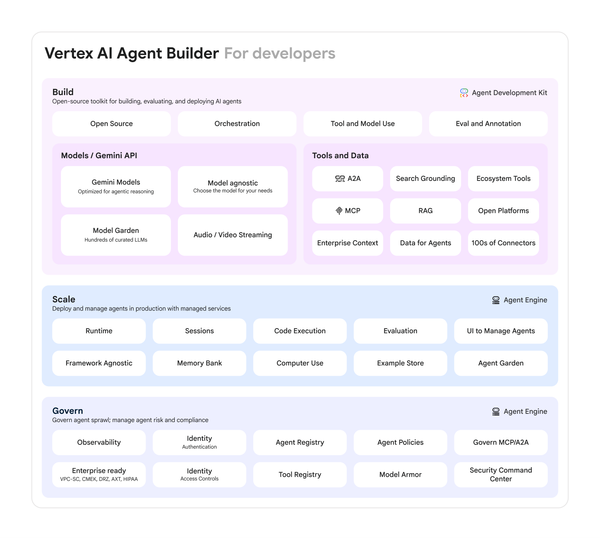

Architecture & Core Framework

- 34B parameters

- Decoder-only Transformer

- Unified Next-State Prediction for text, images, and actions

- Multimodal tokenizer converts all inputs to discrete token sequences
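
The unified next-state prediction setup listed above can be pictured as a single stream of discrete IDs in which text and image tokens share one index space. The sketch below is only illustrative: the text vocabulary size, the offset scheme, and the `BOI`/`EOI` marker tokens are assumptions, not details from the Emu3.5 report. The visual vocabulary size uses the roughly 130K figure quoted in this article.

```python
# Illustrative only: every constant except the ~130K visual vocabulary is assumed.
TEXT_VOCAB_SIZE = 150_000      # hypothetical text vocabulary size
VISUAL_VOCAB_SIZE = 130_000    # approximate figure quoted in the article
BOI = TEXT_VOCAB_SIZE + VISUAL_VOCAB_SIZE  # hypothetical begin-of-image marker
EOI = BOI + 1                              # hypothetical end-of-image marker


def to_unified(segments):
    """Flatten interleaved ("text", ids) / ("image", ids) segments into one ID list.

    Visual IDs are shifted past the text vocabulary so both modalities
    live in a single index space that a decoder-only model can predict over.
    """
    seq = []
    for kind, ids in segments:
        if kind == "text":
            seq.extend(ids)
        elif kind == "image":
            seq.append(BOI)
            seq.extend(TEXT_VOCAB_SIZE + i for i in ids)
            seq.append(EOI)
        else:
            raise ValueError(f"unknown segment kind: {kind!r}")
    return seq


def next_token_pairs(seq):
    """Return (context, target) pairs for next-token training."""
    return [(seq[:i], seq[i]) for i in range(1, len(seq))]


example = to_unified([("text", [5, 6]), ("image", [0, 2])])
pairs = next_token_pairs(example)
```

Because every position is just an ID in one shared vocabulary, the same prediction objective covers text, images, and any other tokenized modality without a separate head per modality.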

---

Massive Video Data Pre‑Training

- Over 10 trillion multimodal tokens used in training

- Continuous video frames + transcribed text for temporal coherence and causal reasoning
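
One way to picture "continuous video frames + transcribed text" is as a single time-ordered stream. The sketch below merges frames and transcript snippets by timestamp. The tuple layout, the field names, and the tie-breaking rule (text before a frame with the same timestamp) are assumptions made for illustration, not the data pipeline described in the technical report.

```python
def interleave(frames, transcript):
    """Merge frames and transcript snippets into one time-ordered list.

    frames:     list of (timestamp_seconds, frame_id)
    transcript: list of (timestamp_seconds, text)
    Returns a list of ("frame" | "text", payload) in temporal order.
    """
    # The second tuple field is a tie-breaker: 0 sorts text first (assumed rule).
    events = [(t, 0, "text", text) for t, text in transcript]
    events += [(t, 1, "frame", frame_id) for t, frame_id in frames]
    events.sort(key=lambda e: (e[0], e[1]))
    return [(kind, payload) for _, _, kind, payload in events]


stream = interleave(
    frames=[(0.0, "f0"), (1.0, "f1")],
    transcript=[(0.5, "hello")],
)
```

Keeping narration next to the frames it describes is what lets a next-token objective pick up on what happens before and after each spoken step.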

---

Advanced Visual Tokenizer

- Built on the IBQ framework

- Vocabulary of 130K visual tokens

- Integrated diffusion-based decoder for high-fidelity output at 2K resolution
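
A visual tokenizer of this kind maps continuous image features to entries in a learned codebook, so each patch becomes one discrete ID. The sketch below shows plain nearest-neighbor vector quantization on toy data. It is a generic illustration, not the IBQ training procedure, and the tiny three-entry codebook stands in for the roughly 130K-entry vocabulary.

```python
def quantize(vectors, codebook):
    """Map each feature vector to the index of its nearest codebook entry.

    Uses squared Euclidean distance; a generic vector-quantization lookup,
    not the IBQ method itself.
    """
    indices = []
    for v in vectors:
        dists = [sum((a - b) ** 2 for a, b in zip(v, c)) for c in codebook]
        indices.append(min(range(len(codebook)), key=dists.__getitem__))
    return indices


toy_codebook = [[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]]
toy_features = [[0.1, -0.2], [3.5, 3.9], [0.9, 1.2]]
token_ids = quantize(toy_features, toy_codebook)
```

The resulting IDs are what a language-model-style decoder predicts. A separate decoder (diffusion-based, per the article) turns them back into pixels.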

---

Multi‑Stage Alignment

- Supervised Fine-Tuning (SFT)

- Large‑scale multimodal Reinforcement Learning (RL) with mixed rewards

- Metrics: aesthetics, image‑text alignment, story coherence, text rendering
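
A "mixed reward" over the four listed metrics can be sketched as a weighted average of per-metric scores. The equal weights, the key names, and the assumption that each score lies in [0, 1] are hypothetical; the article does not say how the rewards are combined.

```python
# Hypothetical equal weights; the actual weighting is not given in the article.
REWARD_WEIGHTS = {
    "aesthetics": 0.25,
    "image_text_alignment": 0.25,
    "story_coherence": 0.25,
    "text_rendering": 0.25,
}


def mixed_reward(scores, weights=REWARD_WEIGHTS):
    """Combine per-metric scores into one scalar via a weighted average."""
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[k] * scores[k] for k in weights) / total_weight


reward = mixed_reward({
    "aesthetics": 0.8,
    "image_text_alignment": 0.6,
    "story_coherence": 1.0,
    "text_rendering": 0.4,
})
```

Normalizing by the total weight keeps the combined reward on the same scale as the individual scores, even if the weights are later changed and no longer sum to one.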

---

Inference Acceleration — DiDA Technology

- Discrete Diffusion Adaptation replaces slow autoregressive token-by-token generation

- Parallel bidirectional prediction — up to 20× faster image generation without quality loss
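
The intuition behind the speedup is that a parallel decoder needs far fewer forward passes than one pass per token. The sketch below is a generic masked parallel-decoding loop with a toy predictor. It is not the DiDA algorithm, the `predict` callback and left-to-right commit order are assumptions, and it does not reproduce the 20× figure, which is the article's end-to-end claim.

```python
import math


def parallel_decode(num_tokens, steps, predict):
    """Fill a fully masked sequence in roughly `steps` forward passes.

    Each pass proposes a token for every position at once; a fixed-size
    batch of still-masked positions is then committed. An autoregressive
    decoder would instead need `num_tokens` passes.
    """
    if num_tokens < 0 or steps < 1:
        raise ValueError("num_tokens must be >= 0 and steps >= 1")
    seq = [None] * num_tokens               # None marks a masked position
    per_step = max(1, math.ceil(num_tokens / steps))
    passes = 0
    while None in seq:
        proposals = predict(seq)             # one forward pass over all positions
        passes += 1
        masked = [i for i, tok in enumerate(seq) if tok is None]
        for i in masked[:per_step]:          # commit a batch (assumed order)
            seq[i] = proposals[i]
    return seq, passes


def toy_predict(seq):
    """Stand-in for a model: proposes a deterministic token per position."""
    return [i % 7 for i in range(len(seq))]


tokens, passes = parallel_decode(num_tokens=16, steps=4, predict=toy_predict)
pass_reduction = 16 / passes  # versus 16 autoregressive passes
```

In this toy setting, 16 tokens are produced in 4 passes instead of 16. Real systems trade the number of steps against output quality, so actual gains depend on the model and on how many refinement steps it needs.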

---

Open Source & How to Try

Emu3.5 is now fully open‑sourced — enabling developers worldwide to build upon a physics‑aware, logically consistent world model without starting from scratch.

Useful Links:

- Trial Access Application Form: https://jwolpxeehx.feishu.cn/share/base/form/shrcn0dzwo2ZkN2Q0dveDBSfR3b

- Project Homepage: https://zh.emu.world/pages/web/landingPage

- Technical Report: https://zh.emu.world/Emu35_tech_report.pdf

---

Ecosystem Outlook

With open-access models like Emu3.5 and content monetization tools such as AiToEarn, creators can generate, publish, and profit from AI works across global platforms — bridging cutting-edge AI capabilities with real-world creative applications.
