The World’s First Embodied AI Open Platform Is Here — Giving Large Models a “Body” for Natural, Human-Like Interaction

The Next Leap in Embodied Intelligence

The potential of embodied intelligence extends far beyond the robots we see today.

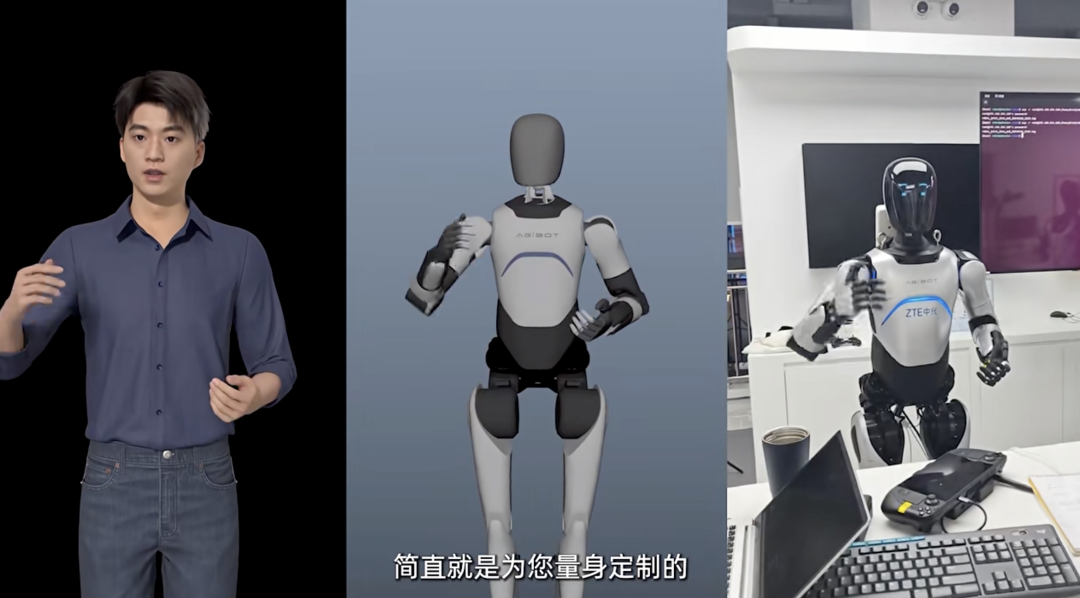

While many are still focused on fitting large models into physical robots, digital humans are now being linked directly with embodied AI capabilities.

---

Mofa Nebula: First Global Embodied Intelligence Platform

Today, Mofa Technology announced Mofa Nebula, promoted as the world’s first 3D digital human open platform for embodied intelligence and aimed at developers worldwide.

Key Highlights

- Brings large language models to life — lets them “grow a body.”

- Enables physical robots to:

  - Move naturally

  - Emote

  - Express themselves like humans

- Architecture delivers:

  - End-to-end latency under 1.5 seconds

  - Support for tens of millions of concurrent connections

  - Operation on compute resources costing only a few hundred yuan

- Dialogue feels natural — as if chatting face-to-face.

---

How It Works

Mofa Nebula takes text as input and generates, in real time, a digital human’s:

- Voice

- Facial expressions

- Gaze

- Gestures

- Body movements

The result: seamless multimodal interaction on any screen, app, or device.
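
To make the text-in, multimodal-out flow concrete, here is a minimal Python sketch of what one time slice of such a stream might look like. The `AvatarFrame` schema, its field names, and the `stub_generate` placeholder are assumptions made for illustration, not the platform's actual data format or API. The stub only emits neutral frames on a fixed clock where a real model would fill in speech, expression, gaze, and motion.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, Tuple


@dataclass
class AvatarFrame:
    """One time slice of synchronized output (hypothetical schema)."""
    t: float                                  # seconds since the utterance started
    audio: bytes = b""                        # speech chunk covering this slice
    expression: Dict[str, float] = field(default_factory=dict)  # facial weights in 0..1
    gaze: Tuple[float, float] = (0.0, 0.0)    # (yaw, pitch) in degrees
    # Gesture and body pose as per-joint rotations (x, y, z) in degrees.
    joints: Dict[str, Tuple[float, float, float]] = field(default_factory=dict)


def stub_generate(text: str, fps: int = 30,
                  chars_per_second: float = 15.0) -> Iterator[AvatarFrame]:
    """Placeholder for the real model: yields neutral frames at a fixed rate.

    The speaking rate is an assumed constant used only to pick a duration.
    """
    duration = max(len(text) / chars_per_second, 1.0 / fps)
    n_frames = max(1, round(duration * fps))
    for i in range(n_frames):
        yield AvatarFrame(t=i / fps)


frames = list(stub_generate("Hello, welcome to the exhibition."))
print(f"{len(frames)} frames, last timestamp {frames[-1].t:.2f}s")
```

Keeping every modality in the same timestamped frame is one simple way to keep voice, face, gaze, and body in sync on the rendering side; a real system may well stream them separately and align them by timestamp instead.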

---

Three Main Application Directions

- Give large models and AI agents an expressive body

  - Voice, facial expressions, gestures

  - Move beyond text-only interactions

- Upgrade devices into embodied intelligence interfaces

  - Phones, tablets, TVs, in-car screens

  - Every screen can “speak and move”

- Enable humanoid robots to communicate naturally

  - Generate joint-level motion in virtual space

  - Map actions to robots via simulation and reinforcement learning

> This allows robots to not only move and operate but also speak, make eye contact, and gesture in scenarios like presentations, guided tours, and Q&A.
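
As a rough illustration of why joint-level motion cannot simply be copied onto hardware, the sketch below clamps a virtual pose to a robot's joint ranges. This is a deliberately naive stand-in, not the simulation and reinforcement learning pipeline the article describes; the joint names and limits in `ROBOT_LIMITS` are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical joint ranges in radians; a real robot's spec sheet would supply these.
ROBOT_LIMITS: Dict[str, Tuple[float, float]] = {
    "shoulder_pitch": (-1.5, 1.5),
    "elbow": (0.0, 2.4),
    "neck_yaw": (-0.8, 0.8),
}


def retarget_pose(virtual_pose: Dict[str, float],
                  limits: Dict[str, Tuple[float, float]] = ROBOT_LIMITS) -> Dict[str, float]:
    """Map a virtual character's joint angles onto the joints the robot has.

    Joints the robot lacks are dropped, joints missing from the virtual pose
    default to 0.0, and every angle is clamped into the robot's allowed range.
    """
    robot_pose = {}
    for joint, (low, high) in limits.items():
        angle = virtual_pose.get(joint, 0.0)
        robot_pose[joint] = min(max(angle, low), high)
    return robot_pose


pose = retarget_pose({"elbow": 3.0, "neck_yaw": -0.2, "wrist_roll": 1.0})
print(pose)
```

Clamping alone ignores balance, torque, and timing, which is presumably where simulation and learned policies come in.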

---

Real-World Use Cases

- Hospitality: 24/7 digital receptionists

- Government service halls: friendly public information agents

- Exhibitions: human-like guides and explainers

- Training and interviews: natural, warm interactions

- Mobile and web apps: SDK/API integration for AI companions

---

Breaking the "Impossible Triangle"

Challenges for scaled digital human deployment include:

- User experience quality (visual realism, lip-sync, gestures)

- Response speed

- Cost control

- Scalability

- Multi-device adaptation

The "Impossible Triangle"

Delivering high visual quality, low latency, and low cost at the same time has traditionally been considered infeasible:

> High quality + low latency → massive compute costs

> High concurrency + low cost → low quality

> High quality + high concurrency → poor real-time performance

Mofa Nebula claims to be the first platform to break this trade-off.

---

Industry Connection: AiToEarn

Open-source tools like AiToEarn help creators generate, distribute, and monetize AI content across multiple platforms — including Douyin, Kwai, WeChat, Bilibili, YouTube, and more.

This integrated approach makes it easier for embodied digital humans to reach global audiences while generating value.

---

Mofa Nebula: Cloud–Edge Innovation

Mofa Technology built Mofa Nebula on its own text-to-multimodal 3D large model.

Innovations

- Multi-modal unified driving: synchronizes voice, facial expressions, and actions

- Cloud–edge split architecture:

  - Cloud generates voice and motion parameters

  - Device-side AI renders visuals directly, with no GPU required

  - Result: lower bandwidth, latency, and compute needs
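
A back-of-the-envelope calculation suggests why sending motion parameters instead of rendered video can cut bandwidth. Every number below is an illustrative assumption (parameter counts, frame rate, and the audio and video bitrates), not a figure published for the platform.

```python
def parameter_stream_kbps(n_face_weights: int = 52, n_joints: int = 60,
                          fps: int = 30, bytes_per_value: int = 4) -> float:
    """Bitrate of an uncompressed per-frame parameter stream, in kbit/s.

    Assumes one float per facial weight and one quaternion (4 floats) per joint.
    """
    values_per_frame = n_face_weights + n_joints * 4
    bits_per_second = values_per_frame * bytes_per_value * fps * 8
    return bits_per_second / 1000


ASSUMED_AUDIO_KBPS = 32.0     # assumed compressed speech stream
ASSUMED_VIDEO_KBPS = 2500.0   # assumed cloud-rendered video stream

# Cloud-edge split: parameters plus audio travel over the network,
# and pixels are produced on the device.
split_kbps = parameter_stream_kbps() + ASSUMED_AUDIO_KBPS
ratio = ASSUMED_VIDEO_KBPS / split_kbps

print(f"parameters + audio: {split_kbps:.2f} kbit/s")
print(f"assumed video stream is {ratio:.1f}x larger")
```

Under these assumptions the parameter stream is several times smaller than the video stream even without compression. The real saving depends on the actual parameter set and codecs used.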

Hardware Compatibility

Runs even on low-cost chips such as the Rockchip RK3566 and RK3588, as well as domestic “Xinchuang” chips.

This moves embodied AI from lab prototypes to affordable real-world deployment.

---

Extensive 3D Animation Dataset

Since 2018, Mofa has produced thousands of hours of high-quality 3D animation assets for gaming, film, and animation. This is a valuable resource in a field where content can cost thousands of yuan per second to produce.

---

Transition to Open Platform

Mofa has evolved from delivering projects, to shipping products, to operating a platform.

Now, developers can quickly build embodied AI agents with human-like expressive power.

Professor Chai Jinxiang outlines a three-layer capability set:

- Perception

- Understanding

- Action

SDK/API integration enables:

- Real-time driving

- Speech synthesis

- Video generation

---

Difference from Traditional Digital Humans

Traditional platforms rely on:

- 2D composites

- Pre-recorded motions

- Limited variety

- Slow response

Mofa Nebula:

- Generates 3D multimodal outputs in real time

- Synchronizes eye contact, rhythm, gestures

- Provides presence rather than only video

---

Embodied Intelligent 3D Digital Humans

Fusion of:

- Digital humans (expressive persona)

- Large models (the reasoning and action “brain”)

Focuses on Human–Computer Interaction (HCI):

- Gives interfaces “a body” for natural interaction

Forms of Embodiment

- Text AI: non-embodied

- Voice assistants: semi-embodied

- Digital humans: virtual embodiment

- Robots: real embodiment

Mofa Nebula bridges virtual and real embodiment — closing the gap between brains without bodies and bodies without souls.

---

Emotional & Social Importance

As Prof. Chai Jinxiang notes:

> The body is for perception, activities, labor — but also for entertainment, companionship, and communication.

Embodiment restores emotion and presence to interaction — essential for trust, scalability, and seamless integration into society.

Experience link: https://xingyun3d.com

---

Final Thought

The integration of embodied intelligence with multi-platform publishing tools like AiToEarn could enable the next generation of immersive, interactive AI experiences — combining real-time responsiveness, emotional expression, and global content reach.