This Week’s AI Product Picks | From Kling to Popi and OiiOii – The AI Film Showdown

The Most Crowded Track: Where the Next Platform-Level AI Product Will Emerge First

Overview

This week’s spotlight is on a category many creatives once saw as their archenemy: AI video generation tools.

In 2024, the conversation around AI video generation shifted from "Can it be generated?" to "Can it be delivered at scale?" The main battleground is no longer eye-catching demos but engineering reliability and workflow integration.

---

From Demos to Production Pipelines

To truly compete, AI video generation tools must:

- Ensure multi-shot consistency

- Provide precise camera control syntax

- Enable reusable characters across projects

- Integrate audio & video pipelines into a single output

- Move beyond one-off cinematic reels to sustained production capabilities
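
One way to picture the "reusable characters" and "multi-shot consistency" requirements is a shot list in which each character is defined once and referenced by ID from every shot. The Python below is a hypothetical schema (the `Character`, `Shot`, and `build_shot_prompts` names are illustrative, not any product's API); it only stores the reference image as a placeholder path rather than using it for conditioning.

```python
from dataclasses import dataclass, field

# Hypothetical schema: a character is defined once and referenced by ID
# from every shot, so its identity description is reused verbatim.
@dataclass(frozen=True)
class Character:
    char_id: str
    description: str      # identity prompt repeated in every shot
    reference_image: str  # placeholder path to a reference image

@dataclass
class Shot:
    shot_id: int
    camera: str           # camera instruction, e.g. "static wide"
    action: str
    character_ids: list[str] = field(default_factory=list)

def build_shot_prompts(shots: list[Shot], cast: dict[str, Character]) -> list[str]:
    """Expand each shot into a prompt that repeats the same character descriptions."""
    prompts = []
    for shot in shots:
        people = "; ".join(cast[cid].description for cid in shot.character_ids)
        prompts.append(f"[shot {shot.shot_id}] camera: {shot.camera}. {people}. {shot.action}")
    return prompts

cast = {"mia": Character("mia", "Mia, a woman in a red raincoat with short black hair", "refs/mia.png")}
shots = [
    Shot(1, "static wide", "Mia waits at a bus stop", ["mia"]),
    Shot(2, "slow dolly-in", "Mia looks up at the rain", ["mia"]),
]
prompts = build_shot_prompts(shots, cast)
for p in prompts:
    print(p)
```

Keeping the identity text in one place means editing a character once updates every shot that references it.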

Industry leaders have already set benchmarks:

- OpenAI Sora: Makes reusable characters and shot stitching standard.

- Google Veo: Offers tiered services for speed vs. quality to match computing budgets.

> The demo is just the start; production capacity is the real story.

---

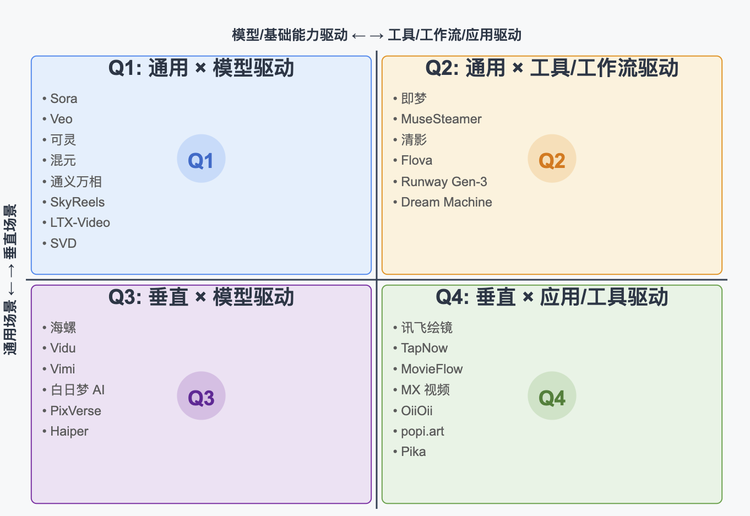

Four-Quadrant Classification

We map AI video tools by:

- Horizontal: Model-driven vs. Tool/Workflow-driven

- Vertical: General-purpose vs. Vertical-specific

Products from tech giants and startups compete in different quadrants.
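
The two axes can be encoded as a small lookup table. This Python sketch is illustrative only: the enum names are our own, and the product placements simply mirror a subset of the quadrant sections in this article.

```python
from enum import Enum

class Driver(Enum):
    MODEL = "model-driven"
    WORKFLOW = "tool/workflow-driven"

class Scope(Enum):
    GENERAL = "general-purpose"
    VERTICAL = "vertical-specific"

# Quadrant labels as used in this article.
QUADRANTS = {
    (Scope.GENERAL, Driver.MODEL): "Q1",
    (Scope.GENERAL, Driver.WORKFLOW): "Q2",
    (Scope.VERTICAL, Driver.MODEL): "Q3",
    (Scope.VERTICAL, Driver.WORKFLOW): "Q4",
}

# Placements follow the article's sections (a subset of products).
PRODUCTS = {
    "Kling": (Scope.GENERAL, Driver.MODEL),
    "HunyuanVideo": (Scope.GENERAL, Driver.MODEL),
    "LTX Video": (Scope.GENERAL, Driver.WORKFLOW),
    "Stable Video Diffusion": (Scope.GENERAL, Driver.WORKFLOW),
    "Hailuo": (Scope.VERTICAL, Driver.MODEL),
    "Vidu": (Scope.VERTICAL, Driver.MODEL),
    "TapNow": (Scope.VERTICAL, Driver.WORKFLOW),
    "Pika": (Scope.VERTICAL, Driver.WORKFLOW),
}

def quadrant_of(product: str) -> str:
    """Map a product to its quadrant label via its (scope, driver) position."""
    return QUADRANTS[PRODUCTS[product]]

def group_by_quadrant() -> dict[str, list[str]]:
    """List products per quadrant, preserving insertion order."""
    groups: dict[str, list[str]] = {q: [] for q in QUADRANTS.values()}
    for name, position in PRODUCTS.items():
        groups[QUADRANTS[position]].append(name)
    return groups

print(group_by_quadrant())
```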

---

Q1: General × Model-Driven

Products built on strong foundation models, wrapped in product layers.

Kuaishou | Kling

- Launch: June 2024

- Capabilities: Videos up to 2 minutes, 1080p at 30 fps, multiple aspect ratios

- Tech Path:

  - Large-scale datasets (Koala-36M) combined with a scaling-law strategy

  - Owl-1 general world model: “state–observation–action” learning

  - Maintains motion, cinematography, and physical realism at scale

- Role: Integrated into the Kuaishou ecosystem for short- and long-form content generation

---

Tencent | Hunyuan

- Foundation: Hunyuan large model framework

- Product: HunyuanVideo, a text-to-video model available to the public and via API

- Open Source: Code and weights on GitHub and Hugging Face (~13B parameters)

- Focus: Strong in Chinese-language contexts, with good cinematic quality and physical consistency

- Use Cases: Short video platforms, advertising, gaming, film production

---

Alibaba | Tongyi Wanxiang

(Covered in detail below under its own heading.)

---

Q2: General × Tool / Workflow-Driven

Broad use cases with an emphasis on integrated workflows rather than raw model strength.

---

Tongyi Wanxiang

Part of Alibaba’s Tongyi AI ecosystem, it has evolved from text-to-image generation into:

- Text-to-video

- Image-to-video

- Image editing

Available through the API of Alibaba Cloud's Bailian platform.

Model Iteration

- Versions: Wan2.1 → Wan2.2

- MoE Architecture:

  - High-noise experts handle the overall layout in early denoising steps; low-noise experts then refine details

  - Cuts inference compute by ~50% (only one of the two experts runs at each denoising step)

- High-compression 3D VAE:

  - Encodes spatiotemporal information into a compact latent

  - Generates a 5s 720p video in minutes on consumer GPUs
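
Both ideas in this list can be sketched numerically. The Python below is a hedged illustration, not Wan's implementation: the normalized noise level, the 0.5 switch boundary, and the 4× temporal / 16× spatial compression factors are assumptions chosen for readability. It shows why a two-expert MoE roughly halves per-step compute relative to a dense model holding both experts, and how a causal 3D VAE shrinks a clip's grid before diffusion.

```python
# Assumption: noise level t is normalized to [0, 1], where 1.0 = pure noise,
# and the expert switch happens at a fixed, illustrative boundary.

def pick_expert(t: float, boundary: float = 0.5) -> str:
    """Early, noisy steps go to the layout expert; later steps to the detail expert."""
    return "high_noise_expert" if t >= boundary else "low_noise_expert"

def expert_schedule(num_steps: int, boundary: float = 0.5) -> list[str]:
    # Evenly spaced noise levels from 1.0 down toward 0.0, one per denoising step.
    levels = [1.0 - i / num_steps for i in range(num_steps)]
    return [pick_expert(t, boundary) for t in levels]

def active_fraction(num_experts: int = 2) -> float:
    # One equally sized expert runs per step, so per-step compute is
    # 1/num_experts of a dense model holding all experts' parameters.
    return 1.0 / num_experts

# Causal 3D VAE latent grid. Assumed factors: 4x temporal, 16x spatial,
# with the first frame encoded on its own (a common causal-VAE convention).
def latent_shape(frames: int, height: int, width: int,
                 t_down: int = 4, s_down: int = 16) -> tuple[int, int, int]:
    assert (frames - 1) % t_down == 0, "expects 1 + k * t_down frames"
    assert height % s_down == 0 and width % s_down == 0
    return (1 + (frames - 1) // t_down, height // s_down, width // s_down)

schedule = expert_schedule(10)
print(schedule.count("high_noise_expert"), schedule.count("low_noise_expert"))  # 6 4
print(active_fraction())  # 0.5
# 121 frames is about 5 s at 24 fps (plus the first frame).
print(latent_shape(121, 720, 1280))  # (31, 45, 80)
```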

Unique Feature

Cinematic Aesthetics Control: Descriptors for lighting, color, composition, lens, and mood bring the language of cinematography directly into generation.
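
To show what descriptor-based control can look like in practice, here is a minimal Python sketch that appends labeled cinematography descriptors to a subject prompt. The field names and the `subject; label: value` format are hypothetical; Tongyi Wanxiang's own prompt conventions may differ.

```python
from dataclasses import dataclass, fields

@dataclass
class CinematicStyle:
    # Hypothetical descriptor slots; leave a field empty to omit it.
    lighting: str = ""
    color: str = ""
    composition: str = ""
    lens: str = ""
    mood: str = ""

def compose_prompt(subject: str, style: CinematicStyle) -> str:
    """Append non-empty descriptors as 'label: value' segments, in field order."""
    parts = [subject]
    for f in fields(style):
        value = getattr(style, f.name)
        if value:
            parts.append(f"{f.name}: {value}")
    return "; ".join(parts)

prompt = compose_prompt(
    "a lone cyclist crossing a bridge at dusk",
    CinematicStyle(lighting="backlit, golden hour", lens="35mm, shallow depth of field"),
)
print(prompt)
```

Structured slots make it easy to vary one descriptor (say, lighting) while holding the rest of the shot constant.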

---

LTX Video

By Lightricks, the company behind Facetune. Built on the open-source LTX-Video / LTX-2 models.

- Architecture: Latent-space video diffusion built on a DiT (Diffusion Transformer) backbone

- Performance: A 5s 768×512 video in ~2s on an H100 GPU, faster than real time

- Product: LTX Studio, an AI movie studio that goes from text or script to scenes to timeline editing
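
The script → scenes → timeline flow can be sketched as two small steps: split a script into scenes, then lay the scenes end to end on a timeline. This Python sketch is hypothetical (one scene per blank-line-separated paragraph, a fixed default duration per scene) and is not how LTX Studio actually parses scripts.

```python
from dataclasses import dataclass

@dataclass
class Scene:
    title: str
    duration_s: float

@dataclass
class TimelineClip:
    title: str
    start_s: float
    end_s: float

def split_script(script: str, default_duration_s: float = 5.0) -> list[Scene]:
    # Hypothetical rule: each blank-line-separated paragraph is one scene,
    # titled by its first line (e.g. a slugline).
    paragraphs = [p.strip() for p in script.split("\n\n") if p.strip()]
    return [Scene(p.splitlines()[0], default_duration_s) for p in paragraphs]

def build_timeline(scenes: list[Scene]) -> list[TimelineClip]:
    # Place scenes back to back: each clip starts where the previous one ended.
    clips, cursor = [], 0.0
    for scene in scenes:
        clips.append(TimelineClip(scene.title, cursor, cursor + scene.duration_s))
        cursor += scene.duration_s
    return clips

script = "INT. KITCHEN - NIGHT\nMia makes tea.\n\nEXT. STREET - DAWN\nMia walks to work."
timeline = build_timeline(split_script(script))
for clip in timeline:
    print(f"{clip.start_s:5.1f}-{clip.end_s:5.1f}s  {clip.title}")
```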

---

Stable Video Diffusion (SVD)

Released by Stability AI in November 2023.

- Variants: SVD (14 frames) and SVD-XT (25 frames)

- Strengths: Self-hostable, controllable, lightweight integration

- Limitations: No audio, short clips, and character continuity requires manual editing
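
The "short clips" limitation is easy to quantify. The sketch below assumes a playback rate of 7 fps (an export setting chosen here for illustration, not a model constant) and computes each variant's clip length and how many separately generated clips would have to be stitched to reach 30 seconds.

```python
import math

# Frame counts from the variants above.
SVD_FRAMES = {"SVD": 14, "SVD-XT": 25}

def clip_seconds(num_frames: int, fps: float) -> float:
    """Playback length of one generated clip."""
    return num_frames / fps

def clips_needed(target_s: float, num_frames: int, fps: float) -> int:
    """Number of separately generated clips to stitch for a target length."""
    return math.ceil(target_s * fps / num_frames)

FPS = 7  # assumed playback rate; adjust to your export settings
for name, frames in SVD_FRAMES.items():
    print(f"{name}: {clip_seconds(frames, FPS):.2f} s per clip, "
          f"{clips_needed(30, frames, FPS)} clips for 30 s")
# SVD: 2.00 s per clip, 15 clips for 30 s
# SVD-XT: 3.57 s per clip, 9 clips for 30 s
```

Every stitch point is a place where character continuity can break, which is why longer pieces need manual editing.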

---

Integrated Publishing Example: AiToEarn

Platforms like AiToEarn offer model rankings, cross-platform publishing, and analytics, enabling creators to push AI content to Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, YouTube, Instagram, X, and more, with built-in monetization workflows.

---

Q3: Vertical × Model-Driven

Specialized in specific styles or content types.

MiniMax | Hailuo

Short clips (3–10s), strong prompt adherence, expressive motion.

---

Vidu by Shengshu

High consistency in 2D animation, reusable characters, API integration.

---

Daydream AI

Strong anime style, adapted for producing videos from web novels and comics.

---

PixVerse

Consumer-facing viral effects: AI Kiss, character transformations.

---

Q4: Vertical × Application/Tool-Driven

Selling complete creative/industry workflows.

---

TapNow

AI brand workbench for e-commerce/ads, node-based workflow.
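
A node-based workflow can be modeled as a directed acyclic graph executed in dependency order. Here is a minimal sketch using Python's standard `graphlib.TopologicalSorter`; the node names and stub handlers are hypothetical and do not reflect TapNow's actual nodes.

```python
from graphlib import TopologicalSorter

# Hypothetical ad-production graph: each node maps to the nodes it depends on.
WORKFLOW = {
    "product_photo": set(),
    "background": {"product_photo"},
    "copywriting": set(),
    "video_clip": {"background"},
    "final_ad": {"video_clip", "copywriting"},
}

def run(workflow, handlers):
    """Execute nodes in dependency order, passing each node its inputs' results."""
    results = {}
    for node in TopologicalSorter(workflow).static_order():
        inputs = {dep: results[dep] for dep in workflow[node]}
        results[node] = handlers[node](inputs)
    return results

# Stub handlers record which inputs each node received instead of calling models.
handlers = {
    name: (lambda inputs, name=name: f"{name}({','.join(sorted(inputs))})")
    for name in WORKFLOW
}
results = run(WORKFLOW, handlers)
print(results["final_ad"])  # final_ad(copywriting,video_clip)
```

Because the graph is explicit, swapping one node (for example, a different video model) does not require touching the rest of the pipeline.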

---

MovieFlow

Automated long-form storytelling that produces continuous videos several minutes long.

---

OiiOii AI

Turns a single photo into an animated music video synced to the beat.
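
Beat syncing means placing cuts or motion accents on the music's beats. Assuming a known, constant tempo (a real tool would detect beats from the audio itself, and OiiOii's method is not described here), beat times follow directly from BPM: one beat every 60 / BPM seconds. This Python sketch computes beat times and picks every fourth beat as a cut point; the function names are hypothetical.

```python
def beat_times(bpm: float, duration_s: float, offset_s: float = 0.0) -> list[float]:
    """Times (seconds) of every beat from offset_s up to duration_s, constant tempo assumed."""
    interval = 60.0 / bpm
    count = int((duration_s - offset_s) // interval) + 1
    return [round(offset_s + i * interval, 3) for i in range(max(count, 0))]

def cut_points(bpm: float, duration_s: float, beats_per_cut: int = 4) -> list[float]:
    # Cut on every Nth beat, e.g. once per 4/4 bar.
    return beat_times(bpm, duration_s)[::beats_per_cut]

print(beat_times(120, 3.0))   # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
print(cut_points(120, 8.0))   # [0.0, 2.0, 4.0, 6.0, 8.0]
```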

---

popi.art

Automated animation + virtual IP incubation.

---

Pika

Ultra-fast short-form video generation; keyframe transitions, community templates.

---

AIPAI

Unified interface, multi-model routing (Kling, Hailuo, Vidu, Sora, Veo, PixVerse, Runway).
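
Multi-model routing can be as simple as trying backends in a preferred order and falling back when one fails. This Python sketch uses stub functions in place of real model APIs; the backend keys, `BackendError`, and the fallback policy are all hypothetical and not AIPAI's actual routing logic.

```python
from typing import Callable

class BackendError(Exception):
    """Raised by a backend stub when a generation request fails."""

def route(prompt: str, preferences: list[str],
          backends: dict[str, Callable[[str], str]]) -> tuple[str, str]:
    """Try backends in preference order; return (backend_name, result) from the first success."""
    errors: dict[str, str] = {}
    for name in preferences:
        generate = backends.get(name)
        if generate is None:
            errors[name] = "not configured"
            continue
        try:
            return name, generate(prompt)
        except BackendError as exc:
            errors[name] = str(exc)
    raise RuntimeError(f"all backends failed: {errors}")

# Stubs standing in for real model APIs.
def quota_exhausted(prompt: str) -> str:
    raise BackendError("quota exceeded")

def always_ok(prompt: str) -> str:
    return f"video for: {prompt}"

backends = {"kling": quota_exhausted, "hailuo": always_ok}
chosen, output = route("a cat surfing at sunset", ["kling", "vidu", "hailuo"], backends)
print(chosen, "->", output)  # hailuo -> video for: a cat surfing at sunset
```

A production router would likely also weigh cost, latency, and per-model strengths, but a fallback chain like this is the minimal version.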

---

Product Strategy Summary

- Model-Centric Path: Build stable foundation-layer models that integrate physics, time, and aesthetics.

- Workflow-Centric Path: Understand real production pain points and abstract them into usable toolchains.

- Hybrid/Open Approach: Use open-source models and local deployment to keep control over compute, IP, and data security.

Because these products already operate in real production environments, they are well positioned to become the next generation of platform products.

---

Cross-Platform Monetization Infrastructure

For high-frequency creators, AiToEarn combines AI content generation, publishing to major platforms, analytics, and model rankings, helping turn creative output into revenue quickly.


---
