Today’s Open Source (2025-11-28): DeepSeek-Math-V2 Launches with an LLM Verifier for Self-Verification, Tackling Rigor in Mathematical Reasoning

🏆 Foundation Models Overview

This document presents open-source AI and LLM projects covering mathematical reasoning, multimodal learning, bias reduction, and agent development.

Projects include DeepSeek-Math-V2, DifficultySampling, Awesome Nano Banana Pro, UDA_Debias, Wave Terminal, and Acontext.

---

① DeepSeek-Math-V2 – Self-Verifiable Mathematical Reasoning

DeepSeek-Math-V2 is a large language model focused on achieving self-verifiable mathematical reasoning.

Key Highlights

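- Starts from a limitation of standard reinforcement learning (RL) for math, which rewards only the correctness of the final answer.

- Recognizes that a correct final answer does not guarantee correct reasoning, especially in theorem proving, where the proof itself must be rigorous.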

- Trains an LLM-based verifier to assess reasoning completeness and rigor.

- Uses verifier outputs as the reward signal for proof generation.

- Strong competition results suggest that self-verifiable reasoning is a promising research direction.
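To make the contrast between the two reward signals concrete, here is a minimal Python sketch. The names `Proof`, `outcome_reward`, `verifier_reward`, and `toy_verifier` are hypothetical, and `toy_verifier` is only a keyword heuristic standing in for a trained LLM verifier; it is not DeepSeek-Math-V2's code or scoring scheme.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Proof:
    steps: List[str]   # reasoning steps, in order
    final_answer: str  # the stated final answer


def outcome_reward(proof: Proof, reference_answer: str) -> float:
    """Answer-only reward: 1.0 if the final answer matches, whatever the steps say."""
    return 1.0 if proof.final_answer.strip() == reference_answer.strip() else 0.0


def verifier_reward(proof: Proof, verifier: Callable[[Proof], float]) -> float:
    """Verifier-based reward: score the whole proof, clamped to [0, 1]."""
    return min(max(verifier(proof), 0.0), 1.0)


def toy_verifier(proof: Proof) -> float:
    """Hypothetical stand-in for an LLM verifier: the fraction of steps that
    state an explicit justification (contain the word "because")."""
    if not proof.steps:
        return 0.0
    justified = sum(1 for step in proof.steps if "because" in step)
    return justified / len(proof.steps)


sloppy = Proof(steps=["x = 4", "so x^2 = 16"], final_answer="16")
rigorous = Proof(steps=["x = 4 because 2x = 8", "x^2 = 16 because 4 * 4 = 16"], final_answer="16")

print(outcome_reward(sloppy, "16"), outcome_reward(rigorous, "16"))  # 1.0 1.0
print(verifier_reward(sloppy, toy_verifier), verifier_reward(rigorous, toy_verifier))  # 0.0 1.0
```

Both proofs earn the full answer-only reward, but only the justified one earns the verifier reward; that gap is what a proof-level verifier is meant to expose.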

📎 Quick Access: DeepSeek-Math-V2 Project Link

---

🛠 Frameworks, Platforms & Tools

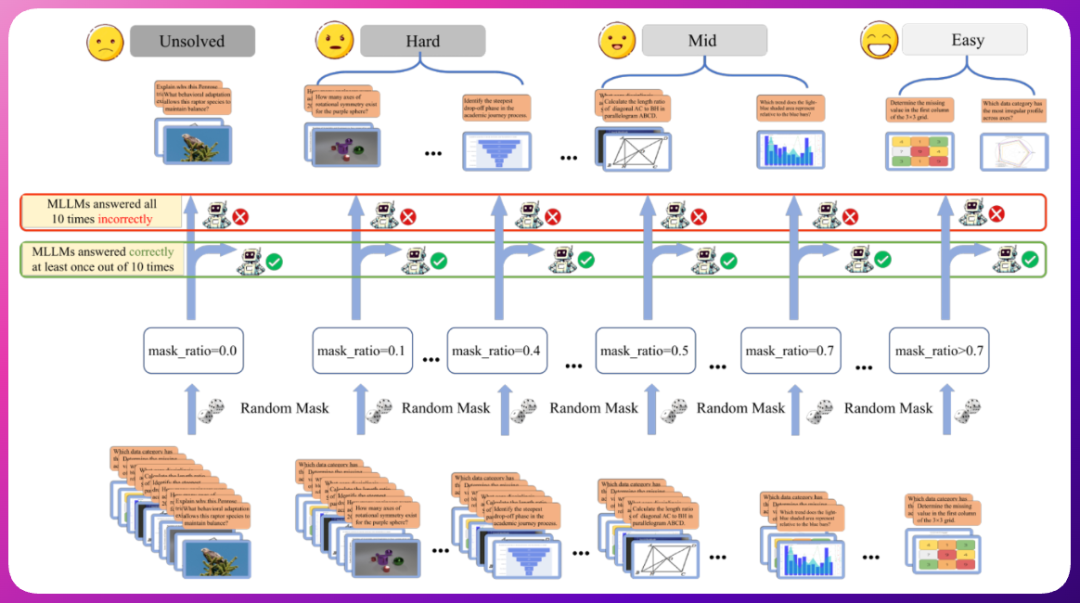

② DifficultySampling – Difficulty-Aware Multimodal Sampling

DifficultySampling explores difficulty-aware data sampling for multimodal post-training without supervised fine-tuning.

Improvements Targeted:

- Mathematical reasoning

- Visual perception

- Chart interpretation

- Other multimodal reasoning tasks

It introduces a framework for differentiating training samples by difficulty, adaptable to various model scales and baselines.
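One common way to make sampling difficulty-aware is to estimate each example's difficulty from the model's own pass rate over several rollouts, then reweight sampling by difficulty bucket. The Python sketch below assumes that scheme; the thresholds, bucket names, example IDs, and function names are hypothetical, and it is not necessarily the project's exact method.

```python
import random
from typing import Dict, List


def pass_rate(outcomes: List[bool]) -> float:
    """Fraction of rollouts the model answered correctly."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def difficulty_bucket(rate: float, easy_at: float = 0.8, hard_at: float = 0.2) -> str:
    """Map a pass rate to a coarse difficulty label (thresholds are assumptions)."""
    if rate >= easy_at:
        return "easy"
    if rate <= hard_at:
        return "hard"
    return "medium"


def difficulty_weighted_sample(
    rollouts: Dict[str, List[bool]],
    bucket_weights: Dict[str, float],
    n: int,
    seed: int = 0,
) -> List[str]:
    """Draw n example IDs with probability proportional to the weight
    of each example's difficulty bucket."""
    rng = random.Random(seed)
    ids = list(rollouts)
    weights = [bucket_weights[difficulty_bucket(pass_rate(rollouts[i]))] for i in ids]
    return rng.choices(ids, weights=weights, k=n)


rollouts = {
    "chart_q1": [True, True, True, True],       # always solved: easy
    "math_q7": [True, False, True, False],      # sometimes solved: medium
    "vision_q3": [False, False, False, False],  # never solved: hard
}
batch = difficulty_weighted_sample(rollouts, {"easy": 0.0, "medium": 1.0, "hard": 0.0}, n=5)
print(batch)  # ['math_q7', 'math_q7', 'math_q7', 'math_q7', 'math_q7']
```

Putting nonzero weight on the easy or hard bucket trades focus for coverage; which mix works best is an empirical question.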

📎 Quick Access: DifficultySampling Project Link

---

③ Awesome Nano Banana Pro – Prompt Engineering Resources

A curated prompt collection for the Nano Banana Pro (Nano Banana 2) AI image model.

Features:

- Covers styles from photorealism to stylized aesthetics.

- Includes complex creative prompt experiments.

- Sources: X (Twitter), WeChat, Replicate, and top prompt engineers.

- Helps users get the most out of the model's creative capabilities.

📎 Quick Access: Awesome Nano Banana Pro Project Link

---

④ UDA_Debias – Unsupervised Bias Reduction in LLM Evaluation

UDA_Debias combats preference bias in comparative LLM evaluations.

How It Works:

- Dynamically adjusts the Elo rating system.

- Uses a compact neural network to set the K factor adaptively.

- Optimizes win-probability estimates in a fully unsupervised manner.

- Minimizes score dispersion across reviewers.

Results:

- Lower score variance between reviewers.

- Better correlation with human judgement.
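For reference, the standard Elo update computes A's expected score as E_A = 1 / (1 + 10^((R_B - R_A) / 400)) and shifts the rating by K * (S_A - E_A). The Python sketch below implements that update, adds a hypothetical tiny network that outputs K from per-comparison features, and defines a dispersion measure of the kind the method minimizes. The network shape, weights, and features are assumptions, and the unsupervised training loop is omitted; this is not UDA_Debias's code.

```python
import math
from typing import List, Sequence, Tuple


def expected_score(r_a: float, r_b: float) -> float:
    """Standard Elo probability that A beats B."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_update(r_a: float, r_b: float, score_a: float, k: float) -> Tuple[float, float]:
    """score_a is 1.0 for an A win, 0.5 for a tie, 0.0 for a B win."""
    delta = k * (score_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta


def softplus(x: float) -> float:
    """Numerically stable log(1 + e^x); keeps K non-negative."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def tiny_k_net(
    features: Sequence[float],
    w1: List[List[float]],
    b1: List[float],
    w2: List[float],
    b2: float,
) -> float:
    """Hypothetical one-hidden-layer network mapping comparison features to K."""
    hidden = [
        math.tanh(sum(w * x for w, x in zip(row, features)) + b)
        for row, b in zip(w1, b1)
    ]
    return softplus(sum(w * h for w, h in zip(w2, hidden)) + b2)


def dispersion(scores: Sequence[float]) -> float:
    """Population variance of the scores different reviewers give one model."""
    mean = sum(scores) / len(scores)
    return sum((s - mean) ** 2 for s in scores) / len(scores)


# Fixed-K baseline: equal ratings, A wins, K = 32, so A gains 16 points.
print(elo_update(1500.0, 1500.0, 1.0, k=32.0))  # (1516.0, 1484.0)

# Adaptive K from the (untrained, illustrative) network.
k = tiny_k_net([0.0, 0.1], w1=[[0.5, -0.3], [0.2, 0.4]], b1=[0.0, 0.0], w2=[1.0, 1.0], b2=3.0)
print(elo_update(1500.0, 1500.0, 1.0, k=k))
```

In a full system, the network's weights would be fit without human labels so that the resulting ratings reduce cross-reviewer dispersion; that optimization step is the part this sketch leaves out.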

📎 Quick Access: UDA_Debias Project Link

---

⑤ Wave Terminal – Graphical + Command-Line Hybrid

An open-source, cross-platform terminal that combines traditional command-line power with graphical capabilities.

Supported Platforms:

- macOS

- Linux

- Windows

Built-in Tools:

- File preview

- Web browsing

- AI assistance

📎 Quick Access: (link pending)

---

📌 Related Resource – AiToEarn

AiToEarn (official site) is a global open-source platform for monetizing AI-generated content.

Core Capabilities:

- Multi-platform publishing to: Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X (Twitter).

- Integrates with analytics and AI model rankings.

- Streamlines AI content creation → distribution → monetization.

📄 Documentation: AiToEarn Docs

💻 Repo: AiToEarn open-source repository

---

🤖 Agent Development

⑥ Acontext – Context Management for Self-Learning Agents

Acontext is a context data platform designed to improve Agent reliability and task success rates.

Core Features:

- Stores contexts and artifacts.

- Observes Agent tasks and user feedback.

- Enables Agent self-improvement via iterative learning.

- Local dashboard for messages, tasks, artifacts, and experiences.

- Supports multimodal conversational and task-oriented Agents.
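The store, observe, and learn loop described above can be illustrated with a minimal Python sketch. All class and method names here (`TaskRecord`, `ContextStore`, `observe`, `build_context`) are hypothetical and are not Acontext's API; "learning" is deliberately reduced to replaying recent user feedback into the next task's context.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TaskRecord:
    task: str
    messages: List[str]        # conversation turns for this task
    artifacts: Dict[str, str]  # name -> content of files the Agent produced
    success: bool
    feedback: str = ""         # optional user feedback


@dataclass
class ContextStore:
    records: List[TaskRecord] = field(default_factory=list)
    experiences: List[str] = field(default_factory=list)

    def observe(self, record: TaskRecord) -> None:
        """Store the task and distill a reusable lesson from any user feedback."""
        self.records.append(record)
        if record.feedback:
            verdict = "worked" if record.success else "failed"
            self.experiences.append(f"{record.task}: {verdict}; feedback: {record.feedback}")

    def success_rate(self) -> float:
        """Share of observed tasks that succeeded."""
        if not self.records:
            return 0.0
        return sum(r.success for r in self.records) / len(self.records)

    def build_context(self, task: str, limit: int = 3) -> str:
        """Prepend the most recent lessons to a new task."""
        lessons = self.experiences[-limit:]
        return "\n".join(["Lessons from past tasks:", *lessons, f"Current task: {task}"])


store = ContextStore()
store.observe(TaskRecord("export report", ["user: export the report"],
                         {"report.csv": "..."}, success=False,
                         feedback="use UTF-8 encoding"))
store.observe(TaskRecord("summarize notes", ["user: summarize"], {}, success=True))
print(store.success_rate())  # 0.5
print(store.build_context("export invoice"))
```

A production system would add persistence, retrieval by relevance rather than recency, and a dashboard over these records; the sketch only shows how feedback can flow back into later tasks.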

📎 Quick Access: Acontext Project Link

---

Why It Matters

As Agent products grow more complex:

- Context & feedback tracking become critical.

- Tools like Acontext simplify management.

- Platforms like AiToEarn complement development by enabling monetization of AI-driven workflows.
