Transformer Author Reveals GPT-5.1 Secrets — OpenAI’s Internal Naming Rules in Disarray

A Quiet Yet Fundamental AI Paradigm Shift

Its significance is comparable to the birth of the Transformer itself.

---

Two Opposing Views on AI Development

Over the past year, opinions have split into two clear camps:

- Camp 1: "AI growth is slowing, models have peaked, and pretraining is useless."

- Camp 2: AI keeps having regular "big weeks," with releases such as GPT‑5.1, Gemini 3, and Grok 4.1.

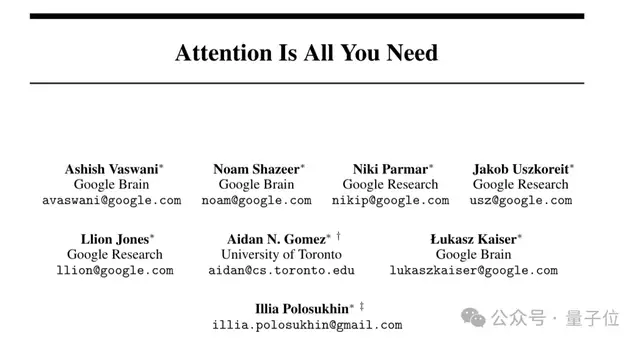

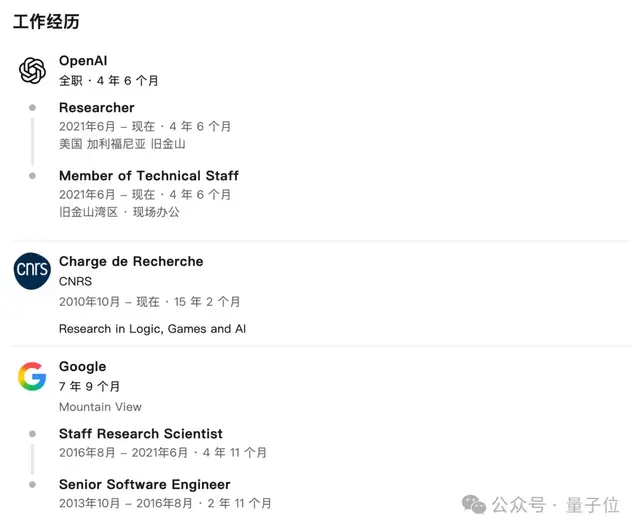

Recently, Łukasz Kaiser, an original co‑author of the Transformer paper and now a research scientist at OpenAI, shared rare first‑hand insights in an interview [1].

---

Key Takeaways from Łukasz Kaiser

> AI hasn’t slowed; it has gone through a generational shift.

> GPT‑5.1 is not just a minor version bump; OpenAI revised its internal naming rules.

> Multimodal reasoning will be the next breakthrough.

> AI will not make humans entirely jobless.

> Home robotics may become the next major visible AI revolution after ChatGPT.

---

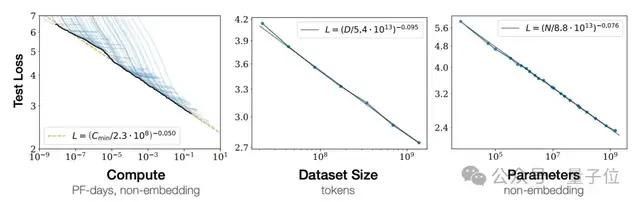

From Pretraining to Reasoning Models: The New Paradigm

Łukasz argues that the perception of slowdown is misleading:

> From the inside, AI capability growth is a smooth exponential curve, similar to Moore’s Law — accelerated by GPUs and technological iteration.

Why the Misperception?

- Paradigm Shift: From pretraining to reasoning models — a second turning point after the Transformer’s invention.

- Economic Optimization: Much recent effort has gone into smaller, cheaper models of similar quality, which makes progress less visible.

Reasoning models are still early in their “S‑curve,” which means rapid progress lies ahead.
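
A generic logistic curve makes the "early in the S‑curve" point concrete. The Python sketch below is purely illustrative (the parameters are arbitrary assumptions and do not model any real benchmark): well before the midpoint, each step multiplies the value by roughly e, which looks exactly like exponential growth, while the largest absolute gains still lie ahead at the midpoint.

```python
import math


def logistic(t: float, ceiling: float = 1.0, rate: float = 1.0, midpoint: float = 0.0) -> float:
    """Logistic S-curve: ceiling / (1 + exp(-rate * (t - midpoint)))."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def gain(t: float) -> float:
    """Absolute improvement from step t to step t + 1 on the default curve."""
    return logistic(t + 1) - logistic(t)


if __name__ == "__main__":
    for t in range(-6, 7, 2):
        # Far left: each step multiplies the value by about e (exponential-looking).
        # Middle: the largest absolute gains. Far right: gains flatten near the ceiling.
        print(f"t={t:+d}  value={logistic(t):.4f}  step gain={gain(t):.4f}")
```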

---

What Has Changed?

GPT Evolution

- GPT‑3.5: Answers recalled directly from training data.

- Modern ChatGPT: Actively browses sources, reasons, and delivers more accurate outputs.

Coding Assistance

- Shift from “human coding first” to “Codex first, then human fine‑tuning” — a deep though subtle change.

---

Essence of Reasoning Models

- Keep a large pretrained foundation model at the core.

- Think (chain of thought) before producing an answer.

- Use external tools, such as web browsing, during reasoning, and include these processes in training.

- Rely more on reinforcement learning (RL) than on conventional supervised training.
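
The reasoning loop listed above (think first, optionally call a tool, then answer, keeping the whole trace available for training) can be sketched in a few lines of Python. Everything here is hypothetical: `toy_policy` is a scripted stand-in for a model, `search_tool` is a dictionary lookup standing in for web browsing, and the step format is an assumption rather than OpenAI's actual interface.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    kind: str     # "think", "tool", or "answer" (hypothetical step format)
    content: str  # thought text, "tool_name:query", or the final answer


Trace = list[tuple[str, str]]

# Hypothetical stand-in for web browsing: a tiny fixed lookup table.
FAKE_INDEX = {"transformer paper year": "2017"}


def search_tool(query: str) -> str:
    return FAKE_INDEX.get(query.strip(), "no result")


def toy_policy(trace: Trace) -> Step:
    """Scripted stand-in for a reasoning model: think, search, then answer."""
    if not trace:
        return Step("think", "I should look this up instead of relying on memory.")
    last_kind, last_content = trace[-1]
    if last_kind == "think":
        return Step("tool", "search:transformer paper year")
    if last_kind == "tool_result":
        return Step("answer", f"It was published in {last_content}.")
    return Step("answer", "I don't know.")


def run_reasoning(policy: Callable[[Trace], Step],
                  tools: dict[str, Callable[[str], str]],
                  max_steps: int = 8) -> tuple[str, Trace]:
    """Let the policy think and call tools until it answers.

    The whole trace (thoughts, tool calls, tool results, answer) is returned,
    so a training procedure could score the process and not only the answer.
    """
    trace: Trace = []
    for _ in range(max_steps):
        step = policy(trace)
        if step.kind == "answer":
            trace.append(("answer", step.content))
            return step.content, trace
        if step.kind == "tool":
            name, _, query = step.content.partition(":")
            trace.append(("tool_call", step.content))
            trace.append(("tool_result", tools[name](query)))
        else:
            trace.append(("think", step.content))
    return "", trace  # step budget exhausted without an answer


if __name__ == "__main__":
    answer, trace = run_reasoning(toy_policy, {"search": search_tool})
    for kind, content in trace:
        print(f"{kind:>11}: {content}")
```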

RL in Detail:

- Uses rewards to steer the model toward better answers.

- Requires fine-grained datasets for precise tuning.

- Enables self-correction during training.
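
To illustrate how a reward can guide answers, here is a minimal REINFORCE sketch in Python. REINFORCE is a textbook policy-gradient method chosen only for illustration; the interview does not say which RL algorithm OpenAI uses. The "model" is a softmax over four candidate answers, and the reward function is a hypothetical checker that returns 1.0 for the correct one. The update direction comes from the reward rather than from a labeled target answer.

```python
import math
import random
from typing import Callable


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(reward_fn: Callable[[int], float], num_actions: int,
              steps: int = 2000, lr: float = 0.1, seed: int = 0) -> list[float]:
    """Minimal REINFORCE with a running-average baseline.

    Each step samples an answer, scores it with reward_fn, and moves the logits
    along advantage * grad log pi(answer), so higher-reward answers become likelier.
    """
    rng = random.Random(seed)
    logits = [0.0] * num_actions
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(num_actions), weights=probs)[0]
        reward = reward_fn(action)
        advantage = reward - baseline           # better or worse than usual?
        baseline += 0.05 * (reward - baseline)  # running average lowers variance
        for i in range(num_actions):
            # d/d logit_i of log softmax(logits)[action] = 1[i == action] - probs[i]
            grad = (1.0 if i == action else 0.0) - probs[i]
            logits[i] += lr * advantage * grad
    return softmax(logits)


if __name__ == "__main__":
    # Hypothetical checker: answer index 2 is the only correct one.
    final = reinforce(lambda a: 1.0 if a == 2 else 0.0, num_actions=4)
    print([round(p, 3) for p in final])
```

Because the reward is computed only after an answer is sampled, the same loop applies whenever an answer can be checked, even if no reference solution is written out step by step.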

---

Future of Reinforcement Learning

Kaiser expects RL to be applied more broadly:

- Large models judging answers for correctness and preference.

- Richer human preference data.

- Extension to multimodal reasoning.

Gemini can already generate images during reasoning, and RL could greatly improve this capability.
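
Preference data is often turned into a reward signal with the Bradley-Terry model, where the probability that response w is preferred over response l is sigmoid(r_w - r_l). The sketch below is a standard textbook formulation, not a description of OpenAI's pipeline; the response labels and comparisons are hypothetical. It fits one score per response by gradient ascent; in practice the scores would come from a neural reward model, and the comparisons could come from humans or from a large model acting as judge.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def fit_bradley_terry(items: list[str], prefs: list[tuple[str, str]],
                      epochs: int = 500, lr: float = 0.1) -> dict[str, float]:
    """Fit one scalar score per response from (winner, loser) pairs.

    Model assumption (Bradley-Terry): P(winner preferred) = sigmoid(s_winner - s_loser).
    Scores are updated by stochastic gradient ascent on the log-likelihood.
    """
    score = {item: 0.0 for item in items}
    for _ in range(epochs):
        for winner, loser in prefs:
            # d/ds_winner of log sigmoid(s_w - s_l) = 1 - sigmoid(s_w - s_l)
            g = 1.0 - sigmoid(score[winner] - score[loser])
            score[winner] += lr * g
            score[loser] -= lr * g
    return score


# Hypothetical comparisons between three responses A, B, C to one prompt.
# The labels disagree once (C beat B), as human labels sometimes do.
PREFS = [("A", "B"), ("A", "B"), ("A", "C"), ("B", "C"), ("B", "C"), ("C", "B")]

if __name__ == "__main__":
    scores = fit_bradley_terry(["A", "B", "C"], PREFS)
    for name, s in sorted(scores.items(), key=lambda kv: -kv[1]):
        print(name, round(s, 3))
```

Because A never loses in this toy data, its score would keep creeping upward with more epochs; practical reward models rely on much more data and on regularization.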

---

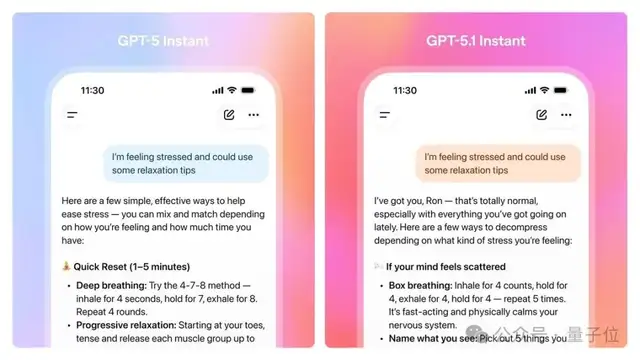

GPT‑5.1: Behind the Naming

- Not a minor tweak, but a major iteration focused on stability.

- The transition from GPT‑4 to GPT‑5 was driven by RL and synthetic data.

- GPT‑5.1 focuses on post-training improvements: enhanced safety, fewer hallucinations, and selectable styles (e.g., nerdy, professional).

Naming Strategy (user-experience oriented):

- GPT‑5: Base model.

- GPT‑5.1: Refined variant.

- Mini: Smaller, faster, cheaper.

- Reasoning: For solving complex tasks.

---

Limitations of GPT‑5.1

Example:

A simple puzzle asking whether a count of dots is odd or even, where two groups share one dot: easy for a five‑year‑old, yet both GPT‑5.1 and Gemini 3 failed it.

Issue: Reasoning patterns transfer poorly between similar problems, especially across modalities.
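
The exact puzzle is not shown, so what follows is a hypothetical reconstruction of the trap described: two groups of dots that share one dot. Counting each group and adding the totals counts the shared dot twice, which flips the parity. Treating the dots as a set (the coordinates are made up) counts it once.

```python
Dot = tuple[int, int]


def total_dots(group_a: set[Dot], group_b: set[Dot]) -> int:
    """Count distinct dots; a dot that belongs to both groups is counted once."""
    return len(group_a | group_b)


def parity(n: int) -> str:
    return "even" if n % 2 == 0 else "odd"


if __name__ == "__main__":
    # Hypothetical layout: a row of three dots and a column of three dots
    # meeting at the corner dot (2, 0), which belongs to both groups.
    row = {(0, 0), (1, 0), (2, 0)}
    column = {(2, 0), (2, 1), (2, 2)}
    naive = len(row) + len(column)     # 6: the shared dot is counted twice
    correct = total_dots(row, column)  # 5
    print(f"naive: {naive} ({parity(naive)}), correct: {correct} ({parity(correct)})")
```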

---

Birth of the Transformer

Łukasz Kaiser’s Path:

- PhD in theoretical computer science and mathematics in Germany.

- Tenured research position in France, working on logic and programming.

- Joined Google during deep learning’s rise.

- Initially in Ray Kurzweil’s team, later Google Brain, collaborating with Ilya Sutskever.

Transformer Creation Trivia:

- Eight co‑authors never all met in person.

- Each contributed unique expertise — attention, feed-forward memory, engineering.

At the time, using one model for many tasks went against the norm, but history proved them right.

---

OpenAI vs Google Brain Culture

- Joined OpenAI at Ilya’s invitation.

- Google Brain’s growing scale and remote-work culture no longer suited him.

- OpenAI uses flexible, project-focused teams.

- GPU resource competition is real — pretraining consumes the most, followed by RL and video models.

---

Future of AI and Work

> AI will change work — but will not eliminate it.

Example: Translation

Even with high-accuracy AI translation, human experts remain essential for trust, nuance, and high-visibility cases.

---

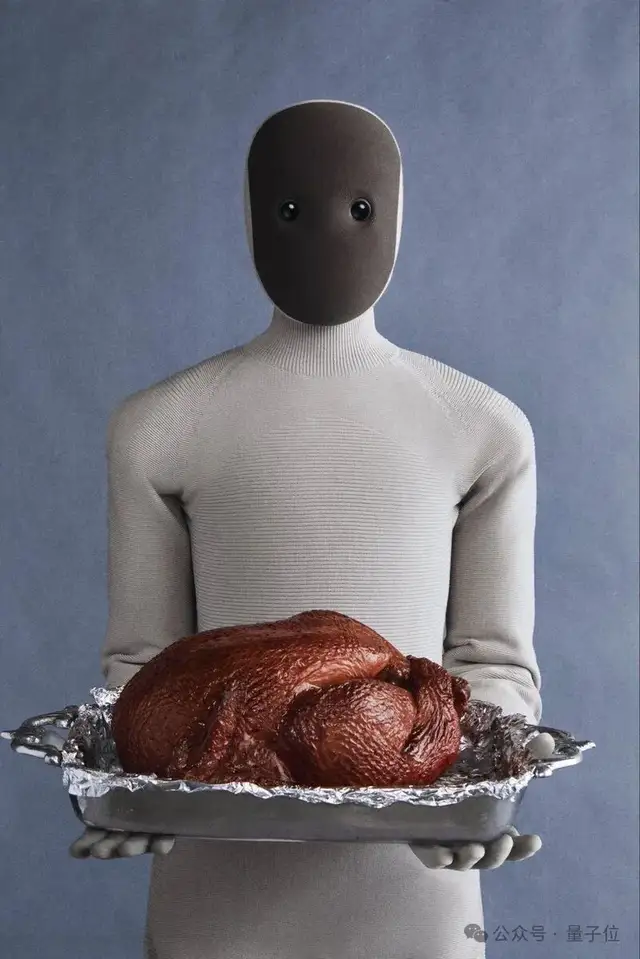

Home Robotics: The Next AI Revolution

Why?

- Breakthroughs in multimodal abilities, RL, and general reasoning.

- Hardware for intelligent manipulation is maturing.

- Will be more tangible and perceptible than ChatGPT.

---

References

[1] https://www.youtube.com/watch?v=3K-R4yVjJfU&t=2637s
