Transformer Author Reveals Inside Story of GPT-5.1 — OpenAI’s Internal Naming Rules in Disarray

🚀 The Quiet AI Paradigm Shift — As Big as the Transformer

Date: 2025-12-01 09:35 Beijing

We are witnessing a quiet yet fundamental shift in AI — a transformation as significant as the invention of the Transformer.

---

> *"We are in the midst of a subtle but essential transformation in AI. Its impact is no less than the arrival of the Transformer."*

---

📊 Two Competing Narratives in AI Development

Over the past year, two perspectives have dominated:

- Slowing growth: “Models are hitting a ceiling. Pre-training is reaching a dead end.”

- Nonstop releases: major updates such as GPT-5.1, Gemini 3, and Grok 4.1 keep arriving.

Recently, Łukasz Kaiser — Transformer co-author and now OpenAI scientist — shared insider insights on:

- The paradigm shift underway in AI

- The truth behind GPT-5.1’s naming

- Future technology trends

- Untold stories from the birth of the Transformer

---

💡 Kaiser’s Key Messages

> - AI growth has not slowed; the paradigm is changing.

> - GPT-5.1 is more than an incremental update; OpenAI’s naming system has evolved.

> - Multimodal reasoning will drive the next big leap.

> - AI will not replace humans entirely.

> - Home robotics may be the most visible AI revolution after ChatGPT.

---

1️⃣ AI Growth Is Steady — But the Paradigm Is Changing

Many claim AI models aren’t progressing as fast as they used to. Kaiser disagrees:

> "From the inside, capability growth follows a smooth exponential curve."

- As with Moore’s Law, growth is driven by steady technical iteration plus faster GPUs.

- Externally: progress can look like a plateau.

- Internally: rapid advances via engineering optimization and new paradigms.

🔄 Paradigm Shift: Pre-Training → Reasoning Models

- Pre-training: Mature phase of the S-curve, where gains are flattening

- Reasoning models: Early-stage explosive growth

- Scaling laws still hold, but further gains cost more

- Smaller, cheaper reasoning models can match larger pre-trained ones in quality
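To make the S-curve picture concrete, here is a minimal Python sketch, assuming each paradigm follows a standard logistic curve. The midpoints, the time axis, and the helper names (`logistic`, `growth_rate`, `phase`) are hypothetical and chosen only for illustration; they are not figures from Kaiser or OpenAI. A paradigm counts as "early" while its growth is still speeding up and "mature" once its growth is slowing down.

```python
import math

def logistic(t: float, midpoint: float, rate: float = 1.0) -> float:
    """Logistic S-curve with a ceiling of 1: slow start, steep middle, flat top."""
    return 1.0 / (1.0 + math.exp(-rate * (t - midpoint)))

def growth_rate(t: float, midpoint: float, rate: float = 1.0) -> float:
    """Slope of the logistic curve at time t, i.e. rate * y * (1 - y)."""
    y = logistic(t, midpoint, rate)
    return rate * y * (1.0 - y)

def phase(now: float, midpoint: float) -> str:
    """'early' if growth is still accelerating at `now`, otherwise 'mature'."""
    return "early" if growth_rate(now + 1.0, midpoint) > growth_rate(now, midpoint) else "mature"

# Hypothetical midpoints on an arbitrary time axis; not measured values.
PRETRAINING_MIDPOINT = 0.0
REASONING_MIDPOINT = 6.0
NOW = 3.0

for name, midpoint in [("pre-training", PRETRAINING_MIDPOINT), ("reasoning", REASONING_MIDPOINT)]:
    print(f"{name}: level={logistic(NOW, midpoint):.2f}, phase={phase(NOW, midpoint)}")
```

With these made-up parameters, pre-training sits near the top of its curve and is classified as mature, while reasoning sits near the bottom and is classified as early, even though both happen to grow at the same instantaneous rate at `NOW`. The difference is the direction of travel: one curve is flattening, the other is steepening.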

🧠 Example: ChatGPT Evolution

- GPT-3.5 — Direct recall from training data

- Latest GPT — Web browsing + reasoning + refined answers

- Casual users may miss it — but internally it’s a qualitative leap

💻 Codex Workflow Change

- Old: Human code first → Codex adjustments

- New: Codex first → human fine-tuning

- Fundamental shift for programmers; invisible to non-programmers

---

How Reasoning Models Work

- Still foundation models, but they think before answering

- Use chain-of-thought reasoning plus tools (web search, calculators)

- The reasoning process is then folded into future training
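The think, call a tool, observe, answer cycle described above can be sketched as a small loop. This is a toy under loud assumptions: `toy_policy` is a hard-coded stand-in for the model, and `calculator`, `TOOLS`, and `run` are hypothetical names, not any real OpenAI API. In an actual reasoning model, the model itself would write the thoughts and decide when to call a tool.

```python
import ast
import operator

# Hypothetical calculator tool: evaluates +, -, *, / over numeric literals only.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expr: str):
    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError(f"unsupported expression: {expr!r}")
    return ev(ast.parse(expr, mode="eval").body)

TOOLS = {"calculator": calculator}

def toy_policy(transcript):
    """Stand-in for the model: picks the next step from the transcript so far."""
    kinds = [step[0] for step in transcript]
    if "thought" not in kinds:
        return ("thought", "Multiplying 37 by 43 mentally is error-prone; use the calculator.")
    if "observation" not in kinds:
        return ("tool", "calculator", "37 * 43")
    result = next(step[1] for step in transcript if step[0] == "observation")
    return ("answer", f"37 * 43 = {result}")

def run(question, policy, max_steps=10):
    """Think / act / observe loop: keep stepping until the policy emits an answer."""
    transcript = [("question", question)]
    for _ in range(max_steps):
        step = policy(transcript)
        transcript.append(step)
        if step[0] == "tool":
            _, name, arg = step
            # Feed the tool's output back so the next step can use it.
            transcript.append(("observation", TOOLS[name](arg)))
        elif step[0] == "answer":
            return step[1], transcript
    raise RuntimeError("no answer within the step budget")

answer, transcript = run("What is 37 * 43?", toy_policy)
for step in transcript:
    print(step)
```

The returned `transcript` records every thought, tool call, and observation; traces of this kind are what the article says get folded back into later training.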

---

🌐 AI Tools for Creators

With AI now reasoning, using tools, and working across platforms, creators can build integrated workflows that monetize creativity.

Open-source ecosystems such as AiToEarn allow:

- AI content creation

- Simultaneous publishing to Douyin, Kwai, WeChat, Bilibili, Rednote, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X

- Analytics and AI model rankings

This aligns naturally with the reasoning paradigm, turning AI advances into income streams.

---

🎯 Reinforcement Learning in Reasoning Models

- RL uses rewards to pull models toward better answers

- Requires fine-grained datasets and parameter tuning

- Enables self-correction

- Future: Hybrid RL, with large models judging answer quality and aligning outputs with human preferences

Multimodal reasoning is in early stages (e.g. Gemini generating images during reasoning). RL will accelerate improvements here.
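The reward-driven update described above can be illustrated with REINFORCE, a textbook policy-gradient method swapped in here purely for illustration; the article does not say which RL algorithm OpenAI uses. In this minimal sketch the "model" is just a softmax over three candidate answers, and `reward` is a hypothetical exact-match verifier. The hybrid setup the article anticipates, with a large model judging answer quality, would replace that function with a learned judge.

```python
import math
import random

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce(candidates, reward_fn, steps=2000, lr=0.1, seed=0):
    """Toy REINFORCE: sample an answer, score it, shift probability toward rewarded answers."""
    rng = random.Random(seed)
    logits = [0.0] * len(candidates)
    baseline = 0.0  # running average of rewards
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(candidates)), weights=probs)[0]
        r = reward_fn(candidates[i])
        advantage = r - baseline
        baseline += 0.05 * (r - baseline)
        # Gradient of log p_i with respect to logit j is 1[j == i] - p_j.
        for j in range(len(logits)):
            grad = (1.0 if j == i else 0.0) - probs[j]
            logits[j] += lr * advantage * grad
    return softmax(logits)

# Hypothetical task "7 + 8 = ?" with a hypothetical exact-match verifier as the reward.
candidates = ["12", "15", "17"]

def reward(answer: str) -> float:
    return 1.0 if answer == "15" else 0.0

probs = reinforce(candidates, reward)
for answer, p in zip(candidates, probs):
    print(f"{answer}: {p:.3f}")
```

Subtracting a running-average baseline from the reward is a standard variance-reduction choice: sampled answers that beat the average get pushed up, and the rest get pushed down.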

---

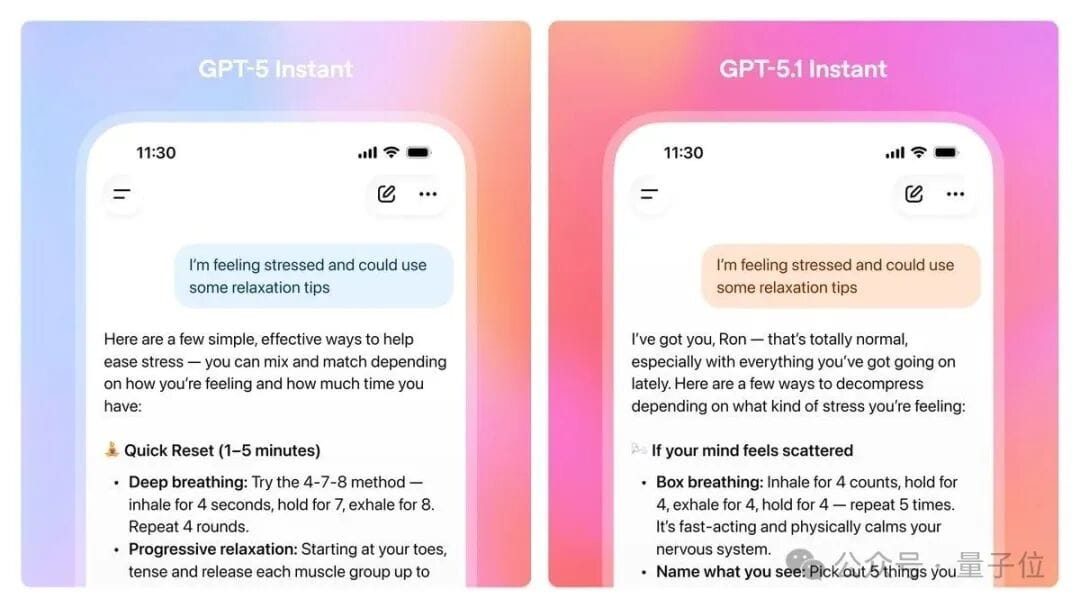

2️⃣ GPT-5.1 — A Major Update in Disguise

Kaiser explains:

> "GPT-5.1 looks minor, but it’s a major stability upgrade internally."

🚀 From GPT-4 → GPT-5

- With RL + synthetic data, reasoning greatly improved

🛡 Post-Training Focus in GPT-5.1

- Safety enhancement

- Reduced hallucinations

- New stylistic modes (nerdy, professional)

🔤 Naming Convention Shift

- Now user-experience driven

- GPT-5 = stronger base model

- GPT-5.1 = improved version

- Mini = smaller/faster/cheaper

- Reasoning = specialized for complex tasks

This enables parallel experimentation (RL, pre-training, prompt engineering) and quicker iteration cycles.

---

⚠ Remaining Weaknesses

Example:

- A simple odd/even counting puzzle with overlapping dots: GPT-5.1 fails it

- Exposes weaknesses in multimodal reasoning and context transfer

- Target for future model improvements
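The article does not spell out the exact puzzle, so the following is a hypothetical reconstruction, assuming two groups of dots that share one dot. It shows why overlap matters for an odd/even question: counting each group separately double-counts the shared dot and flips the parity. The coordinates and helper names are made up for illustration.

```python
def total_dots(group_a, group_b):
    """Count distinct dots; a dot shared by both groups is counted once."""
    return len(set(group_a) | set(group_b))

def parity(n: int) -> str:
    return "even" if n % 2 == 0 else "odd"

# Hypothetical layout: two rows of three dots that overlap at (2, 0).
group_a = [(0, 0), (1, 0), (2, 0)]
group_b = [(2, 0), (3, 0), (4, 0)]

naive = len(group_a) + len(group_b)     # double-counts the shared dot
correct = total_dots(group_a, group_b)  # inclusion-exclusion: 3 + 3 - 1

print(f"naive: {naive} ({parity(naive)}), correct: {correct} ({parity(correct)})")
# prints: naive: 6 (even), correct: 5 (odd)
```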

---

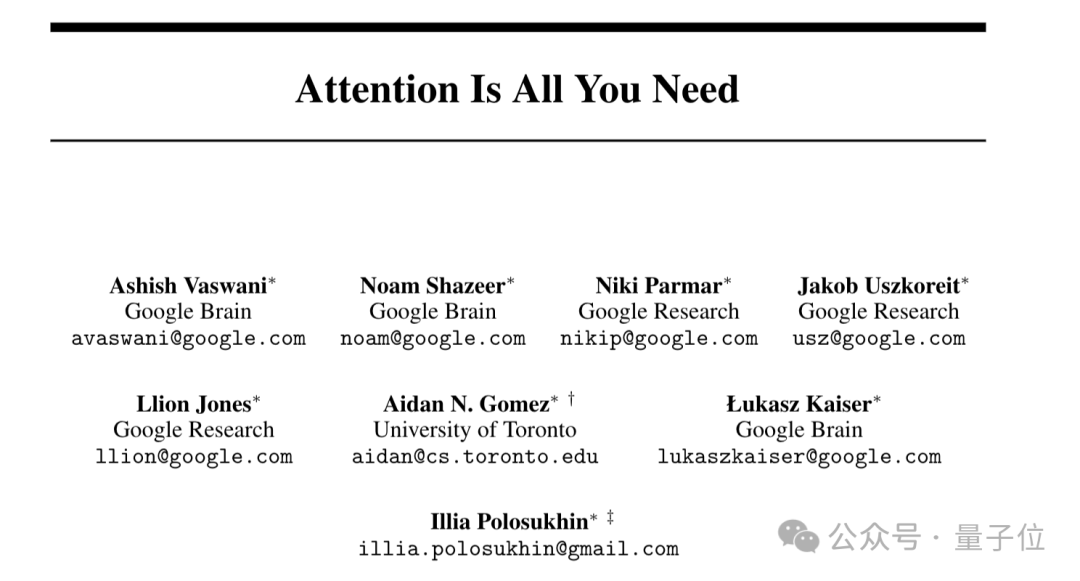

3️⃣ From Google Transformer to OpenAI

👨‍💻 Kaiser’s Journey

- Background: Theoretical CS + mathematics (PhD in Germany)

- Worked on ML at Google Brain with Ilya Sutskever

- Co-created the Transformer, leading coding and systems work, and contributed to TensorFlow

---

📜 Fun Fact

The 8 co-authors of the Transformer paper have never all been in the same room at once; each contributed from their own angle.

---

🔄 Why Kaiser Moved to OpenAI

- Ilya invited him repeatedly after co-founding OpenAI

- Preferred OpenAI’s fluid team structures over Google Brain’s larger, more rigid org

- Teams inside OpenAI still compete for GPU resources

---

4️⃣ The Next Breakthrough — Multimodal + Embodied Intelligence

Kaiser predicts:

- AI will alter work, not eliminate it entirely

- Human experts will persist — trust matters for high-risk outputs

- Home robots may be the next big wave, more intuitive than ChatGPT

A breakthrough depends on:

- Multimodal capabilities

- General RL

- Physical-world reasoning

Once these are achieved, robotics could see explosive growth.
