Trillion-Parameter Reasoning Model: Ant Group's First Open-Source Release, Trained on 20 Trillion Tokens, Shakes Up Open-Source AI

New Intelligence Report: Ant Group Launches Ring‑1T

Editor's Note:

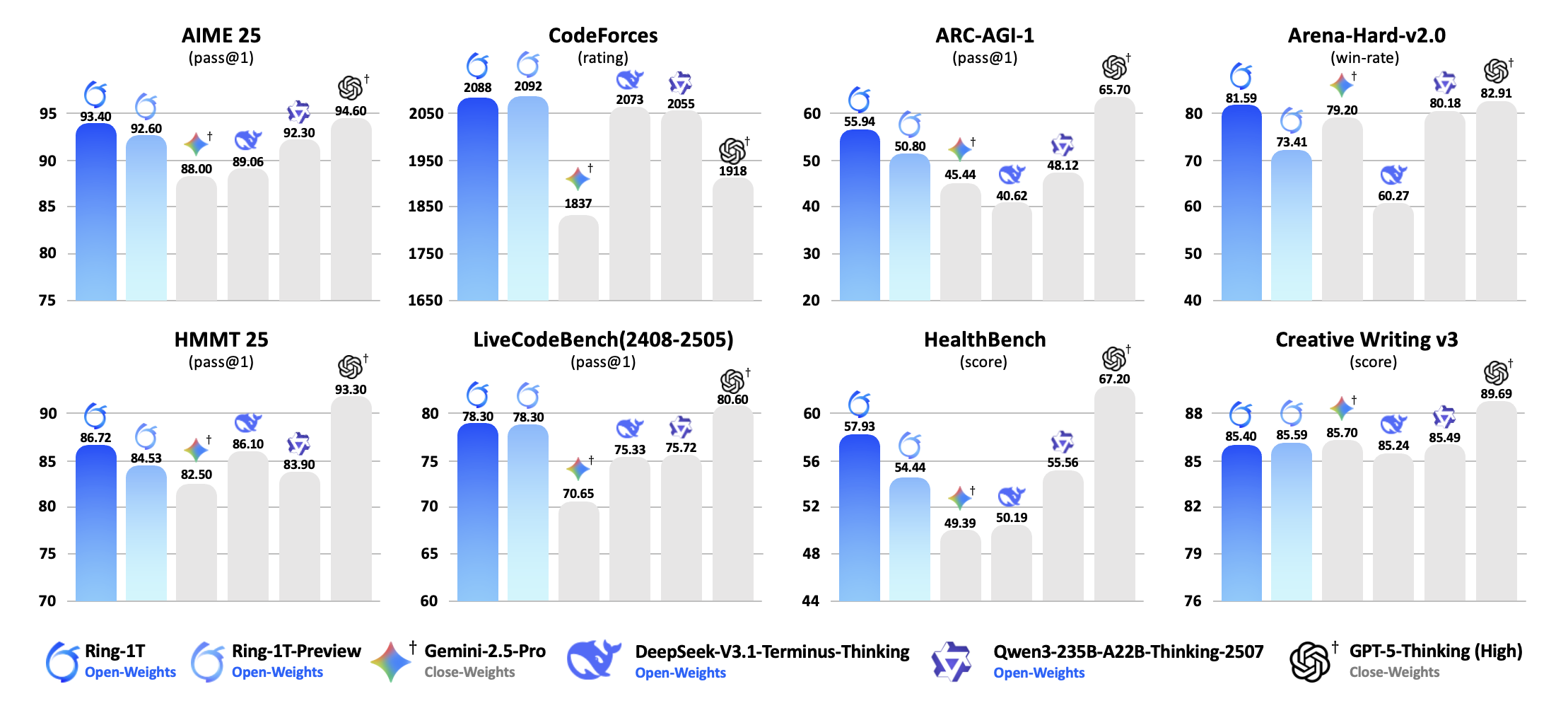

Ant Group has unveiled the trillion-parameter reasoning model Ring‑1T, setting new open-source SOTA records in math competitions, logical reasoning, and medical Q&A. Tests show that Ring‑1T's reasoning approaches closed-source leaders — heralding the trillion-parameter era for open-source AI.

---

Ring‑1T Achieves Breakthrough Performance

On October 14, Ant Group officially released Ring‑1T, a trillion-parameter reasoning model, with impressive results in:

- Mathematics Competitions: AIME 25, HMMT 25

- Code Generation: CodeForces

- Logical Reasoning: ARC‑AGI‑v1

- Medical Q&A: HealthBench benchmark

Key Highlights

- On Arena‑Hard‑v2 and CreativeWriting‑v3, Ring‑1T ranks among the very best open-source reasoning models alongside DeepSeek and Qwen.

- Scores an 81.59% success rate on Arena‑Hard‑v2, close to the 82.91% of the closed-source GPT‑5‑Thinking (High).

- Demonstrates balanced capability across reasoning-intensive and creative benchmarks.

> Bottom line: Open-source AI is now capable of competing directly with closed-source giants.

---

Try Ring‑1T Yourself

Access Ring‑1T via Ant’s Treasure Box:

Model Download Links:

- HuggingFace: https://huggingface.co/inclusionAI/Ring-1T

- ModelScope: https://modelscope.cn/models/inclusionAI/Ring-1T
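A trillion-parameter checkpoint is very large, so it can be useful to inspect the repository before pulling the weights. The sketch below uses `snapshot_download` from the `huggingface_hub` package with the repository name from the HuggingFace link above. It assumes the repository stores its configuration as `*.json` files; the helper name `fetch_config_files` and the local directory are hypothetical.

```python
REPO_ID = "inclusionAI/Ring-1T"  # repository name taken from the HuggingFace link above


def fetch_config_files(local_dir="ring-1t-config"):
    """Download only the small JSON files, not the weight shards.

    Assumption: the repository keeps its configuration in *.json files.
    """
    # Imported lazily so this module loads even without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=REPO_ID,
        allow_patterns=["*.json"],  # everything else, including weights, is skipped
        local_dir=local_dir,
    )
```

Calling `fetch_config_files()` returns the local path of the downloaded snapshot. Dropping `allow_patterns` would fetch the full checkpoint.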

---

Ant’s Push Toward AGI

September Releases

Ant Group launched seven models in September:

- Ring‑1T‑preview

- Ring‑flash‑linear‑2.0

- Ring‑flash‑2.0

- Ling‑flash‑2.0

- Ming‑lite‑omni‑1.5

- Ring‑mini‑2.0

- Ling‑mini‑2.0

Two Trillion‑Parameter Models in October

- Oct 9: Ling‑1T (general-purpose trillion-parameter model)

- Oct 14: Ring‑1T (reasoning-focused trillion-parameter model)

User Feedback:

Ling‑1T has been praised online as outperforming DeepSeek, Gemini, and o3-mini.

---

Technical Foundations

Model Architecture & Training

- Ring‑1T shares the Ling‑1T architecture, with:

  - 20T tokens of high-quality corpora

  - Reinforcement learning tuned for reasoning skills

  - Enhanced decontamination filtering to keep benchmark data out of the training set

- Benchmark improvements over Ring‑1T‑preview:

  - Arena‑Hard‑v2: +8.18%

  - ARC‑AGI‑v1: +5.14%

  - HealthBench: +3.49%

---

Benchmark Achievements

International Mathematical Olympiad (IMO 2025)

- Integrated into AWorld multi-agent framework

- Achieved silver medal level: solved Problems 1, 3, 4, 5 on first attempt

- GitHub project: https://github.com/inclusionAI/AWorld

ICPC 2025 World Finals

- Outperformed Gemini 2.5 Pro in programming tasks

- Reasoning traces open-sourced: https://github.com/inclusionAI/AWorld/tree/main/examples/imo/samples/samples%20from%20Ring-1T

---

Hands-On Testing

Simulation Tasks

- Earth–Mars Flight: 3D three.js simulation with parameter controls

- Physics Simulation: "Neon Collider" — complex collision physics with HTML5 Canvas

- Space Invaders Game: Comparable visuals to Gemini 3, superior to Gemini 2.5

- Cryptarithmetic Puzzle: Solved BASE + BALL = GAMES with systematic enumeration

- Math Problems: Solved indefinite integrals involving symbolic manipulation
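The cryptarithmetic task above can be checked independently. The following is a minimal brute-force sketch, not the model's own reasoning trace: it tries every assignment of distinct digits to the seven letters and keeps those where the sum holds. The function name `solve` is hypothetical.

```python
from itertools import permutations


def solve(addends=("BASE", "BALL"), total="GAMES"):
    """Return every (addend..., total) tuple of numbers that satisfies the puzzle."""
    words = list(addends) + [total]
    letters = sorted(set("".join(words)))
    leading = {w[0] for w in words}  # leading letters may not map to 0
    solutions = []
    for digits in permutations(range(10), len(letters)):
        env = dict(zip(letters, digits))
        if any(env[ch] == 0 for ch in leading):
            continue

        def value(word):
            # Build the number digit by digit from the current assignment.
            n = 0
            for ch in word:
                n = n * 10 + env[ch]
            return n

        if sum(value(w) for w in addends) == value(total):
            solutions.append(tuple(value(w) for w in words))
    return solutions


print(solve())  # [(7483, 7455, 14938)]
```

The search finds a single assignment, 7483 + 7455 = 14938, which matches a column-by-column derivation: the two rightmost columns force L = 5, and the carries then fix the remaining letters.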

---

Creative Writing Experiments

- Generated poetry-style prose themed on AGI

- Wrote a Mount Everest piece in classic Chinese literary style

---

Engineering Innovations

Ling 2.0 Optimizations

- High-sparsity MoE with 1/32 expert activation

- FP8 mixed precision

- MTP acceleration

- 20T tokens of high-quality data, with >40% reasoning-related content
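To make the 1/32 activation ratio concrete, here is a small sketch of top-k expert routing for one token. Only the ratio comes from the list above; the expert count of 256, the softmax gating, and the function name `route_token` are assumptions for illustration and may differ from the actual Ling 2.0 router.

```python
import math
import random


def route_token(router_logits, k):
    """Select the k highest-scoring experts and softmax-normalise their gate weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    norm = sum(exps)
    return {i: e / norm for i, e in zip(top, exps)}


NUM_EXPERTS = 256                     # hypothetical expert count
ACTIVE_EXPERTS = NUM_EXPERTS // 32    # 1/32 activation gives 8 experts per token

rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in router scores
gates = route_token(logits, ACTIVE_EXPERTS)
print(len(gates), "of", NUM_EXPERTS, "experts active")
```

Each token therefore runs through only a small fraction of the expert parameters, which is how a trillion-parameter model keeps its per-token compute far below that of a dense model of the same size.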

Training Innovations

- IcePop Algorithm — stabilizes RL training in MoE models with double-sided clipping & masking

- ASystem RL Platform — scales RL training from 10B to 1T parameters

- AReaL Framework — open-source fully asynchronous RL training for agents

  - GitHub: https://github.com/inclusionAI/AReaL
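The phrase "double-sided clipping & masking" can be illustrated with a toy example. The sketch below is one plausible reading, not the published IcePop implementation: for each token it compares the probability under the training engine with the probability under the inference engine, and drops the token from the loss when the ratio falls outside a band. The bounds `low` and `high`, the function names, and the simplified policy-gradient loss are all assumptions.

```python
import math


def icepop_style_weight(logp_train, logp_infer, low=0.5, high=5.0):
    """Return the train/infer probability ratio, or 0.0 if it leaves [low, high]."""
    ratio = math.exp(logp_train - logp_infer)
    return ratio if low <= ratio <= high else 0.0


def masked_policy_loss(logps_train, logps_infer, advantages, low=0.5, high=5.0):
    """Toy policy-gradient loss in which out-of-band tokens contribute nothing."""
    weights = [icepop_style_weight(t, i, low, high)
               for t, i in zip(logps_train, logps_infer)]
    # In a real framework the weights would be treated as constants (no gradient).
    total = -sum(w * a * lp for w, a, lp in zip(weights, advantages, logps_train))
    return total / max(len(weights), 1), weights


# Token 1: engines agree. Token 2: ratio too high. Token 3: ratio too low.
train = [math.log(0.5), math.log(0.9), math.log(0.1)]
infer = [math.log(0.5), math.log(0.1), math.log(0.9)]
loss, weights = masked_policy_loss(train, infer, [1.0, 1.0, 1.0])
print(weights)
```

Masking on both sides means that tokens where the two engines disagree strongly in either direction are excluded, rather than being allowed to dominate the gradient.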

---

Community & Ecosystem Impact

Platforms such as the AiToEarn official site integrate:

- AI content generation

- Multi-platform publishing (Douyin, Kwai, Bilibili, Facebook, Instagram, YouTube, X, etc.)

- Analytics & monetization tools

- AI model ranking: https://rank.aitoearn.ai

---

Conclusion

Ring‑1T signals:

- A new tier for open-source AI reasoning

- Cooperative synergy between large-scale models and creator platforms

- Technical stability through innovations like IcePop & ASystem

AGI is approaching — and with Ring‑1T, open-source is ready.

---

References:

- https://x.com/AntLingAGI

- https://ringtech.notion.site/icepop

- https://ringtech.notion.site/Small-Leak-Can-Sink-a-Great-Ship-Boost-RL-Training-on-MoE
