Turing Award Winner LeCun Warns Meta: My 40 Years in AI Show Large Models Are a Dead End

Yann LeCun Parts Ways with Meta: From LLM Skeptic to World Model Visionary

Editor: KingHZ

Source: XinzhiYuan Report

---

Overview

Turing Award winner Yann LeCun, Meta’s Chief AI Scientist and founder of its Fundamental AI Research (FAIR) lab, is reportedly expected to leave the company soon. He argues that large language models (LLMs) are a dead end and is championing world models as the future of AI.

---

Why LeCun Is Leaving Meta

Loss of Influence

- Organizational Changes: Meta recently appointed Alexandr Wang as Chief AI Officer and Shengjia Zhao as Chief Scientist, and both roles rank above LeCun’s.

- Strategic Differences: Zhao is praised for scaling LLMs, while LeCun openly advises PhD students not to work on them.

- Team Reorganizations: Meta’s AI division has gone through four major reorganizations in six months, bringing layoffs, budget cuts, and reduced influence for FAIR.

Shift from Research to Productization

- FAIR’s Past: Once an “ivory tower” for exploratory research, free of product pressure.

- Current Focus: Under Wang, Meta is hiring highly paid talent to ship quickly and commercialize its AI work.

---

LeCun’s Career Highlights

The Pioneer

- Early Work: Collaborated with Geoffrey Hinton before Hinton became famous, then worked at Bell Labs on handwriting recognition and document digitization.

- Physics Enthusiast: Trained as an electrical engineer, but learned physics from physicists and textbooks.

- Academic Roles: Computer science professor at NYU and founding director of its Center for Data Science.

Facebook to Meta

- Joined Facebook in 2013 at Zuckerberg’s invitation to create a new AI lab.

- Led FAIR for 4 years, then became Chief AI Scientist in 2018.

- Shared the 2018 Turing Award with Geoffrey Hinton and Yoshua Bengio.

---

Turning Away from LLMs

Critical Views

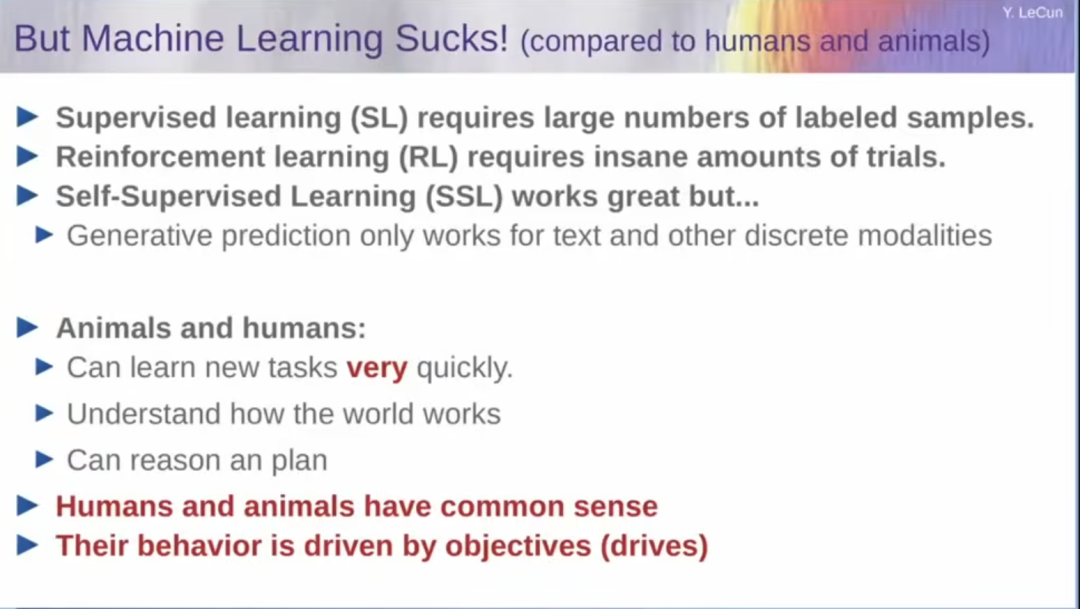

LeCun believes:

- Current LLMs are “over the hill”: a distraction and a dead end.

- Within 3–5 years, world models will be the dominant AI architecture.

> “Most human knowledge is not linguistic. These systems can never reach human-level intelligence unless you completely change their architectures.”

---

Understanding World Models

What They Are

- Systems that learn the rules of the world via sensory input (vision, touch).

- LLMs, by contrast, learn primarily from text.

Data Perspective

- By LeCun’s estimate, a human would need roughly 100,000 years to read the text used to train an LLM.

- A 4-year-old child has already taken in about 50× more sensory data than the largest LLMs were trained on.
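
The arithmetic behind estimates like these can be sketched in a few lines. The snippet below is a back-of-envelope illustration only: every constant (corpus size, bytes per token, reading speed, waking hours, optic nerve fiber count, bytes per fiber) is an assumed round number chosen for illustration, not a figure taken from this article. With these assumptions the results land in the same order of magnitude as the claims above.

```python
# Back-of-envelope comparison of text data vs. a child's visual input.
# Every constant here is an ASSUMED round number, not sourced from the article.

TOKENS_IN_CORPUS = 1e13          # assumed LLM training set size, in tokens
BYTES_PER_TOKEN = 2              # assumed average storage per token
WORDS_PER_TOKEN = 0.75           # assumed average words per token
READING_WPM = 250                # assumed reading speed, words per minute
READING_HOURS_PER_DAY = 8        # assumed hours spent reading each day

WAKING_HOURS_BY_AGE_4 = 16_000   # assumed waking hours in the first four years
OPTIC_FIBERS = 2e6               # assumed nerve fibers across both eyes
BYTES_PER_FIBER_PER_SEC = 10     # assumed data rate carried by each fiber


def years_to_read(tokens: float) -> float:
    """Years a person would need to read `tokens` worth of text."""
    words = tokens * WORDS_PER_TOKEN
    words_per_year = READING_WPM * 60 * READING_HOURS_PER_DAY * 365
    return words / words_per_year


def llm_bytes(tokens: float) -> float:
    """Approximate size of the text corpus in bytes."""
    return tokens * BYTES_PER_TOKEN


def child_visual_bytes(waking_hours: float) -> float:
    """Approximate visual data reaching the brain over `waking_hours`."""
    seconds = waking_hours * 3600
    return seconds * OPTIC_FIBERS * BYTES_PER_FIBER_PER_SEC


if __name__ == "__main__":
    years = years_to_read(TOKENS_IN_CORPUS)
    ratio = child_visual_bytes(WAKING_HOURS_BY_AGE_4) / llm_bytes(TOKENS_IN_CORPUS)
    print(f"Reading time: about {years:,.0f} years")
    print(f"Child vs. LLM data ratio: about {ratio:.0f}x")
```

Changing any single assumption by a factor of two shifts the result by the same factor, so the output is best read as an order-of-magnitude argument rather than a measurement.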

Objective-Driven AI

- Goal-oriented systems trained on sensor/video data.

- Can represent consequences of actions and update knowledge in real time.
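
As a rough illustration of what "representing the consequences of actions and updating in real time" could mean, here is a deliberately tiny sketch. It is not LeCun's architecture: the one-dimensional state, the hidden gain of 2.0, the class name `OnlineWorldModel`, and the learning rate are all hypothetical choices made for this example. The model predicts the next state for a given action and corrects itself after each observed transition.

```python
# Toy "world model" that predicts the effect of an action and learns online.
# All names and numbers are hypothetical and chosen only for illustration.


def true_environment(state: float, action: float) -> float:
    """The real dynamics, unknown to the model: each action moves the state by 2x."""
    return state + 2.0 * action


class OnlineWorldModel:
    def __init__(self, gain_guess: float = 0.0, learning_rate: float = 0.5):
        self.gain = gain_guess        # current belief about how strongly actions act
        self.lr = learning_rate

    def predict(self, state: float, action: float) -> float:
        """Predicted consequence of taking `action` in `state`."""
        return state + self.gain * action

    def update(self, state: float, action: float, next_state: float) -> float:
        """Correct the belief from one observed transition; return the prediction error."""
        error = next_state - self.predict(state, action)
        if action != 0:
            # Normalized correction: move the gain a fraction of the way to the
            # value that would have predicted this transition exactly.
            self.gain += self.lr * error / action
        return error


def learn_gain(steps: int) -> OnlineWorldModel:
    """Interact with the environment for `steps` transitions, updating after each."""
    model = OnlineWorldModel()
    state = 0.0
    actions = [1.0, -0.5, 2.0]
    for t in range(steps):
        action = actions[t % len(actions)]
        next_state = true_environment(state, action)
        model.update(state, action, next_state)
        state = next_state
    return model


if __name__ == "__main__":
    model = learn_gain(20)
    print(f"Learned gain: {model.gain:.4f}")
```

In this noise-free toy the gap between the believed and the true gain halves with every update. A real system would learn a far richer model from video or sensor streams, but the predict, observe, correct loop is the same idea.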

---

Why He Believes in World Models

The Wearable Future

- Envisions AI in wearables, enabling human-like interaction.

- Notes that current LLMs can’t replicate the intelligence of even a cat or mouse.

Mental Models Example

- Rotating-cube thought experiment: humans can picture a cube rotating in their mind; LLMs cannot.

- The sensory data a child takes in far exceeds what text-only training can supply.

---

Building the Vision

LeCun’s Plan

- Maintain an abstract state representation of the world.

- Predict the state that results from a sequence of actions, rather than the next token.

- Enable hierarchical planning and reasoning.

- Improve safety via built-in control mechanisms.
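
The plan above can be made concrete with a minimal sketch. The code below is an illustrative toy, not LeCun's proposal: the grid world, the action set, the brute-force search, and the `forbidden` guardrail are all assumptions introduced here. It keeps an abstract state (a grid position), uses a model to predict the states reached by each candidate action sequence, scores the final state against a goal, and rejects any sequence that passes through a forbidden state. Hierarchical planning is not covered.

```python
# Toy planner: choose the action sequence whose predicted final state is
# closest to the goal. The grid world and guardrail are hypothetical examples.
from itertools import product

ACTIONS = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}


def world_model(state, action):
    """Predict the next abstract state (a grid cell) after one action."""
    dx, dy = ACTIONS[action]
    return (state[0] + dx, state[1] + dy)


def rollout(state, actions):
    """Predict every state visited while executing `actions` from `state`."""
    visited = []
    for action in actions:
        state = world_model(state, action)
        visited.append(state)
    return visited


def cost(final_state, goal):
    """Objective: Manhattan distance between the predicted final state and the goal."""
    return abs(final_state[0] - goal[0]) + abs(final_state[1] - goal[1])


def plan(start, goal, horizon, forbidden=frozenset()):
    """Search all action sequences of length `horizon` (must be >= 1)."""
    best_plan, best_cost = None, float("inf")
    for candidate in product(ACTIONS, repeat=horizon):
        visited = rollout(start, candidate)
        if any(state in forbidden for state in visited):
            continue  # guardrail: never pass through a forbidden state
        candidate_cost = cost(visited[-1], goal)
        if candidate_cost < best_cost:
            best_plan, best_cost = candidate, candidate_cost
    return best_plan, best_cost


if __name__ == "__main__":
    actions, final_cost = plan((0, 0), (2, 1), horizon=3, forbidden={(1, 0)})
    print(actions, final_cost)
```

Exhaustive search grows exponentially with the horizon, so it only works for toy problems. The point of the sketch is the division of labor: the model predicts outcomes, the objective scores them, and the guardrail is enforced during planning rather than added afterward.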

Challenges

- Multi-year, potentially billion-dollar investment.

- Moonshot ambition comparable to ChatGPT’s breakthrough.

---

LeCun’s Final Thoughts at Meta

On AI’s Current Limitations

- No common sense or deep reasoning.

- Poor at long-term planning.

Path Forward

- New Architectures: Develop internal models of the world.

- Self-Supervised Learning: Scale intelligence without heavy labeling.

- Open Research Culture: Accelerate innovation through collaboration.

---

Implications & Ethics

As AI approaches real-world autonomy:

- Need for fairness and bias mitigation.

- Explainability to ensure trust.

- Responsible deployment to avoid misuse.

---

Bottom line:

Yann LeCun is preparing to leave Meta, not because he is stepping away from AI, but because he is doubling down on his conviction that world models, not LLMs, will define the next era of artificial intelligence.
