# Meta’s Llama Journey — From Glory to Crisis

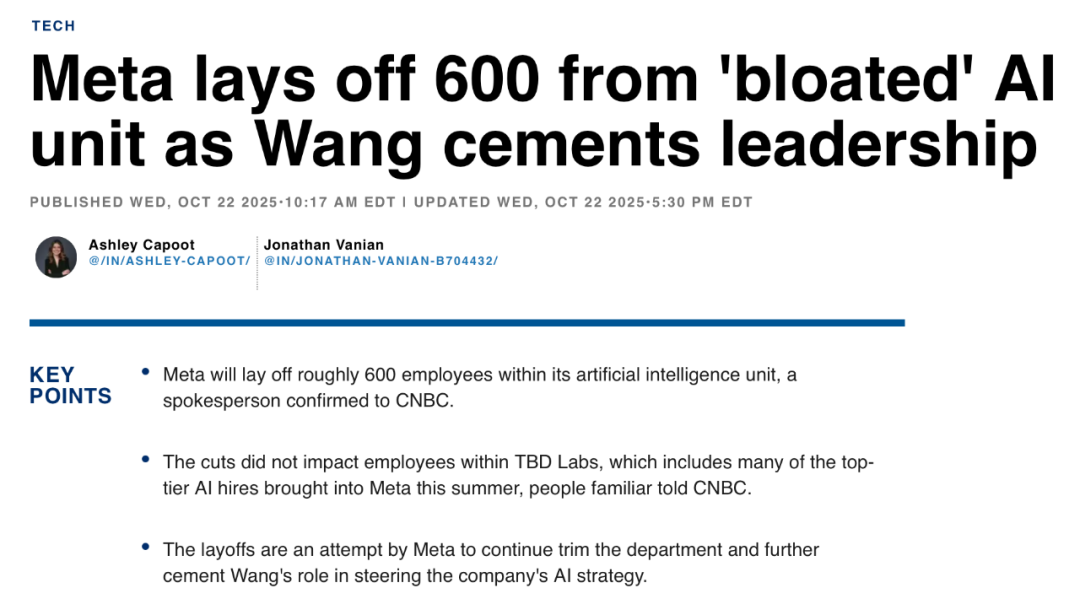

At the **end of October 2025**, Meta’s AI division announced **layoffs of 600 positions**, including the research director of a core department. Senior executives overseeing AI operations either resigned or were sidelined. Even **Turing Award winner Yann LeCun** was rumored to be in a precarious position.

**Contradiction at Meta:** Mark Zuckerberg has been offering **multi-million-dollar annual salaries** to recruit top AI talent, yet he is **laying off hundreds of employees**.

We talked to:

- **Tian Yuandong** — former FAIR research director and AI scientist at Meta

- **Gavin Wang** — former Llama 3 post-training engineer at Meta

- A seasoned Silicon Valley HR expert

- Several anonymous insiders

Together, we retraced Meta’s Llama open-source saga:

- Why did **Llama 3** impress the industry, while **Llama 4**, just a year later, became a disappointment?

- Was Meta’s open-source strategy flawed from the start?

- In today’s competitive AI landscape, can a **utopian AI research lab** survive?

---

## 01 — From FAIR to GenAI

**Meta’s Decade-Long AI Architecture**

At the end of **2013**, Zuckerberg started building Meta's AI team. That year, Google acquired Geoffrey Hinton's team, while Meta (then Facebook) brought in Yann LeCun, later one of the "Turing Award Big Three", as its AI lead.

When joining, Yann LeCun set **three conditions**:

1. Stay based in New York.

2. Retain his NYU professorship.

3. Ensure open research output — all work publicly released, all code open-sourced.

From the start, **Meta embraced “open-source”**.

LeCun founded **FAIR** (originally Facebook AI Research, later renamed **Fundamental AI Research**), dedicated to frontier AI research.

> **Tian Yuandong**: FAIR focuses on new ideas, algorithms, architectures — without immediate product demand. The aim is long-term breakthroughs.

Alongside FAIR, Meta created **GenAI** — responsible for AI integration into products:

- **Llama** open-weight models

- AI in Meta’s apps

- Infrastructure & datacenters

- AI search, enterprise services, video generation

FAIR (research) and GenAI (product) were meant to balance each other:

- Research breakthroughs → stronger products

- Product success → funding for research

> **Tian Yuandong**: FAIR gives GenAI core ideas; GenAI applies them to next-generation models.

---

### The Ideal vs. Reality

Balancing pure research and product delivery is tough.

---

## 02 — Llama: “The Light of Open Source”

### 2.1 Llama 1 (Feb 2023)

Meta’s first **open-weight** large language model:

- Sizes: **7B, 13B, 33B, 65B**

- 13B claimed to beat GPT‑3 (175B) on some benchmarks

Shortly after launch, **weights leaked** on 4chan, sparking debate. It wasn’t true open-source:

- **Open weights** = trained parameters available for loading & fine-tuning

- Training data and training code were not released, and the license limited use to noncommercial research

---

### 2.2 Llama 2 (July 2023)

Partnership with **Microsoft**:

- 7B, 13B, 70B

- **Free for commercial use** — more permissive license than Llama 1

- *Wired* called it a milestone for the open approach against the closed-model giants

---

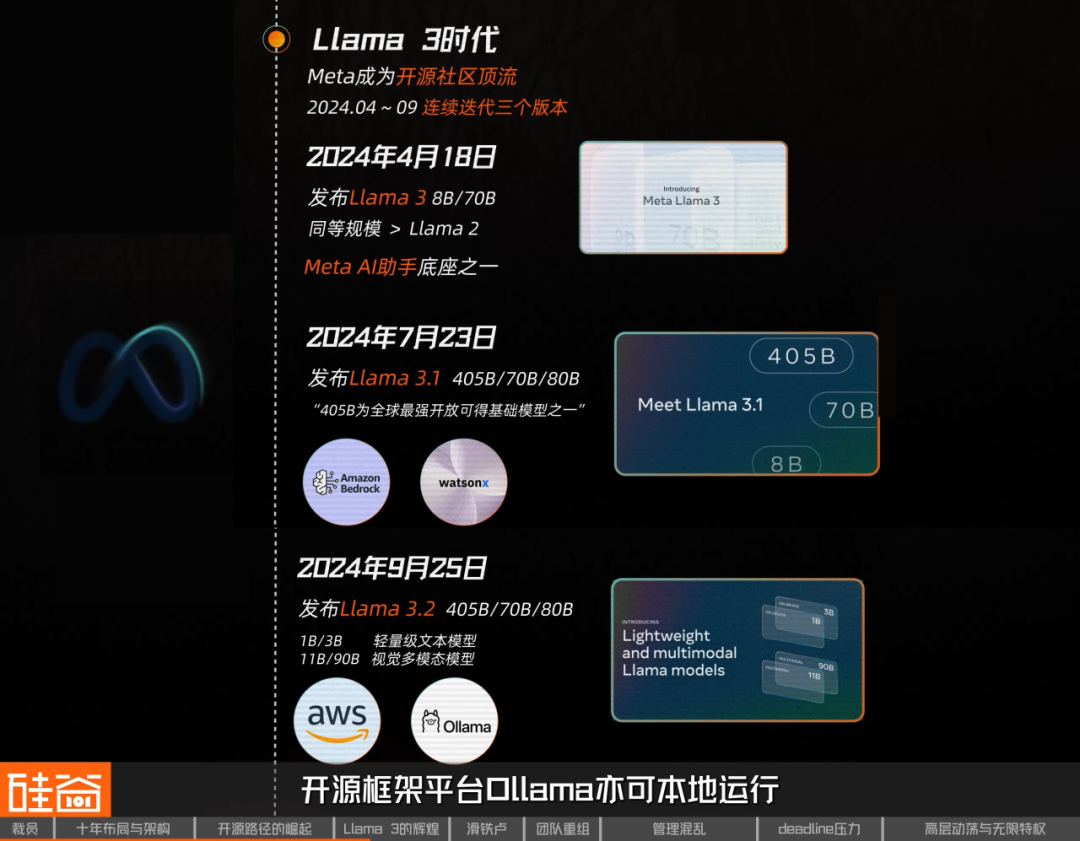

### 2.3 Llama 3 (April–Sep 2024)

Meta became **the open-source leader**:

1. **April 18** — Llama 3: 8B & 70B variants, improved performance; core of Meta AI assistant

2. **July 23** — Llama 3.1: 405B, 70B, 8B; billed as the most capable openly available foundation model; integrated with AWS Bedrock and IBM watsonx

3. **September 25** — Llama 3.2: compact and multimodal; lightweight text models (1B, 3B) and vision models (11B, 90B)

> **Gavin Wang**: The GenAI team worked at “light speed.” The Llama Stack ecosystem thrived.

Internal pride was high: **Meta** was seen as *the* top-tier company still releasing open models.

---

### 2.4 Llama 4 Failure (Apr 2025)

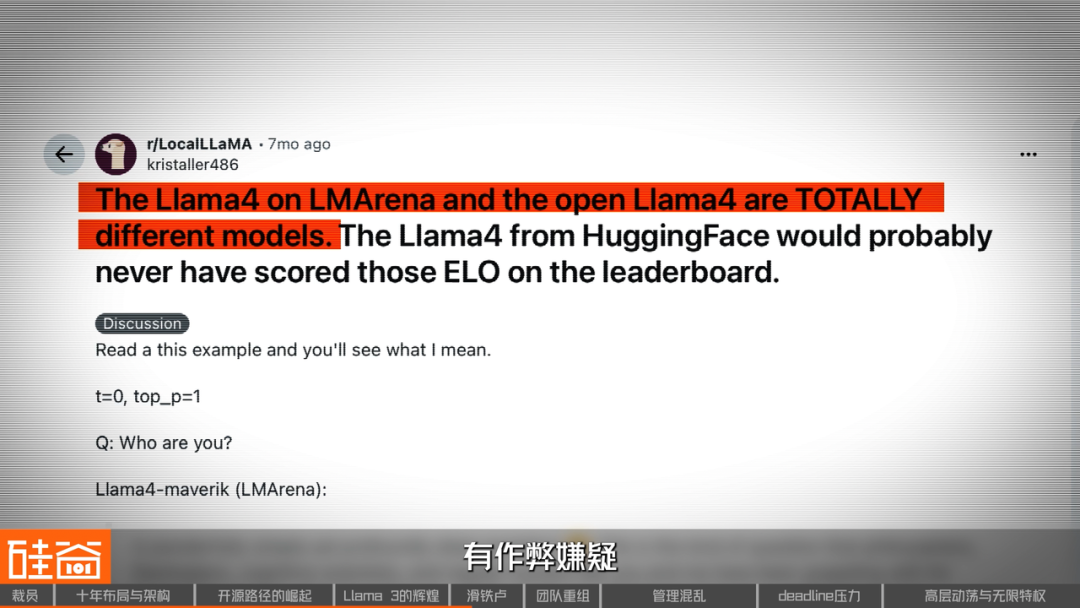

The **Scout** and **Maverick** versions touted multimodal and long-context jumps; Maverick ranked #2 on LMArena.

However, backlash followed:

- Accusations of **benchmark gaming**: the variant ranked on LMArena was an unreleased experimental version tuned for that leaderboard

- Delayed release of the larger "Behemoth" model

- PR damage and the reported shelving of Behemoth

---

## 03 — The Broken Balance

**Research (FAIR) vs. Product (GenAI)**

**FAIR**: led by Yann LeCun (a skeptic of LLM hype), jointly with Joelle Pineau from 2023.

**GenAI**: led by Ahmad Al‑Dahle, focused on product integration: the metaverse, AI glasses, and the meta.ai chat assistant.

After **Llama 3**, the focus shifted to **multimodal productization**, at the expense of advances in model reasoning.

---

### Missed Breakthroughs

- **Sep 2024**: OpenAI launched its o1 models with chain-of-thought reasoning

- **Dec 2024 to Jan 2025**: China's DeepSeek released open-weight MoE models (V3, then R1) that combined low training cost with strong reasoning

FAIR researchers were working on reasoning, but this **frontier work didn’t enter Llama 4’s build** in time.

Resource conflicts, together with leaders whose backgrounds were in **traditional infrastructure and computer vision** rather than NLP, limited the technical depth of decision-making.

DeepSeek's debut caused **internal disruption**, and a FAIR team under Tian Yuandong was pulled in to act as a **"firefighting team"** for Llama 4.

---

### Deadline Pressure

> **Tian Yuandong**: Fixed release dates under intense pressure → rushed experiments, poor quality control. Discussion about postponement was off-limits.

---

## 04 — Alex Wang’s “TBD” Strike Team

Meta parachuted in **28-year-old Alexandr Wang** (former Scale AI CEO) to lead the **TBD team**:

- **Sweeping privileges**: exemption from performance reviews, authority to override VP-level messages, and mandatory TBD review of research papers

- **Direct report to Zuckerberg**; FAIR now reports to Alex

**Alex’s plan**:

1. Merge TBD + FAIR for core research

2. Tie product development closely to models

3. Create central GPU/datacenter infrastructure

---

## Summary — Lessons from Llama

- **Llama 1–3**: Meta led in open-weight large models, energizing the open-source AI community

- **Post-Llama 3**: Leadership focused on product integration, strong in multimodal, weak in reasoning

- **Llama 4**: Rushed release under fixed timelines, missed reasoning innovation, PR fallout

The **balance between research & product broke** — echoing declines seen in **Bell Labs, IBM Watson, HP Labs**.

---

## Reflection

In today's AI race, sustaining both **innovation and commercialization** is critical.

For Meta, the restructuring may be **its last chance** to align research and product and compete in the frontier-model race.

> **[This episode does not constitute investment advice]**