Unveiling Six Engineers’ AI "Mastery Secrets"

Inside the AI Workflows of Every’s Six Engineers

October 27, 2025

At Every, each engineer has built a personalized AI-powered workflow suited to their style and preferences.

Writing software—like writing prose—is a creative act, and creativity is inherently messy. My own writing often bounces between Google Docs and whichever large language model (LLM) I’m relying on most (currently GPT‑5).

But how exactly do engineers build and ship products with AI every day?

Daily standups and quick Vibe Checks give me hints, but they’re only glimpses. To understand the full process, I asked six engineers to explain their real tech stacks and workflows—the systems that let a six‑person team run four AI products, a consulting business, and a newsletter with 100,000+ readers.

---

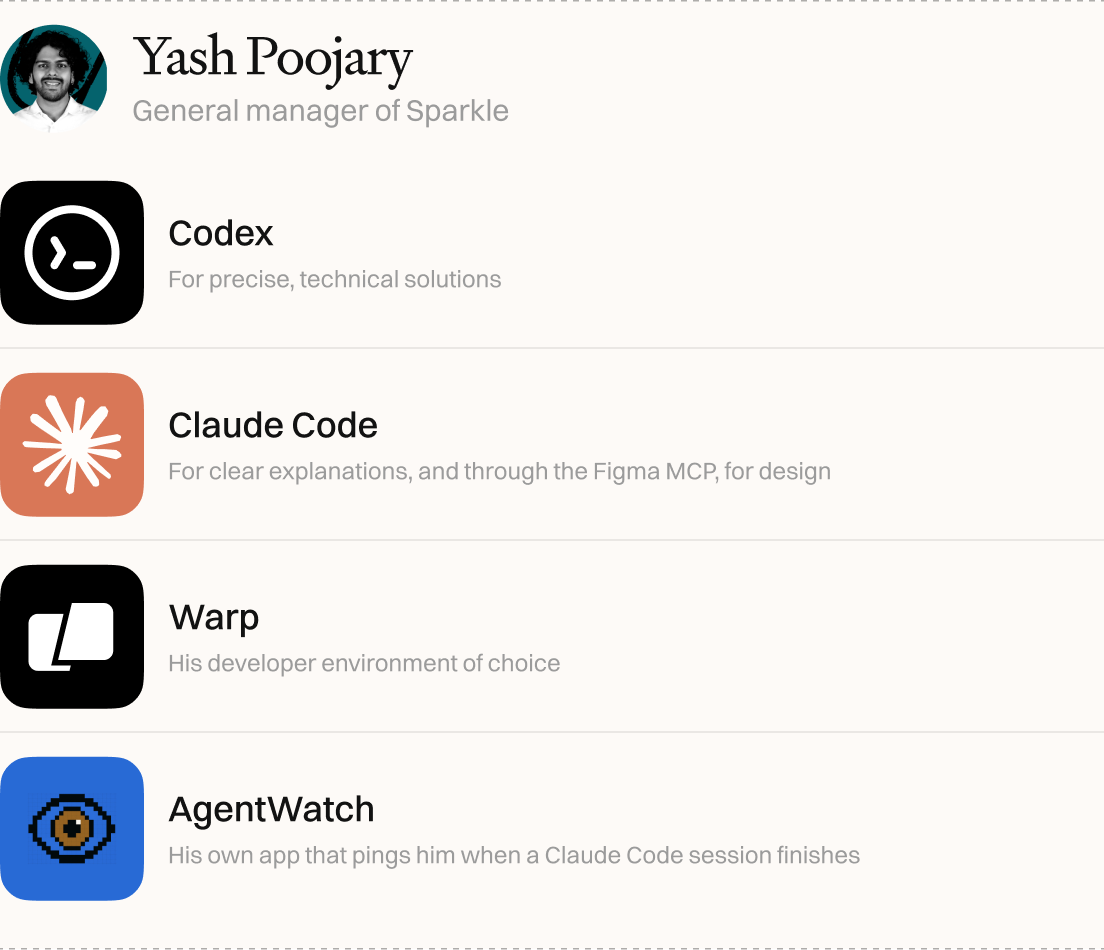

1. Yash Poojary – General Manager of Sparkle

Core Workflow

- Hardware: Recently upgraded to a dual‑machine setup → Mac Studio + laptop.

- AI Coding Agents: Runs Claude Code and Codex in parallel to compare solutions.

- Design Integration: Uses Figma MCP → AI reads the design system directly (colors, spacing, components) and converts it to code.

- Terminal Tool: Warp for command-line work, paired with a “learning document” that continuously feeds context to the AI.

Guardrails & Productivity Strategy

- One main task + several smaller background tasks per day.

- Morning: Focused execution, only Codex + Claude Code.

- Afternoon: Tool exploration & experimentation.

- Built AgentWatch → notifies when Claude sessions finish.
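The article doesn't describe how AgentWatch works. The code below is only a hypothetical sketch of the same idea, not AgentWatch itself. It assumes an agent session can be treated as a long-running command: run it, wait for it to exit, then signal completion. Here the signal is just a terminal bell and a status line; the `watch` function name is illustrative.

```python
import subprocess
import sys
import time


def watch(command: list[str]) -> int:
    """Run a command (e.g. whatever starts an agent session) and notify on exit.

    Illustrative sketch only; not AgentWatch's actual implementation.
    """
    start = time.monotonic()
    # Block until the command finishes; its output streams to this terminal.
    result = subprocess.run(command)
    elapsed = time.monotonic() - start
    # "\a" rings the terminal bell; a desktop notifier could be swapped in here.
    print(
        f"\a[watch] {' '.join(command)} finished with exit code "
        f"{result.returncode} after {elapsed:.1f}s",
        file=sys.stderr,
    )
    return result.returncode


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python watch.py <command> [args...]
    sys.exit(watch(sys.argv[1:]))
```

Returning the exit code lets the wrapper sit transparently in scripts: callers can still tell whether the wrapped session succeeded.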

---

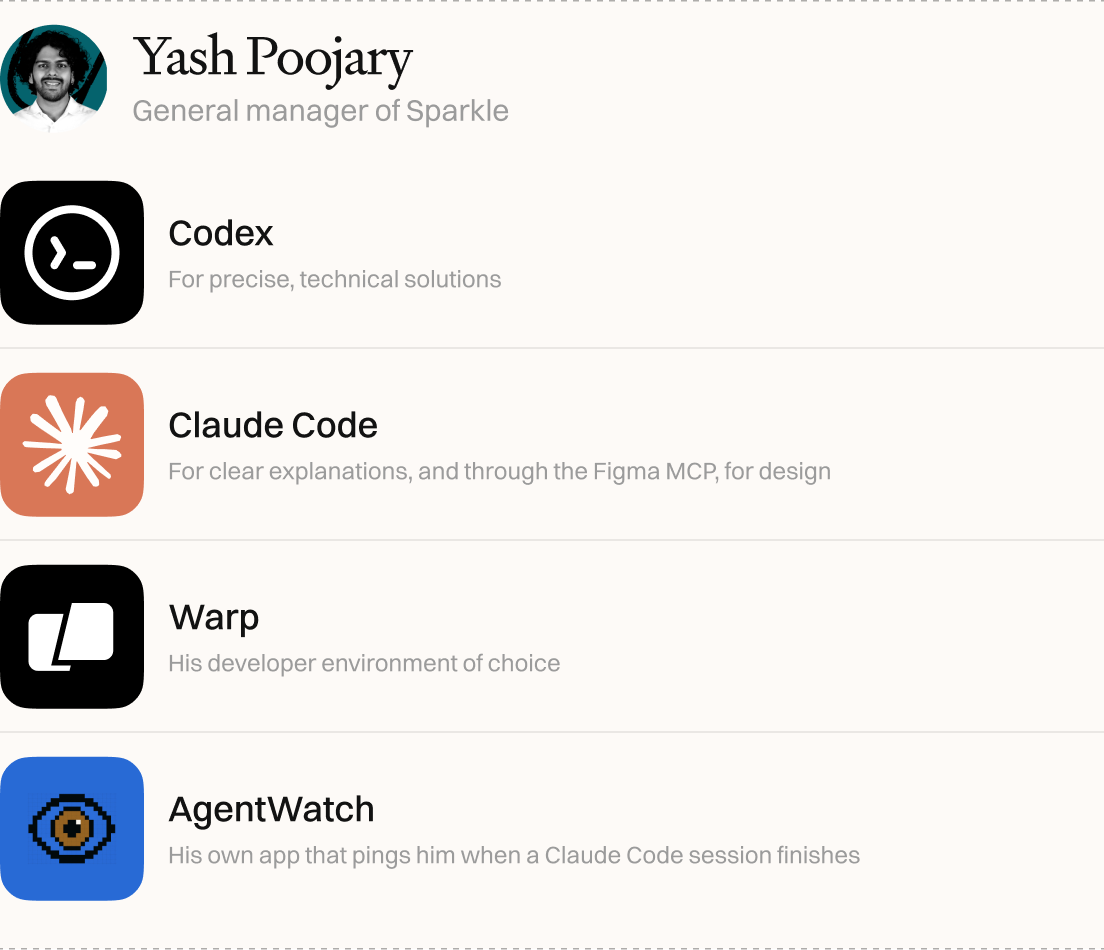

2. Kieran Klaassen – GM of Cora

Planning & Execution Flow

- Every feature starts with a plan generated by Claude Code.

- Categorizes tasks by size:

  - Small: Single-shot

  - Medium: Several files + review step

  - Large: Manual coding, deep investigation

- Context via Context7 MCP → fetches verified documentation.

- Plans are pushed to GitHub → a “work” command then triggers an AI coding agent to carry out the task.
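The size-based triage in Kieran's flow can be sketched as a small routing rule. This is a hypothetical illustration, not his actual tooling. The `Task` fields and the five-file threshold for "medium" are assumptions. The source says only that medium tasks span several files and large ones need manual coding and deep investigation.

```python
from dataclasses import dataclass
from enum import Enum


class Size(Enum):
    SMALL = "small"
    MEDIUM = "medium"
    LARGE = "large"


@dataclass
class Task:
    title: str
    files_touched: int          # estimated number of files the change touches
    needs_investigation: bool   # unclear root cause or open design questions


# Hypothetical threshold; tune to the codebase.
MAX_MEDIUM_FILES = 5

STRATEGY = {
    Size.SMALL: "single shot: hand the plan to the agent in one pass",
    Size.MEDIUM: "agent edits several files, followed by a review step",
    Size.LARGE: "manual coding with deep investigation",
}


def classify(task: Task) -> Size:
    """Bucket a task using the (assumed) size heuristics above."""
    if task.needs_investigation or task.files_touched > MAX_MEDIUM_FILES:
        return Size.LARGE
    if task.files_touched > 1:
        return Size.MEDIUM
    return Size.SMALL


def route(task: Task) -> str:
    """Return the handling strategy for a task."""
    return STRATEGY[classify(task)]
```

For example, `route(Task("fix typo", 1, False))` selects the single-shot strategy. Any task flagged as needing investigation goes straight to manual work, whatever its size.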

Toolset

---

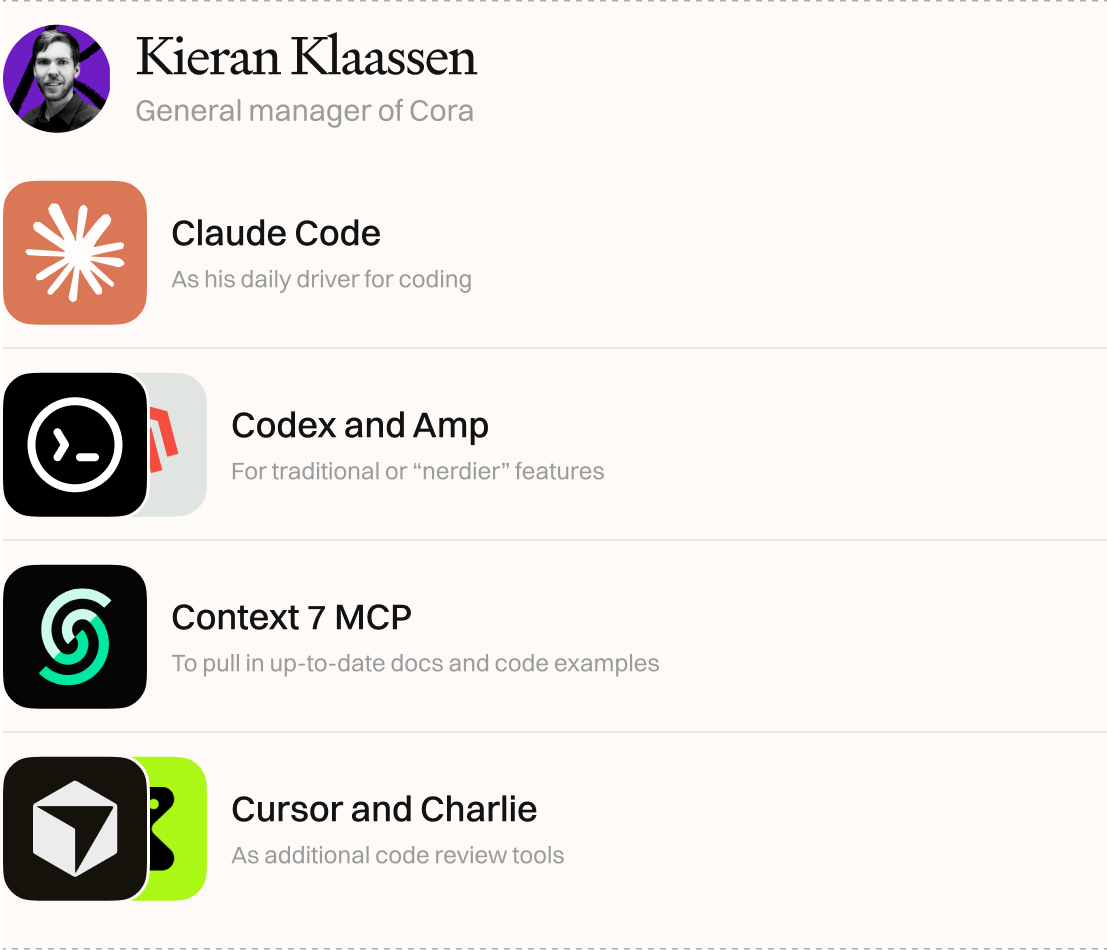

3. Danny Aziz – GM of Spiral

“Droid‑Centric” Workflow

- Uses Droid CLI → runs GPT‑5 Codex + Anthropic models concurrently.

- Does 70% of his work in Droid.

- Planning focuses on second- and third-order effects of technical decisions.

- Minimal setup: usually one monitor; adds a second only for design work.

Key Tools

---

4. Naveen Naidu – GM of Monologue

Central Source of Truth

- Project tracking in Linear → every ticket links back to the original request.

- Small fixes: Context gathered in Linear → copied into Codex Cloud → run as an AI task.

- Large features: Writes a `plan.md` locally in Codex CLI → the plan governs the iterative AI work.

Execution

- Codex Cloud for brainstorming, background tasks, and discovering edge cases.

- Codex CLI + Ghostty for serious development.

- Uses Xcode for the macOS app and Cursor for backend work.

- Error tracking via Sentry.

Code Review Process

- `/review` command in Codex for an automated scan.

- Manual before/after comparison.

- Sentry metrics checked to confirm the change actually reduced bugs.
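The Sentry check at the end of this review loop comes down to simple arithmetic on error counts. This sketch does not call Sentry's API. It assumes event and session counts have already been exported for equal-length windows before and after a deploy. The numbers are made up for illustration. Normalizing by traffic keeps a quieter week from being mistaken for a fix.

```python
def error_rate(events: int, sessions: int) -> float:
    """Errors per 1,000 sessions; normalizing by traffic avoids false wins."""
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    return 1000 * events / sessions


def relative_change(before: float, after: float) -> float:
    """Fractional change from before to after (negative means fewer errors)."""
    if before == 0:
        raise ValueError("baseline rate is zero; change is undefined")
    return (after - before) / before


# Hypothetical counts for equal-length windows around a deploy.
before = error_rate(events=120, sessions=40_000)   # 3.0 per 1k sessions
after = error_rate(events=45, sessions=30_000)     # 1.5 per 1k sessions
print(f"{relative_change(before, after):+.0%}")    # prints "-50%"
```

Raw counts alone would show a 62.5% drop here. Part of that drop is simply lower traffic, which is why the sketch compares rates rather than totals.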

Voice Input

- Uses Monologue, the voice-to-text tool he built, to dictate prompts, plans, and task updates.

---

5. Andrey Galko – Engineering Lead

Simplicity First

- Avoids chasing every new AI tool.

- Formerly loyal to Cursor; switched to Codex after Cursor's pricing changes.

- GPT‑4.5 and GPT‑5 significantly improved the quality of AI-generated code and how closely it follows style conventions.

Observations

- With GPT‑5, Codex excels at both logic and UI.

- Claude is still better for highly creative UI, but is sometimes too creative.

---

6. Nityesh Agarwal – Cora Engineer

Compact, One‑Tool Workflow

- Single device: MacBook Air M1.

- Primary tool: Claude Code (Max plan) → every coding task is planned in depth before work starts.

- Avoids running multiple AI agents at once; focuses on one task until it is done.

Collaboration

- Pull requests opened by Claude Code are reviewed line by line by human teammates.

- Claude Code pulls the team's review comments from GitHub into the terminal and makes the revisions.

---

Cross‑Team Observations

Across these engineers, common themes emerge:

- Structured planning → AI execution → iterative review cycles.

- Balancing focus time and exploration time prevents productivity loss.

- Integrating design, error tracking, and source control into the AI loop increases output quality.

- Terminal-based tools (the Warp and Ghostty terminals, Droid CLI) are preferred for speed and control.

---
