Understanding AI-Native Application Development Platforms Through Dify’s Architecture Design

LLM AI-Native Application Platforms — Architectural Analysis of Tasking AI & Dify

---

Table of Contents

- Platform Positioning

- Core Capabilities

- System Architecture

- AI Task Orchestration Execution Engine

- Summary

- Outlook

---

This article analyzes the architectural designs of Tasking AI and Dify, two leading LLM AI-native application development platforms.

It covers LLM integration, plugin and tool extension, AI assistant workflows, and complex orchestration engines, and it looks ahead to trends in system architecture and AI application development models.

---

Platform Positioning

LLM applications are increasingly common, but mature, high-value deployments are still concentrated in a few areas, mainly AI assistants and knowledge bases.

Platforms can be broadly categorized into:

- General-purpose LLM application platforms, for product and business developers

  - Examples: Dify, Coze, Tasking AI

- LLM development frameworks, for AI developers

  - Examples: LangChain, OpenAI Assistants API

Tasking AI and Dify provide all-in-one environments for developing and deploying AI-native apps, with ease of use as the priority.

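In contrast, a framework such as LangChain often leaves state management and vector storage to the developer, which inflates complexity and maintenance costs. OpenAI’s Assistants API does manage conversation state and retrieval on the server side, but it ties the application to OpenAI’s own models.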

> 📌 Example: AiToEarn is an open-source global AI content monetization platform that supports multi-platform publishing (Douyin, Instagram, YouTube, etc.) and integrates content generation tools, analytics, and model rankings. It combines AI creativity with monetization while fitting naturally into these AI-native workflows.

---

Core Capabilities

Despite differences, modern LLM platforms share these foundational capabilities:

1. Stateful & Stateless App Support

- Tasking AI: Uses three-tier storage (local memory, Redis, Postgres), so developers do not have to implement state management or vector storage themselves.

- Dify: Integrates with multiple vector databases and supports dynamic switching.
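The three-tier idea can be illustrated with a minimal read-through sketch. This is not Tasking AI's actual code: the `TieredStore` class and its method names are hypothetical, and plain dictionaries stand in for Redis and Postgres so the example runs without any services.

```python
from typing import Dict, Optional


class TieredStore:
    """Read-through lookup across three tiers, fastest first.

    Hypothetical sketch: dicts stand in for Redis and Postgres.
    """

    def __init__(self) -> None:
        self.memory: Dict[str, str] = {}    # tier 1: in-process cache
        self.redis: Dict[str, str] = {}     # tier 2: stand-in for Redis
        self.postgres: Dict[str, str] = {}  # tier 3: stand-in for Postgres

    def put(self, key: str, value: str) -> None:
        # Write to the durable tier first, then refresh both caches.
        self.postgres[key] = value
        self.redis[key] = value
        self.memory[key] = value

    def get(self, key: str) -> Optional[str]:
        # Check the fastest tier first and backfill on a miss.
        if key in self.memory:
            return self.memory[key]
        if key in self.redis:
            self.memory[key] = self.redis[key]
            return self.memory[key]
        if key in self.postgres:
            value = self.postgres[key]
            self.redis[key] = value
            self.memory[key] = value
            return value
        return None


store = TieredStore()
store.put("chat:42", "hello")
# Simulate a process restart plus cache eviction: only the durable tier remains.
store.memory.clear()
store.redis.clear()
result = store.get("chat:42")
```

After the final `get`, the value has been served from the durable tier and copied back into both caches, which is the behavior an application developer would otherwise have to write by hand.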

2. Modular Tool/Model/RAG Management

Both platforms:

- Are not tied to a fixed set of tools or models

- Support dynamic loading/unloading

- Provide unified APIs to simplify app development

3. Multi-Tenant Isolation

- Ensures separation of private data, models, and resources

- Example: Dify uses a `tenant_id` column to provide low-cost, flexible isolation for small enterprises.
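Column-based isolation means every tenant shares the same tables, and each query is scoped by `tenant_id`. The sketch below shows the pattern with SQLite from the standard library; the `datasets` table, its columns other than `tenant_id`, and the `list_datasets` helper are assumptions made for illustration, not Dify's schema.

```python
import sqlite3
from typing import List

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE datasets ("
    "id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, name TEXT NOT NULL)"
)
# An index on tenant_id keeps tenant-scoped queries cheap as the table grows.
conn.execute("CREATE INDEX idx_datasets_tenant ON datasets (tenant_id)")
conn.executemany(
    "INSERT INTO datasets (tenant_id, name) VALUES (?, ?)",
    [("tenant-a", "faq"), ("tenant-a", "manuals"), ("tenant-b", "contracts")],
)


def list_datasets(tenant_id: str) -> List[str]:
    """Return only the rows that belong to the given tenant."""
    rows = conn.execute(
        "SELECT name FROM datasets WHERE tenant_id = ? ORDER BY name",
        (tenant_id,),
    ).fetchall()
    return [row[0] for row in rows]


tenant_a = list_datasets("tenant-a")
tenant_b = list_datasets("tenant-b")
```

The trade-off is that isolation depends on every query carrying the filter, which is cheaper than a database per tenant but relies on discipline in the data access layer.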

4. Complex Workflow Orchestration

- Dify offers Visual Workflow Development

- DAG nodes (LLM, branching, tools, HTTP, code) enable clear orchestration of AI processes.

---

System Architecture

The three pillars of LLM-native platforms:

- LLM Access & Integration

- Tool/Plugin Access & Management

- Workflow Orchestration

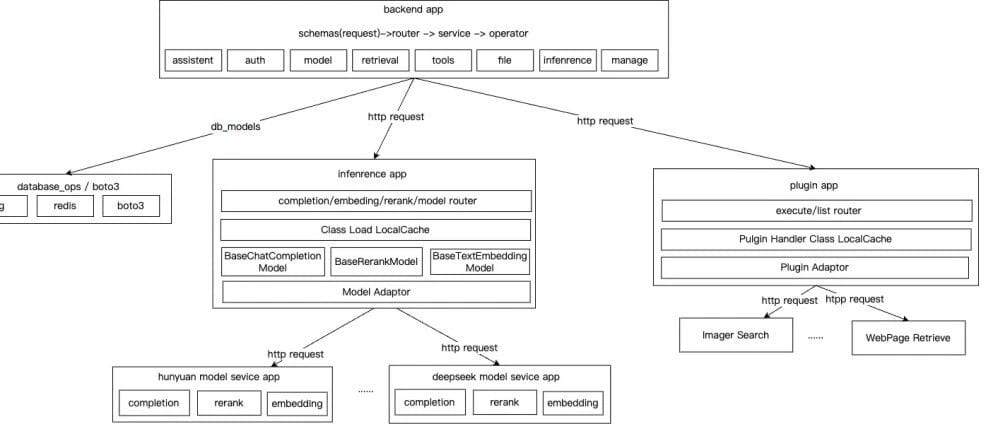

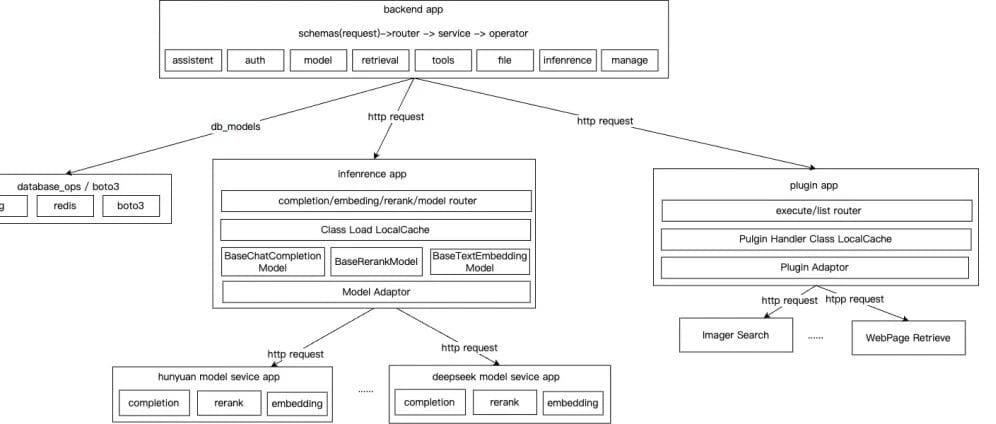

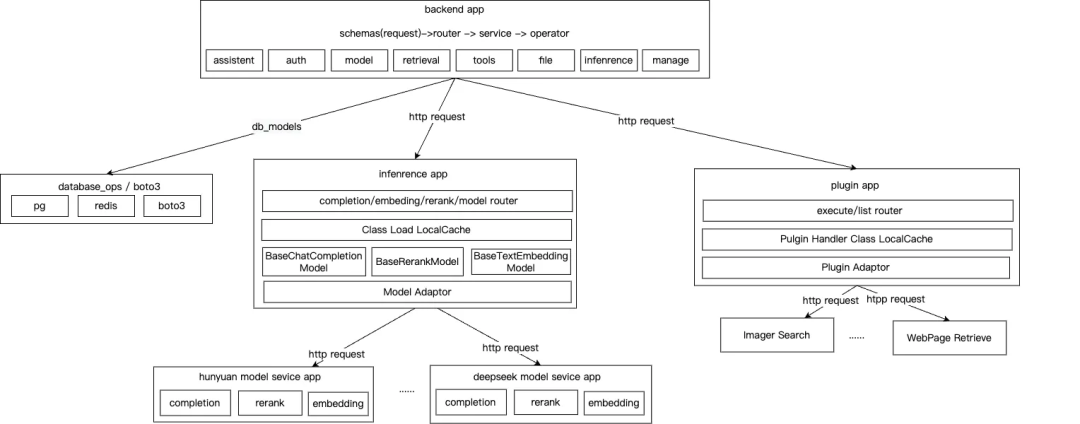

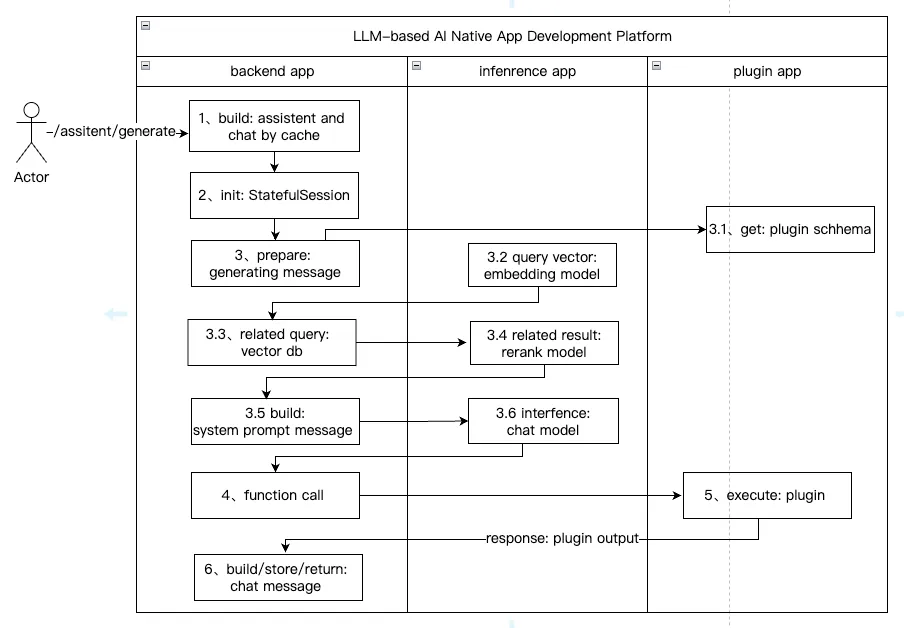

Tasking AI

- Built on microservices:

  - Backend app: development and configuration of assistants, models, RAG, and plugins

  - Inference app: model inference abstraction

  - Plugin app: external tool execution

---

Backend App

Adopts Domain-Driven Design (DDD):

- Infra Layer: Data APIs (Postgres, Redis, boto3)

- Domain Layer: Core services for assistants, tools, RAG

- Interface Layer: Business orchestration, request handling
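To make the layering concrete, here is a small sketch of how the three layers might divide responsibility for creating an assistant. All class and function names are hypothetical, and a dictionary stands in for Postgres; the point is only the direction of dependencies (interface calls domain, domain calls infra).

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Assistant:
    assistant_id: str
    name: str
    model: str


class AssistantRepository:
    """Infra layer: storage access only (a dict stands in for Postgres)."""

    def __init__(self) -> None:
        self._rows: Dict[str, Assistant] = {}

    def save(self, assistant: Assistant) -> None:
        self._rows[assistant.assistant_id] = assistant

    def find(self, assistant_id: str) -> Optional[Assistant]:
        return self._rows.get(assistant_id)


class AssistantService:
    """Domain layer: business rules, no knowledge of requests or responses."""

    def __init__(self, repo: AssistantRepository) -> None:
        self._repo = repo

    def create(self, assistant_id: str, name: str, model: str) -> Assistant:
        if not name.strip():
            raise ValueError("assistant name must not be empty")
        if self._repo.find(assistant_id) is not None:
            raise ValueError("assistant already exists")
        assistant = Assistant(assistant_id, name.strip(), model)
        self._repo.save(assistant)
        return assistant


def handle_create_assistant(service: AssistantService, payload: dict) -> dict:
    """Interface layer: map a request onto a domain call and shape the response."""
    try:
        assistant = service.create(
            payload["assistant_id"], payload.get("name", ""), payload["model"]
        )
    except ValueError as exc:
        return {"status": 400, "error": str(exc)}
    return {
        "status": 200,
        "data": {"assistant_id": assistant.assistant_id, "name": assistant.name},
    }


service = AssistantService(AssistantRepository())
ok = handle_create_assistant(
    service, {"assistant_id": "a1", "name": "Helper", "model": "demo-chat"}
)
bad = handle_create_assistant(
    service, {"assistant_id": "a2", "name": " ", "model": "demo-chat"}
)
```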

> ⚠️ Note: There is no dedicated application layer, so business orchestration sits in the interface layer; adding one could reduce complexity.

---

Inference App

- Abstracts completion, embedding, and rerank model types

- Models are dynamically loadable via configuration files
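Configuration-driven loading can be sketched as a registry built from a config document. The config format, the model ids, and the two toy handlers below are all assumptions for illustration; they are not Tasking AI's real configuration schema.

```python
import json
from typing import Callable, Dict, List

# Hypothetical config: in a real system this would be read from files on disk.
MODEL_CONFIG = json.loads("""
[
  {"id": "demo-chat",  "type": "completion", "handler": "echo_completion"},
  {"id": "demo-embed", "type": "embedding",  "handler": "length_embedding"}
]
""")


def echo_completion(text: str) -> str:
    # Toy completion model: echoes the prompt back.
    return f"echo: {text}"


def length_embedding(text: str) -> List[float]:
    # Toy embedding model: [character count, word count].
    return [float(len(text)), float(text.count(" ") + 1)]


HANDLERS: Dict[str, Callable] = {
    "echo_completion": echo_completion,
    "length_embedding": length_embedding,
}


def load_models(config: List[dict]) -> Dict[str, dict]:
    """Build a model registry from config entries; no model is hard-coded here."""
    registry: Dict[str, dict] = {}
    for entry in config:
        registry[entry["id"]] = {
            "type": entry["type"],
            "call": HANDLERS[entry["handler"]],
        }
    return registry


registry = load_models(MODEL_CONFIG)
reply = registry["demo-chat"]["call"]("hi")
vector = registry["demo-embed"]["call"]("two words")
```

Adding or removing a model then becomes a config change plus a reload of the registry, rather than a code change in the callers.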

---

Plugin App

- Manages both system and user-defined plugins

- Standardizes input/output via schema files

- Enables LLM function calls for capability expansion
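A rough sketch of schema-standardized plugins follows. The registry, the simplified schema (field name mapped to a Python type), and the `add` plugin are hypothetical; a real plugin service would more likely use JSON Schema files, as the text suggests.

```python
from typing import Any, Callable, Dict

PLUGINS: Dict[str, Dict[str, Any]] = {}


def register_plugin(name: str, input_schema: Dict[str, type], func: Callable) -> None:
    """Register a plugin with a declared input schema."""
    PLUGINS[name] = {"input_schema": input_schema, "func": func}


def validate(arguments: Dict[str, Any], schema: Dict[str, type]) -> None:
    """Reject calls whose arguments do not match the declared schema."""
    for field, expected_type in schema.items():
        if field not in arguments:
            raise ValueError(f"missing field: {field}")
        if not isinstance(arguments[field], expected_type):
            raise TypeError(f"field {field} must be {expected_type.__name__}")


def call_plugin(function_call: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch an LLM-style function call: {"name": ..., "arguments": {...}}."""
    plugin = PLUGINS[function_call["name"]]
    arguments = function_call["arguments"]
    validate(arguments, plugin["input_schema"])
    # Every plugin returns the same output envelope.
    return {"name": function_call["name"], "output": plugin["func"](**arguments)}


register_plugin("add", {"a": int, "b": int}, lambda a, b: a + b)
result = call_plugin({"name": "add", "arguments": {"a": 2, "b": 3}})
```

Because every plugin is called through the same envelope, system plugins and user-defined plugins can be exposed to the model in the same way.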

---

AI Assistant Lifecycle

Key process steps:

- Retrieve configuration (plugins, models, retrieval)

- Start stateful session

- Perform text embeddings, knowledge retrieval, reranking

- Call plugins via function calls

- Save and return assistant responses
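The steps above can be sketched as a single function that handles one conversation turn. Everything here is a stand-in: the config, the knowledge snippets, the word-overlap "embedding", and the `clock` plugin are assumptions chosen so the example runs offline, not the platform's real components.

```python
import re
from typing import Dict, List, Set

CONFIG = {"model": "demo-chat", "top_k": 2, "plugins": ["clock"]}
KNOWLEDGE = [
    "Dify executes workflows as DAGs.",
    "TaskingAI splits into three apps.",
    "Redis is an in-memory store.",
]
PLUGIN_FUNCS = {"clock": lambda: "12:00"}  # fixed output keeps the sketch deterministic
SESSIONS: Dict[str, List[dict]] = {}


def embed(text: str) -> Set[str]:
    # Toy "embedding": the set of lowercase word tokens.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_and_rerank(query: str, top_k: int) -> List[str]:
    query_vec = embed(query)
    scored = [(len(query_vec & embed(doc)), doc) for doc in KNOWLEDGE]
    hits = [pair for pair in scored if pair[0] > 0]     # retrieval: keep matches
    hits.sort(key=lambda pair: pair[0], reverse=True)   # rerank: best overlap first
    return [doc for _, doc in hits[:top_k]]


def run_turn(session_id: str, user_text: str) -> str:
    config = CONFIG                                      # 1. retrieve configuration
    history = SESSIONS.setdefault(session_id, [])        # 2. start or resume session
    history.append({"role": "user", "content": user_text})
    context = retrieve_and_rerank(user_text, config["top_k"])  # 3. embed, retrieve, rerank
    tool_outputs = {name: PLUGIN_FUNCS[name]() for name in config["plugins"]}  # 4. plugins
    answer = (
        f"{config['model']} answered using {len(context)} passage(s) "
        f"and {len(tool_outputs)} tool result(s)"
    )
    history.append({"role": "assistant", "content": answer})  # 5. save and return
    return answer


answer = run_turn("s1", "How does Dify run workflows?")
```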

---

AI Task Orchestration Execution Engine

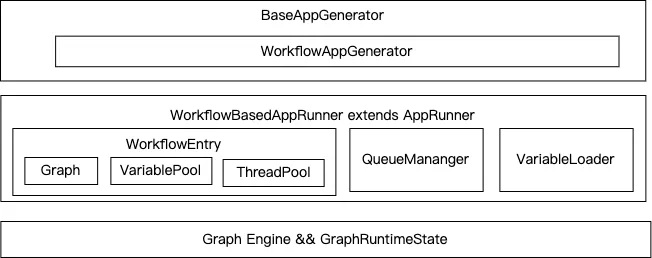

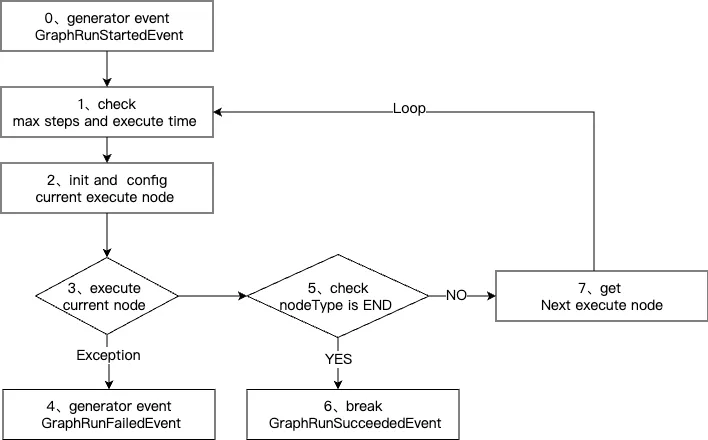

Dify’s GraphEngine

- Purpose: Executes orchestrated workflows as DAGs

- Node Types: LLM, branching (IFElse), retrieval, classification, tools, HTTP, loops, variable assignment

- Execution:

  - Event-driven

  - Topological sorting of nodes

  - State persistence and logging
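Topological ordering guarantees that a node runs only after all of its upstream nodes have finished. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (3.9+) on a four-node chain; the node names, the shared `state` dict, and `run_graph` are hypothetical and much simpler than the real GraphEngine.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set, Tuple

# Each node reads from and writes to a shared state dict (a toy variable pool).
NODES: Dict[str, Callable[[dict], None]] = {
    "start": lambda s: s.update(query="ping"),
    "retrieve": lambda s: s.update(docs=[s["query"].upper()]),
    "llm": lambda s: s.update(answer=f"answer based on {s['docs'][0]}"),
    "end": lambda s: s.update(done=True),
}

# Maps each node to the set of nodes that must run before it.
PREDECESSORS: Dict[str, Set[str]] = {
    "start": set(),
    "retrieve": {"start"},
    "llm": {"retrieve"},
    "end": {"llm"},
}


def run_graph(
    nodes: Dict[str, Callable[[dict], None]],
    predecessors: Dict[str, Set[str]],
) -> Tuple[dict, List[str]]:
    """Run every node in dependency order and keep an execution log."""
    state: dict = {}
    log: List[str] = []
    for node_id in TopologicalSorter(predecessors).static_order():
        nodes[node_id](state)
        log.append(node_id)
    return state, log


state, log = run_graph(NODES, PREDECESSORS)
```

`TopologicalSorter` also raises `CycleError` when the graph contains a cycle, which is a convenient way to reject invalid workflow definitions before execution.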

---

Core Flow

- WorkflowAppGenerator builds a DAG graph from node configuration

- WorkflowAppRunner runs the graph via GraphEngine

- Events control execution, retries, failure handling

- End Node terminates execution and logs success
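As a rough illustration of event-driven execution with retries, the sketch below pushes events onto a local in-process queue while running steps in order. The event names, `run_with_events`, and the retry policy are assumptions for illustration only; they do not mirror Dify's actual event classes.

```python
import queue
from typing import Callable, List, Tuple

Event = Tuple[str, str]  # (event name, node name)


def drain(events: "queue.Queue[Event]") -> List[Event]:
    """Collect all queued events in the order they were emitted."""
    collected: List[Event] = []
    while not events.empty():
        collected.append(events.get_nowait())
    return collected


def run_with_events(
    steps: List[Tuple[str, Callable[[], None]]], max_retries: int = 1
) -> List[Event]:
    """Run steps in order, emitting an event for each start, retry, and result."""
    events: "queue.Queue[Event]" = queue.Queue()
    for name, func in steps:
        attempt = 0
        while True:
            events.put(("node_started", name))
            try:
                func()
            except RuntimeError:
                if attempt < max_retries:
                    attempt += 1
                    events.put(("node_retry", name))
                    continue
                events.put(("node_failed", name))
                events.put(("workflow_failed", name))
                return drain(events)
            events.put(("node_succeeded", name))
            break
    events.put(("workflow_succeeded", "end"))
    return drain(events)


calls = {"n": 0}


def flaky() -> None:
    # Fails on the first call only, to exercise the retry path.
    calls["n"] += 1
    if calls["n"] == 1:
        raise RuntimeError("transient error")


events = run_with_events([("llm", flaky), ("end", lambda: None)])
```

Because the queue lives in process memory, events are lost if the process dies mid-run, which is the limitation that an external message broker would address.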

> 💡 Optimization Potential: Replace local queues with external messaging (Redis, Pulsar) for better fault tolerance and scalability.

---

Summary

Tasking AI

- Microservices + DDD

- Clear, layered architecture

- Great for lightweight apps

Dify

- MVC + microservices

- Some coupling across layers

- Strong workflow orchestration via GraphEngine

Complementary Tools:

- Platforms like AiToEarn integrate with orchestration engines for multi-platform publishing + monetization.

---

Outlook

1. Natural-Language-Driven Development

- Platforms will auto-generate AI apps from user intent, domain knowledge, and optimized workflows.

2. Fault-Oriented Self-Healing

- Automatic risk detection, fault recovery, and continuous architecture optimization via ML.

---


✅ Final Takeaway:

Tasking AI = Best for clear, maintainable lightweight apps

Dify = Best for complex AI workflow orchestration

AiToEarn = Ideal monetization + publishing companion
