# VibeCut: Intelligent Web-Based Video Editing Agent

**Original · Big Front-End · 2025-10-11 12:03 · Shanghai**

---

## Introduction

To address the industry pain points of **complex workflows in professional video editing software** and the **creative limitations of template-based tools**, this article explores and implements **VibeCut**, an intelligent editing agent for the **WebCut** platform.

**VibeCut breaks down the boundary between fully manual and fully automated editing**, giving creators efficiency and ease of use together with room for personalized expression.

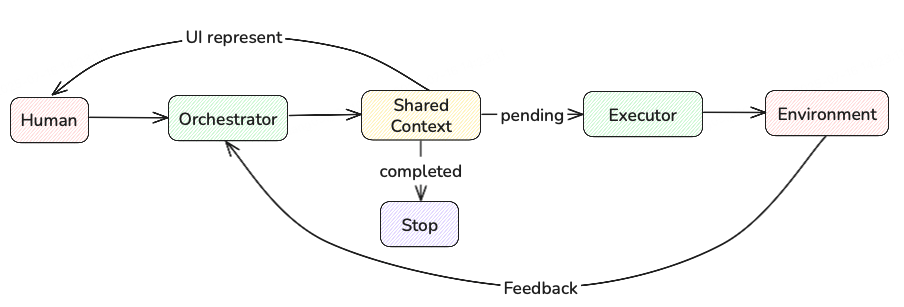

Key innovation: **Planner–Executor dual-agent architecture**

- **Planner**: Interprets natural-language intent and creates a macro-level editing plan.

- **Executor**: Calls tools to complete operations precisely.

- **Shared Context**: Serves as the single source of truth for instructions and state, enabling transparent “what you see is what you get” interactions.

Running on WebCut and powered by LLMs, VibeCut has passed tests such as:

1. Adding custom-styled subtitles

2. Automatically adjusting subtitle colors to suit the visuals

3. Semantic video cutting

---

## Background

### Paradigm Shifts & Challenges in Video Content Creation

In the digital media era, video is a primary medium for **information, social engagement, and brand marketing**.

- **Short-form video is on the rise**, driving an explosion of UGC.

- Traditional workflows have become a bottleneck:

  - **Professional tools** (Premiere Pro, Final Cut Pro): powerful, but with a steep learning curve

  - **Online template tools**: accessible, but the results are generic

The industry is seeking a path beyond this binary: **AI-enhanced workflows** that combine professional-grade depth with the ease of use of online tools.

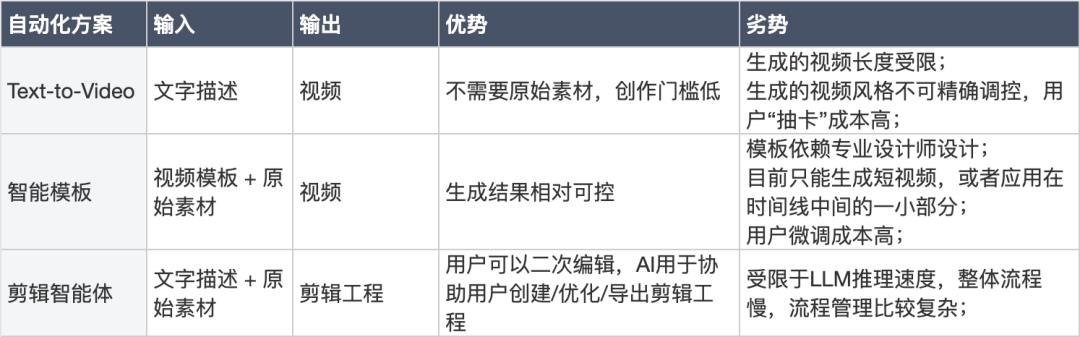

AI solutions like **Text-to-Video** show promise but face limitations in:

- Duration

- Logical coherence

- Semantic controllability

The challenge is to **balance automation (for efficiency) with creative control**, bridging the gap between “fully manual” and “fully automatic” editing.

---

## LLM-Driven Opportunities

Large language models (LLMs) offer:

- Deep natural language understanding

- Intent recognition

- Task planning

**Multi-Agent systems** distribute complex tasks among specialized agents:

- Refining user intent

- Reviewing drafts

- Material search/retrieval

- Asset understanding

- Plan generation

- Execution

This shifts the user's role from “editor” to “director.”

---

## Purpose of This Work

We experiment with **VibeCut**, a new automated editing approach within **WebCut**:

- Input: user text + raw footage

- Output: an editable draft produced by cooperating agents

- Goal: strengthen WebCut's competitiveness and serve as a reference model for next-generation intelligent creation platforms

---

## Related Work

### Core Concepts: LLM, Function Calling, MCP, Agent

1. **LLM** – Cognitive core: context understanding, intent parsing, task decomposition

2. **Function Calling** – Converts LLM plans to structured API calls

3. **Model Context Protocol (MCP)** – An open protocol that exposes tools and context to LLM applications through a standard interface, so tool calls share one format

4. **Agents** – Autonomous LLM-based entities with goals and toolsets
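To make Function Calling concrete, here is a minimal sketch of how a tool call emitted by an LLM as JSON can be checked against a tool schema and dispatched to a local function. The `add_subtitle` tool, its parameters, and the `{"name": ..., "arguments": {...}}` call shape are assumptions for illustration; each LLM provider and each MCP server defines its own wire format.

```python
import json
from typing import Any, Callable

# Hypothetical tool schema in the JSON-Schema style commonly used for function calling.
ADD_SUBTITLE_SCHEMA = {
    "name": "add_subtitle",
    "description": "Add a subtitle to the timeline.",
    "parameters": {
        "type": "object",
        "properties": {
            "text": {"type": "string"},
            "start": {"type": "number"},
            "end": {"type": "number"},
            "color": {"type": "string"},
        },
        "required": ["text", "start", "end"],
    },
}

def add_subtitle(text: str, start: float, end: float, color: str = "#FFFFFF") -> dict:
    """Hypothetical editing operation: returns the subtitle item it would create."""
    if end <= start:
        raise ValueError("end must be greater than start")
    return {"type": "subtitle", "text": text, "start": start, "end": end, "color": color}

# Registry mapping tool names to (schema, implementation).
TOOLS: dict[str, tuple[dict, Callable[..., Any]]] = {
    "add_subtitle": (ADD_SUBTITLE_SCHEMA, add_subtitle),
}

def dispatch(raw_call: str) -> Any:
    """Parse a model-emitted call, check required arguments, and run the tool."""
    call = json.loads(raw_call)
    schema, fn = TOOLS[call["name"]]
    args = call.get("arguments", {})
    missing = [k for k in schema["parameters"]["required"] if k not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return fn(**args)
```

Rejecting a malformed call before it touches the timeline gives the agent a precise error message to correct, rather than a half-applied edit.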

---

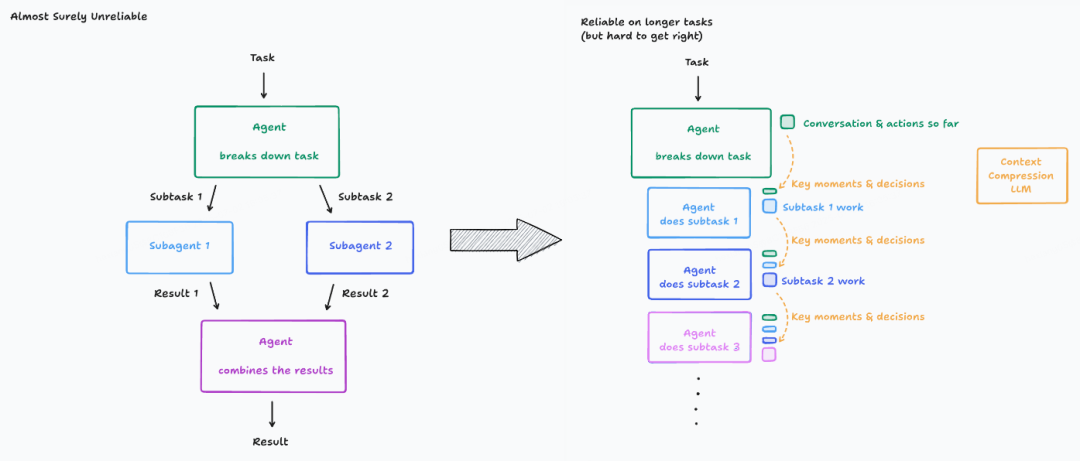

### Multi-Agent Architectures

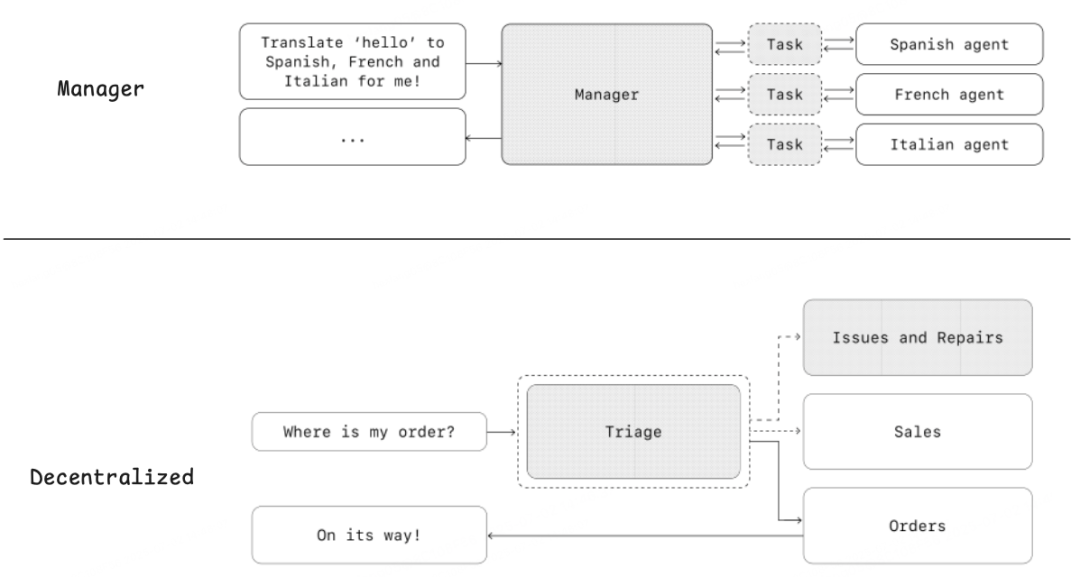

#### OpenAI Agent Guide

- **Manager Mode**: A central manager agent orchestrates specialized agents by calling them as tools (“agents as tools”)

- **Decentralized Mode**: Agents hand tasks off to one another as peers (“handoff model”)
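The two modes differ mainly in who holds control. The sketch below contrasts them, using plain functions as stand-ins for LLM-backed agents; the agent names and the `(result, next_agent)` handoff convention are hypothetical and are not the API of any OpenAI SDK.

```python
from typing import Callable, Optional

# Hypothetical specialized agents; real ones would wrap LLM calls.
def cut_agent(task: str) -> str:
    return f"cut: {task}"

def subtitle_agent(task: str) -> str:
    return f"subtitle: {task}"

def manager(plan: list[tuple[str, str]]) -> list[str]:
    """Manager mode: the central agent calls each specialist like a tool,
    and every result flows back into the manager's own context."""
    tools: dict[str, Callable[[str], str]] = {"cut": cut_agent, "subtitle": subtitle_agent}
    context: list[str] = []
    for tool_name, task in plan:
        context.append(tools[tool_name](task))
    return context

# Handoff mode: each agent does its part and names the next agent (or None),
# so control moves between peers instead of returning to a manager.
HandoffAgent = Callable[[str], tuple[str, Optional[str]]]

HANDOFF_AGENTS: dict[str, HandoffAgent] = {
    "triage": lambda task: ("triage: routed to cut", "cut"),
    "cut": lambda task: (cut_agent(task), "subtitle"),
    "subtitle": lambda task: (subtitle_agent(task), None),
}

def handoff(start: str, task: str) -> list[str]:
    trace: list[str] = []
    current: Optional[str] = start
    while current is not None:
        result, current = HANDOFF_AGENTS[current](task)
        trace.append(result)
    return trace
```

In the manager version one agent sees the whole trace; in the handoff version each agent only sees what it is passed, which is where the context-loss problems discussed below come from.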

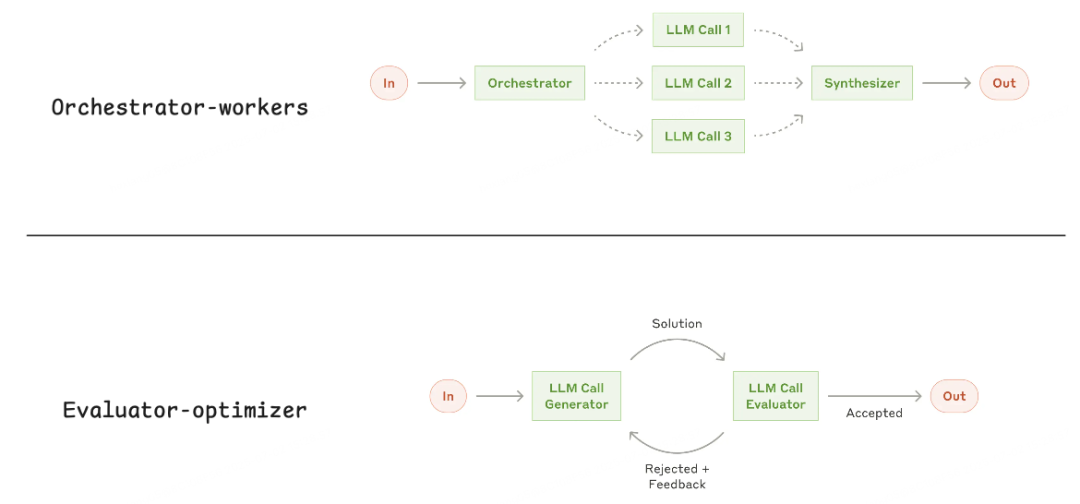

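#### Anthropic Agent Patterns

- **Orchestrator–Workers**: A central LLM breaks the task down and delegates subtasks to worker LLMs; similar to Manager Mode

- **Evaluator–Optimizer**: One LLM generates output while another evaluates it and feeds back, in an iterative generate–evaluate loop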
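As an illustration of the Evaluator–Optimizer loop, the sketch below picks a subtitle color (echoing the color-adjustment test above): a generator proposes a color and an evaluator accepts it only if its WCAG contrast ratio against the background reaches 4.5:1. The fixed palette standing in for an LLM generator, and summarizing the area behind the subtitle as one average RGB color, are assumptions; the source does not say VibeCut uses this pattern.

```python
# WCAG 2.x relative luminance of an sRGB color given as (r, g, b) in 0..255.
def relative_luminance(rgb: tuple[int, int, int]) -> float:
    def channel(c: int) -> float:
        s = c / 255
        return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4
    r, g, b = (channel(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def contrast_ratio(a: tuple[int, int, int], b: tuple[int, int, int]) -> float:
    la, lb = relative_luminance(a), relative_luminance(b)
    return (max(la, lb) + 0.05) / (min(la, lb) + 0.05)

# Hypothetical candidates; a real generator would be an LLM proposing colors.
PALETTE = [(255, 255, 0), (255, 255, 255), (0, 0, 0)]

def generate(rejected: list) -> tuple[int, int, int]:
    """Generator: propose the first palette color not yet rejected."""
    for color in PALETTE:
        if color not in rejected:
            return color
    raise RuntimeError("generator ran out of candidates")

def evaluate(color, background, threshold: float = 4.5) -> bool:
    """Evaluator: accept if contrast meets WCAG AA for normal text."""
    return contrast_ratio(color, background) >= threshold

def pick_subtitle_color(background, max_rounds: int = 5):
    rejected: list = []
    for _ in range(max_rounds):
        candidate = generate(rejected)
        if evaluate(candidate, background):
            return candidate
        rejected.append(candidate)  # feedback for the next round
    raise RuntimeError("no acceptable color within the round budget")
```

On a light gray background the yellow and white candidates are rejected and black is accepted; on a near-black background yellow passes on the first round.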

#### Cognition AI

Common problems with multi-agent systems:

1. **Error accumulation**

2. **Context loss and communication overhead**

3. **Rigid agent graphs**

4. **Diffuse responsibility**

Proposed solutions:

- **State management and context engineering**

- **A single long-running agent**

- **A minimal, robust toolset**

---

## Smart Editing Exploration

### Commercial Tools

#### Premiere Pro

- Deep AI integration (Firefly generative features)

- Pros: Seamless workflow integration for professionals

- Cons: High cost and complexity

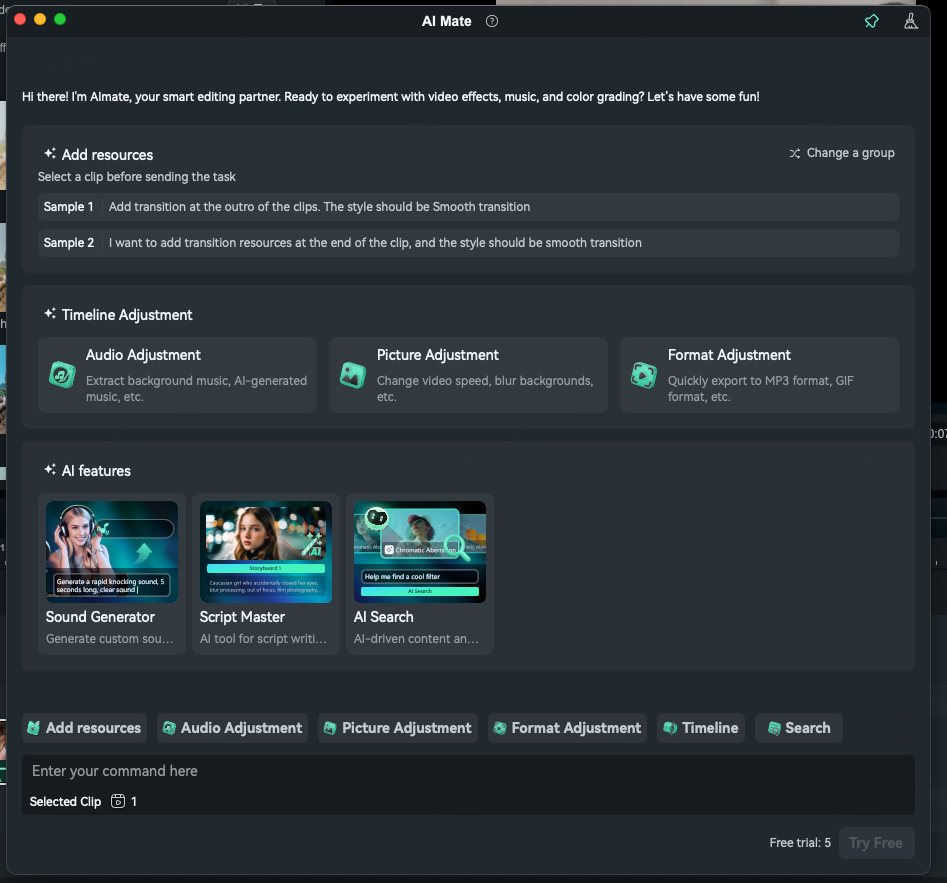

#### Filmora

- **AI Mate**: Centralized hub for AI features

- Pros: Rich UI-linked features

- Cons: Lacks true planning/execution capabilities

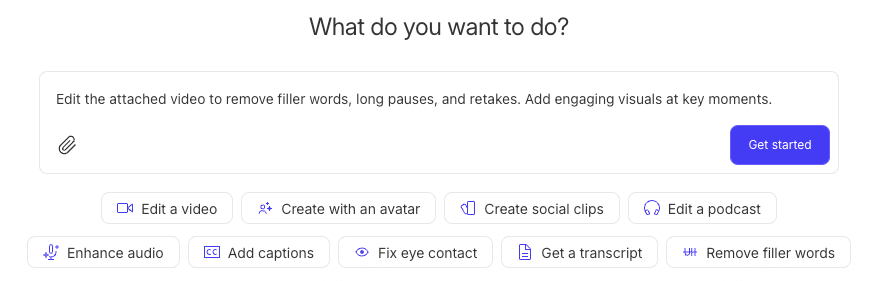

#### Descript

- Copilot-style assistant focused on content-creation features

- In our test, it failed a semantic cutting task

---

## Internal Explorations

### PC Bcut AI Integration

- Used MCP to connect the editor to **CherryStudio**, a conversational LLM client

- Toolset inspired by Filmora, tightly scoped to timeline actions

- We observed that tool-chain robustness varied considerably

### WebCut Multi-Agent

- Added **RAG-based material search** and **video comprehension**

- Planner generates editing plans; Executor selects tools

- Result: heavy context load and susceptibility to vague or ambiguous user intent

---

## VibeCut Architecture

### Design Principles

- **Planner–Executor split**

- **Structured shared context**

- **Direct draft manipulation**

**Planner**:

1. Generates shared context from user intent

2. Updates sub-task states

3. Re-plans failed sub-tasks

**Executor**:

- Chooses the best tool for each sub-task based on its context
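The division of labor above can be sketched as a small loop over a shared context. This is a minimal sketch under stated assumptions, not VibeCut's implementation: the `plan` and `replan` functions are hypothetical rule-based stand-ins for the Planner LLM, the 30-second duration is assumed, and for brevity the planner writes a tool hint into each sub-task that the executor simply looks up (in VibeCut the Executor LLM chooses the tool).

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable

class Status(Enum):
    PENDING = "pending"
    DONE = "done"
    FAILED = "failed"

@dataclass
class SubTask:
    description: str
    tool: str          # hypothetical tool hint written by the planner
    args: dict
    status: Status = Status.PENDING
    attempts: int = 0
    result: str = ""

@dataclass
class SharedContext:
    """Single source of truth: the user's intent plus every sub-task's state."""
    intent: str
    subtasks: list[SubTask] = field(default_factory=list)

DURATION = 30.0  # assumed clip length in seconds

def cut(start: float, end: float) -> str:
    if not 0 <= start < end <= DURATION:
        raise ValueError(f"range {start}-{end}s is outside the clip")
    return f"kept {start}-{end}s"

def add_subtitle(text: str) -> str:
    return f"subtitle '{text}'"

TOOLS: dict[str, Callable[..., str]] = {"cut": cut, "add_subtitle": add_subtitle}

def plan(intent: str) -> SharedContext:
    """Planner stand-in: a fixed plan whose cut range is deliberately invalid."""
    return SharedContext(intent, [
        SubTask("trim the clip", "cut", {"start": 5.0, "end": 35.0}),
        SubTask("add a title", "add_subtitle", {"text": intent}),
    ])

def replan(task: SubTask) -> None:
    """Planner stand-in for re-planning: clamp an out-of-range cut."""
    if task.tool == "cut":
        task.args["end"] = min(task.args["end"], DURATION)

def execute(task: SubTask) -> tuple[bool, str]:
    """Executor: run the sub-task's tool and report success or the error text."""
    try:
        return True, TOOLS[task.tool](**task.args)
    except ValueError as exc:
        return False, str(exc)

def run(intent: str, max_attempts: int = 3) -> SharedContext:
    ctx = plan(intent)
    for task in ctx.subtasks:
        while task.status is not Status.DONE and task.attempts < max_attempts:
            task.attempts += 1
            ok, task.result = execute(task)
            # Planner updates the sub-task state in the shared context...
            task.status = Status.DONE if ok else Status.FAILED
            # ...and re-plans a failed sub-task before it is retried.
            if not ok and task.attempts < max_attempts:
                replan(task)
    return ctx
```

Because every state change lands in `SharedContext`, the UI can render the same object the agents read, which is what makes the "what you see is what you get" interaction possible.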

---

## Tool Design

### Core Tools

- **UI Interaction Tool**

- **Resource Query Tool**

- **Editing Tool**
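One way to keep the toolset minimal is to register every tool under one of the three categories above and expose only the categories a sub-task needs. The registry, the `tool` decorator, and the three example tools below are hypothetical illustrations, not WebCut's actual tools.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable

class Category(Enum):
    UI = "ui_interaction"
    QUERY = "resource_query"
    EDIT = "editing"

@dataclass(frozen=True)
class Tool:
    name: str
    category: Category
    description: str
    fn: Callable[..., object]

REGISTRY: dict[str, Tool] = {}

def tool(category: Category, description: str):
    """Decorator that records a function in the registry under a category."""
    def decorator(fn):
        REGISTRY[fn.__name__] = Tool(fn.__name__, category, description, fn)
        return fn
    return decorator

@tool(Category.UI, "Ask the user to confirm a proposed change.")
def confirm_with_user(message: str) -> bool:
    return True  # stand-in: a real UI tool would show a dialog and wait

@tool(Category.QUERY, "List clip ids on the timeline.")
def list_clips(timeline: list[dict]) -> list[str]:
    return [clip["id"] for clip in timeline]

@tool(Category.EDIT, "Delete a clip by id.")
def delete_clip(timeline: list[dict], clip_id: str) -> list[dict]:
    return [clip for clip in timeline if clip["id"] != clip_id]

def tools_for(*categories: Category) -> list[str]:
    """Names of the tools to expose to the model for the given categories."""
    return sorted(name for name, t in REGISTRY.items() if t.category in categories)
```

For example, a read-only sub-task could be given `tools_for(Category.QUERY, Category.UI)` so the model cannot modify the draft while it is still gathering information.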

### Resource Understanding

- A specialized agent that produces structured descriptions of assets

### Intelligent Asset Search

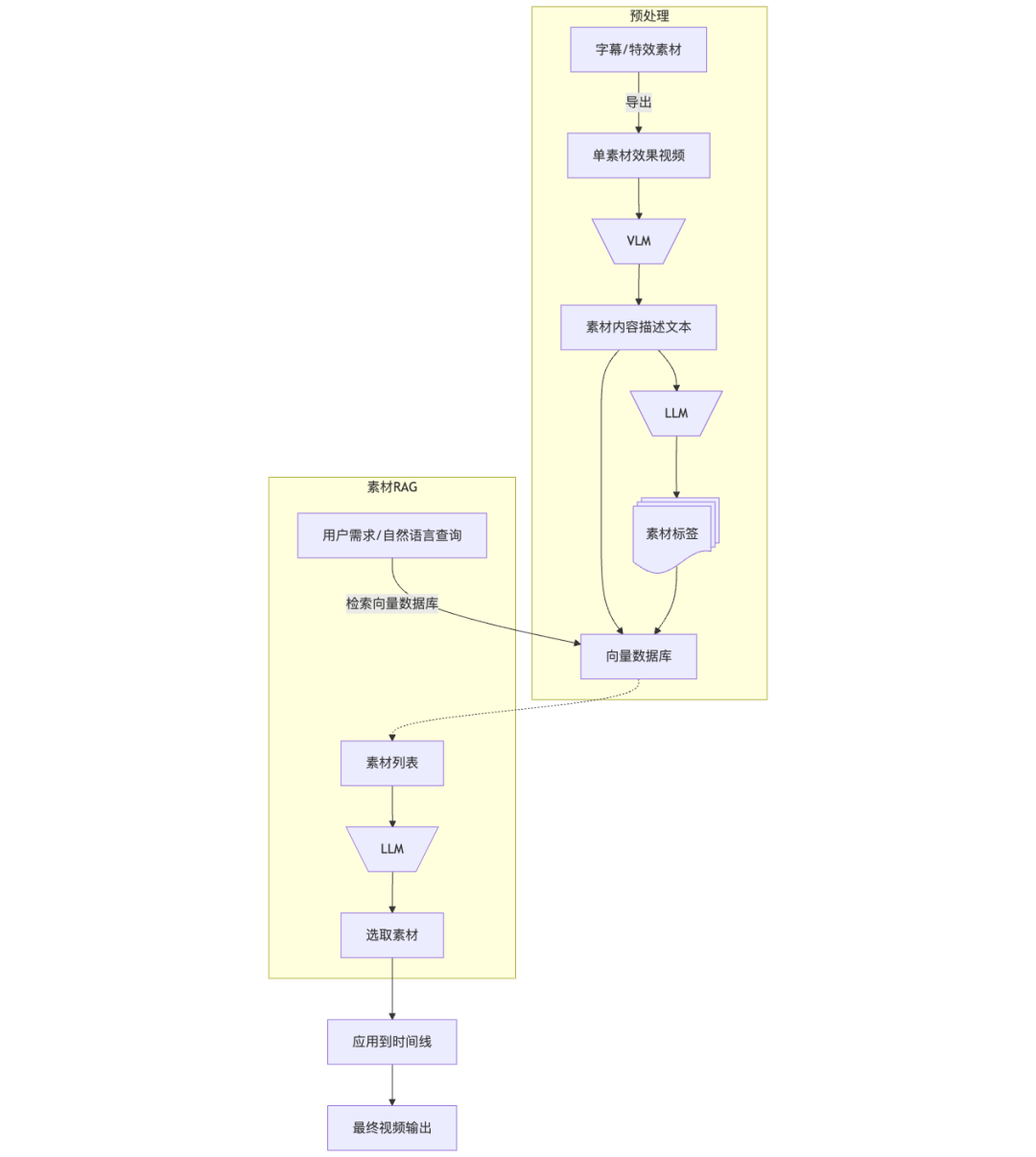

Two phases:

1. Preprocessing: batch-render assets, analyze them with a vision-language model (VLM), and index the resulting tags in a vector database

2. Retrieval: search by description, ranking assets by cosine similarity
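The two phases can be sketched with an in-memory index. To keep the example self-contained, a bag-of-words counter stands in for a real text-embedding model and a Python list stands in for the vector database; the `AssetIndex` class and the sample descriptions are hypothetical. Only the cosine-similarity ranking corresponds directly to the retrieval step described above.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Stand-in embedding: word counts. A real pipeline would use an
    embedding model over the VLM's description of each asset."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class AssetIndex:
    """In-memory stand-in for the vector database."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add(self, asset_id: str, vlm_description: str) -> None:
        # Phase 1 (preprocessing): store an embedding of each asset's description.
        self.entries.append((asset_id, embed(vlm_description)))

    def search(self, query: str, top_k: int = 3) -> list[tuple[str, float]]:
        # Phase 2 (retrieval): rank assets by cosine similarity to the query.
        q = embed(query)
        scored = [(asset_id, cosine(q, vec)) for asset_id, vec in self.entries]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]
```

Usage: after adding descriptions such as "a dog running on the beach" and "a busy city street at night", `index.search("dog on a beach")` ranks the dog clip first.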

---

## Experimental Results

### Setup

- Tested on 30-second single-track videos

- Requirements mixed video and subtitle edits

### Quality Evaluation

**Requirement-1**: The result closely matched the intent, with human-in-the-loop approvals

**Requirement-2**: Token usage was higher because the video already contained subtitles

**Requirement-3**: Executed successfully, with previews

---

### Efficiency

Statistics were logged for:

- Executor Agent

- Orchestrator Agent

---

## Ablation Experiment

We compared LLMs (**deepseek-v3**, **deepseek-r1**, **qwen3-8b**) on a Fibonacci cut task:

- Without “deep thinking” (reasoning) mode, models failed because of errors in their structured data output

- Smaller models struggled with task state tracking and correct tool selection
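Structured-output failures like these are easier to recover from when every model output is validated before execution and the error text is fed back to the model. The article does not specify the task's output format, so the sketch assumes, for illustration only, that the model must return `{"cuts": [t1, t2, ...]}` in seconds and that the task means cutting at Fibonacci-numbered seconds; `parse_cuts` and `fibonacci_cut_points` are hypothetical helpers.

```python
import json

def fibonacci_cut_points(duration: float) -> list[int]:
    """Hypothetical reading of the task: cut at Fibonacci-numbered seconds."""
    points, a, b = [], 1, 2
    while a < duration:
        points.append(a)
        a, b = b, a + b
    return points

def parse_cuts(raw: str, duration: float) -> list[float]:
    """Validate an assumed {"cuts": [...]} output; raise ValueError with a
    message that can be returned to the model for a retry."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc.msg}") from exc
    cuts = data.get("cuts") if isinstance(data, dict) else None
    if not isinstance(cuts, list) or not cuts:
        raise ValueError('expected a non-empty "cuts" list')
    if not all(isinstance(t, (int, float)) and not isinstance(t, bool) for t in cuts):
        raise ValueError("every cut point must be a number")
    if cuts != sorted(cuts) or len(set(cuts)) != len(cuts):
        raise ValueError("cut points must be strictly increasing")
    if not all(0 < t < duration for t in cuts):
        raise ValueError(f"cut points must lie inside (0, {duration})")
    return [float(t) for t in cuts]
```

For a 30-second clip, `fibonacci_cut_points(30)` gives `[1, 2, 3, 5, 8, 13, 21]`, and truncated JSON, unsorted times, or times past the end of the clip are all rejected with a specific message.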

---

## Additional Scenario: Image–Text Template Editing

- Extended Planner prompts for narrative logic

- Added 3 tools: Storyboard Script, Character Sketch, Storyboard Images

- Workflow: From text → storyboard → assets → timeline edits
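The text → storyboard → assets → timeline flow can be sketched as a chain of three tool stand-ins. The function bodies are hypothetical placeholders (one shot per sentence, a fixed character reference, fixed 3-second shots) for the Storyboard Script, Character Sketch, and Storyboard Images tools; only the order of the steps comes from the article.

```python
from dataclasses import dataclass

@dataclass
class Shot:
    index: int
    narration: str
    image: str = ""

def storyboard_script(text: str) -> list[Shot]:
    """Stand-in for the Storyboard Script tool: one shot per sentence."""
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    return [Shot(i, s) for i, s in enumerate(sentences)]

def character_sketch(text: str) -> str:
    """Stand-in for the Character Sketch tool: a reference id that keeps
    the character consistent across every generated image."""
    return "character_ref_001"

def storyboard_images(shots: list[Shot], character_ref: str) -> list[Shot]:
    """Stand-in for the Storyboard Images tool: one image per shot."""
    for shot in shots:
        shot.image = f"img_{shot.index}_{character_ref}.png"
    return shots

def to_timeline(shots: list[Shot], seconds_per_shot: float = 3.0) -> list[dict]:
    """Lay each shot out as an image track item plus a matching subtitle."""
    timeline = []
    for shot in shots:
        start = shot.index * seconds_per_shot
        end = start + seconds_per_shot
        timeline.append({"type": "image", "src": shot.image, "start": start, "end": end})
        timeline.append({"type": "subtitle", "text": shot.narration, "start": start, "end": end})
    return timeline

def text_to_timeline(text: str) -> list[dict]:
    shots = storyboard_script(text)
    ref = character_sketch(text)
    return to_timeline(storyboard_images(shots, ref))
```

Generating the character reference once and passing it to every image call is the point of the separate Character Sketch step: it keeps the same character across shots.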

---

## Conclusion

1. **Human–AI collaborative editing is feasible**

2. **The Planner–Executor split plus a shared context is key to reliability**

3. **Tool orchestration sets the ceiling of the agent's intelligence**

---

## Future Work

### Model & Performance

- Fine-tune smaller models (e.g., qwen3-8b) separately for planning and execution

- Caching and pre-processing to reduce token costs
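A simple form of caching is to key expensive asset-understanding results by a hash of the asset's content, so each unique asset is analyzed (and billed in tokens) only once. The `UnderstandingCache` class and the `analyze` callback below are hypothetical; in practice `analyze` would wrap a VLM call and the store would be persistent.

```python
import hashlib
from typing import Callable

class UnderstandingCache:
    """Memoize an expensive analysis function by the SHA-256 of the asset bytes."""

    def __init__(self, analyze: Callable[[bytes], str]) -> None:
        self._analyze = analyze
        self._store: dict[str, str] = {}
        self.misses = 0  # number of times the expensive analysis actually ran

    def describe(self, asset: bytes) -> str:
        key = hashlib.sha256(asset).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._analyze(asset)
        return self._store[key]
```

Hashing content rather than file names means a re-uploaded or renamed clip still hits the cache.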

### Expanded Capabilities

- Multimodal inputs (voice, style reference images)

- Advanced tools (smart music, noise reduction, motion effects)

### Architecture & Evaluation

- Persistent memory of user preferences

- Establish AI video editing benchmarks

---

## References

- OpenAI Agent Guide

- Anthropic Agent Patterns

- Cognition AI Blog

- Manus Context Engineering Blog

