# Understanding Short-Term vs Long-Term Memory in AI Agents

In artificial intelligence, **memory** is crucial for the intelligence, coherence, and usefulness of an AI Agent.

This guide explores the differences between **short-term** and **long-term** memory, and how they work together to power intelligent systems.

---

## What is an AI Agent?

An **AI Agent** is an autonomous system capable of:

- **Perceiving** its environment

- **Reasoning** about information

- **Taking actions** to achieve specific goals

**Memory** gives the Agent *continuity* and *personality.*

Without it, every interaction is isolated and stateless, as if the Agent suffered from digital amnesia.

In designing Agent architectures, memory is often split into:

- **Short-term memory** — like human working memory

- **Long-term memory** — like human experience archives

---

## 1. Short-Term Memory: The Workbench of Consciousness

### 1.1 Definition & Core Features

Short-term memory is **temporary information storage** used during the current task or dialog. It is analogous to a computer’s RAM or to human working memory.

Key characteristics:

- **Timely & context-dependent:** Tracks immediate interactions (e.g., recent user utterances)

- **Limited capacity:** Bound by the model’s context window (e.g., 4K–200K tokens)

- **Fast access:** Active and instantly available without retrieval steps

- **Volatile:** Overwritten or erased when the session ends

---

### 1.2 Technical Implementation

Common technical foundations:

- **Transformer context window:** Self-attention operates only over the tokens inside the window; once a conversation exceeds it, older context has to be truncated or summarized.

- **System prompts & few-shot examples:** These set the initial state of the dialogue and occupy part of the short-term window.

- **Chain-of-thought reasoning:** Intermediate reasoning steps stay in the context until the task completes.
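
The context-window behaviour described above can be sketched as a token-budgeted buffer. This is a minimal illustration, not how any particular framework does it: the `ShortTermMemory` class and its `max_tokens` parameter are hypothetical names, and `count_tokens` is a crude word count standing in for a real tokenizer.

```python
from collections import deque
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


@dataclass
class ShortTermMemory:
    """Keeps the system prompt pinned and drops the oldest turns once over budget."""
    system_prompt: str
    max_tokens: int
    turns: deque = field(default_factory=deque)

    def add(self, role: str, content: str) -> None:
        self.turns.append((role, content))
        self._trim()

    def _used(self) -> int:
        return count_tokens(self.system_prompt) + sum(count_tokens(c) for _, c in self.turns)

    def _trim(self) -> None:
        # Evict from the oldest end, but always keep the newest turn.
        while self._used() > self.max_tokens and len(self.turns) > 1:
            self.turns.popleft()

    def as_messages(self) -> list[dict]:
        messages = [{"role": "system", "content": self.system_prompt}]
        messages += [{"role": r, "content": c} for r, c in self.turns]
        return messages


memory = ShortTermMemory(system_prompt="You are a travel assistant.", max_tokens=20)
memory.add("user", "I want to fly to Lisbon in May.")
memory.add("assistant", "Sure, which dates work for you?")
memory.add("user", "The tenth to the seventeenth, and make it a window seat.")
```

With this deliberately tiny budget, the third turn pushes the first two out of the buffer, which is exactly the kind of forgetting that motivates long-term memory.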

---

### 1.3 Application Scenarios

Short-term memory is key for:

- **Multi-turn dialog agents:** e.g., booking travel with coherent follow-ups

- **Task decomposition:** Holding the decomposed steps until the task finishes

- **Code writing/debugging:** Remembering variable names and logic during development

- **Pronoun/context resolution:** Interpreting “it,” “that,” or “the other one” based on the recent conversation

---

## 2. Long-Term Memory: The Library of Experience

### 2.1 Definition & Core Features

Long-term memory persists across sessions, storing **knowledge and experiences** similar to human autobiographical and factual memory.

Key differences from short-term memory:

- **Persistent & cross-context:** Accessible across sessions

- **Virtually unlimited capacity:** Expandable via external storage

---

### 2.2 Retrieval & Usage

- **On-demand retrieval:** Not always active; recalled only when needed

- **Non-volatile:** Retains personal history and proprietary knowledge

---

### 2.3 Technical Implementation

Core components:

- **Vectorization & vector databases:** Embed unstructured data (text/images) → store in a high-dimensional vector space → retrieve via similarity search

- **Traditional databases:** Persist structured data (user profiles, transaction logs)

- **Retrieval-Augmented Generation (RAG):** Fetch relevant data from long-term memory and inject it into the context window

- **Reinforcement learning experience replay:** Save (state, action, reward, next state) transitions for retraining & strategy retention
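
The embed, store, and retrieve flow behind a vector database can be illustrated with a small in-memory sketch. Everything here is an assumption made for illustration: `embed` is a toy bag-of-words function standing in for a real embedding model, `VectorMemory` is a hypothetical brute-force store rather than an actual vector database, and the stored sentences are made up.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """In-memory stand-in for a vector database (brute-force similarity search)."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = VectorMemory()
store.add("Employees accrue 20 days of annual leave per year.")  # made-up example data
store.add("The office kitchen is cleaned every Friday.")
store.add("Expense reports are due by the fifth of each month.")
top = store.search("How many days of annual leave do employees get?", k=1)
```

A production store would replace the linear scan with an approximate nearest-neighbour index, but the interface (add text, search by similarity) stays much the same.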

---

### 2.4 Application Scenarios

Long-term memory enables:

- **Personalized assistants:** Remember preferences & routines

- **Enterprise knowledge base Q&A:** Instant answers from internal documents

- **Companion robots:** Store shared stories & emotional histories

- **Skill/tool proficiency:** Retain API usage or bug fixes
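
To make the "remember preferences" scenario concrete, here is a rough sketch of structured long-term memory backed by a traditional database, using Python's built-in `sqlite3` module. The `PreferenceStore` class, the `prefs` table, and the `user-42` identifier are hypothetical names chosen for the example.

```python
import os
import sqlite3
import tempfile


class PreferenceStore:
    """Key-value preferences per user, persisted in a SQLite file."""

    def __init__(self, path: str) -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS prefs ("
            "user_id TEXT, key TEXT, value TEXT, PRIMARY KEY (user_id, key))"
        )

    def remember(self, user_id: str, key: str, value: str) -> None:
        # INSERT OR REPLACE overwrites an older value for the same (user_id, key).
        self._conn.execute(
            "INSERT OR REPLACE INTO prefs (user_id, key, value) VALUES (?, ?, ?)",
            (user_id, key, value),
        )
        self._conn.commit()

    def recall(self, user_id: str) -> dict[str, str]:
        rows = self._conn.execute(
            "SELECT key, value FROM prefs WHERE user_id = ?", (user_id,)
        ).fetchall()
        return dict(rows)

    def close(self) -> None:
        self._conn.close()


db_path = os.path.join(tempfile.mkdtemp(), "agent_memory.db")

# Session 1: the agent learns a preference, then the session ends.
session_one = PreferenceStore(db_path)
session_one.remember("user-42", "seat", "window")
session_one.close()

# Session 2: a fresh connection still sees what was stored earlier.
session_two = PreferenceStore(db_path)
recalled = session_two.recall("user-42")
session_two.close()
```

Because the data lives in a file rather than in the context window, it survives the end of the first session, which is the defining property of long-term memory.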

---

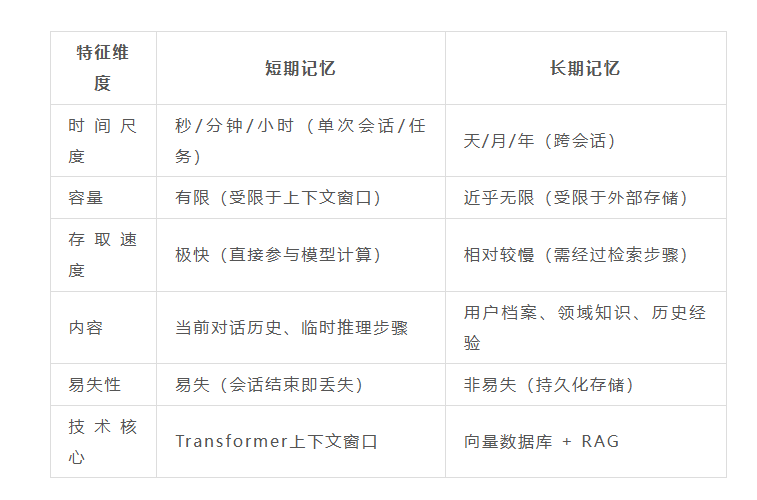

## 3. Core Differences & Synergy

| Feature        | Short-Term Memory         | Long-Term Memory               |
|----------------|---------------------------|--------------------------------|
| Duration       | Temporary (session-bound) | Persistent across sessions     |
| Capacity       | Limited (context window)  | Virtually unlimited            |
| Access         | Instant                   | Requires retrieval             |
| Volatility     | High                      | Low                            |
| Storage medium | In-model context window   | External database/vector store |

---

### How They Work Together: The RAG Example

1. **Trigger:** User asks “What is our latest leave policy?”

2. **Retrieve (Long-term memory):** The query is embedded and matched against policy document excerpts

3. **Inject (Short-term memory):** Retrieved text loaded into model’s context window

4. **Generate:** LLM produces precise answer using enriched context

**Short-term memory** acts as a focused processing workspace, while **long-term memory** serves as a vast knowledge reservoir.
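
The four steps above can be sketched end to end as follows. This is only an outline under stated assumptions: the `DOCUMENTS` excerpts are invented, `retrieve` ranks by simple word overlap as a stand-in for vector similarity search, and `echo_llm` is a placeholder so the example runs without calling any real model.

```python
import re
from typing import Callable

# Hypothetical long-term memory: a few document excerpts (made up for illustration).
DOCUMENTS = [
    "Leave policy: requests must be submitted at least two weeks in advance.",
    "Travel policy: book flights through the internal portal.",
    "Security policy: rotate passwords every ninety days.",
]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, k: int = 1) -> list[str]:
    """Step 2: rank excerpts by word overlap (a stand-in for vector similarity search)."""
    q = tokens(query)
    return sorted(DOCUMENTS, key=lambda d: len(q & tokens(d)), reverse=True)[:k]


def build_prompt(query: str, excerpts: list[str]) -> str:
    """Step 3: inject the retrieved text into the context the model will see."""
    context = "\n".join(f"- {e}" for e in excerpts)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


def answer(query: str, llm: Callable[[str], str]) -> str:
    """Steps 1-4 end to end; `llm` is any function mapping a prompt to text."""
    return llm(build_prompt(query, retrieve(query)))


def echo_llm(prompt: str) -> str:
    # Placeholder model so the sketch runs offline; swap in a real client here.
    return f"[model saw {len(prompt)} characters]"


question = "What is our latest leave policy?"
prompt = build_prompt(question, retrieve(question))
reply = answer(question, echo_llm)
```

Passing the model in as a plain callable keeps the retrieval and injection logic independent of whichever LLM client is eventually used.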

---

## 4. Future Outlook & Challenges

Challenges ahead:

- **Short-term memory limits** — Context window size vs. cost & latency

- **Long-term retrieval quality** — Accuracy, freshness, conflict resolution

- **Memory abstraction/compression** — Storing concepts, not just raw data

- **Security & privacy** — Safeguarding sensitive stored information
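
One possible way to ease the context-window and compression challenges listed above is to fold older turns into a single summary entry while keeping recent turns verbatim. The sketch below assumes a hypothetical `compress` helper; `naive_summarizer` merely truncates each turn and stands in for what would realistically be an LLM-generated summary.

```python
from typing import Callable


def naive_summarizer(turns: list[str]) -> str:
    """Placeholder: keeps the first five words of each turn. A real agent would call an LLM."""
    return " | ".join(" ".join(t.split()[:5]) for t in turns)


def compress(
    history: list[str],
    keep_recent: int,
    summarize: Callable[[list[str]], str] = naive_summarizer,
) -> list[str]:
    """Replace all but the last `keep_recent` turns with a single summary entry."""
    if keep_recent < 0:
        raise ValueError("keep_recent must be non-negative")
    if len(history) <= keep_recent:
        return list(history)
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return [f"Summary of earlier turns: {summarize(older)}"] + recent


history = [
    "user: I need a hotel in Kyoto for three nights",
    "agent: Any budget in mind?",
    "user: Under 150 dollars per night",
    "agent: Found two options near the station",
]
compressed = compress(history, keep_recent=2)
```

The trade-off is lossy recall: details dropped by the summarizer are gone from short-term memory unless they were also written to a long-term store.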

---

### Opportunities

Advanced frameworks may integrate:

- Context compression

- Retrieval optimization


---

## 5. Summary

- **Short-term memory** = agility & immediate reasoning

- **Long-term memory** = continuity & accumulated intelligence

- Combining both enables Agents to be **coherent now** and **smarter over time**

**Key takeaway:**

Mastering both memory types is essential for building credible, capable, and evolving AI Agents.
