Yang Zhilin’s “Overnight Q&A”: The $4.6M Question and Kimi’s Text-Only Path

Moonshot AI’s AMA: Inside the Minds Behind Kimi K2 Thinking

In recent months, the AI landscape has been buzzing about Moonshot AI and its latest release, the Kimi K2 Thinking model. K2 Thinking is known for strong reasoning and coding capabilities. It has matched or outperformed many state-of-the-art models on several widely cited benchmarks, earning recognition from developers worldwide.

To capitalize on this momentum, the Kimi team hosted an AMA (Ask Me Anything) session on Reddit — an unusual move for a Chinese AI company, offering rare direct interaction with the global developer community.

---

Who Joined the AMA?

The AMA featured Moonshot AI’s three co‑founders:

- Yang Zhilin

- Zhou Xinyu

- Wu Yuxin

Yang Zhilin participated under the Reddit username ComfortableAsk4494, answering multiple technical and strategic questions from the community.

---

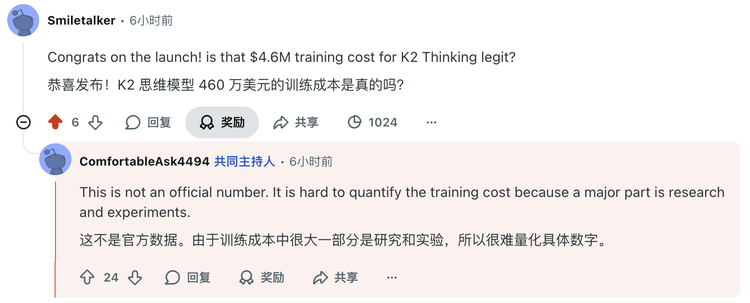

Key Topic 1 — Training Cost & Pure Text Strategy

Q: Is the rumored $4.6M training cost of K2 Thinking real?

> Not an official figure. Since much of the cost comes from ongoing R&D and experimentation, it’s difficult to pin down an exact number.

Q: Why focus on pure text instead of multimodal from the start?

> Training a vision-language model takes time, both to acquire the data and to tune the training setup. We prioritized releasing a text-only model first.

---

What Is an AMA in Tech Communities?

An AMA is a well-known Reddit tradition. Past hosts include Barack Obama, Keanu Reeves, and OpenAI leaders such as Sam Altman. The format is candid and community-driven.

Kimi’s AMA took place in r/LocalLLaMA, a hub for open‑source and locally deployed AI models — popular among hardcore developers.

---

Summary of AMA Highlights

- Training Cost: The rumored $4.6M is not an official figure.

- Training Hardware: H800 GPUs with InfiniBand.

- K3 Architecture: Expected to use a KDA-based hybrid attention design.

- INT4 Precision: Chosen over FP4 for compatibility with non-Blackwell GPUs.

- Multimodal Plans: In progress.

- Muon Optimizer: Scales to 1 trillion parameters.

- K3 Launch: “Before Sam’s trillion‑scale datacenter is built.”

---

Selected AMA Exchanges

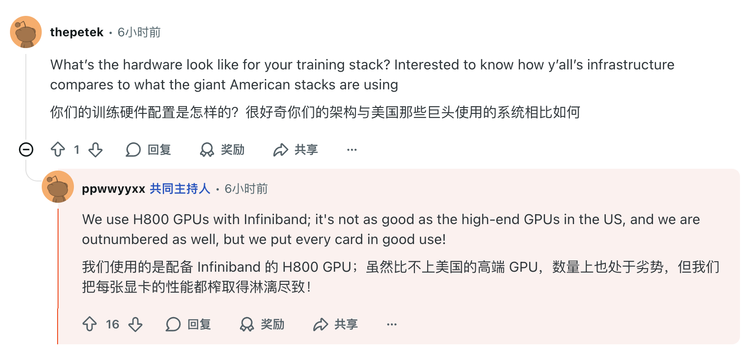

Hardware & Scaling

Q: How does your hardware compare to US giants?

> H800 GPUs with InfiniBand. They are not the most powerful cards in the world, but we push each one as far as it can go.

---

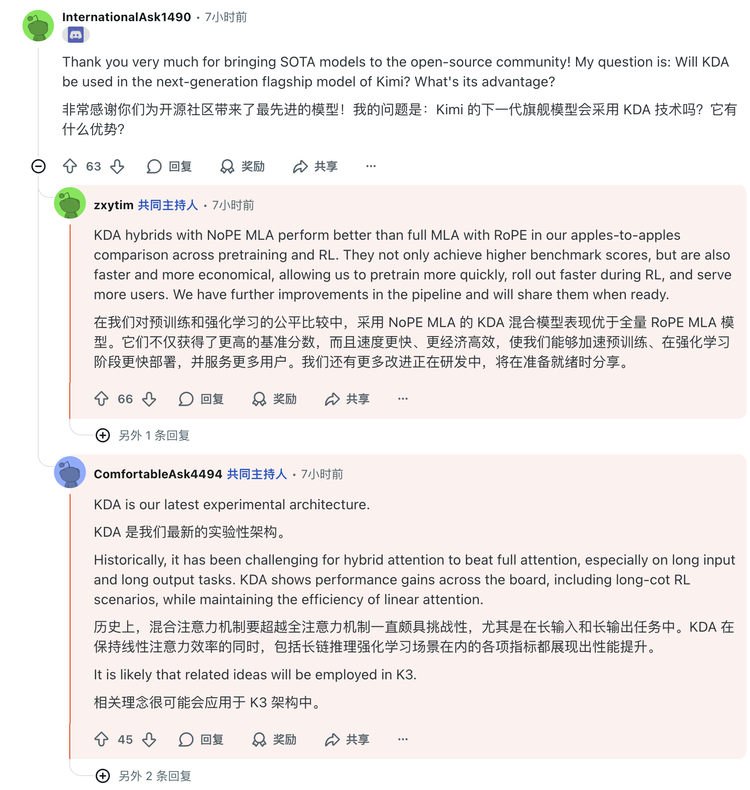

Q: Will Kimi’s next model adopt KDA (Kimi Delta Attention)?

> Yes. A KDA hybrid (KDA layers combined with NoPE MLA layers) outperforms full MLA with RoPE on benchmarks, speed, and cost efficiency. We’ll share further improvements soon.
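The sketch below makes the hybrid idea concrete by laying out one way such a stack could interleave layers. It assumes a 3:1 ratio of KDA layers to full NoPE MLA layers, as described in Moonshot's public Kimi Linear report. Here KDA (Kimi Delta Attention) is a linear-attention layer with a fixed-size state. `build_layer_schedule` and `growing_cache_fraction` are hypothetical helpers. The code only counts which layers keep a KV cache that grows with context length. That count is the intuition behind the cost claim, not a measurement of it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerSpec:
    index: int
    kind: str  # "KDA": linear attention, fixed-size state; "MLA": full attention without RoPE


def build_layer_schedule(num_layers: int, linear_per_full: int = 3) -> list[LayerSpec]:
    """Hypothetical layout: one NoPE MLA layer after every `linear_per_full` KDA layers."""
    period = linear_per_full + 1
    return [
        LayerSpec(i, "MLA" if (i + 1) % period == 0 else "KDA")
        for i in range(num_layers)
    ]


def growing_cache_fraction(schedule: list[LayerSpec]) -> float:
    """Share of layers whose KV cache grows with sequence length (only the MLA layers)."""
    full_attention = sum(1 for layer in schedule if layer.kind == "MLA")
    return full_attention / len(schedule)


layers = build_layer_schedule(8)
print([layer.kind for layer in layers])
# ['KDA', 'KDA', 'KDA', 'MLA', 'KDA', 'KDA', 'KDA', 'MLA']
print(growing_cache_fraction(layers))  # 0.25
```

Under this assumed ratio, only a quarter of the layers keep a cache that grows with context. At long context lengths the cache footprint therefore shrinks roughly in proportion, while the periodic full-attention layers still provide exact global lookups.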

---

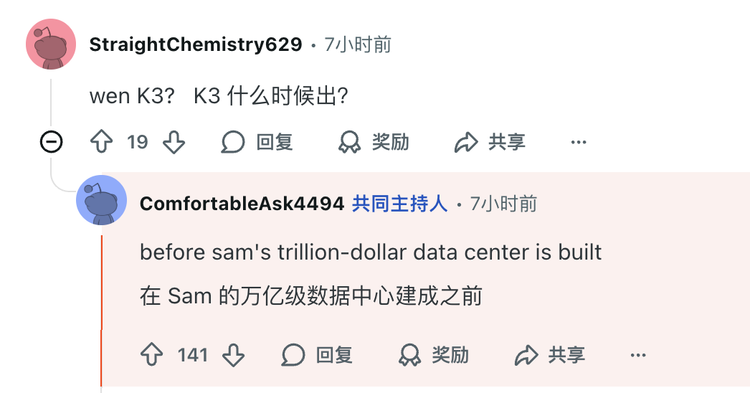

Release Timeline

Q: When will K3 be launched?

> Before Sam’s trillion‑scale datacenter is built.

---

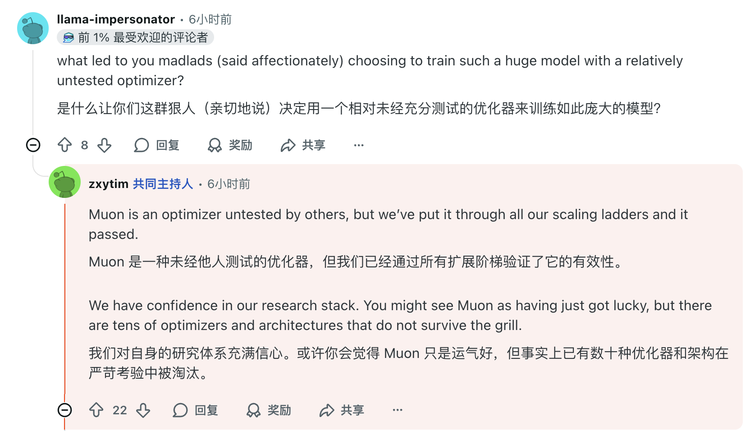

Optimizer Choice

Q: Why use an untested optimizer like Muon?

> We tested it at all scaling stages and eliminated dozens of less effective alternatives. We trust our research process.
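Muon's core step is simple to sketch for readers who have not met it. The snippet below follows the public Muon reference implementation in outline. It accumulates momentum for a 2D weight matrix, then approximately orthogonalizes that momentum with a quintic Newton-Schulz iteration before applying it. The three coefficients come from that reference code. The learning rate, momentum value, and pure-Python matrix helpers are illustrative assumptions, and this is not Moonshot's production optimizer.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product; fine for the tiny matrices in this sketch."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def lincomb(alpha: float, a: Matrix, beta: float, b: Matrix) -> Matrix:
    """Elementwise alpha * a + beta * b."""
    return [[alpha * x + beta * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def newton_schulz(g: Matrix, steps: int = 5, eps: float = 1e-7) -> Matrix:
    """Approximately orthogonalize g: keep its singular vectors, push singular values toward 1."""
    a, b, c = 3.4445, -4.7750, 2.0315  # quintic coefficients from the public Muon reference code
    norm = sum(v * v for row in g for v in row) ** 0.5
    x = [[v / (norm + eps) for v in row] for row in g]  # Frobenius scaling keeps singular values <= 1
    tall = len(x) > len(x[0])
    if tall:
        x = transpose(x)  # iterate on the wide orientation so X X^T is the smaller Gram matrix
    for _ in range(steps):
        gram = matmul(x, transpose(x))                  # A = X X^T
        poly = lincomb(b, gram, c, matmul(gram, gram))  # B = b*A + c*A^2
        x = lincomb(a, x, 1.0, matmul(poly, x))         # X <- a*X + B X
    return transpose(x) if tall else x


def muon_step(weight: Matrix, grad: Matrix, momentum: Matrix,
              lr: float = 0.02, beta: float = 0.95) -> tuple[Matrix, Matrix]:
    """Simplified Muon update: no Nesterov, weight decay, or shape-dependent LR scaling."""
    momentum = lincomb(beta, momentum, 1.0, grad)  # heavy-ball momentum buffer
    update = newton_schulz(momentum)
    return lincomb(1.0, weight, -lr, update), momentum
```

The Newton-Schulz loop uses only matrix products, which is why it runs efficiently on GPUs compared with computing an exact SVD of every update.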

---

INT4 vs FP4

Q: Does FP4 offer advantages over INT4?

> We chose INT4 for better compatibility with non-Blackwell GPUs, using the open-source Marlin kernel.
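Some context on the FP4 comparison helps here. Native FP4 tensor-core support arrives with NVIDIA's Blackwell generation. INT4 weight-only formats, by contrast, can run on earlier GPUs through mixed-precision kernels such as Marlin, which dequantize 4-bit weights on the fly inside an FP16 matrix multiply. The sketch below shows the basic idea of symmetric, group-wise INT4 weight quantization and the packing of two 4-bit values per byte. The group size of 32, the [-8, 7] integer range, and all function names are illustrative assumptions. This is not Marlin's memory layout or Moonshot's quantization recipe.

```python
def quantize_group(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric INT4: map floats to integers in [-8, 7] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def pack_int4(q: list[int]) -> bytes:
    """Store two signed 4-bit values per byte (low nibble first)."""
    if len(q) % 2:
        q = q + [0]  # pad to an even count
    return bytes((lo & 0xF) | ((hi & 0xF) << 4) for lo, hi in zip(q[0::2], q[1::2]))


def unpack_int4(data: bytes, count: int) -> list[int]:
    out = []
    for byte in data:
        for nibble in (byte & 0xF, byte >> 4):
            out.append(nibble - 16 if nibble >= 8 else nibble)  # restore the sign
    return out[:count]


def quantize(weights: list[float], group_size: int = 32) -> list[tuple[bytes, float, int]]:
    """Quantize each group of `group_size` weights with its own scale."""
    groups = []
    for start in range(0, len(weights), group_size):
        q, scale = quantize_group(weights[start:start + group_size])
        groups.append((pack_int4(q), scale, len(q)))
    return groups


def dequantize(groups: list[tuple[bytes, float, int]]) -> list[float]:
    out = []
    for packed, scale, count in groups:
        out.extend(v * scale for v in unpack_int4(packed, count))
    return out
```

With a group size of 32, each group stores 16 bytes of packed weights plus one scale (2 bytes if kept in FP16). That is about 28% of the 64 bytes the same weights occupy in FP16.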

---

Token Efficiency

Q: K2 uses a lot of tokens. Will this improve?

> Future models will build token efficiency into the reward mechanism.
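The answer does not say how efficiency would enter the reward. One common way to express the idea is a length-aware reward that pays for correct answers and subtracts a penalty for tokens spent beyond a budget. The function below is a purely hypothetical illustration. `budget`, `penalty_weight`, and the capped linear penalty are assumptions, not Moonshot's design.

```python
def efficiency_aware_reward(correct: bool, tokens_used: int,
                            budget: int = 4096, penalty_weight: float = 0.5) -> float:
    """Hypothetical reward: 1.0 for a correct answer, minus a capped penalty for exceeding a token budget."""
    if tokens_used < 0 or budget <= 0:
        raise ValueError("tokens_used must be >= 0 and budget must be > 0")
    base = 1.0 if correct else 0.0
    overrun = max(0, tokens_used - budget) / budget  # fraction of the budget exceeded
    penalty = penalty_weight * min(overrun, 1.0)     # capped at penalty_weight
    return base - penalty


for correct, tokens in [(True, 2000), (True, 6144), (False, 8192), (True, 20000)]:
    print(correct, tokens, efficiency_aware_reward(correct, tokens))
```

This sketch applies the penalty to every rollout, so a wrong answer that rambles scores below a wrong answer that stops early. Whether to do that is one of several design choices such a reward would have to settle.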

---

Development Challenges

Q: Biggest challenge in building K2 Thinking?

> Supporting interleaved “think–tool–think–tool” cycles in an LLM, which is technically complex and relatively new.
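In the “think–tool–think–tool” pattern, the model alternates between reasoning and tool calls within a single task. Each tool result feeds into the next round of reasoning until the model decides to answer. The loop below is a minimal, self-contained sketch of that control flow. `scripted_model`, the `TOOLS` registry, and the transcript format are hypothetical stand-ins. A real system would call an LLM instead and would also have to manage context length across many steps.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    thought: str
    tool: Optional[str] = None  # tool to call next, or None when the model is ready to answer
    tool_input: str = ""
    answer: Optional[str] = None


# Hypothetical tool registry: a single tool that sums "a+b+..." expressions.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}


def scripted_model(history: list[str]) -> Step:
    """Stand-in for an LLM: picks the next step from the transcript so far."""
    observations = [h.split(": ", 1)[1] for h in history if h.startswith("observation: ")]
    if not observations:
        return Step("First I need 2+3.", tool="add", tool_input="2+3")
    if len(observations) == 1:
        return Step(f"Now add 4 to {observations[0]}.", tool="add", tool_input=f"{observations[0]}+4")
    return Step("I have what I need.", answer=observations[-1])


def run_agent(task: str, model: Callable[[list[str]], Step],
              max_steps: int = 10) -> tuple[str, list[str]]:
    """Alternate think, tool, think, tool until the model produces an answer."""
    history = [f"task: {task}"]
    for _ in range(max_steps):
        step = model(history)
        history.append(f"thought: {step.thought}")  # think
        if step.answer is not None:
            history.append(f"answer: {step.answer}")
            return step.answer, history
        result = TOOLS[step.tool](step.tool_input)    # act
        history.append(f"call: {step.tool}({step.tool_input})")
        history.append(f"observation: {result}")      # feed back into the next think
    raise RuntimeError("step budget exhausted without an answer")


answer, transcript = run_agent("compute 2+3+4", scripted_model)
print(answer)  # 9
```

The hard part in practice is not this loop but training the model to decide, at each think step, whether another tool call is worth making.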

---

Architectural Breakthroughs

Q: Next big LLM architecture leap?

> The Kimi Linear architecture shows promise, possibly combined with sparsity techniques.

---

Industry & Competition

Q: Why is OpenAI spending so much?

> No idea. Only Sam knows. We have our own approach.

---

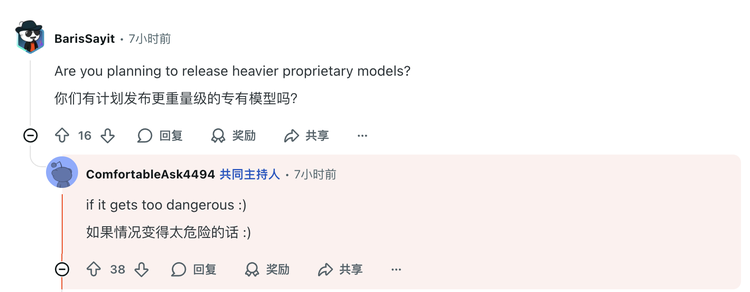

Closed‑Source Potential

Q: Will Kimi release heavier closed‑source models?

> Only if it becomes too risky.

---


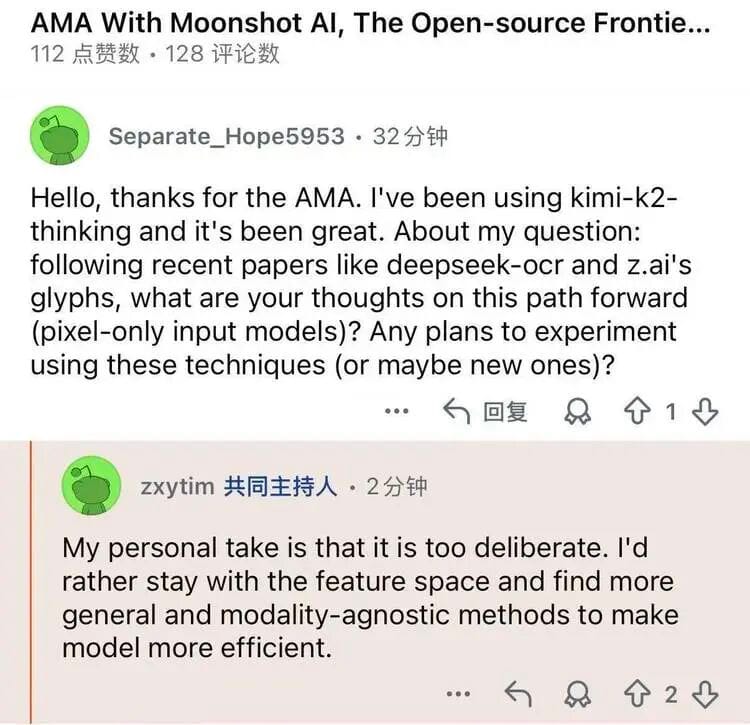

Bold Opinions on Vision vs Text Approaches

A user praised the DeepSeek-OCR model for using a vision-based approach (compressing text into image tokens) to ease token storage and memory limits.

Zhou Xinyu’s response:

> “Personally, I think that approach is too forced. I’d rather stay within the feature space and find more general, pattern‑agnostic methods to make the model more efficient.”

---

Takeaway

This rare co‑founder‑level AMA not only showcased Kimi’s technical direction but also revealed the team’s candid stance on industry trends.

For other AI research teams, this kind of open dialogue can humanize communication and spark deeper collaboration with the community.

---

In short: Kimi’s AMA was more than a Q&A — it was a statement of openness, technical ambition, and community engagement that other AI innovators might well learn from.