Weng Li’s “Elegant” Approach to Strategy Distillation — How It Redefines Cost and Efficiency | New Paper Analysis

A Leap of Imagination

AI Future Compass — a paper-interpretation column breaking down top conference and journal highlights with frontline perspectives and accessible language.

---

Breaking the "Impossible Triangle"

For years, post-training of models has been trapped in an impossible triangle:

Researchers want models to have strong capabilities, low training costs, and controllable alignment — all at once.

Limitations of Mainstream Methods:

- SFT & Distillation

- Pros: Simple, parallelizable

- Cons: "Cramming-style" training makes models brittle; they fail in unknown scenarios or when errors occur.

- RL (Reinforcement Learning)

- Pros: Enables exploration and adaptability

- Cons: Sparse rewards require costly large-scale trial-and-error.

---

On-Policy Distillation (OPD): A New Path

Against this backdrop, Thinking Machines’ deep dive into Qwen’s work introduces On-Policy Distillation (OPD) — aiming to solve the impossible triangle.

About Thinking Machines

Founded in February 2025 by former OpenAI CTO Mira Murati, the team includes alumni from OpenAI, DeepMind, Meta, and core contributors to ChatGPT and DALL·E.

Notably, Lilian Weng, former VP of Safety Research at OpenAI, is a co-founder.

Lilian Weng describes OPD as an "elegant combination" — a surprising fusion that addresses the challenges of both major paradigms.

---

01 How OPD Differs from Traditional Distillation

The Shortcomings of Traditional Distillation

- Off-policy: Student learns from static, perfect trajectories generated by the teacher.

- Students see only states the teacher would encounter — leading to compounding errors when reality deviates.

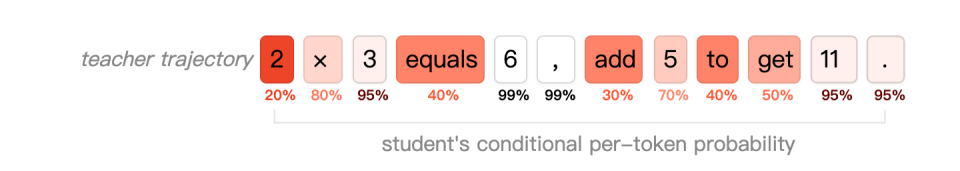

OPD’s Shift to On-Policy

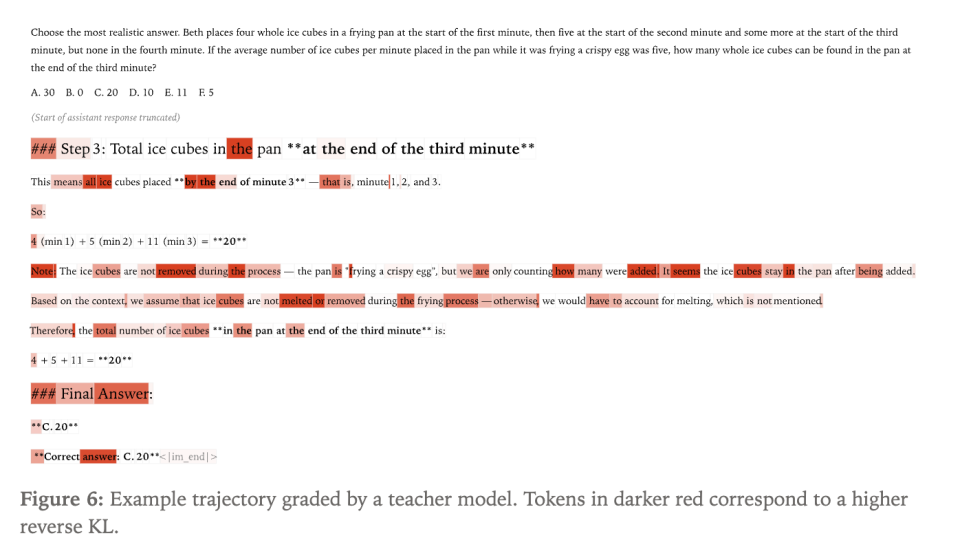

- Data come from the student’s own real-time outputs, not fixed teacher demos.

- Students receive dense, token-level guidance from the teacher on their own (possibly flawed) trajectories.

- This lets students learn from mistakes, improving robustness.

Key benefit:

OPD trains students to think in states they will actually encounter, fixing the compounding error problem of SFT and distillation.

---

02 Fusing RL’s Resilience with SFT’s Efficiency

RL’s Strength

- Learns from real error states.

- Avoids SFT’s brittle failure cascades.

RL’s Weakness

- Inefficient due to sparse rewards and hard credit assignment.

- Process Reward Models (PRM) help but require costly training.

OPD’s Elegant Simplification

- No PRM needed — the teacher provides direct KL divergence feedback.

- RL’s adaptability + SFT’s dense supervision = High efficiency and enhanced stability.

OPD brings RL’s soul (on-policy data) into SFT’s body (dense divergence loss) — avoiding SFT’s fragility and RL’s sluggishness.

---

03 Cost Efficiency: The Second Layer of Elegance

At first glance, OPD seems costly — the large teacher model is invoked every step.

But real numbers tell a different story:

- SFT: Cheap at first, but cost skyrockets for small performance gains beyond initial plateaus.

- RL: Wastes most compute on unproductive exploration.

- OPD: Higher per-step cost, yet vastly higher sample efficiency — no step is wasted.

Example:

- To go from 60% to 70% accuracy:

- SFT: Needs ~5× more data (costly brute force)

- OPD: A small, targeted correction phase after SFT 400K data suffices.

Result: OPD extra cost = only 1/13.6 of SFT’s brute force approach.

It replaces wasted trials with targeted, high-quality guidance.

---

04 The Leap of Imagination

Why was OPD not broadly adopted earlier?

Source Inspirations:

- Qwen 3 (engineering foundation)

- Agarwal’s research

- Tinker’s conceptual work

Cultural Barriers:

- SFT camp: Obsessed with keeping costs low, dismissed online calls to large models.

- RL camp: Fixated on reward models, undervalued imitation learning.

OPD broke both biases:

- An online method that’s cheaper overall than SFT due to sample efficiency.

- Dispenses with complex reward models entirely.

---

Practical Applications & Content Platforms

For practitioners exploring OPD or similar high-efficiency techniques, platforms such as AiToEarn官网 streamline the journey from idea to impact:

- AI-powered content generation

- Cross-platform publishing to Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X (Twitter)

- Performance analytics & model leaderboards — AI模型排名.

This is akin to OPD’s philosophy: minimal waste, maximum reach.

---

Final Thought

OPD = On-Policy sampling + KL divergence loss

A minimalistic blend solving two complex paradigms’ weaknesses (SFT & RL) — a true leap of imagination.

Technical report:

https://thinkingmachines.ai/blog/on-policy-distillation/

---

Recommended Reading

Silicon Valley Launches Race for Custom “Perfect Babies”

"AI Godfather" Bengio Teams Up with Industry All-Stars to Publish a Major Paper

Japan’s Trapped Data Centers

---

---

In essence: OPD shows how simple combinations can solve deeply complex challenges, much like how a well-designed platform can multiply the reach of creative work without unnecessary overhead.