“White‑Label AI Products Marked Up 1,000×! Out of 200 Firms, Only 18 Truly Innovate; 38 Have Over 90% Code Similarity — Are Founders Just Trying to Bluff?”

Organizing the AI Startup Landscape

> “Almost every AI application-layer startup is likely to be crushed by the rapid expansion of foundational model providers.”

> — Former Reddit CEO Yishan Wong on X (20M views, widely debated)

Even Elon Musk reposted and commented: “Seems accurate.”

---

The Harsh Reality for AI Startups

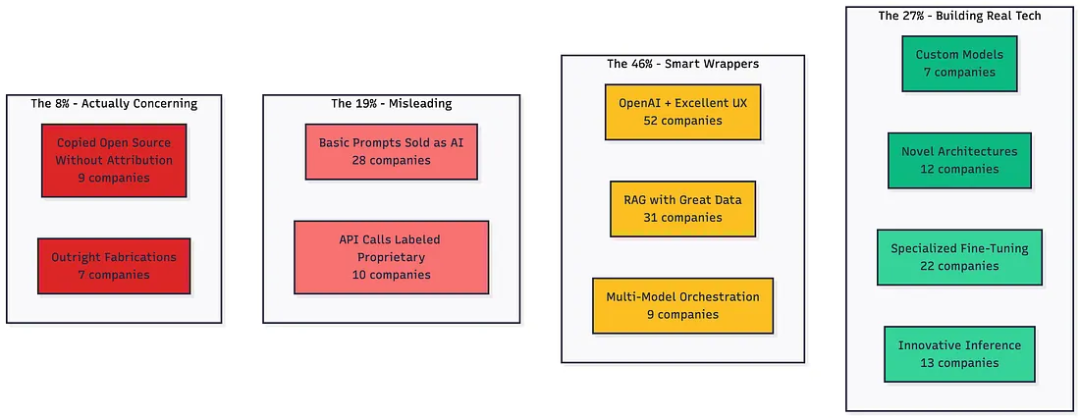

A recent research article (3k+ likes) revealed:

- 73% of 200 funded AI companies (operating >6 months) are simply “wrapping” third-party APIs.

- OpenAI’s GPT models remain the dominant backend, with Anthropic’s Claude increasingly present across a range of applications.

Research by Teja Kusireddy

Software engineer Teja Kusireddy used:

- Web traffic monitoring

- Code decompilation

- API call tracking

Findings:

- Only 18 companies demonstrated genuine technical innovation.

- 12 exposed their API keys directly in frontend code.

Kusireddy clarified:

> All analysis was based on publicly accessible data and anonymized, with no private access and no ToS violations.

Post-Investigation Reactions

- 7 founders contacted him privately.

- Responses ranged from defensive to grateful.

- Some sought advice on reframing “proprietary AI claims” as “built on top of leading APIs.”

- One founder confessed: “I know we’re lying. But investors buy into this, and everyone does it. So how do we stop?”

---

Case Study: Three Weeks Reverse-Engineering 200 AI Startups

Kusireddy describes:

> At 2 AM, while debugging a webhook, I noticed that a company claiming its own deep learning infrastructure was calling OpenAI’s API every few seconds. They had just raised $4.3M for “fundamentally differentiated core technology.” I decided to dig deeper, using real, traceable data.

---

Implementation Plan & Findings

Scraping Architecture Overview

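```python
import asyncio

import aiohttp
from playwright.async_api import async_playwright

# Per-startup analysis step. The helpers called here (capture_network_traffic,
# extract_javascript, monitor_requests, scrape_marketing_copy,
# identify_real_stack, detect_third_party_apis) belong to the author's
# pipeline and are not shown in the article.
async def analyze_startup(url):
    headers = await capture_network_traffic(url)
    js_bundles = await extract_javascript(url)
    api_calls = await monitor_requests(url, duration=60)  # 60 s capture window

    return {
        'claimed_tech': scrape_marketing_copy(url),
        'actual_tech': identify_real_stack(headers, js_bundles, api_calls),
        'api_fingerprints': detect_third_party_apis(api_calls),
    }
```

Process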

- Data collection: scraped company sites listed on Y Combinator, Product Hunt, and LinkedIn.

- Traffic monitoring: captured 60 seconds of network traffic per company.

- Code analysis: decompiled and examined each site’s JavaScript bundles.

- API fingerprinting: cross-referenced outbound calls with known AI providers.

- Claim vs. reality: compared marketing copy with the actual technical implementation.

Excluded: Companies younger than 6 months.
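The API-fingerprinting step can be illustrated with a hypothetical version of the `detect_third_party_apis` helper named in the overview above; the article does not show the real one. This minimal sketch assumes `api_calls` is simply a list of captured request URLs and only knows the three provider hosts listed in the quick guide further down; a real fingerprint table would be much larger.

```python
from urllib.parse import urlparse

# Hypothetical fingerprint table (hostname -> provider); extend as needed.
KNOWN_AI_HOSTS = {
    "api.openai.com": "OpenAI",
    "api.anthropic.com": "Anthropic",
    "api.cohere.ai": "Cohere",
}

def detect_third_party_apis(request_urls):
    """Count captured requests per known AI provider.

    Returns a dict such as {"OpenAI": 2}; unknown hosts are ignored.
    """
    hits = {}
    for url in request_urls:
        host = urlparse(url).hostname or ""  # urlparse lowercases the hostname
        for known_host, provider in KNOWN_AI_HOSTS.items():
            # Match the exact host or a subdomain of it, not a lookalike domain
            if host == known_host or host.endswith("." + known_host):
                hits[provider] = hits.get(provider, 0) + 1
    return hits
```

Matching on the parsed hostname rather than a substring of the whole URL avoids false positives from lookalike domains such as `api.openai.com.example.net`.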

---

73% Failed to Deliver

The gap between hype and reality was stark.

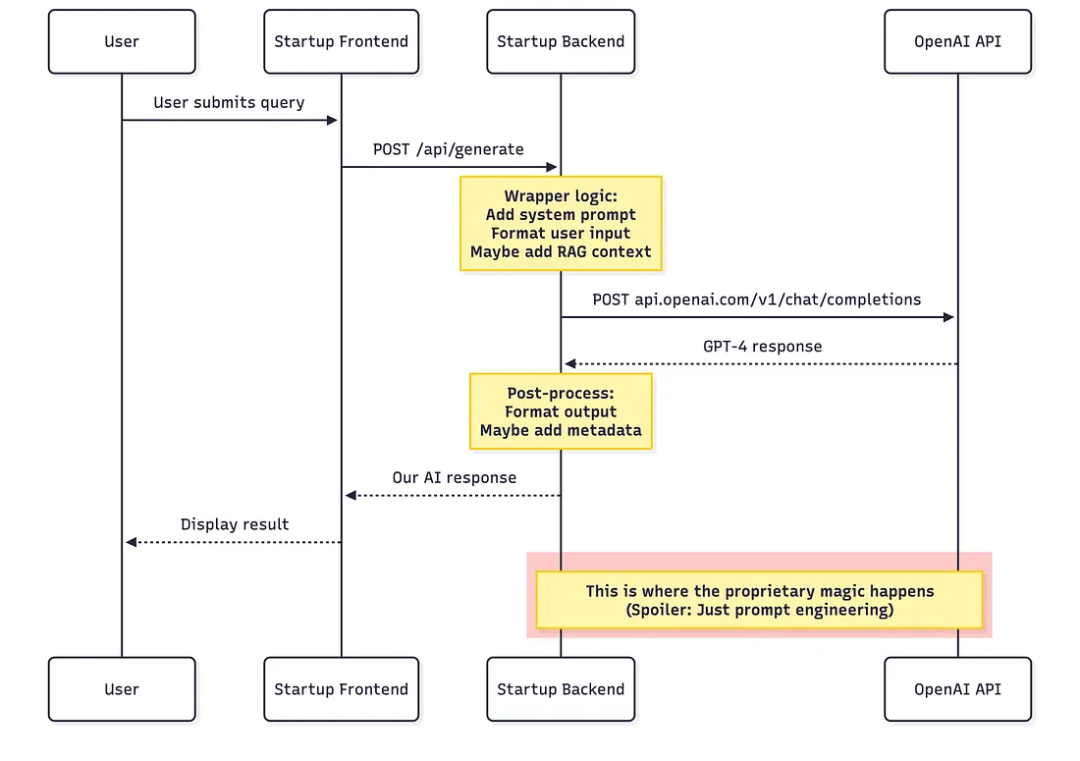

Pattern 1: “Proprietary Model” → GPT‑4 Wrapper

Among 37 companies claiming proprietary LLMs, 34 were simple wrappers.

Technical Signs

- Outbound calls to `api.openai.com`

- `OpenAI-Organization` headers present

- GPT‑4 token usage and latency patterns

- Exponential-backoff retries when rate-limited
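The backoff signal can be checked mechanically. This is a minimal sketch, assuming you have the timestamps (in seconds) of repeated retries of the same request; the function name, the doubling factor, and the 25% tolerance are illustrative choices, not values from the study. Many HTTP clients back off exponentially, so a match is supporting evidence rather than proof.

```python
def looks_like_exponential_backoff(timestamps, factor=2.0, tolerance=0.25):
    """Heuristic: do the gaps between retries grow by roughly `factor` each time?

    `timestamps` are retry times in seconds; at least 4 are needed (3 gaps).
    """
    if len(timestamps) < 4:
        return False
    # Time between consecutive retries
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    if any(gap <= 0 for gap in gaps):
        return False
    # Ratio of each gap to the previous one; ~2.0 for classic doubling backoff
    ratios = [b / a for a, b in zip(gaps, gaps[1:])]
    low, high = factor * (1 - tolerance), factor * (1 + tolerance)
    return all(low <= r <= high for r in ratios)
```

The tolerance leaves room for the random jitter that clients commonly add to each delay.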

Example:

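```javascript
import OpenAI from "openai";

const openai = new OpenAI(); // reads OPENAI_API_KEY from the environment
const COMPANY_NAME = "ExampleCo"; // placeholder for the startup's brand

// The entire "proprietary model": a system prompt plus a GPT-4 call
async function generateResponse(userQuery) {
  const systemPrompt = `You are an expert assistant for ${COMPANY_NAME}.
Always respond professionally.
Never mention OpenAI.`;

  return await openai.chat.completions.create({
    model: "gpt-4",
    messages: [
      { role: "system", content: systemPrompt },
      { role: "user", content: userQuery },
    ],
  });
}
```

Cost Breakdown: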

- GPT‑4 API: ~$0.033/query

- Retail: $2.50/query (~75× markup)
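The ~$0.033 figure is consistent with, for example, a query of 500 input tokens and 300 output tokens at GPT‑4 (8K context) launch pricing of $0.03 per 1K input tokens and $0.06 per 1K output tokens. The token counts are an assumption for illustration; the source does not give them.

```python
# GPT-4 (8K context) launch list prices, USD per 1,000 tokens.
PRICE_PER_1K_INPUT = 0.03
PRICE_PER_1K_OUTPUT = 0.06

def cost_per_query(input_tokens, output_tokens):
    """API cost in USD for one chat completion."""
    return (input_tokens / 1000) * PRICE_PER_1K_INPUT + (output_tokens / 1000) * PRICE_PER_1K_OUTPUT

def markup(retail_price, api_cost):
    """How many times the retail price exceeds the underlying API cost."""
    return retail_price / api_cost

# Assumed token counts (not from the study): 0.015 + 0.018 = 0.033 USD
cost = cost_per_query(500, 300)
print(f"API cost: ${cost:.3f}/query, markup at $2.50: {markup(2.50, cost):.1f}x")
```

Longer prompts or answers raise the API cost and shrink the markup, so the ~75× figure depends on typical query size.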

---

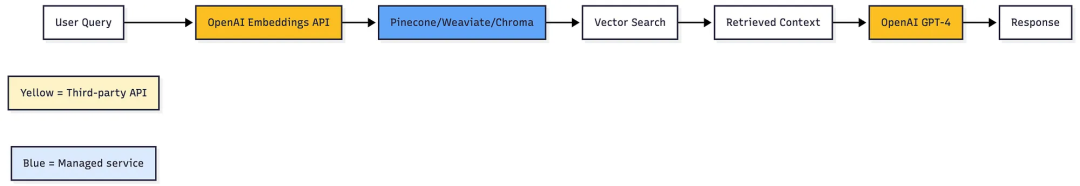

Pattern 2: RAG Architecture Hidden Behind Buzzwords

Claim:

> “Advanced neural retrieval tech based on custom embedding models.”

Reality (common stack):

- Embedding: OpenAI `text-embedding-ada-002`

- Vector DB: Pinecone / Weaviate

- Generation: GPT‑4

Example:

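```python
import os

from openai import OpenAI      # openai>=1.0 client; the 0.x module-level calls are deprecated
from pinecone import Pinecone  # pinecone>=3.0 client; replaces the pinecone.init() style

class ProprietaryAI:
    def __init__(self):
        self.client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
        self.index = Pinecone(api_key=os.getenv("PINECONE_API_KEY")).Index("knowledge-base")

    def answer_question(self, question):
        # 1. Embed the question with OpenAI's stock embedding model
        embedding = self.client.embeddings.create(input=question, model="text-embedding-ada-002")
        # 2. Fetch the 5 nearest chunks from the vector database
        results = self.index.query(vector=embedding.data[0].embedding, top_k=5, include_metadata=True)
        context = "\n\n".join(m.metadata["text"] for m in results.matches)
        # 3. Paste the retrieved text into a GPT-4 prompt
        response = self.client.chat.completions.create(model="gpt-4", messages=[
            {"role": "system", "content": f"Use this context: {context}"},
            {"role": "user", "content": question},
        ])
        return response.choices[0].message.content
```

Cost: ~$0.002/query

Markup: 250–1000× at retail (a retail price of roughly $0.50–$2.00 per query).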

---

Pattern 3: “Fine-tuned Model” Actually Means OpenAI Fine-Tuning API

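Only 7% train their own models from scratch (on AWS SageMaker or Google Vertex AI).

Most use OpenAI’s fine-tuning API, which essentially means uploading example prompts and responses to OpenAI; OpenAI then trains and hosts the resulting model, which is called through the same API.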
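To see how little is involved, here is a minimal sketch of the training data such a “fine-tuned model” starts from: JSON Lines in OpenAI’s chat fine-tuning format, one `{"messages": [...]}` object per line. The example content and the `to_jsonl` helper are hypothetical. With the `openai` Python SDK (v1+), the resulting `.jsonl` file would then be uploaded with `client.files.create(file=..., purpose="fine-tune")` and a job started with `client.fine_tuning.jobs.create(training_file=..., model=...)`.

```python
import json

# Hypothetical examples in OpenAI's chat fine-tuning format.
EXAMPLES = [
    {"messages": [
        {"role": "system", "content": "You are a support assistant for ExampleCo."},
        {"role": "user", "content": "How do I reset my password?"},
        {"role": "assistant", "content": "Open Settings > Account and choose Reset password."},
    ]},
    {"messages": [
        {"role": "system", "content": "You are a support assistant for ExampleCo."},
        {"role": "user", "content": "Can I export my data?"},
        {"role": "assistant", "content": "Yes. Open Settings > Data and click Export."},
    ]},
]

def to_jsonl(records):
    """Serialize records as JSON Lines: one compact JSON object per line."""
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)

print(to_jsonl(EXAMPLES))
```

A few hundred such lines, written to a file, are often the whole “proprietary training set.”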

---

Quick Guide: Detect API Wrappers in 30 Seconds

- Network traffic: press F12, open the Network tab, and trigger the AI feature. Look for requests to `api.openai.com`, `api.anthropic.com`, or `api.cohere.ai`.

- Latency patterns: response times of ~200–350 ms are a likely OpenAI latency signature.

- Code search: search the page source for `openai`, `anthropic`, `claude`, `cohere`, or `sk-proj-`.

- Marketing buzzword check: vague phrases (“advanced AI”, “exclusive neural engine”) often hide wrappers.
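The network and code-search checks can also be run offline. Chrome and Firefox DevTools can export the Network tab as a HAR file (JSON with a `log.entries` list, each entry holding `request.url` and a total `time` in milliseconds). The minimal sketch below, with hypothetical function names, lists requests to the provider hosts above, reports their median latency for comparison with the ~200–350 ms band, and searches page source or JS bundle text for the marker strings. The key regex is an assumption about what a pasted OpenAI project key looks like. It only sees calls made from the browser; a wrapper that proxies through its own backend will not expose these hosts.

```python
import json
import re
import statistics
from urllib.parse import urlparse

# Provider hosts and marker strings taken from the checklist above.
AI_HOSTS = {"api.openai.com", "api.anthropic.com", "api.cohere.ai"}
MARKERS = ("openai", "anthropic", "claude", "cohere", "sk-proj-")
# Rough pattern for an OpenAI project key embedded in frontend code (assumption).
LEAKED_KEY = re.compile(r"sk-proj-[A-Za-z0-9_-]{20,}")

def scan_har(har_text):
    """Return (URLs of AI-provider requests, their median latency in ms or None)."""
    entries = json.loads(har_text)["log"]["entries"]
    hits = [e for e in entries if urlparse(e["request"]["url"]).hostname in AI_HOSTS]
    latency = statistics.median([e["time"] for e in hits]) if hits else None
    return [e["request"]["url"] for e in hits], latency

def scan_source(text):
    """Return (marker strings found, substrings that look like leaked keys)."""
    lowered = text.lower()
    found = [m for m in MARKERS if m in lowered]
    return found, LEAKED_KEY.findall(text)
```

For example, after saving the export as `capture.har`, call `scan_har(open("capture.har", encoding="utf-8").read())`; pass the text of each downloaded JS bundle to `scan_source`.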

---

Why This Matters

For investors:

Adjust valuations — you’re often funding prompt engineering, not R&D.

For users:

Recognize how far prices are marked up over the base API cost.

You could often build a similar product quickly yourself.

For developers:

Core functionality can often be cloned in a hackathon.

For the ecosystem:

With 73% of companies overstating their technology, the hype creates a real risk of a bubble.

---

Three Transparent Categories

- Transparent Wrappers – Openly state “Built on GPT‑4,” sell workflows.

- True Builders – Train proprietary models for niche domains.

- Infrastructure Innovators – Create genuinely novel orchestration or retrieval systems.

---

Recommendations

Founders:

- Disclose tech stack

- Compete on UX, domain expertise

- “Built on GPT‑4” is fine — just be honest

Investors:

- Request architecture diagrams and API invoices

- Value wrappers realistically

Customers:

- Inspect browser network traffic

- Ask about infrastructure before paying a premium

---

Conclusion

The AI wrapper era is here, following the same path as cloud, mobile apps, and blockchain before it.

Transparency will win; hype without substance will be exposed.

Even in this hype-heavy market, 27% of the companies studied were building what they claimed.

Honest wrappers can solve real problems.

APIs are tools — just don’t pretend prompts are “exclusive architectures.”

---

Investigate yourself:

F12 → Network → Watch the truth load in real time.

Reference:

https://pub.towardsai.net/i-reverse-engineered-200-ai-startups-73-are-lying-a8610acab0d3
