Who Killed That Great Paper? AI Conference Chaos: Good Papers Rejected, Low-Scoring Ones Overhyped

New Intelligence Report: AI and the Future of Peer Review

---

When AI Decides Which AI Papers Get Accepted

A reviewer for the AAAI 2026 conference described on Reddit what they called “the strangest review process ever”.

They claimed that strong papers were rejected while weak papers advanced, and they suspected that “connections” were at play in certain acceptances.

Even more oddly, AI was used to “summarize” reviewers’ comments — blurring the line between human judgment and algorithmic decision-making.

---

Reviewer Experience at AAAI 2026

Serving as a reviewer for a top AI conference is supposed to be prestigious.

Yet for this anonymous AAAI 2026 reviewer, the process felt chaotic and illogical:

> "This is the most chaotic review process I’ve ever seen."

Complaints from the First Round

- Reviewed 4 papers: scored 3, 4, 5, and 5.

- Some had minor issues but were overall solid.

- Planned to raise scores after discussion.

- Outcome: All were rejected.

Second Round Results

- Assigned new papers whose scores were only 3 and 4.

- Quality clearly worse than in the first round.

---

Evidence of Inconsistent Judgments

One paper received:

- Reviewer A: Score 3 — lengthy critique citing missing technical detail & unclear logic.

- Reviewer B: Score 7 → tried to raise to 8 — brief praise, claiming experiments were limited by regulations (not mentioned by Reviewer A at all).

The whistleblower wondered aloud:

> "Could this be a ‘connected’ paper?"

---

Community Response

The Reddit post exploded, appearing on r/MachineLearning’s front page.

Comments included:

- "I’ve experienced the same thing."

- "AI summarizing reviews + bad batches + AI co-reviewing is a disaster."

- "Manipulated reviews aren’t a bug — they’ve become part of the system."

---

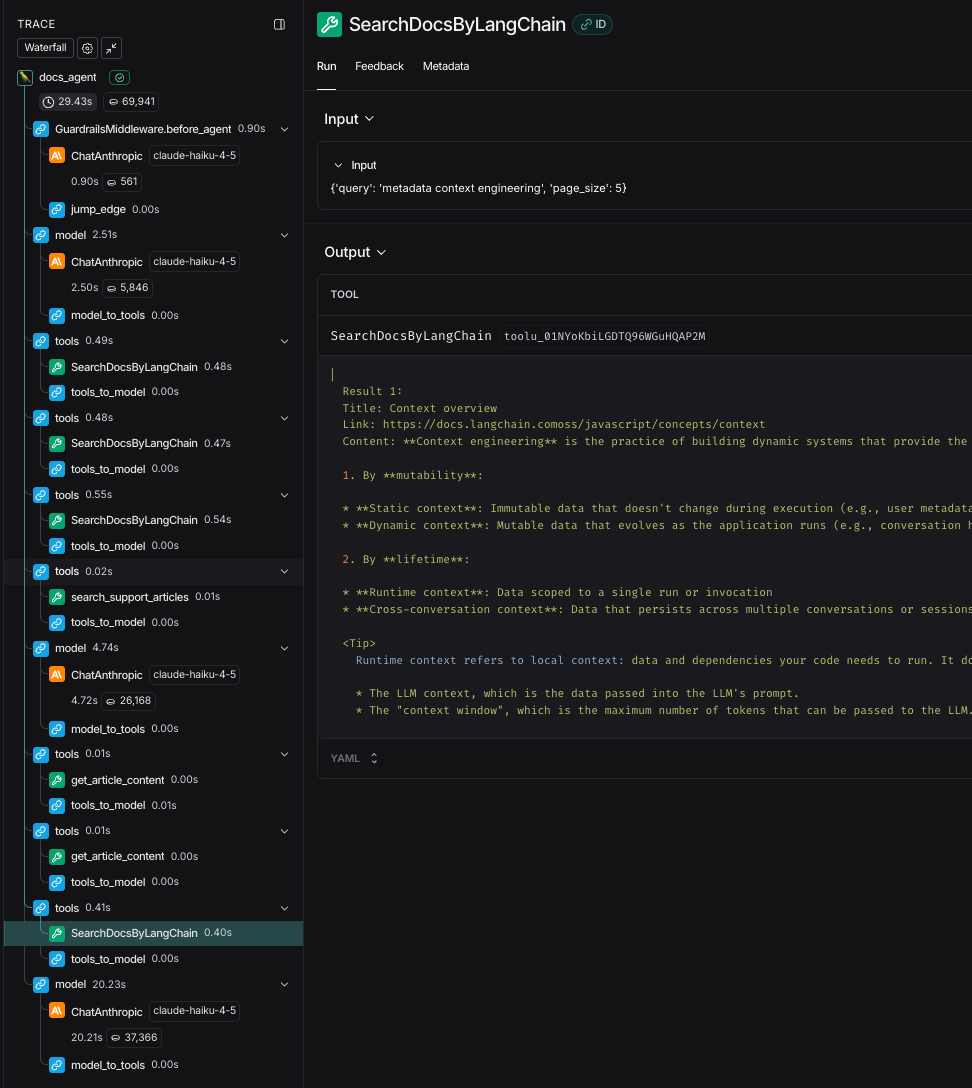

How the AAAI Review Process Works

According to AAAI’s official procedure:

Phase 1 – Initial Screening

- 2 reviewers only.

- If both give low scores → paper is automatically rejected.

- If reviewers disagree or see “potential” → paper moves to Phase 2.

Phase 2 – Secondary Review

- New reviewers and an Area Chair (AC) decide the final scores.

- Reviewer comments & author rebuttals are summarized by AI into a short report for ACs.
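
The Phase 1 routing rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the article does not say what counts as a "low" score, so `LOW_SCORE_CUTOFF` is a hypothetical value, and `Phase1Result` and `phase1_decision` are made-up names, not part of any AAAI system.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical cutoff: the article does not state what counts as a "low" score.
LOW_SCORE_CUTOFF = 4


@dataclass
class Phase1Result:
    paper_id: str
    scores: Tuple[int, int]          # exactly two Phase 1 reviewers
    flagged_potential: bool = False  # True if a reviewer saw "potential"


def phase1_decision(result: Phase1Result, cutoff: int = LOW_SCORE_CUTOFF) -> str:
    """Return "reject" or "phase2" under the assumed two-reviewer rule."""
    both_low = all(score <= cutoff for score in result.scores)
    if both_low and not result.flagged_potential:
        return "reject"  # both reviewers scored low and nobody saw potential
    return "phase2"      # disagreement or "potential" moves the paper on


if __name__ == "__main__":
    print(phase1_decision(Phase1Result("paper-a", (3, 4))))
    print(phase1_decision(Phase1Result("paper-b", (3, 7))))
```

Under this sketch, only two reviewers stand between a submission and an automatic rejection, which is why the choice of cutoff matters so much.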

---

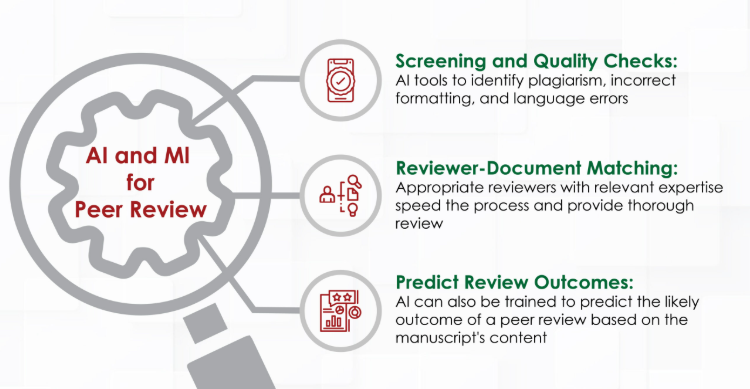

The AI-Assisted Review Controversy

In August 2025, AAAI confirmed that it was piloting an AI-assisted review system:

> "The AI system will assist by summarizing review comments and rebuttals, detecting missing information/conflicts, and providing an overview to the AC."

Concerns:

- While officially “just an assistant,” AI summaries may shape final decisions.

- Reviewers worry that the tone and bias of AI summaries could invisibly influence acceptance decisions.

- Reddit users joked: "Whether a paper gets accepted may depend on the AI’s mood."
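
To make the concern concrete, here is a minimal sketch of how a score summary can hide a conflict unless the spread is reported alongside it. It uses the 3 versus 7 split from the paper described earlier. The function name `summarize_scores` and the threshold `DISAGREEMENT_GAP` are hypothetical, and nothing here reflects how AAAI's actual system works.

```python
from statistics import mean

# Hypothetical threshold: a gap this large is treated as a reviewer conflict.
DISAGREEMENT_GAP = 3


def summarize_scores(scores, gap=DISAGREEMENT_GAP):
    """Summarize reviewer scores while keeping the disagreement visible."""
    spread = max(scores) - min(scores)
    return {
        "mean": mean(scores),       # the "flattened" view of the reviews
        "spread": spread,           # how far apart the reviewers are
        "conflict": spread >= gap,  # flag for the AC instead of averaging away
    }


if __name__ == "__main__":
    print(summarize_scores([3, 7]))
    print(summarize_scores([5, 5]))
```

Both example inputs have the same mean, so a summary that reports only the average would make a sharply contested paper look identical to one the reviewers agreed on.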

---

Potential Problems of the Two-Phase System

- Phase 1 reviewers have veto power — subjective or harsh reviewers can kill papers early.

- Phase 2 reviewers often lack full context from Phase 1.

- AI “flattening” disagreement into summaries risks distortion.

- Concentration of decision power reduces transparency.

Outcome: Higher-quality papers may be discarded early, while weaker papers survive.
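
The veto argument can be illustrated with a toy simulation. Everything in it is an assumption made for illustration: reviewer scores are modeled as the paper's "true" quality plus Gaussian noise, the cutoff of 4 is hypothetical, and the function `phase1_rejection_rate` is not derived from AAAI data or tooling.

```python
import random


def phase1_rejection_rate(true_quality, noise_sd, harshness=0.0,
                          cutoff=4.0, trials=20_000, seed=0):
    """Estimate how often a paper is rejected in Phase 1 under a toy model.

    Each of two reviewers scores the paper as
    true_quality - harshness + Gaussian noise (standard deviation noise_sd).
    The paper is rejected when both scores fall at or below the cutoff.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    rejected = 0
    for _ in range(trials):
        # Two independent reviewers, as in Phase 1.
        scores = [rng.gauss(true_quality - harshness, noise_sd) for _ in range(2)]
        if all(score <= cutoff for score in scores):
            rejected += 1
    return rejected / trials


if __name__ == "__main__":
    for noise_sd in (0.5, 2.0, 3.0):
        rate = phase1_rejection_rate(true_quality=6.0, noise_sd=noise_sd)
        print(f"noise_sd={noise_sd}: rejected in {rate:.1%} of trials")
```

In this model a paper whose assumed quality sits well above the cutoff is almost never rejected when reviewers are consistent, but its rejection rate climbs as scoring noise or harshness increases. The exact figures depend entirely on the assumed parameters.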

---

Academic Trust in Decline

In AI research, peer review has long been the quality gatekeeper.

Now, that gate is harder to guard. Many researchers express:

- Frustration at seeing small cliques dominate citation & acceptance patterns.

- Loss of confidence when efficiency trumps understanding.

A sarcastic Reddit remark:

> "AAAI’s originality checks are more conservative than Liverpool’s transfer policy."

---

The Role of Platforms like AiToEarn

AiToEarn (official website) is an open-source global AI content monetization platform:

- Publishes simultaneously to Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X.

- Integrates AI content generation, cross-platform analytics, and model rankings.

- Transparent and distributed model — a contrast to the opaque academic review systems.

---

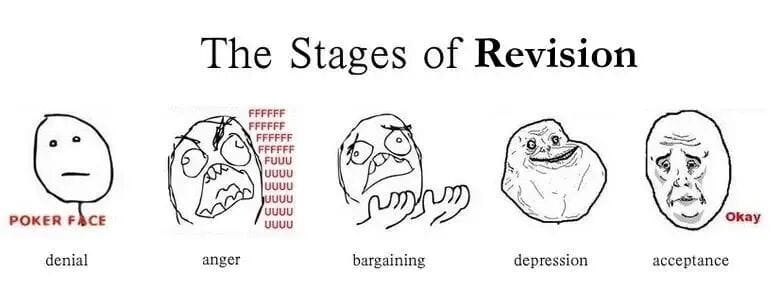

Memes as Protest: “Reviewer 2”

“Reviewer 2” has become an icon of unreasonable rejection.

Humor masks the loss of trust in an academic system increasingly shaped by algorithms.

---

Key Takeaways

- AI-assisted peer review can boost efficiency but risks distorting outcomes.

- Early-stage veto powers combined with opaque AI summaries concentrate decision-making.

- Transparency is critical — both in creative and academic AI applications.

- Trust in peer review is fragile; once lost, it’s hard to rebuild.

---

