Why Google’s Document Retrieval Technology Could Replace Enterprise-Built RAG Stacks

Google’s File Search: Simplifying Enterprise RAG Deployment

Date & Location

December 1, 2025 – 12:54, Beijing

---

Introduction

Enterprises increasingly recognize that Retrieval-Augmented Generation (RAG) enables applications and AI agents to deliver accurate, reliable, and context-aware responses to user queries.

However, traditional RAG systems pose challenges:

- Complex deployment pipelines

- Multiple components to orchestrate

- High engineering overhead and maintenance costs

---

Google’s Solution: File Search in Gemini API

Google has introduced File Search, a fully managed RAG service that abstracts away the retrieval pipeline. This tool:

- Eliminates the need to manually integrate multiple RAG components

- Provides built-in storage, chunking strategies, and embedding generation

- Competes with similar offerings from OpenAI, AWS, and Microsoft

- Requires less orchestration than most enterprise RAG products

Key Quote from Google’s Blog

> “File Search offers a simple, integrated, and scalable way to combine Gemini with your data, providing more accurate, relevant, and verifiable responses.”

---

Pricing & Availability

- Free: storage and embedding generation at query time

- Paid: $0.15 per million tokens for embedding generation when files are first indexed
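
As a rough illustration of how the embedding charge scales, the sketch below multiplies a token count by the $0.15 per million token rate quoted above. The function name and the corpus size are hypothetical, chosen only to make the arithmetic concrete; actual token counts depend on the files and the tokenizer.

```python
from decimal import Decimal

# Rate quoted in the article: $0.15 per 1 million tokens embedded.
RATE_PER_MILLION_TOKENS = Decimal("0.15")


def indexing_cost_usd(total_tokens: int) -> Decimal:
    """Estimate the embedding charge for `total_tokens` tokens (hypothetical helper)."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return RATE_PER_MILLION_TOKENS * Decimal(total_tokens) / Decimal(1_000_000)


# Assumed corpus: 3,000 files averaging 2,000 tokens each (illustrative numbers).
corpus_tokens = 3_000 * 2_000
print(f"${indexing_cost_usd(corpus_tokens):.2f}")  # prints $0.90
```

Under these assumptions, a 6-million-token corpus costs well under a dollar to embed, which is why commenters focus on the free storage and query-time embeddings rather than the indexing fee.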

---

Product Lead Announcement

Logan Kilpatrick posted on X:

> We’re introducing File Search to the Gemini API—our managed RAG solution—with free storage and on-demand embeddings generation during queries.

> This approach greatly simplifies building context-aware AI systems.

---

Technical Highlights

- Built on Google’s Gemini Embedding model, which has ranked at the top of the Massive Text Embedding Benchmark (MTEB)

- Integrated directly into the `generateContent` API

- Uses vector search to interpret query meaning and context

- Retrieves relevant document sections even with imprecise prompts

- Includes citation support with links to exact source content

- Supports multiple file formats: PDF, DOCX, TXT, JSON, plus common programming file types
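
To make the vector search and citation points concrete, here is a minimal sketch of similarity-based retrieval. It is not Google's implementation: the three-dimensional vectors are hand-written stand-ins for real embeddings, and the `Chunk` class, file names, and `search` function are all hypothetical. The idea it shows is that a query is matched by closeness in vector space rather than by exact keywords, and each hit keeps a pointer to its source file so the answer can be cited.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str          # file the chunk came from, kept for the citation
    text: str
    vector: list[float]  # hand-written stand-in for a real embedding


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query_vector: list[float], index: list[Chunk], top_k: int = 2) -> list[Chunk]:
    """Return the top_k chunks most similar to the query vector."""
    ranked = sorted(index, key=lambda c: cosine(query_vector, c.vector), reverse=True)
    return ranked[:top_k]


# Toy index; in a real system the vectors come from an embedding model.
index = [
    Chunk("refund_policy.pdf", "Refunds are issued within 14 days.", [0.9, 0.1, 0.0]),
    Chunk("shipping.txt", "Orders ship in two business days.", [0.1, 0.9, 0.1]),
    Chunk("api_guide.json", "Authenticate with an API key.", [0.0, 0.1, 0.9]),
]

# Pretend embedding of an imprecise prompt such as "can I get my money back?"
query_vector = [0.8, 0.3, 0.0]
for hit in search(query_vector, index):
    print(f"{hit.source}: {hit.text}")
```

The query shares no keywords with the refund chunk, yet that chunk ranks first because its vector points in nearly the same direction. Returning `source` alongside the text is what makes a citation back to the original file possible.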

---

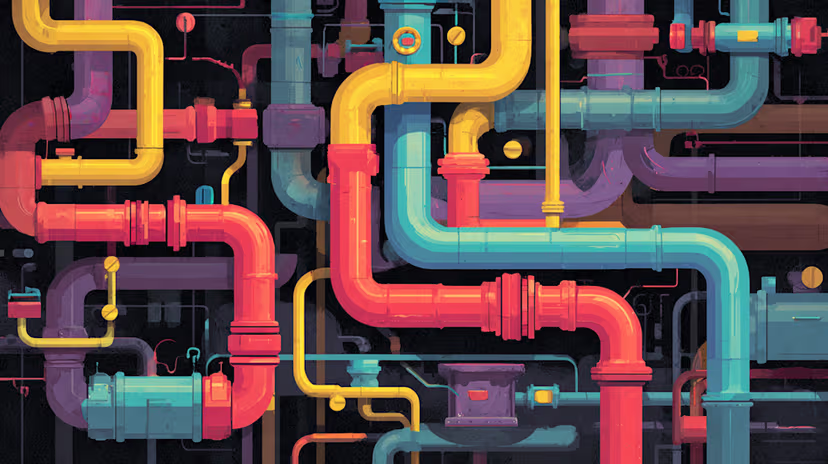

The Traditional RAG Challenge

Building a standard RAG pipeline typically involves:

- File ingestion & parsing

- Chunking text for embeddings

- Embedding generation

- Updates & re-indexing

- Vector database integration (e.g., Pinecone)

- Retrieval logic optimization

- Context window fitting

- Citation handling

These steps require dedicated engineering effort and significant orchestration.
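
Two of the steps above, chunking and context window fitting, can be sketched in a few lines to show the kind of logic a DIY stack has to own. This is an illustrative sketch, not any vendor's method: it splits on whitespace and counts words as a crude proxy for tokens, and the function names and default sizes are assumptions.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def fit_context(ranked_chunks: list[str], budget_words: int) -> list[str]:
    """Keep chunks in ranked order until adding the next one would exceed the word budget."""
    kept, used = [], 0
    for chunk in ranked_chunks:
        n = len(chunk.split())
        if used + n > budget_words:
            break
        kept.append(chunk)
        used += n
    return kept


# Synthetic 120-word document: w0 w1 ... w119
document = " ".join(f"w{i}" for i in range(120))
chunks = chunk_words(document, size=50, overlap=10)
print(len(chunks))                                   # 3
print(len(fit_context(chunks, budget_words=100)))    # 2
```

Even this simplified version involves tuning decisions (window size, overlap, budget) that interact with retrieval quality, which is the maintenance burden a managed service takes over.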

---

Competing Solutions

- OpenAI Assistants API: includes a file search feature that guides AI agents to relevant documents

- AWS Bedrock: introduced a managed data automation service in December 2024

---

Real-World Example: Phaser Studio

Phaser Studio used File Search to process a 3,000-file library for Beam, its AI game generation platform.

Quote – Richard Davey, CTO:

> “The file search capability allows us to instantly pull needed assets — from code snippets to design templates — turning ideas into playable games in minutes instead of days.”

---

Community Reactions

- Robert Cincotta (PhD student): values the tool for harnessing thousands of PDF citations

- Kuwo: highlights disruptive potential due to free storage & embeddings

  - Removes typical bottlenecks & cost barriers

  - Brings RAG prototyping time down to an afternoon

  - Compares it to the “AWS Lambda moment” for RAG

- Another user:

  > “This abstracts away the most annoying 80% of RAG system building. Context awareness will become the new baseline.”

---

Open-Source Complement: AiToEarn

Platforms like AiToEarn can be integrated alongside RAG systems to:

- Generate AI content

- Publish across multiple platforms (Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, etc.)

- Perform analytics & track AI model rankings

---

Conclusion

Google’s File Search abstracts away the most complex elements of RAG—enabling faster prototyping, reduced infrastructure requirements, and a lower barrier to entry for context-aware AI systems.

Combined with open-source publishing tools like AiToEarn, enterprises and creators can bridge raw AI outputs and monetized multi-platform content, maximizing both technical efficiency and market impact.

---

Original source:

https://venturebeat.com/ai/why-googles-file-search-could-displace-diy-rag-stacks-in-the-enterprise