With Less Than 1% of OpenAI’s Budget, Kimi’s New Model Surpasses GPT-5

Open Source vs. Closed Source Models: The Real Showdown

Overview

After a four-month hiatus, Kimi has released the reasoning version of its flagship open-source model K2, named K2 Thinking.

It is the most powerful open-source thinking model Kimi has ever built, equipped with:

- 1 trillion parameters

- Mixture of Experts (MoE) architecture

- 32B active parameters

- Native INT4 quantization

- 256k context window

- Strong compatibility with Chinese domestic GPUs

K2 Thinking represents a major leap forward in AI model development.
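To put the spec list in perspective, here is a rough back-of-the-envelope sketch. It assumes, as a simplification, that every parameter is stored at the stated bit width, and it ignores quantization scales, metadata, and any layers kept at higher precision. The helper names are illustrative only.

```python
# Back-of-the-envelope figures derived from the spec list above.
TOTAL_PARAMS = 1_000_000_000_000   # 1 trillion total parameters
ACTIVE_PARAMS = 32_000_000_000     # 32B parameters active per token (MoE)


def weight_bytes(num_params: int, bits_per_param: int) -> float:
    """Raw weight storage in bytes, ignoring scales and other metadata."""
    return num_params * bits_per_param / 8


def to_gb(num_bytes: float) -> float:
    """Convert bytes to decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_bytes / 1e9


# Share of the model that participates in each forward pass.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

# Assumption: all weights stored at the same precision.
int4_gb = to_gb(weight_bytes(TOTAL_PARAMS, 4))
bf16_gb = to_gb(weight_bytes(TOTAL_PARAMS, 16))

print(f"Active share per token: {active_fraction:.1%}")
print(f"Weights at INT4:   ~{int4_gb:,.0f} GB")
print(f"Weights at 16-bit: ~{bf16_gb:,.0f} GB")
```

Under these assumptions the weights come to roughly 500 GB at INT4 versus about 2,000 GB at 16 bits, with 3.2% of parameters active per token. Real deployments will differ, since not every tensor is necessarily quantized.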

---

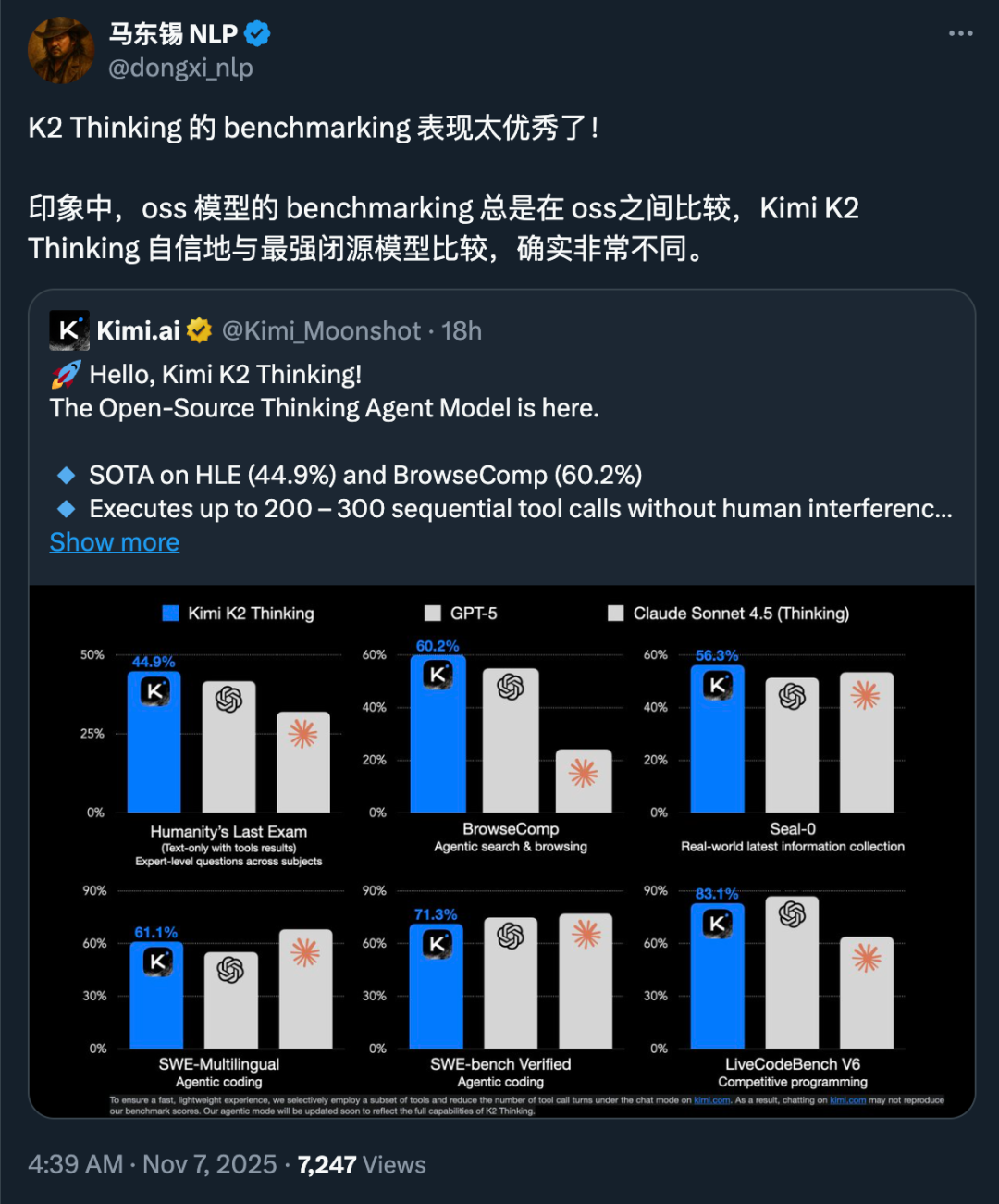

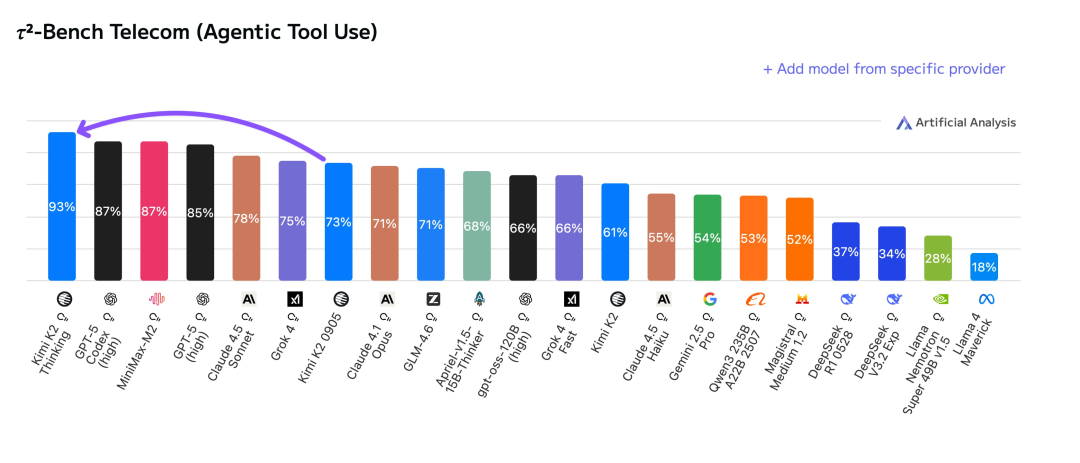

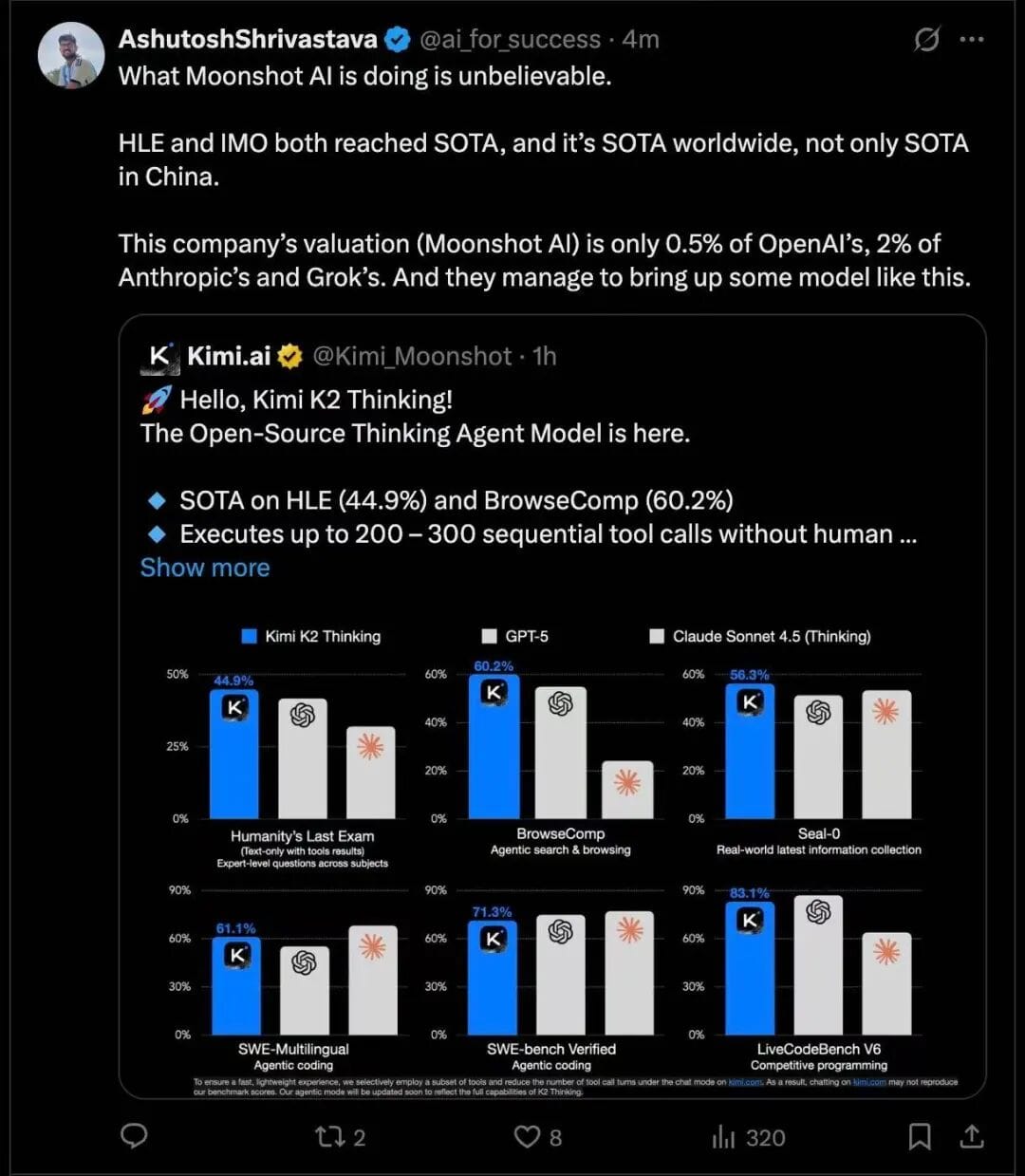

Benchmark Performance

According to official benchmarks, K2 Thinking achieved state-of-the-art (SOTA) results on Humanity’s Last Exam (HLE), leading the open-source field and also surpassing comparable closed-source models.

Compared to the original K2, this new model:

- Requires no human intervention

- Can autonomously execute up to 300 rounds of tool invocations

- Supports multi-step reasoning

- Solves significantly more complex problems

This shift from "Model as Agent" to "Model as Thinking Agent" reflects Kimi’s strategic focus on innovation over sheer resource scale — an effort to rival Western AI giants despite limited resources.

---


1 — K2 Thinking:

SOTA Performance on Humanity’s Last Exam

As Yao Shunyu wrote in his essay "The Second Half":

> “In AI’s second half — beginning now — focus will shift from solving problems to defining them. Evaluation will matter more than training.”

Why Evaluation Matters

With traditional benchmarks like MMLU and GPQA no longer sufficient to separate top models, Humanity’s Last Exam (HLE) emerged in 2025 to measure next-generation AI.

HLE facts:

- 2,500 advanced academic challenges

- 100+ disciplines

- Contributions from nearly 1,000 experts in 50+ countries

- Created by the Center for AI Safety and Scale AI

- Officially released March 4, 2025

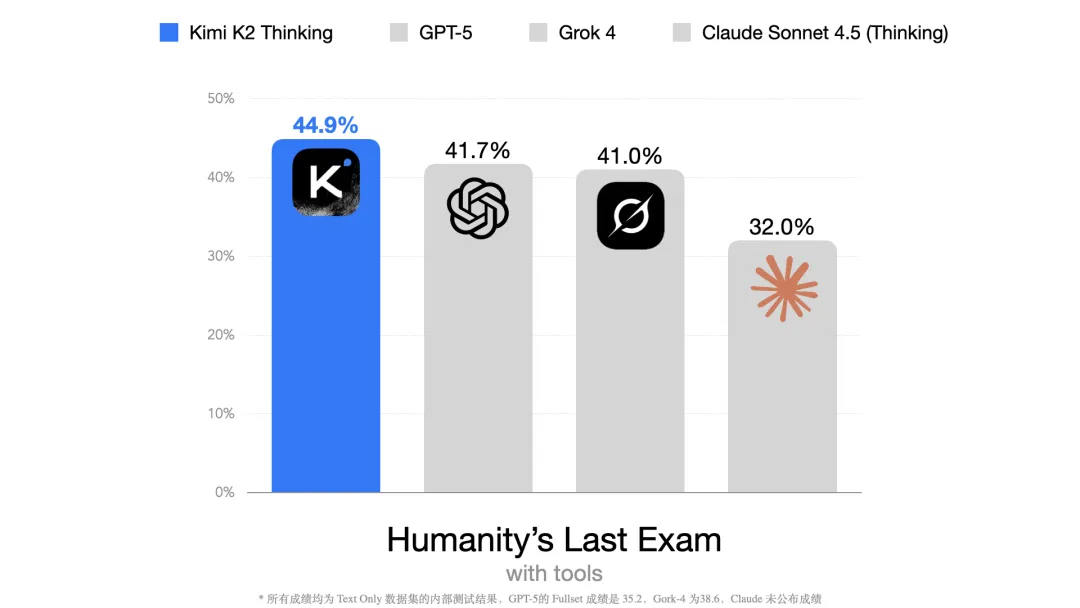

K2 Thinking’s HLE Score

Under uniform conditions (tools allowed: search engines, Python, web browsing):

- Text-Only HLE dataset score: 44.9% — SOTA result

When HLE launched, scores below 20% were common; today, top models exceed 40%. Even as returns from pure scaling appear to diminish, open-source models are now matching or outperforming closed-source competitors.

---

Impact on the AI Ecosystem

This convergence of performance fosters:

- Greater competitiveness

- More collaboration

- A stronger open-source community

Platforms like AiToEarn help creators deploy advanced models to generate and monetize content across major platforms, complete with analytics and AI model rankings.

Independent reviewers (e.g., Guizang’s AI Toolbox, Cyber Zen Mind) report:

- Significant coding capability boost

- Noticeably improved problem-solving skills

---

2 — From “Brain in a Vat” to Agent Intelligence

Four months ago, at K2's original release, many asked: Why isn’t this a reasoning model?

Amid the world’s excitement over DeepSeek R1, Kimi’s priority was Agents — specifically models with more effective tool calling.

Two Model Paradigms (Yang Zhilin)

Yang explained:

- Reasoning-focused paradigm
  - Example: o1 models
  - Relies on repeated attempt + reflection cycles
  - Still an isolated “brain in a vat”

- Agentic paradigm / Multi-turn Agent Reinforcement Learning
  - Interacts extensively with external environment
  - Focused on multi-turn tool usage
  - Supports test-time scaling

Kimi chose interaction first, reflection later — evolving into K2 Thinking.

Key Advantages

- Multi-turn: Multiple iterative steps during inference

- Tool integration: Connects internal reasoning to the real world

Result:

K2 Thinking can autonomously complete up to 300 rounds of tool calls and reasoning.

In OpenAI’s L1–L5 tiers, L3 Agent intelligence may now be within reach.
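The multi-turn pattern described above can be sketched as a simple loop: the model either requests a tool or gives a final answer, and the loop stops at a fixed budget. This is a minimal illustration, not Kimi's implementation. `stub_model`, `calculator`, the message shape, and the `tool_call` key are all hypothetical stand-ins; only the 300-round ceiling comes from the article.

```python
from typing import Callable

MAX_TOOL_ROUNDS = 300  # ceiling cited in the article


def calculator(expression: str) -> str:
    """Toy tool (hypothetical): handles only 'a+b' integer additions."""
    left, right = expression.split("+")
    return str(int(left) + int(right))


TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator}


def stub_model(messages: list[dict]) -> dict:
    """Hypothetical stand-in for a model call: one tool request, then an answer."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"role": "assistant",
                "tool_call": {"name": "calculator", "arguments": "2+3"}}
    return {"role": "assistant",
            "content": f"The answer is {tool_results[-1]['content']}."}


def run_agent(question: str,
              model: Callable[[list[dict]], dict] = stub_model,
              max_rounds: int = MAX_TOOL_ROUNDS) -> tuple[str, int]:
    """Alternate model turns and tool executions until a final answer appears.

    Returns the answer and the number of tool rounds that were used.
    """
    messages = [{"role": "user", "content": question}]
    for rounds_used in range(max_rounds):
        reply = model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:                      # no tool requested: final answer
            return reply["content"], rounds_used
        result = TOOLS[call["name"]](call["arguments"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("tool-call budget exhausted without a final answer")


answer, rounds = run_agent("What is 2+3?")
print(answer, f"(tool rounds used: {rounds})")
```

The explicit budget matters in practice: without it, a model that keeps requesting tools would never terminate.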

---

Technical Notes

K2 Thinking’s API requires the client to send the `reasoning_content` field from earlier assistant turns back in subsequent requests, which keeps the reasoning continuous across multi-step tool usage. This is similar to Claude’s “extended thinking” method.

Currently, GPT and Gemini models do not support this feature.
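The `reasoning_content` requirement mentioned above can be illustrated with plain message handling. The sketch below assumes an OpenAI-style chat message list; apart from `reasoning_content`, which the article names, the field names (`role`, `content`, `tool_calls`) and both helper functions are assumptions for illustration, and no real API is called.

```python
def append_assistant_turn(history: list[dict], reply: dict) -> list[dict]:
    """Return a new history with the assistant reply appended.

    The key point: reasoning_content is copied along with the tool calls,
    instead of being dropped before the next request.
    """
    turn = {"role": "assistant", "content": reply.get("content", "")}
    for key in ("reasoning_content", "tool_calls"):
        if key in reply:
            turn[key] = reply[key]
    return history + [turn]


def turns_missing_reasoning(history: list[dict]) -> list[int]:
    """Indices of assistant tool-call turns that lost their reasoning_content."""
    return [
        index for index, message in enumerate(history)
        if message["role"] == "assistant"
        and message.get("tool_calls")
        and not message.get("reasoning_content")
    ]


# Hypothetical reply, shaped the way the sketch assumes the API returns it.
reply = {
    "content": "",
    "reasoning_content": "I should look up the population before answering.",
    "tool_calls": [{"name": "search", "arguments": "population of Lyon"}],
}

history = [{"role": "user", "content": "How many people live in Lyon?"}]
history = append_assistant_turn(history, reply)

# A client that strips the reasoning would be flagged by the check.
stripped = [history[0], {k: v for k, v in history[1].items()
                         if k != "reasoning_content"}]

print(turns_missing_reasoning(history))
print(turns_missing_reasoning(stripped))
```

A check like `turns_missing_reasoning` is one way to catch client code that silently discards the reasoning between tool rounds.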

---

3 — Resource Challenges and Market Context

After the K2 Thinking release, a chart comparing AI company valuations circulated on X/Twitter.

Despite SOTA performance, Moonshot AI (K2’s creator) is valued at:

- About 0.5% of OpenAI’s valuation

- ~2% of Anthropic’s or xAI’s (the maker of Grok)

---

Comparative Data

| Company | Valuation | GPUs & Resources | Training Cost |
|----------------|------------------|------------------------------------|---------------|
| Kimi | ~$3.3B USD | ~200 employees, limited resources | ~$4.6M USD |
| OpenAI | $500B USD | Massive resource pool | N/A |
| Anthropic | $183B USD | Series F, $13B raise | N/A |
| Grok (xAI) | $200B USD | 200,000 H100 GPUs, 1,200+ staff | ~$49M USD |
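The valuation ratios can be checked directly from the table. The short calculation below uses only the valuation column as given in the article; the variable and function names are illustrative.

```python
# Valuations in billions of USD, taken from the table above.
VALUATIONS_USD_BN = {
    "Kimi": 3.3,
    "OpenAI": 500.0,
    "Anthropic": 183.0,
    "Grok (xAI)": 200.0,
}


def percent_of(small: float, large: float) -> float:
    """Express `small` as a percentage of `large`."""
    return small / large * 100


kimi = VALUATIONS_USD_BN["Kimi"]
shares = {
    company: percent_of(kimi, valuation)
    for company, valuation in VALUATIONS_USD_BN.items()
    if company != "Kimi"
}

for company, share in shares.items():
    print(f"Kimi is valued at {share:.2f}% of {company}")
```

By these figures Kimi's valuation is about 0.66% of OpenAI's, slightly above the roughly 0.5% cited earlier but still under 1%, and about 1.8% and 1.65% of Anthropic's and xAI's, in line with the "~2%" estimate.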

Key takeaway:

Chinese AI startups face GPU scarcity, limited funding, higher costs, and fewer chances for trial and error. Yet K2 Thinking reportedly outperforms GPT‑5 and Grok 4 on benchmarks such as HLE, from a company valued at less than 1% of OpenAI.

---

As NVIDIA CEO Jensen Huang suggested to the Financial Times, China may be closing the AI gap with the U.S.

---

How AiToEarn Fits In

AiToEarn enables:

- Cross-platform publishing (Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X/Twitter)

- Revenue tracking

- Model ranking (GitHub repo)

Benefits:

- Streamlined content generation → distribution → analytics

- Faster go-to-market cycles

- Consistent cross-channel branding

- Higher ROI

---

Conclusion:

In today’s AI race, precise execution and efficient resource use matter more than sheer scale. Open-source breakthroughs like K2 Thinking, combined with monetization platforms such as AiToEarn, could empower smaller players to challenge trillion-dollar incumbents — creating a more balanced and dynamic AI industry.