Z Potentials | Interview with TestSprite Founder, Former AWS & Google Engineer, Creator of a Testing Agent Used by 40,000 Developers Worldwide


TestSprite has already attracted 40,000 global developers and secured a total of $8.1M in funding from top North American venture funds. Founded by a team with Amazon and Google backgrounds, it operates on a dual-engine approach — Coding Agent × Testing Agent.

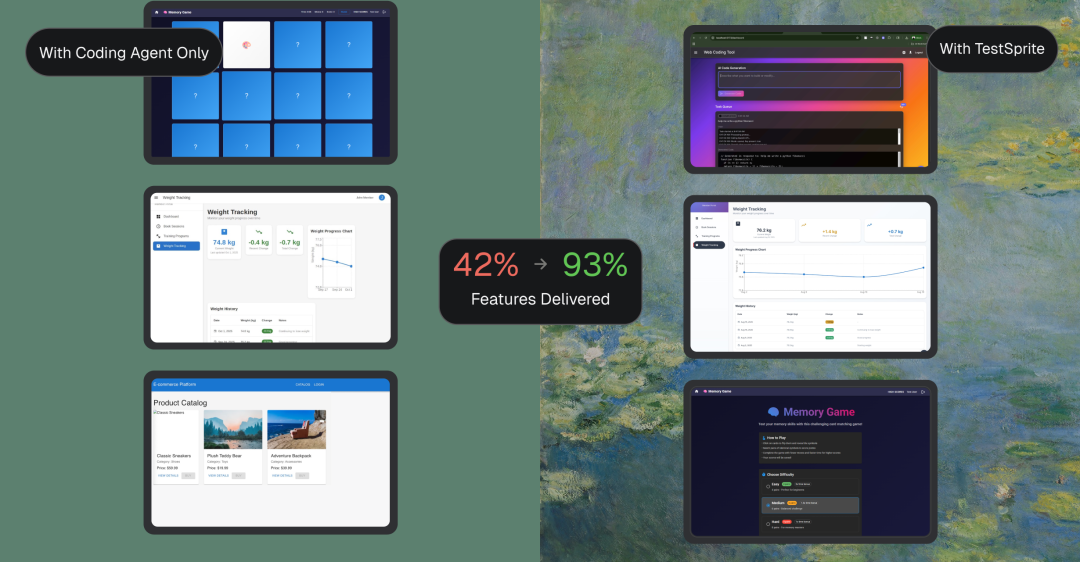

In the past two years, coding has changed dramatically. With tools like GitHub Copilot, Cursor, and Devin, engineers have grown accustomed to “typing a prompt and watching thousands of lines of code appear.” Producing code has become easier than ever. Yet many quickly realized that the real bottleneck to release is no longer “can we write it,” but “are we confident enough to deploy it to production.” As code volume grows exponentially, validation, regression, and extreme scenario coverage have been compressed to the limit — making testing the new hard bottleneck in the AI era.

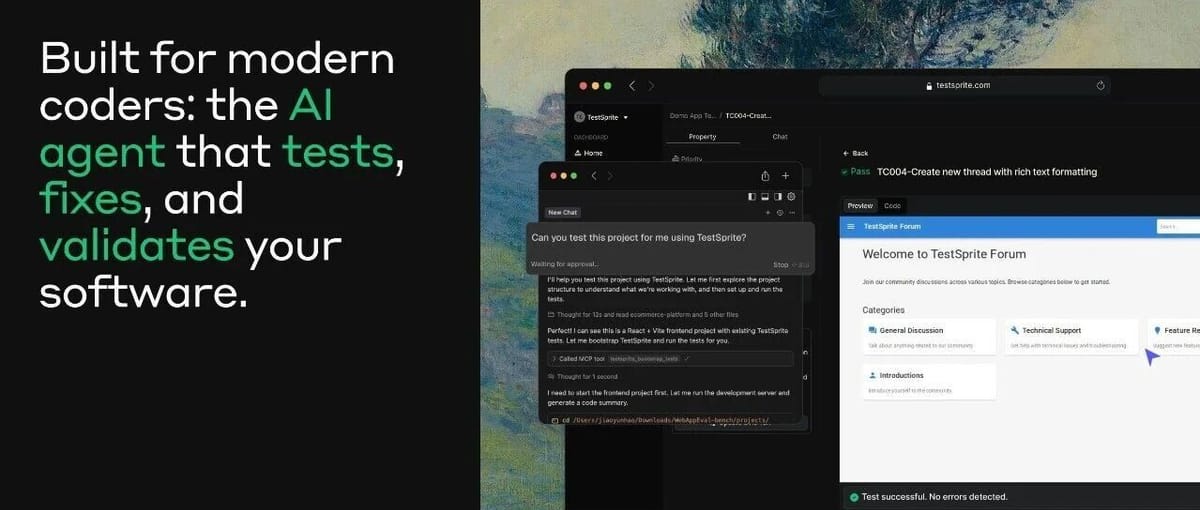

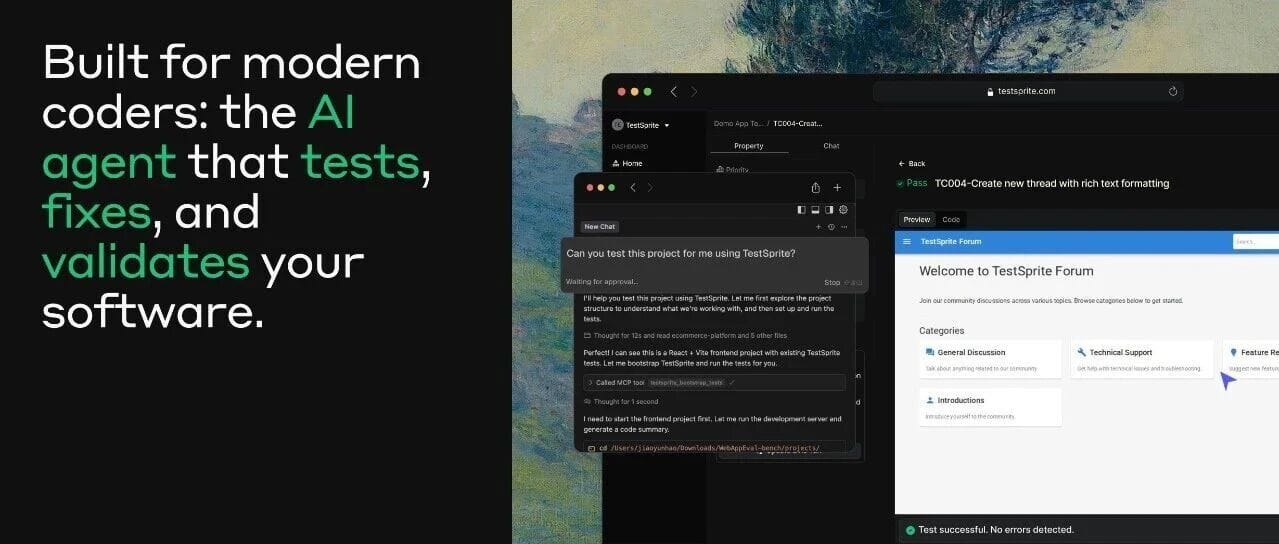

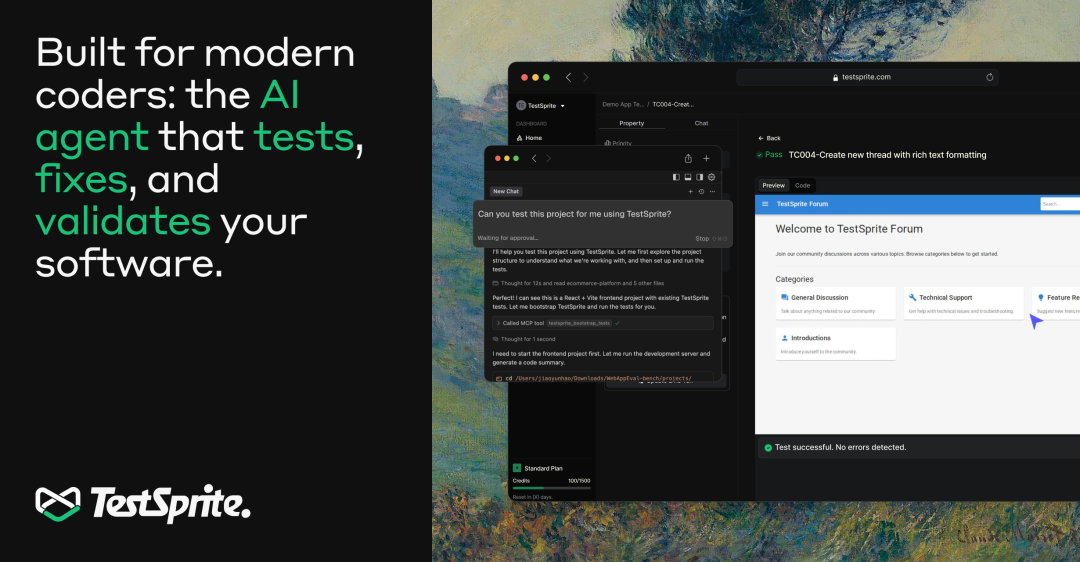

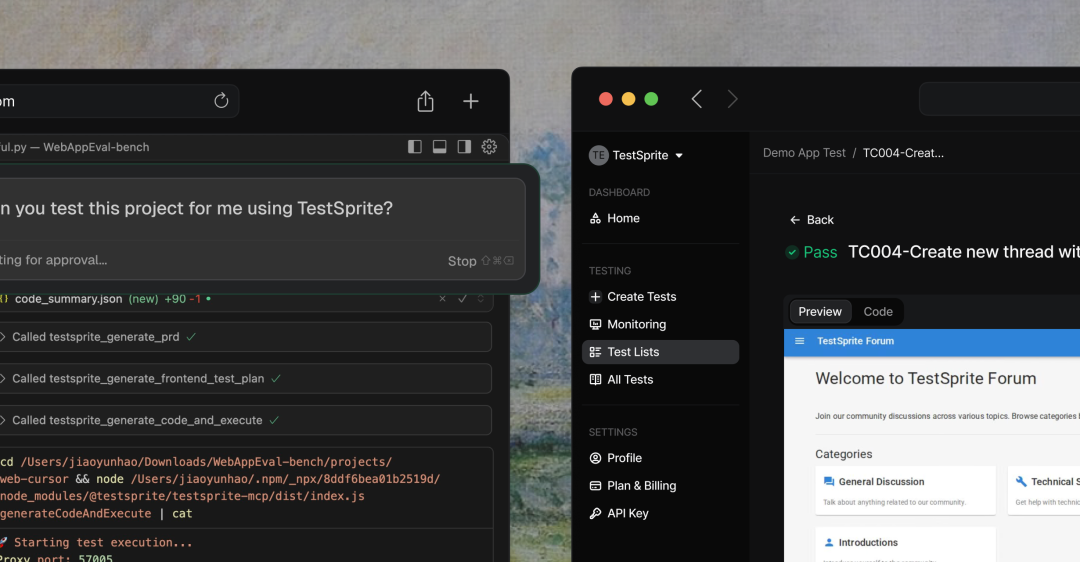

TestSprite aims squarely at this widening gap: making AI not just responsible for writing code, but for reviewing it as well. It elevates testing from “a couple of quick clicks before leaving work” to “automated infrastructure spanning the entire development lifecycle.” You can feed it a link and it will automatically run your live product end-to-end, or integrate deeply via MCP into AI IDEs like Cursor and Trae so that the Testing Agent and Coding Agent spar behind the scenes — automatically generating test plans, cases, code, reports, and auto-healing adjustments. This makes validation a truly orchestratable, reusable core capability.

Behind the product is a founding duo who are both typical and atypical at once. CEO Jiao Yunhao went from competitive programming in Hangzhou to a top high school, then Zhejiang University’s Chu Kochen Honors College. As an undergraduate he conducted AI research in Professor Wu Fei’s lab, went on exchange to the University of Michigan, published NLP papers in Professor Mei Qiaozhu’s lab, earned his master’s in computer science at Yale, and finally joined Amazon AWS CloudFormation. There, on the frontlines of global cloud infrastructure, he worked on testing frameworks and development, experiencing firsthand how “a single uncovered line of code” could impact customers worldwide, embedding a deep awareness of software quality in his DNA.

CTO Li Rui is a cross-disciplinary talent: four degrees in six years starting with Optical Engineering and Industrial Design at Zhejiang University, concluding in Computer and Data Science at UPenn. At Google Cloud, he worked on vulnerability detection and automated patching, focusing on system stability from the security perspective — also spending years at the heart of efforts to “minimize production incidents.”

Thanks to this accumulated expertise, TestSprite has been embedded in engineers’ workflows since day one: one side offers a web testing entry point where you simply paste a URL to start, the other offers an MCP Server deeply integrated with tools like Cursor and Trae, ensuring every code change triggers an agent-driven loop of “generate → validate → fix.”

On the client side, TestSprite serves both multi-million-dollar annual revenue medical suppliers like Princeton Pharmatech (targeting rare disease patients) and startup founders who build their first product versions using Lovable + TestSprite + Cursor. It also reaches tens of thousands of Vibe Coders, product managers, and independent developers worldwide — helping projects from small personal builds to enterprise-scale applications evolve from “it runs” to “it runs reliably.”

What’s interesting is that the company hasn’t positioned itself as an expensive QA system only for large corporations. Instead, it has deliberately pursued a path favored by Gen Z developers: pricing subscriptions at the same level as Cursor, so individuals working on side projects and small startups with only one or two engineers can treat testing as infrastructure, not as a “luxury for when we have money.”

This differentiator helped them quickly close a $6.7M seed round led by Bellevue, WA–based Trilogy Equity Partners, with participation from Techstars, Jinqiu Capital, MiraclePlus, Hat-trick Capital, BV Baidu Ventures, and EdgeCase Capital Partners. To date, the company has raised approximately $8.1M in total funding.


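Because both founders were high-energy from start to finish and kept producing quotable lines, we could hardly bring ourselves to cut any part of the interview while editing it. What you are about to read is therefore an extra-long conversation of nearly 20,000 characters in the original Chinese. We invite you to read this oversized interview to the end and step inside the minds of a pair of early-stage Chinese founders in Silicon Valley.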

-

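The efficiency bottleneck of traditional testing can no longer keep up with the complexity of modern software. At its root, the problem in software quality assurance is not “a lack of tools” but “a structural shortage of human labor.” This field needs an intelligent system that can replace human effort and is not limited by time or energy.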

-

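Applying large models to traditional software testing will bring structural change... Seeing AI Agents that can proactively plan and execute tasks made me realize that SaaS products are going to be redefined.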

-

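But once I left big tech to start a company, everything was different. No one defines the problem for you, and no one tells you which paths exist. You first have to decide for yourself “what problem is most worth solving,” and then lead the team to solve it. My role changed from “the one who solves and executes problems” to “the one who raises and defines them.”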

-

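We want users not to have to care what the AI is doing behind the scenes, or to keep staring at the screen. Ideally it works like an autopilot, running quietly in the background. Users come back from walking the dog or a jog and find that every feature works. That is exactly the experience we want to deliver.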

-

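This is also where many AI coding application companies now compete: not just on whose model is stronger, but on who can offer a smoother interaction experience... What decides this is actually context engineering.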

-

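TestSprite is not just an automated testing tool but an enterprise-grade platform for managing, running, and maintaining tests. Our goal is to turn test automation from a “tool” into an “ecosystem” and to create a true closed loop of collaboration between developers and AI.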

-

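TestSprite hopes to help developers, entrepreneurs, students, and even complete beginners with no technical background build software better and more reliably.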

01 From competition prodigy to Yale and Penn, honed at AWS and Google, breaking out in the AI-native era

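ZP: Please start with your own backgrounds: your education, your research, and your time as engineers at big tech companies such as Amazon. What led you onto the “AI + software quality assurance” technical path?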

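CEO Jiao Yunhao: I’m from Hangzhou, and I grew up in an environment that took computer science education seriously. In middle school I joined a competitive programming class, which produced quite a few classmates who later did outstanding work in computing and AI, such as Guo Wenjing, now CEO of Pika, and the brilliant Chen Lijie, who later went to UC Berkeley. That experience gave me my first systematic exposure to programming and cemented my interest in going deeper into technology.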

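On the strength of my competition results, I was admitted without the entrance exam to a top high school, where I kept studying computer science. I then entered Zhejiang University’s computer science department and did AI research with Professor Wu Fei in the Chu Kochen Honors College. In 2015, when deep learning was just taking off, I began reading LeCun’s “Deep Learning” under his guidance and formally entered AI research.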

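As an undergraduate I went on exchange to the University of Michigan, Ann Arbor, where I did NLP research in Professor Mei Qiaozhu’s group and co-authored papers. It was the first time I produced AI research that actually affected a product experience, and it was then that I realized I would rather build “products that users actually use.”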

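After my bachelor’s degree, I went to Yale for a master’s in computer science to broaden my technical and entrepreneurial horizons. After graduating I joined the Amazon AWS CloudFormation team, where I built testing frameworks and developer toolchains. That work gave me a deep understanding of developers’ real pain points with cloud platforms, software quality assurance, and testing systems.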

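The real turning point that pushed us to start a company was the release of GPT-3.5 and GPT-4. Li Rui and I both quickly realized that applying large models to traditional software testing would bring structural change. We talked late into the night that day and decided to formally commit to TestSprite.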

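CTO Li Rui: Yunhao and I were undergraduate classmates at Zhejiang University. As an undergraduate I studied optical engineering and industrial design, then switched to computer and data science for graduate school at Penn. The path looks cross-disciplinary, but the core has always been “building systems and solving problems.”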

After graduation, I joined Google Cloud, where I was primarily responsible for vulnerability detection and automated patching. This work gave me deep insights into the pain points developers face in quality assurance, and it complemented Yunhao’s experience building testing frameworks at AWS. We both clearly saw that the efficiency bottleneck of traditional testing can no longer support the complexity of modern software.

When Cognition released Devin, I immediately saw that AI could increasingly function as a “primary actor” in the full software development process. The emergence of AI Agents that can proactively plan and execute tasks made me realize that the definition of SaaS products will be fundamentally reshaped.

This conclusion resonated strongly with our accumulated understanding of the developer ecosystem. We decided to start a company that uses AI to reinvent the software quality assurance process — transforming testing from “a labor-intensive mandatory step” into an intelligent, automated, and scalable quality system.

---

ZP: During your time at major tech companies, what were some memorable pain points in software testing/quality assurance? How did those experiences lead you to found TestSprite?

CEO Jiao Yunhao: There were definitely memorable moments. At Amazon AWS, I worked on the CloudFormation team, where on-call pressure was extremely high. Every week we received large volumes of tickets from users worldwide and had to respond and fix issues quickly. AWS’s testing framework was extremely comprehensive, covering unit tests, integration tests, frontend UI tests, and backend tests, and even a small feature might require maintaining hundreds of tests. Yet even in such a rigorous environment, issues still occurred frequently, and rollbacks became almost routine.

One incident in particular sticks in my mind. One night while I was on call, my phone kept alerting nonstop. Customers in multiple regions were reporting CloudFormation stack failures. Even though the issue wasn’t in my module, I had to immediately bring in the relevant engineers to investigate. Because the system was so complex, it was difficult to pinpoint the root cause quickly. In the end, we had to roll the entire system back to the previous version to stop the damage, and it took nearly a month to identify the source.

It turned out the root cause was a single line of code in an edge case that tests hadn’t covered. But that single line triggered a severe chain reaction: customer systems at companies like JPMorgan Chase and Disney were affected. For example, some of Disney’s roller coaster operating systems stopped entirely that day because the ticket scheduling system they relied on malfunctioned at the CloudFormation layer.

The financial loss was enormous; I don’t know the exact figure, but internally it was communicated as “at least in the millions of dollars.” That incident left a deep mark on me. I truly understood that in modern software systems, a single line of untested code can have global real-world consequences.

The deeper reason also became clear: even at a company like Amazon with a rigorously structured engineering system, human effort alone cannot fully prevent coverage gaps. Testing fundamentally relies on engineers’ time and energy — yet performance metrics for engineers usually focus on feature delivery, not testing quality. Over time, test coverage tends to drop and risks accumulate.

I also know the behavioral patterns of engineers — if writing two tests is enough to meet requirements, few are willing to spend a day writing twenty. Testing isn’t a promotion metric, but delivering features is. This means testing is inherently deprioritized, becoming “mandatory but never sufficient.”

These experiences led me to the realization that the core issue in software quality assurance is not a lack of tools, but a structural shortage of human resources. This field needs an intelligent system that can replace human labor and isn’t constrained by time or energy.

When GPT-3.5 and GPT-4 were released, I immediately realized that AI could fill this long-standing gap and fundamentally change how software testing is done. My AWS experience made me firmly committed to pursuing the “AI + software quality assurance” path when I saw the capabilities of large models.

---

ZP: As technical co-founders acting as both CEO and CTO, what was the biggest change moving from a researcher/engineer role to entrepreneur? Which skills did you find most important to develop?

CEO Jiao Yunhao: The biggest change is that I now deal with customers directly, understand their actual needs, and build tools the market truly wants, rather than working behind closed doors. We must understand why customers are willing to pay for a specific feature and what problem they truly want solved, rather than just building what we ourselves want to make. Through this process, our market and product skills grow naturally.


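Fortunately, my parents are tech entrepreneurs themselves. Both are Zhejiang University alumni, and my father also studied computer science. Growing up around them, I absorbed an intuitive feel for entrepreneurship, storytelling, and marketing. I never studied these systematically in school, but I later taught myself through many channels: YC (Y Combinator) tutorials, founder talks on Z Potentials, and founders sharing their experience on TikTok or Xiaohongshu. Resources like these let people like us, who have no formal business training, fill in the basic business skills an early-stage company needs.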

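Of course, that only supplied the theory. The real practice came when we joined two incubators at the same time: Techstars in the U.S. and MiraclePlus in China. I found that each new stage made different demands on me. At first there was a lot we didn’t know how to do, such as how to fundraise, how to pitch investors, how to present the product, and how to negotiate valuation, but all of it can be learned on the job. We found that as long as you dare to ask and dare to try, it is not as scary as you imagine. There are plenty of resources online, and if all else fails you can ask ChatGPT.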

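Of course, a startup runs at a completely different pace from a big North American tech company. Many things are not taught and have no template, so you have to figure them out yourself. We made quite a few mistakes over the past two years, in management, marketing, and product direction alike. Fortunately our team is small, our decision cycles are short, and we iterate fast, so many mistakes can be fixed on the spot. That is one of a startup’s big advantages. In a startup, trial and error is not just tolerated but encouraged, and that culture keeps us from getting anxious even under uncertainty. Even when we don’t know something, we can learn it and apply it immediately, fixing things as we go. Our mindset keeps getting steadier: we take things as they come, clearing one hurdle at a time, and the more we clear, the calmer we become.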

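I also want to single out Li Rui as a “learning expert,” someone with an exceptional ability to learn. He is something of a secret weapon for us. He earned four degrees in six years, and his speed of learning and comprehension are remarkable. He can get up to speed in a new field quickly, break it down, and apply it to product design.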

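CTO Li Rui: Yes, as I mentioned, I did learn a lot across different fields in a short time: optical engineering, industrial design, computer science, and data science. They look far apart and unrelated, but each discipline gave me a different way of thinking. What they have in common is that when I face a new problem, I can draw on several perspectives and skill sets to think it through and solve it.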

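What interests me is solving a specific problem with my own methods, but at a big company my role was more like an executor. The problems I solved every day were defined and designed by others. Within a given framework, your manager tells you the goal, the direction, and the available options, and you just pick the most suitable one and carry it out. You don’t need to think; you just need to get it done.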

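But once I left big tech to start a company, everything was different. No one defines the problem for you, and no one tells you which paths exist. You first have to decide for yourself “what problem is most worth solving,” and then lead the team to solve it. My role changed from “the one who solves and executes problems” to “the one who raises and defines them.” Now I have to ask: What goals do we want to reach? Which pain points must we solve? What do we need to prepare in product, technology, and resources to get there? Where are the team’s current capability gaps? How do I guide everyone to consensus? This is a fundamental shift in mindset.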

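Before, I only had to “do things right”; now I have to learn to “frame the right problem.” My biggest growth over the past two years has been going from a strong executor to someone who can set direction and build frameworks. I have also come to understand more deeply that leadership is not about giving orders but about “helping the team see the problem clearly, focus on the goal, and find a path.” That has been my biggest change as CTO in this venture.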

02 AI-generated code is now standard, and TestSprite turns “validation” into automated infrastructure

ZP: Let’s talk about the TestSprite product itself. Could you briefly introduce its main features?

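CEO Jiao Yunhao: TestSprite is actually a fairly simple tool. Our goal is for it to be like Cursor, something anyone can pick up easily. It is designed to be clean and easy to use, and at its core it has two trigger modes, which you can think of as two entry points.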

The first type is the web-based entry point.

If a user wants to test a product that is already released or live — for example, Alibaba or Amazon — they simply copy its URL, open our official website, register/log in, click "Start Testing", and paste the URL in. There’s basically no extra preparation needed.

If the user has application documentation, product manuals, or requirements documents, they can also upload these as supplementary materials to help our AI better understand the product. Beyond that, no other steps are required. The AI will then automatically analyze the website.

Our AI acts as a fully automated intelligent agent, operating as a real user would — interacting with the site and exploring the product.

For example, when testing Taobao, the AI will automatically click and browse various sections, analyze page structure, login modules, search functionality, recommendation systems, shopping cart, and more. It spends time progressively understanding the entire product. It will also read any textual materials or design mockups (such as Figma files) provided by the user. After assimilating all input, it generates a test plan, and based on this plan constructs multiple test cases (possibly dozens, in the case of complex websites even hundreds; for simpler sites, perhaps a dozen or so).

Each test case comes with corresponding code generated and executed, followed by a pass/fail analysis. For failures, the system highlights the cause, potential bugs, and recommendations for fixes. Finally, AI compiles all results into a complete test report outlining passed and failed items and suggested improvements — with clear repair paths for the user. The entire process typically takes 10–20 minutes. We often say: Users can "free their hands" — go to the restroom or grab a coffee — and return to find their test report ready. This is our first use scenario.
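The execute-and-report stage described above can be sketched in a few lines. This is a minimal illustration under our own assumptions, not TestSprite’s implementation. Each generated test case is modeled as a hypothetical `GeneratedCase` holding a callable that raises `AssertionError` on failure. The live site is replaced by an in-memory stand-in, and the AI’s exploration, planning, and case generation are left out entirely.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class GeneratedCase:
    """One generated test case: a name plus a check that raises AssertionError on failure."""
    name: str
    check: Callable[[], None]


@dataclass
class Report:
    passed: list[str] = field(default_factory=list)
    failed: dict[str, str] = field(default_factory=dict)  # case name -> failure reason

    def summary(self) -> str:
        total = len(self.passed) + len(self.failed)
        return f"{len(self.passed)}/{total} passed"


def run_cases(cases: list[GeneratedCase]) -> Report:
    """Execute every case and collect pass/fail results into a single report."""
    report = Report()
    for case in cases:
        try:
            case.check()
            report.passed.append(case.name)
        except AssertionError as exc:
            report.failed[case.name] = str(exc) or "assertion failed"
    return report


# Hypothetical in-memory stand-in for the site under test.
site = {
    "search": lambda q: ["phone case"] if q else [],
    "cart": [],
    "checkout_requires_login": False,  # a deliberate bug for the report to catch
}


def check_search() -> None:
    assert site["search"]("phone"), "search returned no results"


def check_cart_empty() -> None:
    assert site["cart"] == [], "a new session should start with an empty cart"


def check_checkout_login() -> None:
    assert site["checkout_requires_login"], "checkout is reachable without logging in"


report = run_cases([
    GeneratedCase("search", check_search),
    GeneratedCase("cart starts empty", check_cart_empty),
    GeneratedCase("checkout requires login", check_checkout_login),
])
print(report.summary())  # 2/3 passed
print(report.failed)
```

In a real system, each failure reason would also carry the suspected bug and a suggested fix, which is what lets the report point the user toward a concrete repair.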

---

The second entry point is for products that have not yet been released and are still in local development.

Many startups still at the testing stage have no live product yet, so we designed a different mechanism using MCP (Model Context Protocol) to achieve the same process.

In this case, our MCP can be installed right in the user’s Vibe Coding tool — such as Cursor or the domestically popular Trae. We are already available on the Trae MCP Marketplace; users just click "Enable" to start. After installing TestSprite MCP, only a few simple prompts are needed for the system to automatically produce a test plan, test cases, test code, execute them, and deliver the final analysis report.

Because this is embedded in the IDE, there’s an added advantage: direct code fixing. For example, if five tests fail, the AI reads the findings in the test report, feeds fix suggestions back into Cursor or Trae, and generates specific prompts so the IDE can automatically produce targeted fixes and optimize the code. This means users not only complete testing but can also repair bugs automatically. Many testers find it magical: within half an hour, the software “fixes itself.”
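The test-then-fix loop described above can be sketched as follows. Everything here is a hypothetical stand-in, not TestSprite’s MCP interface. `run_tests` plays the testing agent by executing a candidate implementation against two checks. `propose_fix` plays the coding agent with a hard-coded patch; a real setup would pass the failure report to the model inside Cursor or Trae. The `max_rounds` cap is our own assumption, added so that a fixer that never converges cannot loop forever.

```python
from typing import Callable


def fix_loop(code: str,
             run_tests: Callable[[str], list[str]],
             propose_fix: Callable[[str, list[str]], str],
             max_rounds: int = 5) -> tuple[str, list[str], int]:
    """Alternate testing and fixing until no failures remain or the round budget runs out."""
    failures = run_tests(code)
    rounds = 0
    while failures and rounds < max_rounds:
        code = propose_fix(code, failures)  # feed the failure report back to the "coding agent"
        failures = run_tests(code)
        rounds += 1
    return code, failures, rounds


def run_tests(code: str) -> list[str]:
    """Hypothetical testing agent: load the candidate code and return failure messages."""
    namespace: dict = {}
    exec(code, namespace)
    add = namespace.get("add")
    if add is None:
        # Guards against the "fix" that simply deletes the feature.
        return ["add() is missing: deleting the feature is not a fix"]
    failures = []
    if add(2, 3) != 5:
        failures.append("add(2, 3) should be 5")
    if add(-1, 1) != 0:
        failures.append("add(-1, 1) should be 0")
    return failures


def propose_fix(code: str, failures: list[str]) -> str:
    """Hypothetical coding agent: a canned patch standing in for an LLM-generated fix."""
    return code.replace("a - b", "a + b")


buggy = "def add(a, b):\n    return a - b\n"
fixed, remaining, rounds = fix_loop(buggy, run_tests, propose_fix)
print(rounds, remaining)  # 1 []
```

The design point is that the loop’s stopping condition is owned by the tests, not by the fixer. A fixer that deletes the buggy feature still fails, because the test checks that the feature exists.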

---

We aim for users not to worry about what the AI is doing behind the scenes, nor to stare at the screen throughout.

The ideal state is like Auto-Pilot — running quietly in the background — so that when a user comes back from walking the dog or jogging, they find everything working perfectly. That’s exactly the experience we want to deliver.

Overall, the biggest difference between TestSprite and most testing tools in the market is that we require no manual test code writing and no complex configuration. Simply upload documentation, install our plugin, or register and enter a link on our web portal — and the entire testing process is completed. For enterprise or startup clients needing ongoing test management or scheduled automated runs, we also provide easy configuration and auto-healing functionality.

---

ZP: With AI code generation tools increasingly adopted by engineers, "verification" has become the new bottleneck. Can you share TestSprite's perspective on this trend?

CEO Jiao Yunhao: Absolutely. Over the past two-plus years, we’ve witnessed dramatic changes in this market. When we started, Cursor wasn’t popular yet — GitHub Copilot was the hot topic. Cursor’s founders themselves were deeply inspired by Copilot.

At that time, we were already building TestSprite, and selling it meant first convincing clients that using AI tools for coding was inevitable. But many customers strongly resisted — they thought AI-generated code was unsafe, unreliable, and too "dumb" to trust, certainly incapable of replacing human work.

It was very hard to build consensus that human coding would gradually be replaced by AI, at least in part. Users simply wouldn’t accept it. But by 2024 the situation had completely changed. Now coding tools and agents like Cursor, Windsurf, and Devin have become very popular. These tools did an excellent job of “user mindset education” for us. Without them, selling TestSprite would have been much harder.


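We sit downstream: engineers finish writing code and come to us for testing. The coding tools upstream of us have proven one fact to developers: automation has become an unavoidable reality for every company. In small and large companies alike, automation keeps reaching deeper into every step. Coding automation is led by Cursor and GitHub Copilot, marketing automation is driven by AI marketing tools, and the industry as a whole has become much more open to it.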

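At the same time, engineers’ daily work has changed a lot. In the past, an engineer’s main task on a development team was writing code. The boss or product manager handed over requirements, and writing the code itself took a long time, perhaps a week. Racing against deadlines, engineers often passed testing to the QA team and moved straight on to the next phase of development so the project wouldn’t slip.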

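Now Cursor has taken over “writing code” for engineers, and so has GitHub Copilot. As a result, the engineer’s role has fundamentally changed. When we talk with users and peers, many people now ask: so what do engineers do all day? We’ve found they aren’t working any less; many are still working overtime. Some joke that they are slacking off or pretending to be busy: if Cursor can write thousands of lines of code in ten minutes, why are they still grinding away at their desks? The truth is that the way engineers work has changed. The typical workflow now is: the boss or product manager states a requirement → the engineer generates code with Cursor or another tool → and then immediately moves into the validation phase.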

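What people haven’t realized yet is that this “validation process” is essentially testing. Most of the “manual testing” engineers do today consists of letting Cursor finish the code, running the program, and eyeballing whether features work: clicking around, playing with it, and entering data to see how the program reacts. That is no different from traditional manual testing, so many programmers today are already doing what test engineers used to do. When they find a problem, they either describe it in natural language (Chinese or English) and prompt Cursor to change the code, or they edit the draft code from vibe coding themselves until the problem is solved.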

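Many people describe this process as hand-to-hand combat with Cursor; in English it is called “wrestling with Cursor.” There’s even a joke online: a programmer asked Cursor to fix a bug, and Cursor deleted the entire feature, reasoning that once the buggy code was gone there was “no bug.” Obviously that doesn’t work, and the programmer then had to get it to add the feature back.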

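Programmers now spend a lot of time going back and forth with AI tools, prompting and adjusting on the same issue: “You didn’t fix it, fix it again”; “Still wrong, try again.” It is very time-consuming and exhausting. Our original motivation for building MCP was to let AI spar with AI, letting one machine push and pressure another instead of having a frustrated human in the middle.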

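There’s another practical problem: today’s coding agents keep getting more capable and generate code extremely fast. An AI can produce tens of thousands of lines of code in ten minutes, and by the final step of human code review, there is simply too much to read. That is why code review tools such as CodeRabbit have become so popular in recent years: no one can manually review that volume of AI-generated code.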

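Another key point is that coding agents are still not very accurate. Every major company is competing hard, yet on public SWE benchmark datasets even the best models, such as Claude 4.5, reach only about 70% accuracy, and Claude 4 about 67%. That means roughly 30% of tasks still end in incorrect code. For engineers this is almost unacceptable, because a single bug can make an entire piece of code worthless. So the engineer’s job has become “finding that 30% of errors” and either fixing them by hand or regenerating the code.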

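Engineers are now also splitting into camps. Some stick to “old-school programming” (writing code by hand), arguing that it is no slower than AI. Others embrace vibe coding, believing that writing code in natural language is more efficient. The American YouTuber MrBeast even ran an experiment in which a group of programmers were not allowed to use Cursor, and many of them turned out to be unable to write code at all. It shows how deeply AI coding tools are now embedded in how developers work, and these are some of the changes happening across the industry.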

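ZP: Could the CTO share what moats TestSprite has in “AI + test automation”? For example, how does its Model Context Protocol (MCP) Server work with AI coding agents to provide real-time validation and feedback loops?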

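CTO Li Rui: Technically, our solution shares some design logic with Cursor. You can write any code with Cursor because it has two key commands, Command + K and Command + L. Command + L generates code from a broad description. If the code has problems, Command + K lets the user select a specific snippet and modify it. This interactive loop lets users refine the code step by step until it matches what they expect.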

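We run a similar loop in the testing phase. TestSprite first generates a test plan and test code automatically. If users find some parts unsatisfactory or in need of adjustment, we provide a “modify & refine” mechanism that lets them redefine goals or conditions, and the AI regenerates the test content based on that feedback. Through this iterative loop, users can ultimately build any testing workflow they want on TestSprite. We adapt automatically, whether the tests target a specific feature, a specific platform, or a complex special scenario.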

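The core value of this mechanism is flexibility combined with consistency. Whatever type of product users are testing, we keep the precision, structure, and output of the tests stable while meeting customer needs quickly. At a deeper level, this rests on some of our “moat” technical capabilities, such as the accuracy of generated test code, execution speed, and the user experience of the whole AI application-layer workflow.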

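This is also where many AI coding application companies now compete: not just on whose model is stronger, but on who can deliver a smoother interaction experience. We didn’t simply take the fine-tuning route. Fine-tuning mainly improves a model’s knowledge or preferences in a particular domain, but it doesn’t determine “how to get the best results” from the model in a specific scenario.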

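What determines that is context engineering, which is different from prompt engineering. Prompt engineering is about “how should I phrase it,” while context engineering is about “what should I feed the model.” For example, if you ask someone to help solve a problem, you have to tell them the task background, the tools available, the goal, the criteria for validation, and so on. It’s the same with AI: we need to define the right context so the model starts work on the right premises.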
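The four ingredients of context named here (background, tools, goal, validation criteria) can be made concrete as a structured context assembled before any model call. This is a minimal sketch under our own assumptions. The `TestingContext` class, its section headings, and the tool names are hypothetical. The rendered text would be passed to whatever model API a team actually uses, and the interview does not describe how TestSprite builds its context.

```python
from dataclasses import dataclass


@dataclass
class TestingContext:
    """What the model is given, as opposed to how the request is phrased."""
    background: str
    tools: list[str]
    goal: str
    acceptance_criteria: list[str]

    def render(self) -> str:
        """Serialize the context into a plain-text block to prepend to the model input."""
        sections = [
            "## Background", self.background,
            "## Available tools", *(f"- {tool}" for tool in self.tools),
            "## Goal", self.goal,
            "## How success is verified", *(f"- {c}" for c in self.acceptance_criteria),
        ]
        return "\n".join(sections)


ctx = TestingContext(
    background="Online shop; users must log in before checkout.",
    tools=["open_url(url)", "click(selector)", "read_text(selector)"],  # hypothetical tools
    goal="Write end-to-end tests for search, cart, and checkout.",
    acceptance_criteria=[
        "Each test states the user action and the expected page state",
        "Checkout without login must be rejected",
    ],
)
print(ctx.render())
```

Keeping the context as data rather than a hand-written prompt string makes each ingredient individually testable. It also makes it obvious when one of them, such as the validation criteria, is missing.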

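Much of TestSprite’s quality improvement comes from this accumulated context-engineering experience. Beyond the generation logic above, we also have a range of technical advantages in enterprise-level features, such as auto-healing (self-healing tests).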

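This is very useful in large systems. Suppose a developer adds feature A, which under the hood calls a component B you didn’t expect, and B’s tests start failing. On inspection, B isn’t actually broken; only the way it is called or its interface has changed. For example, a button may have moved, or a response path may have been adjusted. In such cases, our system doesn’t simply report “test failed.” It automatically identifies the type of change and adjusts the test logic so that the validation stays meaningful.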
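One narrow slice of this behavior can be sketched as a locator fallback: an element’s identifier changes, but the element itself is still present. This illustration rests on stated assumptions and is not TestSprite’s algorithm. Pages are modeled as hypothetical lists of attribute dicts, and a stored locator records both an `id` and the visible `text`. A match found only through the text fallback is treated as a UI change, so the locator is updated rather than the test failed. Real self-healing also has to cope with layout and response-path changes, which this sketch does not attempt.

```python
from typing import Optional

Element = dict[str, str]


def locate(page: list[Element], locator: Element) -> tuple[Optional[Element], bool]:
    """Return (element, healed): try the stored id first, then fall back to the visible text."""
    for element in page:
        if element.get("id") == locator["id"]:
            return element, False
    for element in page:
        if element.get("text") == locator["text"]:
            return element, True
    return None, False


def click(page: list[Element], locator: Element) -> bool:
    """Simulate a click step; returns True when the locator had to be healed."""
    element, healed = locate(page, locator)
    if element is None:
        # Nothing matches by id or by text: treat it as a genuine failure.
        raise AssertionError(f"no element matches {locator}")
    if healed:
        locator["id"] = element["id"]  # persist the new id so later runs match directly
    return healed


locator = {"id": "checkout-btn", "text": "Checkout"}
old_page = [{"id": "checkout-btn", "text": "Checkout"}]
new_page = [{"id": "nav-search", "text": "Search"}, {"id": "cart-checkout", "text": "Checkout"}]

print(click(old_page, locator))  # False: found by id
print(click(new_page, locator))  # True: id changed, healed via text
print(locator["id"])             # cart-checkout
```

The important distinction is the last branch. When neither signal matches, the step still fails, so healing never hides a button that has genuinely disappeared.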

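That is what we call the auto-healing process. Beyond self-healing, we also provide several test-management capabilities: version management and reuse of generated tests; detection and regression checking against historical tests; integration with third-party platforms such as CI/CD systems or internal enterprise tools; and, most central of all, import, export, and unified management of test code.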
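The regression part of test management compares a new run against stored results from earlier runs, which reduces to a small set comparison. This is a minimal sketch, assuming each run is stored as a hypothetical mapping from test name to pass/fail. The interview does not describe how TestSprite actually stores or compares test history.

```python
def compare_runs(baseline: dict[str, bool], current: dict[str, bool]) -> dict[str, list[str]]:
    """Classify results in `current` against a stored `baseline` run (True means passed)."""
    regressions = sorted(n for n, ok in current.items() if not ok and baseline.get(n) is True)
    still_failing = sorted(n for n, ok in current.items() if not ok and baseline.get(n) is False)
    new_failures = sorted(n for n, ok in current.items() if not ok and n not in baseline)
    fixed = sorted(n for n, ok in current.items() if ok and baseline.get(n) is False)
    return {
        "regressions": regressions,      # passed before, fail now: the alarm case
        "still_failing": still_failing,  # known failures that were not fixed
        "new_failures": new_failures,    # failing tests with no history yet
        "fixed": fixed,                  # failed before, pass now
    }


baseline = {"login": True, "search": True, "cart": False}
current = {"login": True, "search": False, "cart": True, "checkout": False}
print(compare_runs(baseline, current))
# {'regressions': ['search'], 'still_failing': [], 'new_failures': ['checkout'], 'fixed': ['cart']}
```

Separating regressions from failures that were already known is what keeps this kind of report actionable. Only the first bucket means a recent change broke something that used to work.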

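With these features, TestSprite is not just an automated testing tool but an enterprise-grade platform for managing, running, and maintaining tests. Our goal is to turn test automation from a “tool” into an “ecosystem” and to create a true closed loop of collaboration between developers and AI.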

03 From small websites to ten-million-dollar medical clients: a testing agent that carries products from idea to launch

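ZP: Could you share a typical case in which TestSprite helped a team or company significantly improve testing efficiency, shorten release cycles, and reduce defect rates?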

CEO Jiao Yunhao: No problem. One interesting real-life example comes from a fitness coach we met while working out. Let me add a bit of background — he’s actually an entrepreneur who runs his own personal training studio in the U.S., catering mainly to programmers and engineers from large tech companies, offering one-on-one fitness sessions. Both Li Rui and I have trained with him. He had no programming skills and hadn’t found any suitable software, so he was using the iPhone Notes app to track each client’s daily weight and training plans. Everything was logged manually — very basic.

He said back then he didn’t have a better tool, so manual entry was the only way. I suggested that he try TestSprite, or perhaps combine it with Vibe Coding tools like Trae and Cursor, to see if he could migrate the info from Notes to a simple website within a few days. That experiment turned out to be really interesting — Li Rui can share the details.

CTO Li Rui: He later came to us wanting to build an online booking system using AI coding tools. Despite his lack of technical background, he was curious and wanted to challenge himself. He used Lovable to generate an initial version of the interface, which looked decent, but he wasn’t sure it was fully functional.

This was meant to allow clients to book classes via the page, but he had no time or UX experience to confirm if it truly met the needs.

That’s when we suggested connecting the project to TestSprite for testing. He did so, and ran a round of tests with our MCP function. The results revealed several issues. For example: his site had an Admin Panel that should have been protected by a Login Protected Route restriction, but due to a logic misconfiguration, regular users could access it after two navigation steps. We identified two or three similar issues.
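A check that would catch this class of bug is to walk the app’s in-app links several steps deep as a regular user and assert that the admin page is never actually shown. The sketch below is hypothetical. The `ROUTES` table stands in for the coach’s site, and `guarded_on_entry_only` models one plausible way such a bug arises: a guard that runs on the first page load but not on in-app navigation. The interview does not say what the actual misconfiguration was.

```python
from typing import Callable

# Hypothetical route table: which pages need admin rights and which links each page shows.
ROUTES = {
    "/": {"admin_only": False, "links": ["/bookings"]},
    "/bookings": {"admin_only": False, "links": ["/", "/admin"]},
    "/admin": {"admin_only": True, "links": ["/"]},
}

Navigator = Callable[[str, bool, int], str]


def guarded(path: str, is_admin: bool, depth: int) -> str:
    """Correct behavior: check permissions on every navigation (depth is unused here)."""
    if ROUTES[path]["admin_only"] and not is_admin:
        return "/login"
    return path


def guarded_on_entry_only(path: str, is_admin: bool, depth: int) -> str:
    """Buggy behavior: only the first page load is checked; in-app navigation skips the guard."""
    if depth == 0:
        return guarded(path, is_admin, depth)
    return path


def reachable(navigate: Navigator, is_admin: bool, start: str = "/", max_steps: int = 3) -> set[str]:
    """Breadth-first walk over in-app links, recording every page the user actually lands on."""
    seen = {navigate(start, is_admin, 0)}
    frontier = set(seen)
    for depth in range(1, max_steps + 1):
        next_frontier = set()
        for page in frontier:
            for link in ROUTES.get(page, {}).get("links", []):
                landed = navigate(link, is_admin, depth)
                if landed not in seen:
                    seen.add(landed)
                    next_frontier.add(landed)
        frontier = next_frontier
    return seen


print("/admin" in reachable(guarded_on_entry_only, is_admin=False))  # True: the bug
print("/admin" in reachable(guarded, is_admin=False))                # False: fixed
```

With the buggy guard, a regular user reaches `/admin` on the second navigation step (`/` → `/bookings` → `/admin`). That is why a test that only opens `/admin` directly would have passed.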

He was shocked — he had never realized these vulnerabilities existed. Later, Cursor helped him fix them, and the system ran perfectly. He was thrilled. This case was meaningful for us because it was a real story right from our own circle — and it showed TestSprite’s value for non-technical users.

CEO Jiao Yunhao: I want to add that what Li Rui described isn’t a typical enterprise-level case, but rather an unexpected personal case after we launched MCP. Our original positioning was as a traditional B2B QA tool for enterprise customers — yet we found an increasing number of Vibe Coders starting to use TestSprite.

Initially, we wondered: Do these Vibe Coders really need testing tools? We later realized many of them can’t code — they are often product managers, designers, or entrepreneurs. They use AI tools to generate code, but when that AI-generated code has errors they can’t fix, they get completely stuck.

At that point, they either need third-party testing tools to catch, locate, and help fix the problems — or pay a professional engineer to solve them. TestSprite became their lifeline, helping them systematically capture and fix bugs.

Many Vibe Coding tool users don’t understand the underlying logic of the code. They just see “a website got generated” without knowing what the AI actually did. So if they want to modify a function, they suffer. When they can no longer fix issues using natural language prompts, they turn to TestSprite, hoping our system can automatically detect and resolve the remaining problems, saving them time and boosting efficiency. In this sense, we are providing professional AI testing support for the Vibe Coders community.

That said, most TestSprite customers are still businesses, and of many different kinds. The fitness coach was an entrepreneur running his own business, but on the corporate side our mainstream customers are mainly tech companies. Especially over the past two years, the AI boom has fueled a global startup wave in the Bay Area, in China, and worldwide.

Whether for fundraising or rapid product launches, everyone is building. With today’s lowered development barriers, any small team can create its own application. In this environment, launching a product is much easier — but competition is becoming far more intense.

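Take Product Hunt, a very popular product launch platform in the U.S. Three or four years ago, only a few dozen new products launched there each day, and one was chosen as “Product of the Day,” so everyone took it seriously. Today things are completely different. Hundreds of new products launch on Product Hunt every day, competition is fierce, and the bar for launching keeps dropping. Copying, cloning, and knockoffs also happen very fast; a product that goes viral today often has an open-source clone tomorrow. In this environment, “good ideas” are no longer worth much, and competition comes back to quality and user experience.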

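Similar products, testing tools like ours included, keep multiplying. Their features look alike and the interface is not a differentiator, so competition comes down to feature accuracy, speed to launch, reliability and stability, and whether the product breaks or goes down in edge cases and degrades the user experience. That puts testing back in the spotlight. Whether a startup can push its software quality to the limit will decide whether it stands out. We believe companies that want a place in the market must take quality control seriously, and quality control is impossible without testing. TestSprite wants to be the partner that helps these companies clear the “quality bar.”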

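Here is an enterprise example. Jinix (formerly Princeton Pharmatech), a medical supplier in San Francisco, has been our customer for a long time. The company has operated in San Francisco for many years with annual revenue of nearly USD 10 million. It originally focused on medicine and health care and had little experience with software development.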


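When the AI boom took off, they also wanted to launch their own AI tools to help patients and partner hospitals. Lacking development experience, they relied heavily on Vibe Coding tools like Cursor for rapid development, because even if they hired software engineers, they wouldn’t know how to manage them. A Vibe Testing tool like ours was also very useful to them, running automated tests after every round of code generation. Their development pace is very fast: whenever a Vibe Coding tool generates code, they immediately test it with our system and, once it checks out, release a beta to a subset of patients.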

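Their core users are patients with rare diseases, such as people living with ALS. With this fast develop-and-test process, they can take a new feature from a concept proposed by management to a usable product in front of test users within one or two weeks. When users give feedback, they immediately modify the feature, retest, and release again, so the whole development and release loop is very efficient. In fact, they iterate faster than some professional software outsourcing firms.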

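Sometimes a funny situation arises: their engineers move so fast that the boss hasn’t yet decided on the next feature direction. We’ve seen this happen several times: every planned feature is already live, but no new requirements have been raised. It shows that, with our help, they reached a development rhythm where, for the first time, “engineering is faster than decision-making.” For us this is a very positive sign, and it convinces us that more and more companies will adopt this efficient model of closing the loop from idea to delivery in one or two weeks.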

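These days we often hear, “Vibe coding is fast.” But a complete process goes beyond coding to include vibe testing, deployment, launch, market validation, and user feedback. The whole cycle is already far faster than it was three to five years ago. We hope TestSprite can play a key role in it: helping more people turn an idea into a product they can deliver to users within two weeks, instead of spending those two weeks still wrestling with Cursor over whether the code runs. We want to help them complete the whole loop from concept to delivery and truly turn ideas into reality.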

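ZP: Could you share more about TestSprite’s concrete progress in commercialization and product iteration? We saw you recently released version 2.0. Could you talk about your current commercial traction and the highlights of the new version?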

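CEO Jiao Yunhao: Our progress can be viewed in two parts. Our previous round was last year, a Pre-seed round of about USD 1.5 million. This year we closed a new Seed round, bringing total funding to more than USD 8 million. Most of that money has gone into product R&D, because we are a deep-tech developer tool company. Our customers, developers, are the pickiest group in the world, and building a tool that truly works well for them is very hard.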

Li Rui just mentioned our moat and differentiated thinking. Many people ask me: “There are already many tools that can perform testing nowadays. For example, ChatGPT can help generate unit tests — so what’s your unique edge?”

It’s true that there are plenty of tools on the market that can generate or manage test cases. But our mission is to push the automation and commercial experience to the extreme — so users feel: easy to start; smooth onboarding; an accessible and friendly price point.

Currently, most testing-related products are priced from USD 1,000 to several thousand. Some even charge per test — one test or one run could cost USD 40.

We’ve taken a lighter, more inclusive pricing model: like Cursor, charged via monthly subscription. Just USD 19 per month. The price is low enough that competitors have actually recommended us to their own customers (laughs). For instance, one customer told us they were negotiating with another AI testing company, but the offer was too expensive. The competitor straight-up suggested they “try TestSprite — low-priced and quite effective.” This type of word-of-mouth recommendation is one of the reasons we stand out among similar products.

Our release of the 2.0 MCP version earlier this year was a pivotal milestone. This version lets developers call TestSprite with one click inside mainstream AI coding tools like Cursor and Trae. It greatly improved usability and triggered explosive growth in our user base: about three or four months ago we had roughly 5,000 registered users, and now we have nearly 40,000, roughly an eightfold increase. Most of that surge happened in the past few months.

Looking back at our first year, the road was actually tough. It takes a long preparation phase to craft a tool that truly earns developer recognition. We spent significant time understanding user needs and refining product details. But once the product experience clicked, market feedback was rapid. Now, our user reviews are increasingly positive. Sometimes on Intercom (our live chat system), we receive moving feedback: not bug reports or feature requests, but lengthy thank-you notes to our team. I remember being the first to see one such “thank-you letter” — I was deeply touched and immediately forwarded it to Li Rui and the entire team. That user spent ten minutes just to say, “Thank you for making such a useful tool.” Moments like that represent success for us. We hope for many more.

When evaluating the company’s success, we don’t just look at funding, user base, or market share. We care more about whether each user truly enjoys the product and gets real help from it. Our low price and strong features are meant to make testing affordable for everyone. Historically, many startups avoided testing mainly because of cost; it was seen as something “only wealthy companies can afford.” Even over the past three to five years, relying on SaaS testing tools instead of hiring QA staff still meant a significant expense. Typically, only companies past Series A or with a large seed round could afford serious testing.

We want to change that: even a solo developer should be able to test software. Today it’s so easy to build software — but why not build good software? We believe “being able to make software” and “being able to make good software” are completely different things. In a highly competitive era with abundant user choices, only quality products endure. Though there’s still a long road ahead, TestSprite wants to help developers, entrepreneurs, students — even non-technical beginners — make software better and more reliable.

That’s why we’ve decided not to raise prices for a considerable time. Whatever competitors think, we want TestSprite to be the most affordable and inclusive testing tool on the market. Like Cursor, we aim to push the “for everyone” philosophy as far as it can go. Our goal is for TestSprite to be accessible not only to mid-sized and large companies, but also to early-stage teams, small businesses, and individual developers. We often say our audience spans SMBs and SMEs (small and medium-sized businesses and enterprises), all the way down to solo creators launching side projects and students working on class assignments.

From a market perspective, our main customers today are still SMBs. In the future, we plan to explore both ends: on one hand, continue developing more enterprise-grade features to meet big clients’ needs in data security, integrations, and permission management — raising the product’s upper limit; on the other hand, maintain simplicity and ease of use, so even users without any technical background can operate smoothly. We don’t want TestSprite to become complex — we want it to serve both large corporations and individual developers, keeping both ends in balance. This has been part of our original mission since the very beginning.

Essentially, we aim to build the “Cursor of the testing domain.”

Just as Cursor is now widely used by both professional engineers and programming beginners, we hope TestSprite can benefit users across different skill levels. While tools like Lovable are more beginner-friendly, Cursor also attracts a large number of learners and semi-technical users. We believe that’s a key reason for its success — serving professional developers while also helping newcomers.

Amazon recently purchased an enterprise license for Cursor.

Even though Amazon has its own internal coding tools, it still chose to buy Cursor licenses to promote internal adoption. This shows that even top-tier tech companies recognize the value of AI programming tools. In fact, Amazon employees had long been using Cursor informally; the enterprise purchase simply formalized and encouraged company-wide adoption.

We see this as a very positive sign. On one hand, Cursor is not a direct competitor — their focus is AI code generation, while ours is AI testing automation. On the other hand, they started about one to two years earlier than us, making them a valuable learning example. Coincidentally, our funding and product iteration pace closely aligns with Cursor’s trajectory:

- Two years ago, Cursor raised about $8 million in seed funding.

- In 2024, they completed a $60 million Series A round led by a16z.

- From a user growth perspective, they had only tens of thousands of users in early 2023, but crossed 100,000 by year’s end.

This stage-by-stage growth curve is strikingly similar to our current situation. We suspect this “coincidence” arises because we serve very similar user groups — AI developers and “Vibe Coders.” That means our product direction, market rhythm, and user growth paths share common references.

That’s why we often say Cursor is a respected benchmark we constantly learn from. They’ve proven this principle: when the barrier to development drops, creators multiply; and when creation becomes easy, testing and quality become the next essential need.

---

04 Global Teams Redefining Engineers’ Roles — Using Agents to Address Global Code Quality Gaps

ZP: For the Chinese market: What specific challenges do Chinese engineers / PMs face in software testing or AI-generated code verification? How does TestSprite position itself to serve these clients?

CEO Jiao Yunhao:

Our core team includes myself and Li Rui from China; the rest were recruited in Seattle, USA, so we’re highly diverse. Our team features local U.S. developers and marketers, as well as many Chinese members. From Day One, our company was incorporated in Delaware, USA — we started from a U.S. technical base and expanded globally.

Choosing the U.S. and European markets as the entry point was driven by several factors — chief among them labor costs. Over recent years, inflation in the U.S. and Europe has driven human resource expenses sharply upward. Both traditional software testing and software development still rely heavily on human labor. Even with the rise of tools like Cursor, salaries for engineers remain high and hiring costs continue to climb. We started in Western markets with a clear motivation — to help companies better leverage their engineering resources and increase efficiency.

China, India, and other Asian countries have many excellent engineers, and labor there is relatively cheap. That doesn't make these markets less important, though; they remain attractive. Cursor, for instance, has high market penetration in both China and India, and a significant share of our current users come from these regions.

Even if manpower costs are lower in China, it’s unrealistic to rely solely on humans for testing. In use cases like regression testing or concurrency testing, execution may need to happen thousands or tens of thousands of times per second — tasks that must be handled programmatically and cannot be covered by manual testing. Filling testing gaps purely with people is impractical; tools and automation are essential.
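The following is a minimal Python sketch of a concurrency check that is trivial for a program but impossible to do by hand. It performs 10,000 increments against a shared counter from 32 threads, then asserts that no update was lost. The `Counter` class, the iteration count, and the thread count are illustrative assumptions, not TestSprite code. A real concurrency test would target your own shared resource, such as an inventory count, a quota, or a session store.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class Counter:
    """Hypothetical shared resource under test (e.g. an inventory or quota counter)."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # The lock makes the read-modify-write atomic; without it,
        # the concurrency test below may start failing intermittently.
        with self._lock:
            self.value += 1


def run_concurrency_test(iterations: int = 10_000, workers: int = 32) -> int:
    """Hammer one Counter from many threads and return its final value."""
    counter = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # One submitted call per iteration; the pool runs them concurrently.
        futures = [pool.submit(counter.increment) for _ in range(iterations)]
        for future in futures:
            future.result()  # re-raise any exception from a worker thread
    return counter.value


if __name__ == "__main__":
    result = run_concurrency_test()
    assert result == 10_000, f"lost updates: {result}"
    print(f"ok: {result} increments")
```

If you remove the lock inside `increment`, this check may begin to fail only occasionally, depending on the interpreter and the load. That is exactly the kind of defect that surfaces only when a check is repeated many times, which is why it has to be automated.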

Another major challenge in the Chinese market lies at the model level. Some models are less generalizable or come with usage inconveniences. Language differences also matter — many Chinese engineers prefer writing prompts in Chinese, but most mainstream models are trained primarily on English datasets, which means their understanding of Chinese prompts can be weaker. Writing prompts in English generally yields better results. Overall, these aren’t fundamental roadblocks; they’re more friction points in workflow details.

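In addition, engineers in China may not express themselves as naturally in English. This can affect the clarity of their prompts and slightly reduce the effectiveness of coding tools like Cursor or TestSprite. But I don't think this is a major obstacle, because on the product-integration side we are now well connected with the domestic ecosystem. For example, TestSprite can already be loaded directly from the Trae marketplace: users just click to load it and enter an API key.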

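Globally, beyond China, we now have users on almost every continent. When we looked at our internal data map a while ago, almost every region had TestSprite users, apart from Greenland and other largely uninhabited islands. Our tens of thousands of users are spread around the world, concentrated mainly in the United States, Canada, France, Germany, Italy, and other countries.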

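ZP: From a recruiting perspective: what backgrounds and abilities do you look for in engineers and product managers who want to join TestSprite? What opportunities does the company offer in team culture, global collaboration, and technical growth?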

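CTO Li Rui: Right now we lean toward engineers with experience building AI agent systems. More important than experience, though, is the ability to learn quickly. The AI era has been developing for two or three years, but for a new technology platform that is still a very short time. Everything is changing fast: new ways of thinking and new paradigms keep emerging, many old practices are being broken, and new capabilities are being created.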

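So we place more weight on a candidate's ability to adapt to new environments and to think and learn proactively. People like that can quickly make sense of change and find their direction in a new environment, and that is a trait we value highly.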

ZP: If you had to describe, in one sentence, the core mission of yourself and of TestSprite at this stage, what would you say?

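CEO Jiao Yunhao: In one sentence: we want users to have confidence in AI-generated code, so they can ship it to production and deliver it to end users without worry. Many people still have doubts about AI-generated code; they don't know whether it is reliable or secure. So they need a third-party verification mechanism to give them that confidence.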

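Our mission is to provide that foundation of confidence, to tell users: don't be afraid, we've already verified the code for you, and it should be safe to ship. If there really is a problem, it means we didn't catch it or didn't test enough, and we can keep adding coverage. We want users to stop approaching AI-generated code with fear, doubt, or distrust. That is our core mission.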

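ZP: Many engineers and product managers are anxious because AI is gradually taking over parts of their work. Facing the rise of “AI-generated code,” what key abilities do you think engineers and product managers need?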

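CEO Jiao Yunhao: I understand that anxiety, but we've noticed something interesting in our hiring. Although AI is advancing quickly, our demand for talent hasn't decreased. We just closed a funding round and are still expanding the team. We still very much need engineers, product managers, designers, and front-end and back-end developers. The reason is simple: demand for highly skilled engineers and top talent has never gone away. AI has not replaced them, and what we need goes well beyond the ability to write a few lines of code, which AI can already do.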

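Take my experience at Amazon as an example: solving a problem usually involves four steps. First, a product manager or senior management raises a requirement, which may come from user feedback or from management's own market analysis. Second, engineers produce a high-level design that sets the general direction for solving the problem and decides which tools, platforms, or algorithms to use. This stage is conceptual. Third, once there is consensus on the high-level design, engineers produce a low-level design: a more concrete implementation plan covering which functions and modules to write and what each module should do. Only in the final step is the work handed to junior engineers to implement, or, nowadays, to tools like Cursor or Claude Code.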

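As this process shows, writing code is actually the last step, not the most critical one. What matters most is the upfront thinking and design: accurately understanding the requirement and planning the solution. Whether at a big company or a startup, the worst outcome is wasted time. For example, spending months building a feature nobody uses is fatal in today's fast-moving market. So a product manager's core skill has always been the ability to quickly identify what users truly need, the most valuable requirements.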

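From an engineer's perspective, the ability to propose solutions is just as crucial. As Li Rui mentioned, AI can help implement a solution, but people still have to come up with it. Which architecture or which algorithm to choose, for instance, are judgments engineers must make. The same problem usually has several solutions, each with pros and cons, and the engineer's job is to weigh those trade-offs and make the most sensible choice. That is where much of a senior engineer's value lies.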

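For junior engineers, the focus is on doing low-level design well, that is, breaking an agreed architecture down into executable modules and logic. They also need to learn to use their tools well: how to use Cursor, how to write rules, how to write effective prompts, how to do context engineering, and so on. These skills still matter today, but in the long run I don't think they are the most essential ones. In two or three years, AI may be able to understand requirements on its own, and humans will no longer need to write complex prompts.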

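Finally, I want to stress an ability that is often overlooked but critical: communication. Whether with colleagues or customers, solving many problems depends on it. How you express your ideas clearly, how you reconcile differing opinions on the team, how you explain what support you need: these are all key to getting things done. When hiring, we put great weight on a candidate's communication skills, problem-solving skills, and ability to propose solutions.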

ZP: Compared with American companies, what do you see as the main challenges for Chinese companies?

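CEO Jiao Yunhao: One fairly obvious difference comes to mind: compared with U.S. companies, Chinese companies lag somewhat in automation. In the past, labor in China was abundant and relatively cheap, so many companies preferred to hire more people rather than invest in automated systems. In the U.S., for example, almost every company has a mature GitHub CI/CD workflow, whereas in China many companies either have no complete pipeline or have built only part of one.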

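We see this as a current weak spot for domestic companies: many haven't fully built out their automation. In the past, they may have felt that “hiring someone to do this” and “building automation tooling” cost about the same. That has changed. People are getting more expensive and tools are getting cheaper, and that balance is being upended.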

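So when we talk with Chinese customers now, we often recommend that their first step be to build their pipeline and set up automation. It's actually not hard. It may just mean stitching a few AI tools together or installing a plugin in Cursor, which takes ten-odd minutes. This will be crucial for improving Chinese companies' efficiency going forward.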
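As a rough illustration of what such a pipeline enforces, here is a toy Python model of fail-fast stage ordering. Each stage runs only if every earlier stage passed, so a failing test blocks deployment automatically. The `Stage` and `run_pipeline` names and the stage list are hypothetical. A real setup would express the same ordering in a CI system such as GitHub Actions rather than in application code.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    """One hypothetical pipeline stage; `run` returns True if the stage passed."""
    name: str
    run: Callable[[], bool]


def run_pipeline(stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order, stopping at the first failure (fail-fast).

    Returns (overall_passed, log_of_stages_that_actually_ran).
    """
    log: list[str] = []
    for stage in stages:
        ok = stage.run()
        log.append(f"{stage.name}: {'passed' if ok else 'FAILED'}")
        if not ok:
            return False, log  # later stages, e.g. deploy, never run
    return True, log


def unit_tests() -> bool:
    # Hypothetical stand-in for a real test suite.
    return sum([1, 2, 3]) == 6


stages = [
    Stage("lint", lambda: True),
    Stage("unit tests", unit_tests),
    Stage("end-to-end tests", lambda: False),  # simulate a failing AI-generated change
    Stage("deploy", lambda: True),
]
passed, log = run_pipeline(stages)
print(passed)  # False
print(log)     # "deploy" does not appear: it was never reached
```

The design choice being illustrated is ordering: verification stages sit before deployment, so a change that fails testing cannot reach users without someone deliberately overriding the pipeline.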

-----------END-----------

🚀 We are recruiting a new cohort of interns

🚀 We are looking for creative post-2000s founders

About Z Potentials