A Comprehensive Beginner’s Guide to AI Agents

# Chapter 1: First Encounter with Agents

Welcome to the world of **Agents**!

At a time when *Artificial Intelligence* is reshaping industries worldwide, the **Agent** has emerged as one of the core concepts driving technological change and application innovation. Whether you aim to become a researcher or an engineer, or simply want to understand cutting-edge technology, mastering agents is a crucial step.

In this chapter, we’ll explore fundamental questions:

- **What is an agent?**

- **What are its main types?**

- **How does it interact with its environment?**

By the end, you'll have a strong foundation for deeper study.

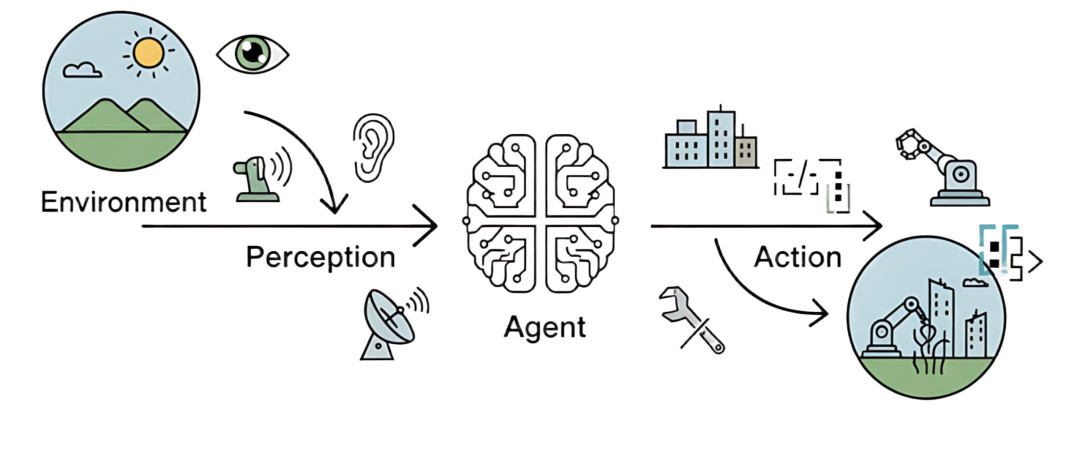

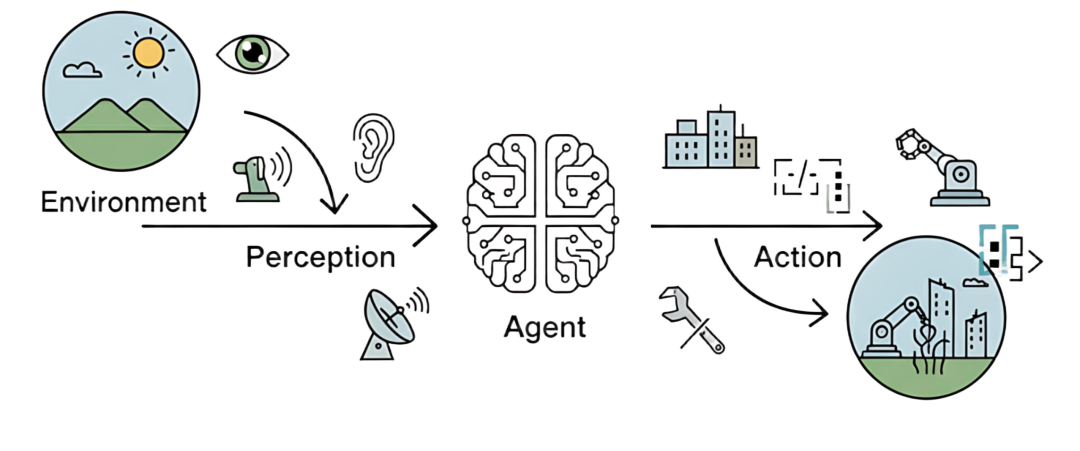

*Figure 1.1 — Basic perception–action loop between an agent and its environment*

Open-source repository:

[https://github.com/datawhalechina/Hello-Agents](https://github.com/datawhalechina/Hello-Agents)

---

## 1.1 What is an Agent?

An **agent** in AI is:

> **Any entity that can perceive its environment through sensors and autonomously take actions via actuators to achieve specific goals.**

**Key elements:**

- **Environment** – External world the agent operates in

*(Road traffic for an autonomous car; financial market for a trading algorithm)*

- **Sensors** – Cameras, microphones, radar, APIs

- **Actuators** – Robotic arms, steering wheels, software APIs

- **Autonomy** – Independent decision-making based on perception and internal state

The **closed loop** from perception to action — illustrated in Figure 1.1 — underpins all agent behavior.

---

### 1.1.1 Agents from a Traditional Perspective

Before the rise of **Large Language Models (LLMs)**, AI pioneers built “traditional agents,” which evolved through five stages:

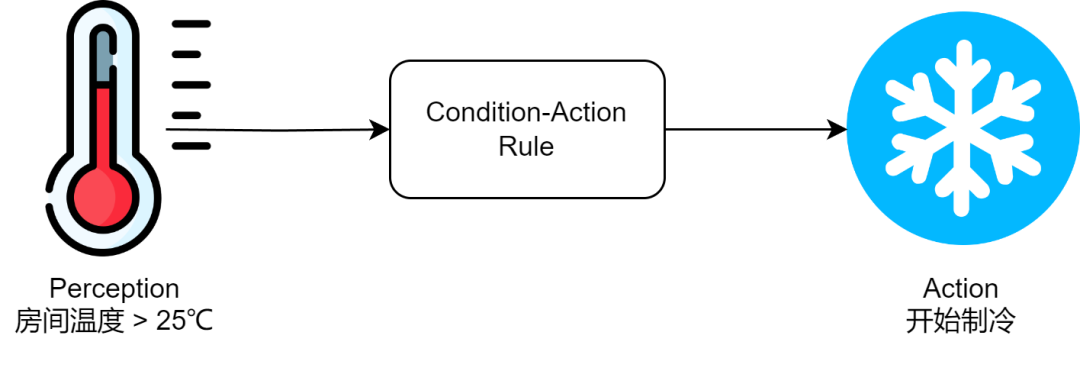

1. **Simple Reflex Agents**

- Rule-based *condition–action* logic

- Example: Thermostat — “IF temp > set value → Activate cooling”

- **Limit:** No context or memory

*Figure 1.2 — Decision logic diagram of a simple reflex agent*

2. **Model-Based Reflex Agents**

- Maintain an internal *world model* for unseen state

- Example: Autonomous car keeps track of surroundings even when sensors lose sight

3. **Goal-Based Agents**

- Plan actions to achieve desired states

- Example: GPS navigation finds optimal routes using search algorithms (e.g. A*)

4. **Utility-Based Agents**

- Assign utility value to outcomes and maximize satisfaction

- Handle multiple, potentially conflicting goals

5. **Learning Agents** *(Reinforcement Learning)*

- Improve via experience and rewards

- Example: AlphaGo improves its strategy through self-play rather than hand-written rules

**Evolution:**

From thermostats → cars with internal models → planners → decision-makers → learners — building the bedrock for today’s intelligent agents.
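The first two stages can be contrasted in a few lines. The sketch below is illustrative only: the function and class names, the `"cool"`/`"idle"` action labels, and the use of `None` to represent a sensor dropout are assumptions made for this example, not part of any particular thermostat API.

```python
from dataclasses import dataclass
from typing import Optional


def simple_reflex_thermostat(temp_c: float, set_point_c: float) -> str:
    """Condition-action rule: reacts only to the current reading."""
    return "cool" if temp_c > set_point_c else "idle"


@dataclass
class ModelBasedThermostat:
    """Keeps a tiny internal state: the last valid temperature reading."""

    set_point_c: float
    last_known_temp_c: Optional[float] = None

    def act(self, temp_c: Optional[float]) -> str:
        # A reading of None stands for a sensor dropout (an assumption here).
        if temp_c is not None:
            self.last_known_temp_c = temp_c
        if self.last_known_temp_c is None:
            return "idle"  # nothing is known yet, so do nothing
        return "cool" if self.last_known_temp_c > self.set_point_c else "idle"
```

The reflex version can only answer for the reading it is handed. The model-based version keeps cooling through a dropout when its last valid reading was above the set point, because that reading is stored as internal state.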

---

### 1.1.2 LLM-Driven New Paradigm

**LLM agents** differ fundamentally:

- **Traditional agents:** Explicitly programmed, deterministic, human-built knowledge models

- **LLM agents:** Implicit world models via large-scale pretraining, emergent capabilities, flexible handling

**Advantages:**

- Process natural language commands directly

- Plan, reason, and adapt dynamically

- Invoke tools autonomously

Example: given the request *“Plan a trip to Xiamen,”* an “Intelligent Travel Assistant” can autonomously:

1. **Plan** subtasks

2. **Call APIs/tools** for missing info

3. **Adjust** itinerary based on constraints

---

### 1.1.3 Agent Classifications

**By Decision Architecture:**

- **Simple Reactive Agent**

- **Model-Based Agent**

- **Goal-Based Agent**

- **Utility-Based Agent**

- **Learning Agent** *(meta capability)*
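One way to see how a utility-based agent differs from a goal-based one is to score every option instead of only checking whether it reaches the goal. The following is a minimal sketch under invented assumptions: the travel options, their costs and durations, and the linear utility with its default weights are all hypothetical.

```python
# Hypothetical candidate plans; every number here is made up for illustration.
OPTIONS = {
    "high_speed_rail": {"cost": 550.0, "hours": 4.5},
    "flight": {"cost": 900.0, "hours": 3.0},
    "overnight_bus": {"cost": 200.0, "hours": 11.0},
}


def utility(option: dict, cost_weight: float = 0.001, time_weight: float = 0.1) -> float:
    """Higher is better: penalise both money spent and time spent."""
    return -(cost_weight * option["cost"] + time_weight * option["hours"])


def choose(options: dict, **weights: float) -> str:
    """Pick the option with the highest utility under the given weights."""
    return max(options, key=lambda name: utility(options[name], **weights))


print(choose(OPTIONS))                     # balanced weights
print(choose(OPTIONS, time_weight=0.3))    # traveller in a hurry
print(choose(OPTIONS, cost_weight=0.003))  # traveller on a budget
```

All three options reach the destination, so a pure goal test cannot separate them. Changing the weights changes the choice, which is how a utility function trades off conflicting goals such as price against travel time.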

**By Time/Reactivity:**

- **Reactive** – Fast, low-latency

- **Deliberative** – Plan-focused

- **Hybrid** – Combine speed + foresight

**By Knowledge Representation:**

- **Symbolic AI** – Explicit rules, explainable but brittle

- **Sub-symbolic AI** – Neural networks, robust intuition but opaque reasoning

- **Neuro-Symbolic AI** – Merge perception (fast) and reasoning (slow)

---

## 1.2 Composition & Operating Principles

### 1.2.1 Task Environment — The PEAS Model

An agent's task environment is described by four elements:

- **P**erformance measure

- **E**nvironment

- **A**ctuators

- **S**ensors

Travel assistant example: the task environment is partially observable, stochastic, multi-agent, and both sequential and dynamic.
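A PEAS description can be written down as a small data structure, which makes it easy to review before any agent code exists. In the sketch below, the `PEAS` class and every entry filled in for the travel assistant are assumptions for illustration; only the four element names and the environment properties come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PEAS:
    """Task-environment description: one list of entries per PEAS element."""

    performance: list[str]
    environment: list[str]
    actuators: list[str]
    sensors: list[str]

    def summary(self) -> str:
        rows = [
            ("Performance measure", self.performance),
            ("Environment", self.environment),
            ("Actuators", self.actuators),
            ("Sensors", self.sensors),
        ]
        return "\n".join(f"{label}: {', '.join(items)}" for label, items in rows)


# Hypothetical entries for the travel assistant; adjust to the actual system.
travel_assistant = PEAS(
    performance=["user satisfaction", "itinerary fits budget and schedule"],
    environment=["weather services", "booking sites", "the user's messages"],
    actuators=["tool/API calls", "text replies to the user"],
    sensors=["tool/API responses", "user input"],
)

# Environment properties named in the text above.
ENVIRONMENT_PROPERTIES = (
    "partially observable", "stochastic", "multi-agent", "sequential", "dynamic",
)

print(travel_assistant.summary())
```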

---

### 1.2.2 The Agent Loop

Agents interact with their environment through a **continuous loop** *(Figure 1.5)*:

1. **Perception** – Gather observations via sensors/APIs

2. **Thought** – Reason & plan steps; choose tools

3. **Action** – Invoke actuators/tools to change environment

4. **Observation** – Receive feedback from the environment, then repeat the loop
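The loop can be sketched with a toy number-guessing environment standing in for the real world. Everything here (the `GuessingEnvironment` class, the feedback strings, the binary-search "thought" step) is an assumption chosen to keep the example self-contained; an LLM agent would replace the thought step with a model call.

```python
class GuessingEnvironment:
    """Toy environment: hides a number and answers each guess."""

    def __init__(self, secret: int) -> None:
        self._secret = secret

    def step(self, guess: int) -> str:
        if guess < self._secret:
            return "too low"
        if guess > self._secret:
            return "too high"
        return "correct"


def run_agent_loop(env: GuessingEnvironment, low: int = 1, high: int = 100,
                   max_steps: int = 10) -> list:
    trace = []
    guess, observation = None, None  # nothing has been perceived yet
    for _ in range(max_steps):
        # Perception + Thought: fold the latest feedback into the search range.
        if observation == "too low":
            low = guess + 1
        elif observation == "too high":
            high = guess - 1
        guess = (low + high) // 2
        # Action: submit the guess. Observation: read the environment's reply.
        observation = env.step(guess)
        trace.append((guess, observation))
        if observation == "correct":
            break
    return trace


print(run_agent_loop(GuessingEnvironment(secret=37)))
# [(50, 'too high'), (25, 'too low'), (37, 'correct')]
```

Each pass uses the previous observation to decide the next action, which is the closed-loop property the four steps describe. The `max_steps` cap is a common safeguard against a loop that never reaches its goal.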

---

### 1.2.3 Structured Interaction Protocols

LLM agent outputs often follow a fixed format:

`Thought: [Reasoning]`

`Action: function_name(arg_name="value")`

Each observation is the tool or API output, reformatted as a natural-language description and fed back to the model.

Example:

`Thought: Need to check Beijing weather`

`Action: get_weather(city="Beijing")`

`Observation: Sunny, 25°C, light breeze`
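A structured format is useful because the host program can parse it mechanically. The sketch below shows one way to do that with a regular expression plus Python's `ast` module; the `parse_step` name and the returned dictionary layout are assumptions for this example, and it only accepts literal arguments such as strings and numbers.

```python
import ast
import re

THOUGHT_RE = re.compile(r"^Thought:\s*(.*)$", re.MULTILINE)
ACTION_RE = re.compile(r"^Action:\s*(\w+)\((.*)\)\s*$", re.MULTILINE)


def parse_step(llm_output: str) -> dict:
    """Split one model reply into its thought, tool name, and arguments."""
    thought = THOUGHT_RE.search(llm_output)
    action = ACTION_RE.search(llm_output)
    if action is None:
        raise ValueError("no 'Action: name(...)' line found")
    # Parse the argument list as Python syntax without executing anything.
    call = ast.parse(f"_({action.group(2)})", mode="eval").body
    return {
        "thought": thought.group(1).strip() if thought else "",
        "tool": action.group(1),
        "args": [ast.literal_eval(node) for node in call.args],
        "kwargs": {kw.arg: ast.literal_eval(kw.value) for kw in call.keywords},
    }


reply = 'Thought: Need to check Beijing weather\nAction: get_weather(city="Beijing")'
print(parse_step(reply))
```

Using `ast.literal_eval` rather than `eval` means a malformed or malicious reply raises an error instead of running arbitrary code.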

---

## 1.3 Hands-On: Build Your First Agent

**Goal:**

> “Check today’s Beijing weather, then recommend suitable attractions.”

**Steps:**

1. **Install dependencies:**

`pip install requests tavily-python openai`

2. **Design a system prompt** that defines the agent's role, available tools, and action format.

3. **Implement tools**:

- `get_weather(city)` via wttr.in API

- `get_attraction(city, weather)` via Tavily API

4. **Create LLM client** *(OpenAI-compatible)*

5. **Run action loop:**

- Maintain `prompt_history`

- Parse actions from LLM output

- Execute tool calls

- Append observations
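As one possible shape for the `get_weather(city)` tool from step 3, the sketch below queries wttr.in and turns the response into a short observation string. It assumes wttr.in's JSON output (`?format=j1`) contains a `current_condition` entry with `temp_C` and `weatherDesc` fields, so check the service's current documentation before relying on those names. It also swaps in the standard library's `urllib` for `requests` so that it runs without extra installs, and the helper name `format_weather` is introduced here for illustration.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen


def format_weather(city: str, payload: dict) -> str:
    """Turn the (assumed) wttr.in JSON structure into an observation string."""
    current = payload["current_condition"][0]
    description = current["weatherDesc"][0]["value"]
    return f"{city}: {description}, {current['temp_C']}°C"


def get_weather(city: str, timeout: float = 10.0) -> str:
    """Fetch current weather; on failure, return the error as text."""
    url = f"https://wttr.in/{quote(city)}?format=j1"
    try:
        with urlopen(url, timeout=timeout) as response:
            payload = json.load(response)
        return format_weather(city, payload)
    except (OSError, ValueError, KeyError, IndexError) as exc:
        # Network errors are OSError subclasses; bad JSON raises ValueError.
        return f"Error: could not fetch weather for {city} ({exc})"
```

Returning errors as strings instead of raising lets the agent read the failure as an ordinary observation and decide what to do next. A `get_attraction(city, weather)` tool could follow the same pattern around a search API.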

---

**Key Output Example:**

**Loop 1:**

`get_weather(city="Beijing")` → *Sunny, 26°C*

**Loop 2:**

`get_attraction(city="Beijing", weather="Sunny")` → *Summer Palace, Great Wall*

**Loop 3:**

`finish(...)` → *Final travel recommendation text*
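A three-loop trace like the one above could come out of a driver along the following lines. This is a sketch under several assumptions: the tools are stubs that return fixed strings, `ScriptedModel` replays canned replies in place of a real LLM client, actions use keyword arguments only, and the `answer` parameter of `finish` is a hypothetical name.

```python
import ast
import re

ACTION_RE = re.compile(r"^Action:\s*(\w+)\((.*)\)\s*$", re.MULTILINE)

# Stub tools with fixed results; real ones would call external APIs.
TOOLS = {
    "get_weather": lambda city: "Sunny, 26°C",
    "get_attraction": lambda city, weather: "Summer Palace, Great Wall",
}

SCRIPT = [
    'Thought: I need the weather first.\nAction: get_weather(city="Beijing")',
    'Thought: Sunny, so outdoor sights fit.\n'
    'Action: get_attraction(city="Beijing", weather="Sunny")',
    'Thought: I have enough information.\n'
    'Action: finish(answer="Sunny, 26°C: visit the Summer Palace or the Great Wall.")',
]


class ScriptedModel:
    """Stand-in for an LLM client: returns canned replies in order."""

    def __init__(self, replies):
        self._replies = iter(replies)

    def generate(self, prompt: str) -> str:
        return next(self._replies)


def parse_action(reply: str):
    match = ACTION_RE.search(reply)
    if match is None:
        raise ValueError("no Action line found")
    call = ast.parse(f"_({match.group(2)})", mode="eval").body
    return match.group(1), {kw.arg: ast.literal_eval(kw.value) for kw in call.keywords}


def run_agent(model, user_goal: str, max_loops: int = 5):
    prompt_history = [f"User: {user_goal}"]
    for _ in range(max_loops):
        reply = model.generate("\n".join(prompt_history))
        prompt_history.append(reply)
        name, kwargs = parse_action(reply)
        if name == "finish":
            return kwargs["answer"], prompt_history
        tool = TOOLS.get(name)
        observation = tool(**kwargs) if tool else f"Error: unknown tool {name}"
        prompt_history.append(f"Observation: {observation}")
    return None, prompt_history  # loop budget exhausted without finish


answer, history = run_agent(ScriptedModel(SCRIPT), "Check Beijing weather, then recommend attractions.")
print(answer)
```

Swapping `ScriptedModel` for a real client only requires an object with a compatible `generate` method; the loop, parsing, and tool dispatch stay the same.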

---

### Agent Capabilities Demonstrated:

- **Task decomposition**

- **Tool invocation**

- **Contextual reasoning**

- **Result synthesis**

---

## 1.4 Collaboration Modes

### 1.4.1 Agents as Developer Tools

Examples:

- **GitHub Copilot**

- **Claude Code**

- **Trae**

- **Cursor**

They integrate into workflows to assist coding, automate tasks, and increase efficiency.

---

### 1.4.2 Agents as Autonomous Collaborators

The user delegates a high-level goal, and the agent executes autonomously until the goal is complete.

Frameworks:

- **BabyAGI**

- **AutoGPT**

- **MetaGPT**

- **CrewAI**

- **AutoGen**

- **CAMEL**

- **LangGraph**

These frameworks vary in architecture, from single-loop agents to multi-agent teams and advanced control flows.

---

### 1.4.3 Workflow vs Agent

**Workflow:**

Predefined static sequence of steps (flowchart logic)

**Agent:**

Dynamic, goal-driven, reasoning-capable, adapts to environment changes

Example: Travel assistant reasons differently for sunny vs rainy days — not hard-coded.
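The structural difference can be shown in code: a workflow runs a step list fixed in advance, while an agent asks a decision function for each next step. In the sketch below all names are hypothetical, the steps are stubs, and `stub_decide` is a hand-written placeholder for the LLM's reasoning, included only so the example runs without a model.

```python
from typing import Callable, Optional

State = dict
Step = Callable[[State], State]


def check_weather(state: State) -> State:
    # Stub: copies a supplied forecast instead of calling a weather API.
    return {**state, "weather": state["forecast"]}


def suggest_outdoor(state: State) -> State:
    return {**state, "plan": "outdoor sightseeing"}


def suggest_indoor(state: State) -> State:
    return {**state, "plan": "indoor museum visit"}


def run_workflow(steps: list[Step], state: State) -> State:
    """Workflow: the order of steps is fixed by the author in advance."""
    for step in steps:
        state = step(state)
    return state


def run_agent(decide: Callable[[State], Optional[Step]], state: State,
              max_steps: int = 10) -> State:
    """Agent: a decision function picks each next step from the current state."""
    for _ in range(max_steps):
        step = decide(state)
        if step is None:  # the decision function judges the goal reached
            break
        state = step(state)
    return state


def stub_decide(state: State) -> Optional[Step]:
    # Placeholder for LLM reasoning; a real agent would query the model here.
    if "weather" not in state:
        return check_weather
    if "plan" not in state:
        return suggest_indoor if state["weather"] == "rainy" else suggest_outdoor
    return None


print(run_workflow([check_weather, suggest_outdoor], {"forecast": "rainy"})["plan"])
print(run_agent(stub_decide, {"forecast": "rainy"})["plan"])
```

On a rainy forecast the fixed workflow still suggests outdoor sightseeing, because its step list never looks at the weather it fetched. The agent loop adapts because the choice of next step is made at run time from the current state.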

---

## 1.5 Summary

We covered:

- **Definition & evolution** — from simple reflex to learning agents

- **LLM paradigm** — flexibility, tool use, reasoning

- **Operating loop** — perception, thought, action, observation

- **Hands-on build** — practical agent implementation

- **Collaboration modes** — tool-assist vs autonomous

- **Workflow vs agent** — static vs adaptive automation

**Next chapter:**

Explore the **history** of agents and how they evolved.

---

**References**:

[1] Russell, S., Norvig, P. *Artificial Intelligence: A Modern Approach*, 4th ed., 2020.

[2] Kahneman, D. *Thinking, Fast and Slow*, 2011.