Cursor Reveals for the First Time: “Training Is the Product” — The Secret Weapon Using Reinforcement Learning to Make AI Coding 4× Faster

Why AI Programming Assistants Often Feel "Off"

Have you noticed that AI programming assistants are either smart but slow, or fast but inaccurate?

I wrestled with this trade-off until Sasha Rush of Cursor presented at Ray Summit 2025.

Their team unveiled Cursor Composer, a model trained with reinforcement learning (RL) to be both highly intelligent and extremely fast.

---

A New Mindset: Training-as-Product

The most important takeaway from the talk: Cursor isn’t chasing meaningless benchmark scores.

Instead, they focus on real-world programming workflows, training the model in actual codebase environments.

Key Ideas:

- RL training includes coding conventions, tool usage, and parallel execution strategies.

- The training environment is identical to the product environment—the AI "lives" the same experience as a real user.

- This training-as-product philosophy changes how AI tools should be built.

---

Why “Fast and Smart” Matters

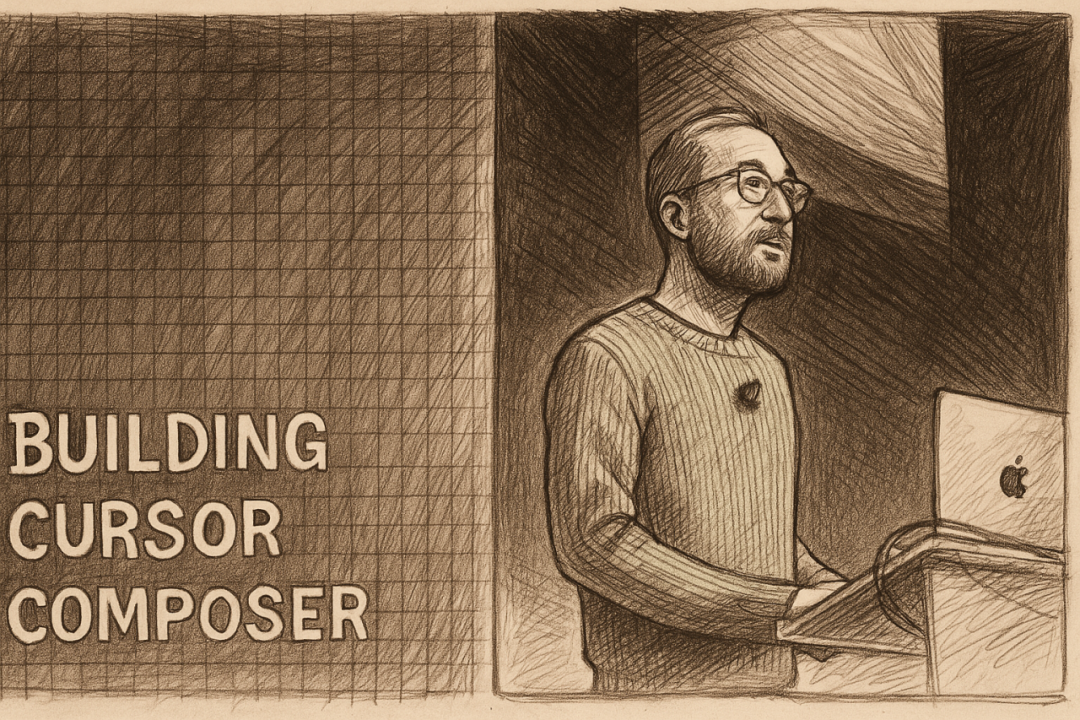

Sasha Rush showed that Cursor Composer:

- Is on par with frontier models in internal benchmarks.

- Outperforms last summer’s top mainstream releases.

- Beats all fast but less intelligent models.

- Generates tokens 4× faster than peers with similar capability.

Speed isn’t just a metric—it’s central to flow in coding.

> A 30-second AI response breaks concentration. A 2-second response keeps your brain in the zone.

The inspiration came from Cursor Tab, loved for speed and smoothness.

A prototype nicknamed Cheetah embraced this principle—fast, interactive agentic coding.

Users gave it glowing feedback, with some calling it alien technology.

---

Agent RL: Training AI to Behave Like a Real Developer

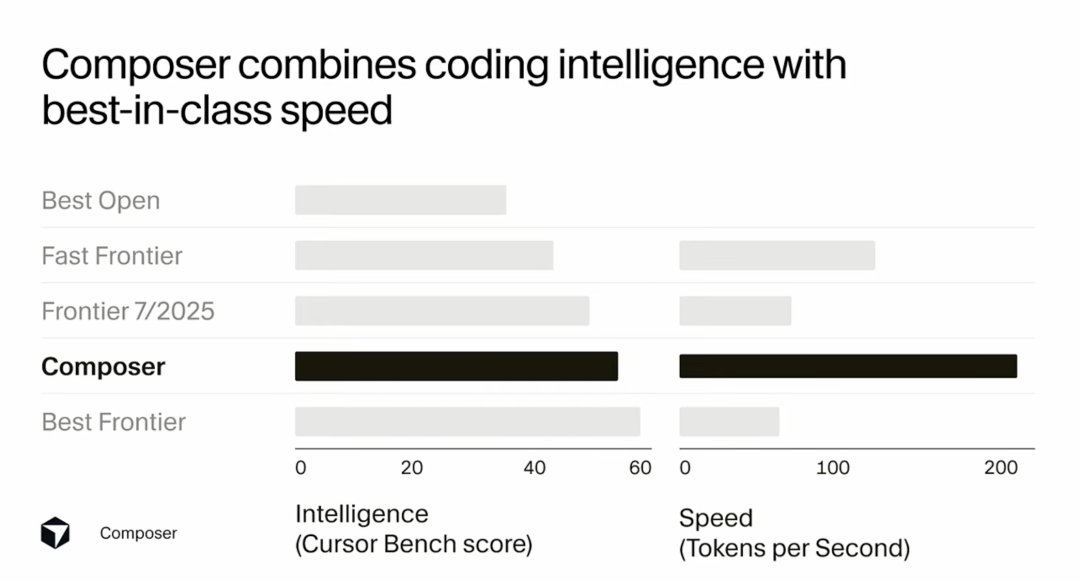

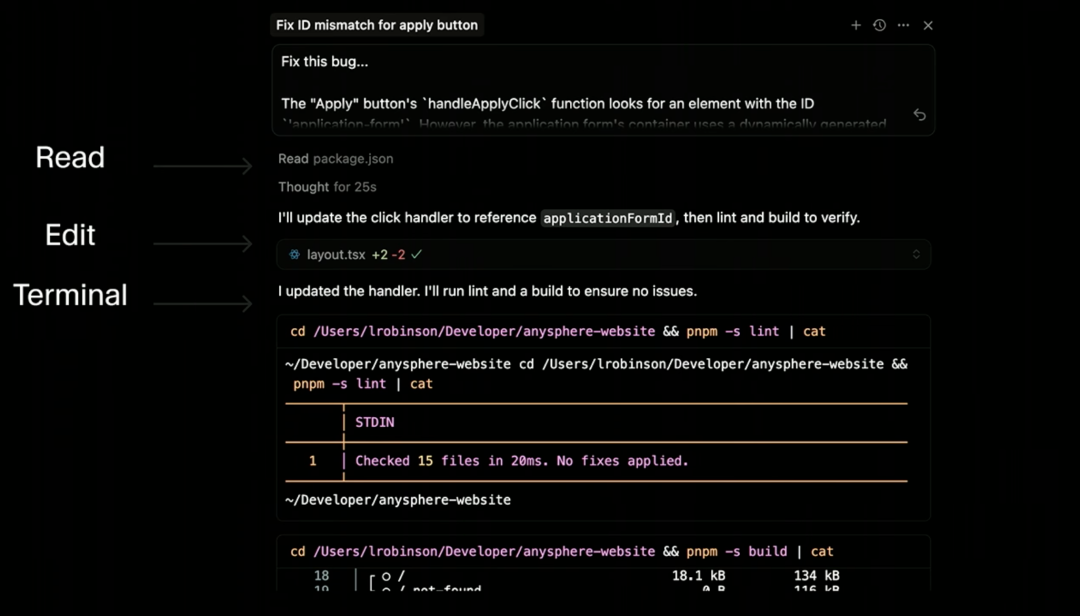

Workflow:

- User query → Cursor backend.

- Agent chooses from ~10 tools:

  - Read files

  - Edit files

  - Search codebase

  - Gather lints

  - Run terminal commands

- Tools may run sequentially or in parallel.
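To make the sequential versus parallel distinction concrete, here is a minimal sketch using Python's `asyncio`. The two tool functions (`read_file`, `search_codebase`) are hypothetical stand-ins with artificial delays, not Cursor's actual tools; the point is only that independent calls can overlap.

```python
import asyncio
import time

# Hypothetical stand-ins for agent tools; the sleeps mimic I/O latency.
async def read_file(path: str) -> str:
    await asyncio.sleep(0.1)
    return f"contents of {path}"

async def search_codebase(query: str) -> list:
    await asyncio.sleep(0.1)
    return [f"match for {query!r}"]

async def run_sequential() -> list:
    # Each call waits for the previous one to finish.
    first = await read_file("app.py")
    second = await search_codebase("parse_config")
    return [first, second]

async def run_parallel() -> list:
    # gather() starts both calls at once and returns results in call order.
    return list(await asyncio.gather(
        read_file("app.py"),
        search_codebase("parse_config"),
    ))

def timed(coro_fn):
    start = time.perf_counter()
    result = asyncio.run(coro_fn())
    return result, time.perf_counter() - start

seq_result, seq_seconds = timed(run_sequential)
par_result, par_seconds = timed(run_parallel)
```

Both versions return the same results, but the parallel one takes roughly as long as the slowest single call rather than the sum of all calls.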

Under the Hood:

- The agent is an LLM generating tokens.

- Tool calls follow XML-like patterns with parameters.

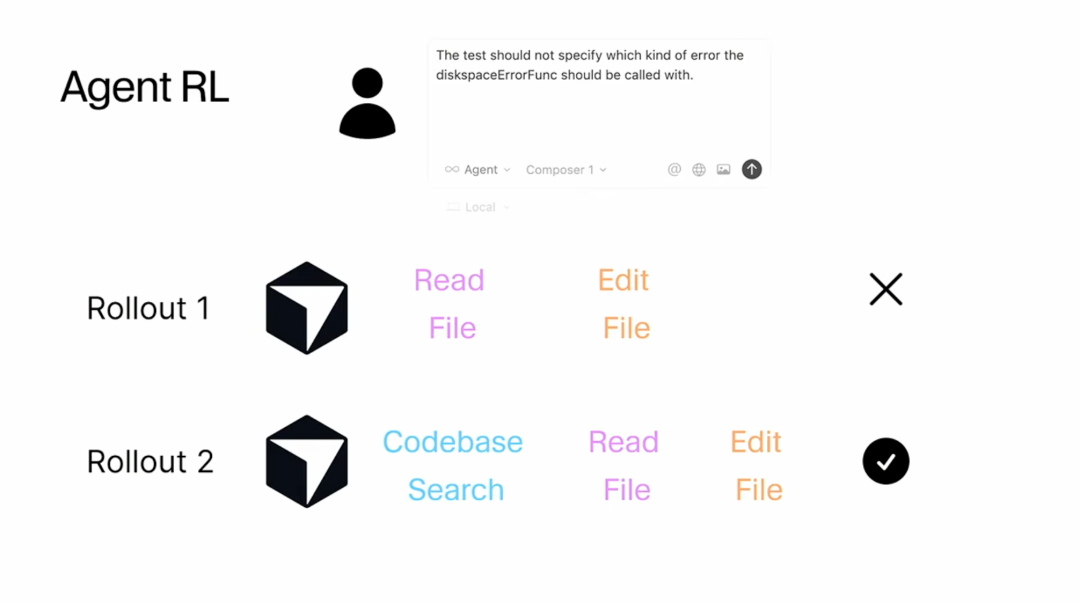

- Rollouts simulate multiple concurrent workflows to find the best action path.
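As a rough illustration of what an XML-like tool call could look like in the token stream, the sketch below extracts one from model output. The tag and attribute names (`tool_call`, `param`, `name`) are assumptions made up for this example; the talk did not spell out the exact format.

```python
import xml.etree.ElementTree as ET

# Hypothetical model output; the tag and attribute names are illustrative only.
model_output = (
    "I will look at the config loader first.\n"
    '<tool_call name="read_file">'
    '<param name="path">src/config.py</param>'
    '<param name="max_lines">200</param>'
    "</tool_call>"
)

CLOSE_TAG = "</tool_call>"

def extract_tool_call(text: str):
    """Return (tool_name, params) for the first tool call in text, or None."""
    start = text.find("<tool_call")
    end = text.find(CLOSE_TAG)
    if start == -1 or end == -1:
        return None
    element = ET.fromstring(text[start:end + len(CLOSE_TAG)])
    # Parameter values arrive as strings; the tool itself decides how to cast them.
    params = {p.get("name"): (p.text or "") for p in element.findall("param")}
    return element.get("name"), params

call = extract_tool_call(model_output)
```

Here `call` holds the tool name and a dictionary of string parameters, which a backend could then dispatch to the matching tool.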

RL Training:

- Simulates production use: real queries drive tool calls.

- Multiple rollouts from the same state test alternative action paths.

- Output quality determines parameter updates.
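The talk did not name a specific update rule, so the following is only one common way to turn "multiple rollouts, scored by quality" into a training signal: compare each rollout's score to the group average. The scores and the `group_advantages` helper are hypothetical.

```python
from statistics import mean, pstdev

def group_advantages(scores, eps=1e-8):
    """Normalize rollout scores against the group mean and spread.

    Rollouts that beat the group average get a positive advantage
    (their actions are reinforced); worse ones get a negative advantage.
    """
    mu = mean(scores)
    sigma = pstdev(scores)
    return [(s - mu) / (sigma + eps) for s in scores]

# Hypothetical quality scores for four rollouts started from the same query.
scores = [0.2, 0.9, 0.5, 0.4]
advantages = group_advantages(scores)
best = max(range(len(scores)), key=lambda i: advantages[i])
```

In a real trainer these advantages would weight the gradient of each rollout's token log-probabilities; that part is omitted here.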

---

Core RL Challenges

- Matching Training & Inference

  - Mixture-of-experts (MoE) models must run efficiently in parallel across thousands of GPUs.

  - Training and production architectures must stay identical.

- Ultra-Long Rollouts

  - Real tasks can require up to 1M tokens and hundreds of tool calls.

  - Example: a “Refactor” request may demand reading files, searching, linting, and testing.

- Consistency

  - Training through the real production agent ensures direct skill transfer.

  - This requires identical tool formats and responses in training and production.

---

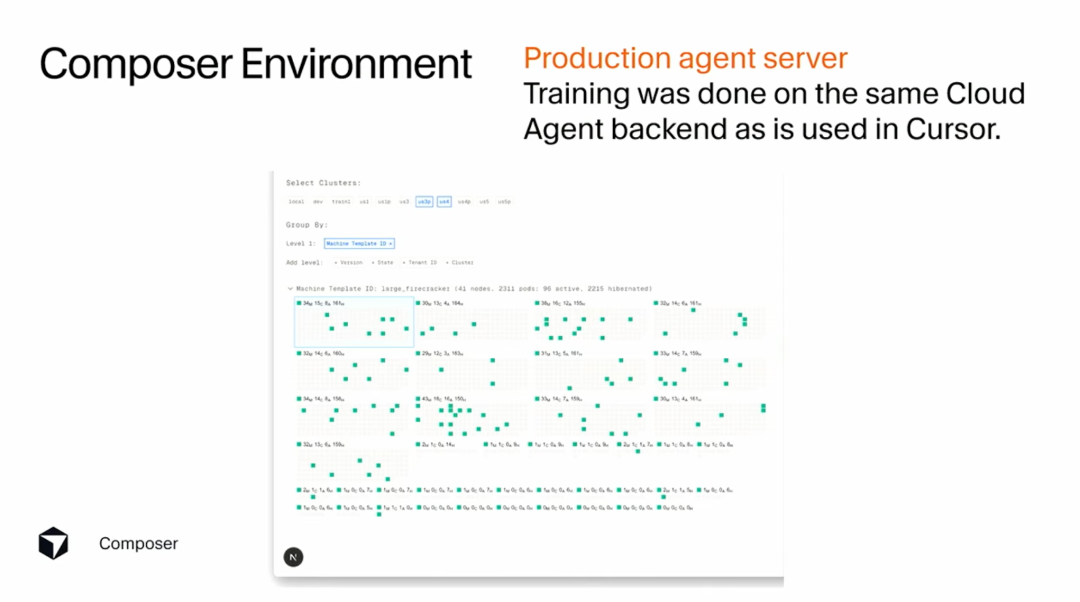

Infrastructure: The Secret Weapon

Three Server Components:

- Trainer: PyTorch ML stack at extreme scale.

- Inference Server: Ray orchestration for rollouts.

- Environment Server: microVMs to simulate code editing, terminal commands, and lint checks.

Low-Precision Training:

- Custom GPU kernels for MXFP8 microscaling.

- FP8 with scaling factors → better accuracy + 3.5× MoE layer acceleration.
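To show what "FP8 with scaling factors" means in practice, here is a pure-Python simulation of block-wise microscaling. It is a sketch of the general idea, not Cursor's GPU kernels: the block size of 32, the power-of-two shared scale, and the E4M3 constants follow my reading of the OCP MX format, and the rounding is simulated with ordinary floats.

```python
import math

BLOCK = 32        # elements that share one scale (assumed MX block size)
E4M3_MAX = 448.0  # largest finite FP8 E4M3 value
E4M3_EMAX = 8     # exponent of the top E4M3 binade (448 = 1.75 * 2**8)
E4M3_EMIN = -6    # smallest normal exponent

def round_to_e4m3(x: float) -> float:
    """Simulate FP8 E4M3 rounding: saturate, then keep 3 mantissa bits."""
    if x == 0.0:
        return 0.0
    x = max(-E4M3_MAX, min(E4M3_MAX, x))
    exponent = max(math.floor(math.log2(abs(x))), E4M3_EMIN)
    step = 2.0 ** (exponent - 3)
    return round(x / step) * step

def quantize_block(block):
    """Return (shared_exponent, fp8_elements) for one block."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 0, [0.0] * len(block)
    # Pick a power-of-two scale so the largest value lands in the top binade.
    shared_exp = math.floor(math.log2(amax)) - E4M3_EMAX
    scale = 2.0 ** shared_exp
    return shared_exp, [round_to_e4m3(v / scale) for v in block]

def mx_roundtrip(values):
    """Quantize block by block, then dequantize; returns (exponents, values)."""
    exps, recon = [], []
    for i in range(0, len(values), BLOCK):
        shared_exp, elems = quantize_block(values[i:i + BLOCK])
        exps.append(shared_exp)
        recon.extend(e * 2.0 ** shared_exp for e in elems)
    return exps, recon

# One block of tiny values and one block of large values.
values = [0.001 * (i + 1) for i in range(32)] + [100.0 * (i + 1) for i in range(32)]
exps, recon = mx_roundtrip(values)
max_rel_err = max(abs(r - v) / abs(v) for r, v in zip(recon, values))
```

Because each block gets its own scale, the tiny values and the large values both keep a few percent of relative accuracy, which a single tensor-wide scale could not offer.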

Rollout Heterogeneity:

- Rollouts vary widely in length, so a few slow ones (stragglers) can hold up the rest; Ray load balancing across processes addresses this.
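A small simulation shows why load balancing helps with stragglers. This sketch does not use Ray's API; it only compares a fixed up-front assignment with handing each rollout to whichever worker frees up first. The durations are made-up numbers.

```python
import heapq

def static_makespan(durations, workers):
    """Round-robin assignment decided before any rollout starts."""
    loads = [0.0] * workers
    for i, d in enumerate(durations):
        loads[i % workers] += d
    return max(loads)

def dynamic_makespan(durations, workers):
    """Each rollout goes to the worker that becomes free earliest."""
    free_at = [0.0] * workers
    heapq.heapify(free_at)
    for d in durations:
        start = heapq.heappop(free_at)
        heapq.heappush(free_at, start + d)
    return max(free_at)

# Hypothetical rollout durations: two long rollouts among many short ones.
durations = [50, 1, 1, 1, 40, 1, 1, 1]
static_time = static_makespan(durations, workers=4)
dynamic_time = dynamic_makespan(durations, workers=4)
```

With the static plan both long rollouts land on the same worker; with dynamic assignment the second long rollout goes to a worker that has already finished its short one.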

---

Tight Product–Training Integration

Cursor's cloud agents, which keep working while you are offline or even on a subway ride, run on the same infrastructure as RL training.

Benefits:

- The model learns actual product tools, e.g., semantic search via embeddings indexing your files.

- Training produces direct, production-ready skills.
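For readers unfamiliar with embedding-based semantic search, here is a toy version: files and the query are represented as vectors, and results are ranked by cosine similarity. The file names and three-dimensional vectors are invented for illustration; a real index would use a learned embedding model with far more dimensions.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical index: file path -> embedding vector.
index = {
    "auth/login.py": [0.9, 0.1, 0.0],
    "billing/invoice.py": [0.1, 0.9, 0.2],
    "utils/strings.py": [0.0, 0.2, 0.9],
}

def search(query_vec, index, top_k=2):
    """Return the top_k file paths most similar to the query vector."""
    ranked = sorted(index, key=lambda p: cosine(query_vec, index[p]), reverse=True)
    return ranked[:top_k]

# Hypothetical embedding of a query such as "where do users sign in?"
query = [0.8, 0.2, 0.1]
results = search(query, index)
```

The agent would call a tool like this with a natural-language query and receive the most relevant files, without needing exact keyword matches.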

---

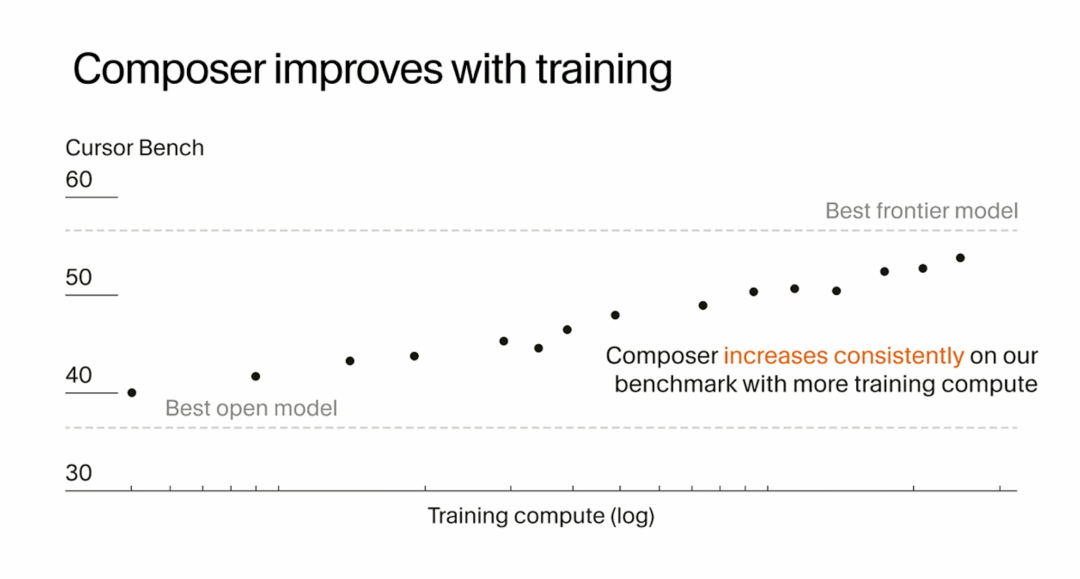

Proof That RL Works

Early Findings:

- Steady performance gains with compute investment.

- Learned parallel tool usage → faster user experiences.

- The model shifted toward reading and searching more before making deliberate edits.

User feedback:

- Speed + intelligence unlock a new programming style.

- Internal devs now use Composer daily.

---

Lessons: Building Specialized AI Models

Three takeaways:

- Specialization beats generalization for real tasks.

- Use your AI to build your AI — accelerates development.

- Infrastructure is essential — product and training must be tightly coupled.

---

Conclusion: User-Centric Innovation

Cursor Composer’s results suggest:

- Solving a real user pain point matters more than chasing trends.

- RL + infrastructure + product integration can deliver fast and smart AI assistants.
