L4 Roadmap Unveiled: Li Auto’s Autonomous Driving Team Introduces New Paradigm at Global AI Summit

A Technological Breakthrough in AI and Autonomous Driving

---

> AI is entering its “second half” — will advanced assisted driving lead the next wave of evolution?

---

The Shift from Human Data to Experiential Learning

Debate over whether large AI models are hitting a bottleneck has intensified recently.

Rich Sutton, widely regarded as the father of reinforcement learning, argued in "The Bitter Lesson" that artificial intelligence is moving away from dependence on human-generated data toward learning from experience.

Former OpenAI researcher Yao Shunyu stated bluntly that AI is entering its “second half.” He believes:

- New AI evaluation frameworks and task setups must be developed for real-world tasks.

- To surpass human-level intelligence, AI must move beyond imitation and embrace new, scalable data sources.

---

Autonomy Meets the AI Paradigm Shift

At ICCV 2025, one of the world's leading academic conferences on computer vision, Zhan Kun, Senior Autonomous Driving Algorithm Expert at Li Auto, delivered a talk titled "World Model: Evolving from Data Closed-loop to Training Closed-loop."

Li Auto shared:

- A full-stack approach from data to training

- The world’s first production-level architecture integrating world models and reinforcement learning

From rule-based algorithms to large models, Li Auto has both witnessed and shaped the industry's evolution.

The company's presence at ICCV marks an inflection point: AI's "second half" is full of both challenges and breakthroughs.

---

From Data Closed-loop to VLA Training Closed-loop

Li Auto’s LiAD Technical Path

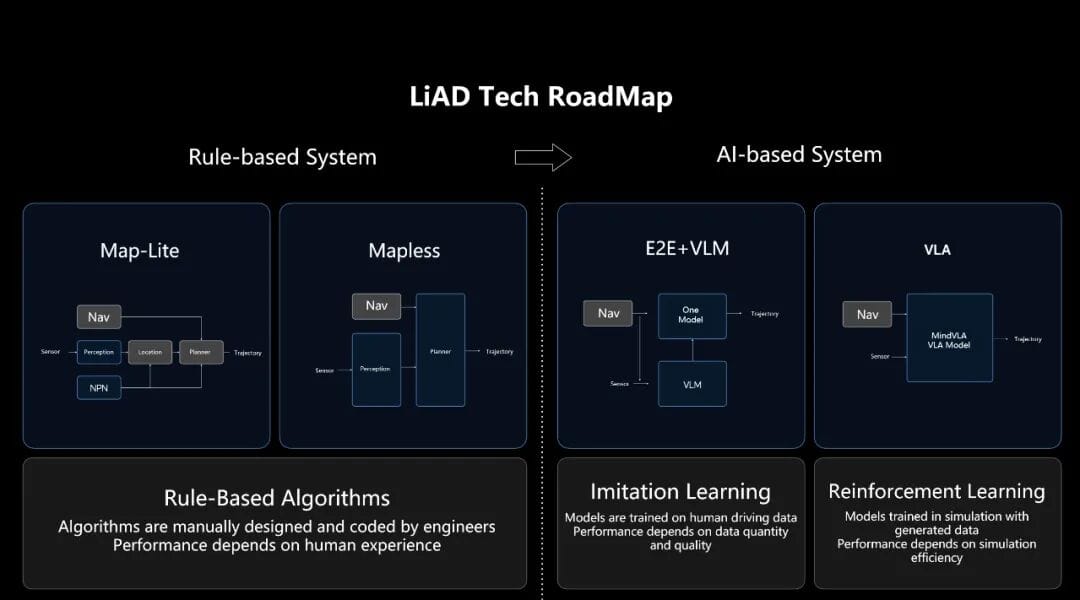

At ICCV, Li Auto presented its advanced assisted driving roadmap, built around VLA (Vision-Language-Action) models that enable interactive autonomous driving.

Evolution of LiAD Technology:

- Rule-based algorithms → initial automation

- End-to-End (E2E) learning → direct mapping of sensor signals to driving trajectories

- Hybrid E2E + VLM → dual-system architecture (pioneered by Li Auto, now an industry trend)

Impact:

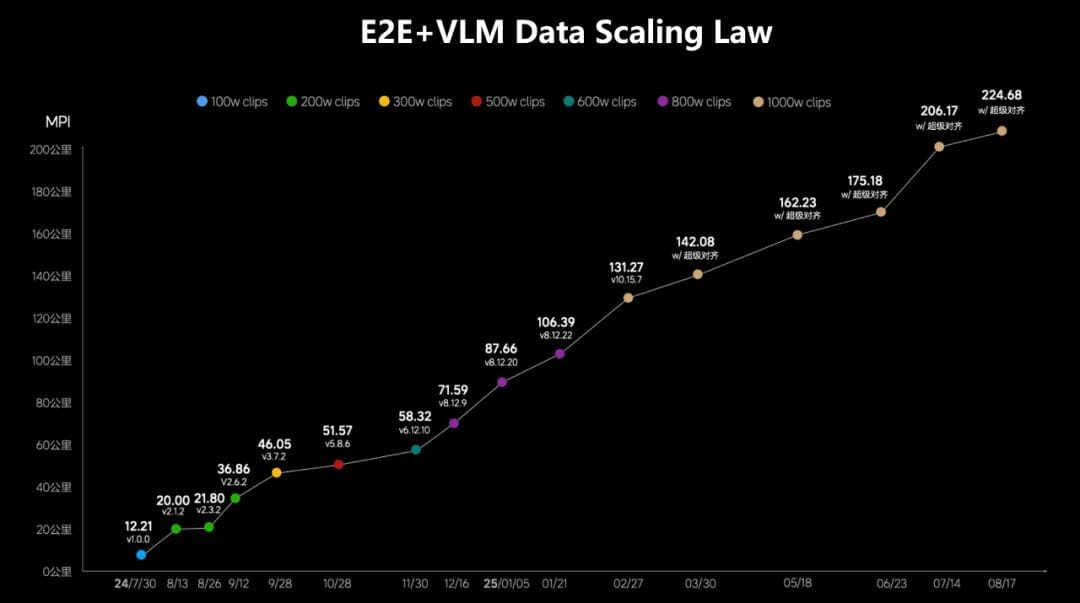

MPI (Miles per Human Intervention) rose sharply within 12 months of Li Auto launching E2E assisted driving.
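MPI is a simple ratio of distance driven to human takeovers. The minimal Python sketch below shows how the metric can be aggregated over fleet logs. It assumes each log records miles driven and an intervention count. The `DriveLog` type and its fields are hypothetical and do not reflect Li Auto's actual data format.

```python
from dataclasses import dataclass


@dataclass
class DriveLog:
    """One hypothetical drive session: distance covered and human takeovers logged."""
    miles: float
    interventions: int


def miles_per_intervention(logs: list[DriveLog]) -> float:
    """Aggregate MPI: total miles divided by total human interventions.

    Returns infinity when no intervention was recorded. This is an assumed
    convention; the source does not say how that case is reported.
    """
    total_miles = sum(log.miles for log in logs)
    total_interventions = sum(log.interventions for log in logs)
    if total_interventions == 0:
        return float("inf")
    return total_miles / total_interventions


if __name__ == "__main__":
    fleet = [DriveLog(miles=120.0, interventions=2), DriveLog(miles=80.0, interventions=0)]
    print(miles_per_intervention(fleet))  # 200 miles / 2 interventions = 100.0
```

Pooling miles and interventions across the fleet weights long drives more heavily than averaging per-drive ratios would. That pooling is a design choice of this sketch.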

Data and MPI Correlation:

However, the gains from more data have limits:

- Beyond 10 million clips of training data, imitation learning saturates.

- Many critical scenarios (corner cases) have insufficient real-world data.

---

Moving Toward the Training Closed-loop

Challenge: Rare scenarios demand targeted improvement for L4-level autonomy.

Li Auto’s Solution:

- Shift to Training Closed-loop → integrates data collection + simulated environments

- Simulation aligns with specific training targets, iterating until goals are met

- Focus on objective achievement, not just data accumulation
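The training closed loop can be pictured as: generate targeted scenarios, evaluate, train on the failures, and repeat until the objective is met. The toy Python sketch below illustrates only that control flow, under heavy assumptions. The simulator, the per-scenario "competence" policy, the scenario names and the update rule are all hypothetical stand-ins, not Li Auto's pipeline.

```python
import random

# Hypothetical scenario types standing in for rare, safety-critical cases.
SCENARIO_TYPES = ["cut_in", "jaywalker", "unprotected_left"]


def simulate(policy: dict[str, float],
             scenarios: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Toy simulator: a (type, difficulty) scenario passes if the policy's
    competence for that type is at least the difficulty. Returns the failures."""
    return [(kind, diff) for kind, diff in scenarios if policy.get(kind, 0.0) < diff]


def train_on(policy: dict[str, float], failures: list[tuple[str, float]],
             lr: float = 0.1) -> dict[str, float]:
    """Toy 'training' step: raise competence for every scenario type that failed."""
    updated = dict(policy)
    for kind in {kind for kind, _ in failures}:
        updated[kind] = updated.get(kind, 0.0) + lr
    return updated


def training_closed_loop(policy: dict[str, float], target_pass_rate: float = 0.95,
                         batch_size: int = 200, max_rounds: int = 50,
                         seed: int = 0) -> tuple[int, float, dict[str, float]]:
    rng = random.Random(seed)
    pass_rate = 0.0
    for round_idx in range(1, max_rounds + 1):
        # 1. Generate simulated scenarios aimed at the current training target.
        scenarios = [(rng.choice(SCENARIO_TYPES), rng.random()) for _ in range(batch_size)]
        # 2. Evaluate the current policy against them.
        failures = simulate(policy, scenarios)
        pass_rate = 1.0 - len(failures) / batch_size
        # 3. Stop once the objective is met, not when the data runs out.
        if pass_rate >= target_pass_rate:
            return round_idx, pass_rate, policy
        # 4. Otherwise train on the failures and go around the loop again.
        policy = train_on(policy, failures)
    return max_rounds, pass_rate, policy


if __name__ == "__main__":
    rounds, rate, _ = training_closed_loop({})
    print(f"target met after {rounds} rounds, pass rate {rate:.2f}")
```

The stopping condition is tied to the evaluation target rather than to the amount of data collected. That is the distinction the bullets above draw between the two loops.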

---

Broader AI Implications

This shift mirrors a broader AI trend toward continuous feedback loops, simulation, and reinforcement learning.

---

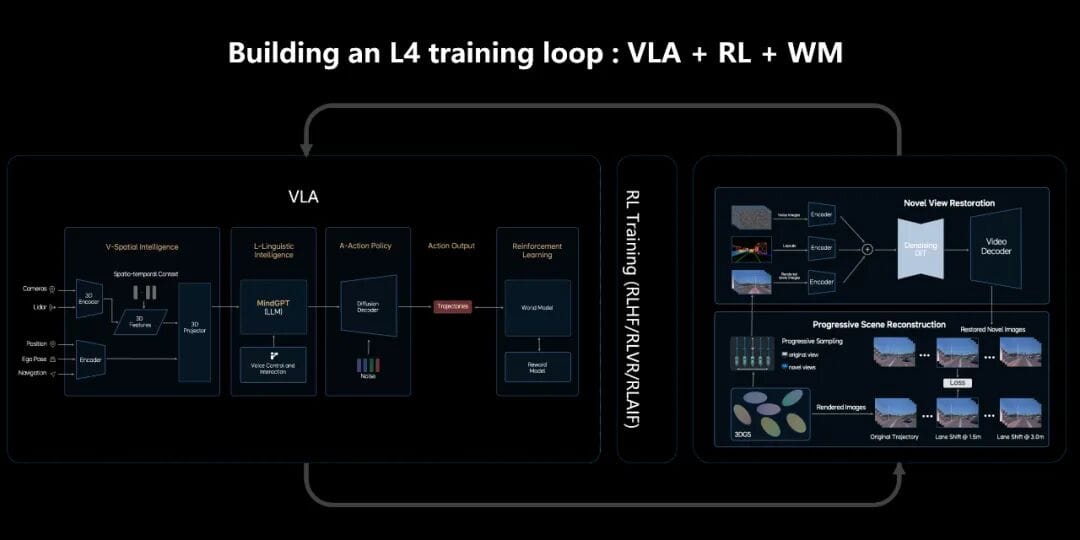

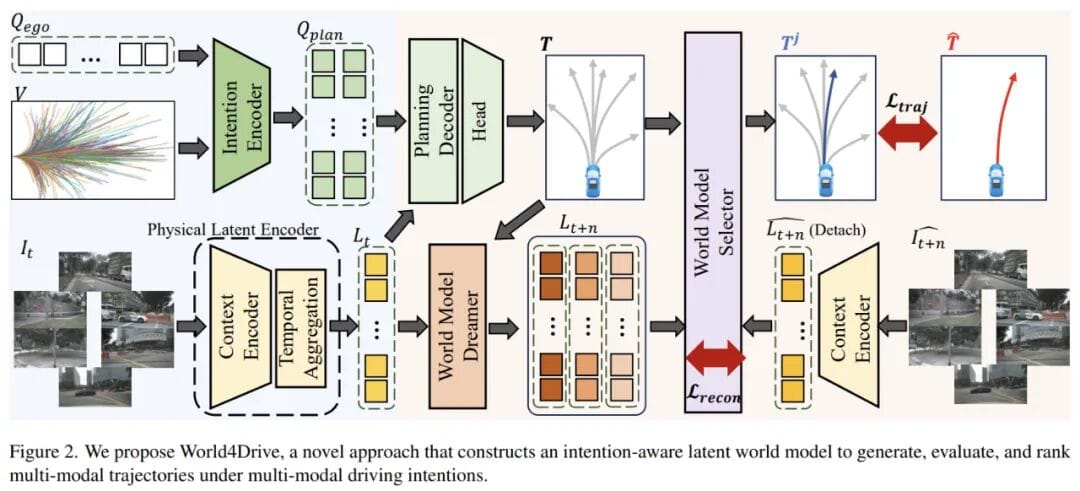

Li Auto’s World Model + VLA Strategy

- On-vehicle VLA model → prior knowledge + real driving skills

- Cloud-based world model → training with:

  - Real-world data

  - Synthetic data

  - Data co-explored with the model

Reinforcement learning methods: RLHF (from human feedback), RLVR (with verifiable rewards), RLAIF (from AI feedback)

→ Continuous improvement via simulation, feedback, and iteration.
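To make the RL side concrete, below is a minimal REINFORCE sketch in the spirit of RLVR. Here the reward comes from a rule that can be checked, not from a learned preference model. Several parts are illustrative assumptions:

- the hand-written `world_model_step` stands in for a learned world model;
- the two situations, two actions and the reward values are made up.

RLHF or RLAIF would replace `verifiable_reward` with a reward model learned from human or AI feedback.

```python
import math
import random

ACTIONS = ["proceed", "yield"]
SITUATIONS = ["clear_road", "pedestrian_ahead"]


def world_model_step(situation: str, action: str) -> dict[str, bool]:
    """Hand-written stand-in for a learned world model: predicts one action's outcome."""
    return {
        "collided": situation == "pedestrian_ahead" and action == "proceed",
        "progressed": action == "proceed",
    }


def verifiable_reward(outcome: dict[str, bool]) -> float:
    """Rule-checkable reward: a collision is heavily penalized, progress is rewarded."""
    if outcome["collided"]:
        return -1.0
    return 1.0 if outcome["progressed"] else 0.2


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(episodes: int = 2000, lr: float = 0.1, seed: int = 0) -> dict[str, list[float]]:
    """REINFORCE on per-situation action logits with a running-average baseline."""
    rng = random.Random(seed)
    logits = {s: [0.0] * len(ACTIONS) for s in SITUATIONS}
    baseline = {s: 0.0 for s in SITUATIONS}
    for _ in range(episodes):
        s = rng.choice(SITUATIONS)
        probs = softmax(logits[s])
        a = rng.choices(range(len(ACTIONS)), weights=probs)[0]
        r = verifiable_reward(world_model_step(s, ACTIONS[a]))
        advantage = r - baseline[s]
        baseline[s] += 0.05 * (r - baseline[s])  # baseline reduces gradient variance
        # Gradient of log softmax(logits)[a] with respect to logit k is 1[k == a] - probs[k].
        for k in range(len(ACTIONS)):
            logits[s][k] += lr * advantage * ((1.0 if k == a else 0.0) - probs[k])
    return logits


def greedy_action(logits: dict[str, list[float]], situation: str) -> str:
    scores = logits[situation]
    return ACTIONS[max(range(len(ACTIONS)), key=lambda k: scores[k])]


if __name__ == "__main__":
    learned = train()
    for s in SITUATIONS:
        print(s, "->", greedy_action(learned, s))
```

The policy never sees a labeled "correct" action. It learns to yield for pedestrians and proceed on clear roads purely from rewards it collects by acting inside the (stub) world model.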

---

Core Outputs:

- Regional-level simulation & evaluation – realistic, long-horizon tests

- Synthetic data generation – diversity in rare scenarios

- Reinforcement learning world engine – free exploration with feedback

---

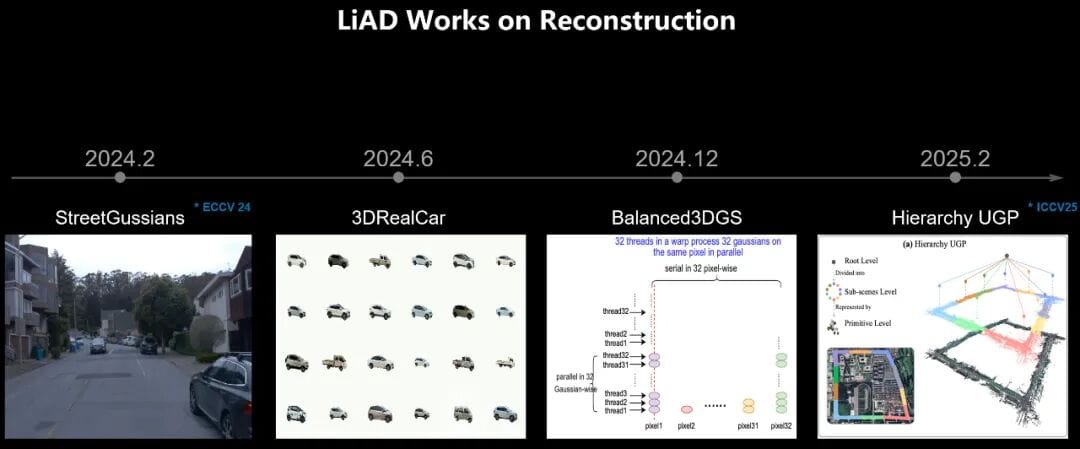

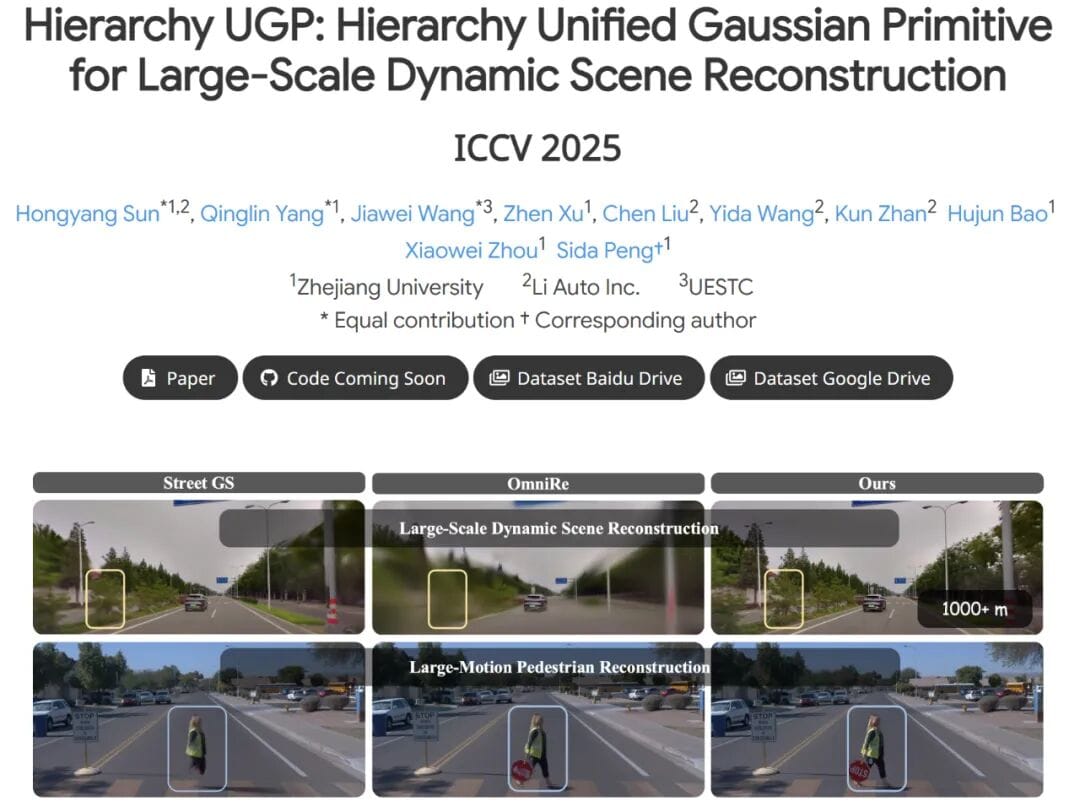

Environment Reconstruction

Since 2023, Li Auto has explored 3D Gaussian Splatting (3DGS) reconstruction for autonomous driving.

New Direction: Reconstruction + Generation

→ Combines the stability of reconstruction with the generalization ability of generative models.

Research Highlight:

"Hierarchy UGP: Hierarchy Unified Gaussian Primitive for Large-Scale Dynamic Scene Reconstruction" was accepted at ICCV.
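For readers new to 3DGS: a scene is stored as many 3D Gaussians. These are projected onto the image and alpha-blended front to back, so each pixel's color is C = Σ c_i · α_i · Π_{j<i} (1 − α_j).

The sketch below shows only that standard compositing step, for Gaussians already projected to 2D. It is not the Hierarchy UGP method. The `Splat2D` fields and the cutoff thresholds are simplifying assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat2D:
    """A Gaussian already projected to screen space (hypothetical minimal fields)."""
    mean: tuple[float, float]          # pixel-space center
    cov: tuple[float, float, float]    # (a, b, c) for the 2x2 covariance [[a, b], [b, c]]
    opacity: float                     # learned opacity in [0, 1]
    color: tuple[float, float, float]  # RGB in [0, 1]
    depth: float                       # view-space depth, used for sorting


def gaussian_alpha(splat: Splat2D, px: float, py: float) -> float:
    """Opacity of one splat at a pixel: opacity * exp(-0.5 * Mahalanobis distance^2)."""
    a, b, c = splat.cov
    det = a * c - b * b
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    dx, dy = px - splat.mean[0], py - splat.mean[1]
    # Inverse of [[a, b], [b, c]] is [[c, -b], [-b, a]] / det.
    mahalanobis_sq = (c * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return splat.opacity * math.exp(-0.5 * mahalanobis_sq)


def composite_pixel(splats: list[Splat2D], px: float, py: float,
                    background: tuple[float, float, float] = (0.0, 0.0, 0.0)):
    """Front-to-back alpha blending: C = sum_i c_i * a_i * prod_{j<i} (1 - a_j)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    for s in sorted(splats, key=lambda s: s.depth):
        alpha = min(0.99, gaussian_alpha(s, px, py))
        if alpha < 1.0 / 255.0:  # skip visually negligible contributions
            continue
        for ch in range(3):
            color[ch] += transmittance * alpha * s.color[ch]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque; stop early
            break
    return tuple(color[ch] + transmittance * background[ch] for ch in range(3))
```

For example, a half-opaque red splat in front of a half-opaque green one at the same pixel yields red 0.5 and green 0.25, because the green light is attenuated by the red splat in front of it.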

---

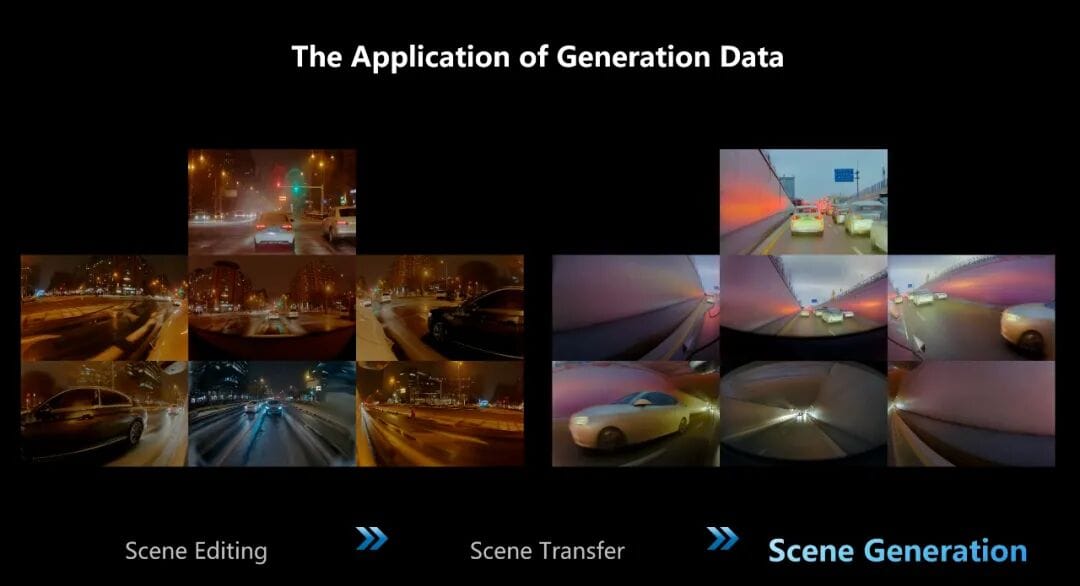

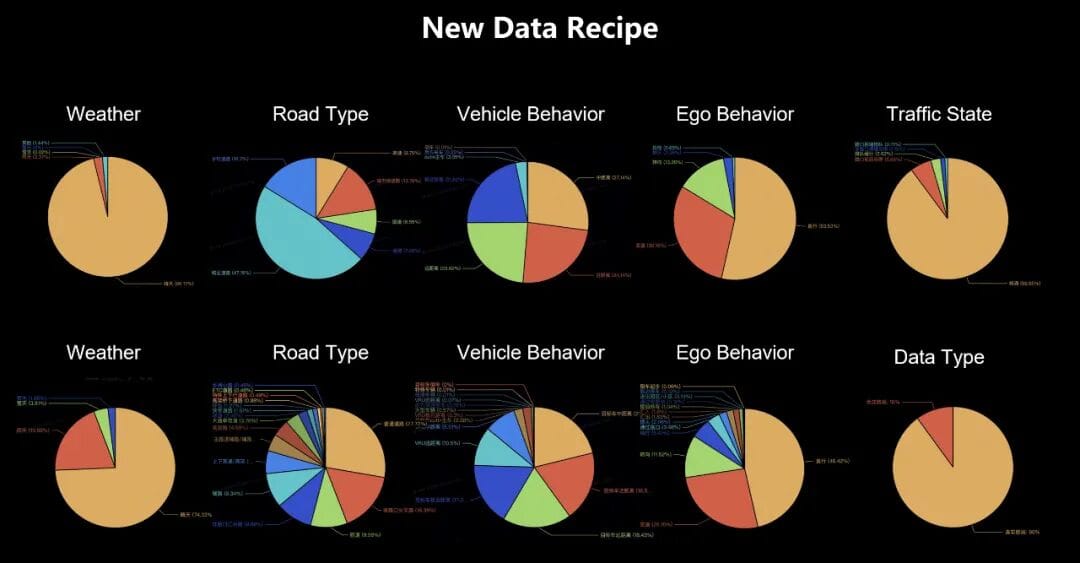

Synthetic Data Applications

Li Auto generates full videos and point clouds from prompts, enabling:

- Rare critical scenario simulation

- Region-specific driving environment adaptation

Result: Balanced datasets → Increased stability & generalization in real-world driving.
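One simple way to turn "balanced datasets" into a number is to top up each rare scenario type with synthetic clips. Each type is raised until it reaches a floor set relative to the most common type. The sketch below assumes that policy and a single `min_ratio` knob, both hypothetical. The source does not say how Li Auto sets its synthetic-data mix.

```python
import math


def synthetic_quota(real_counts: dict[str, int], min_ratio: float) -> dict[str, int]:
    """Number of synthetic clips to generate per scenario type.

    Each type is topped up until it has at least `min_ratio` times as many clips
    as the most common type; types already above that floor get zero.
    """
    if not real_counts:
        return {}
    if not 0.0 < min_ratio <= 1.0:
        raise ValueError("min_ratio must be in (0, 1]")
    floor = math.ceil(min_ratio * max(real_counts.values()))
    return {kind: max(0, floor - n) for kind, n in real_counts.items()}


if __name__ == "__main__":
    # Hypothetical clip counts for illustration only.
    counts = {"lane_keep": 9000, "cut_in": 800, "wrong_way_vehicle": 40}
    print(synthetic_quota(counts, 0.25))
```

With these made-up counts and `min_ratio=0.25`, every scenario type is raised to at least 2,250 clips. The common case receives no synthetic data at all.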

---

From Research Achievements to Future Directions

Since 2021: 32 papers published, expanding into VLM/VLA/world model research.

ICCV 2025:

Five accepted papers covering:

- 3D datasets

- End-to-End frameworks

- 3D reconstruction

- Video simulation

---

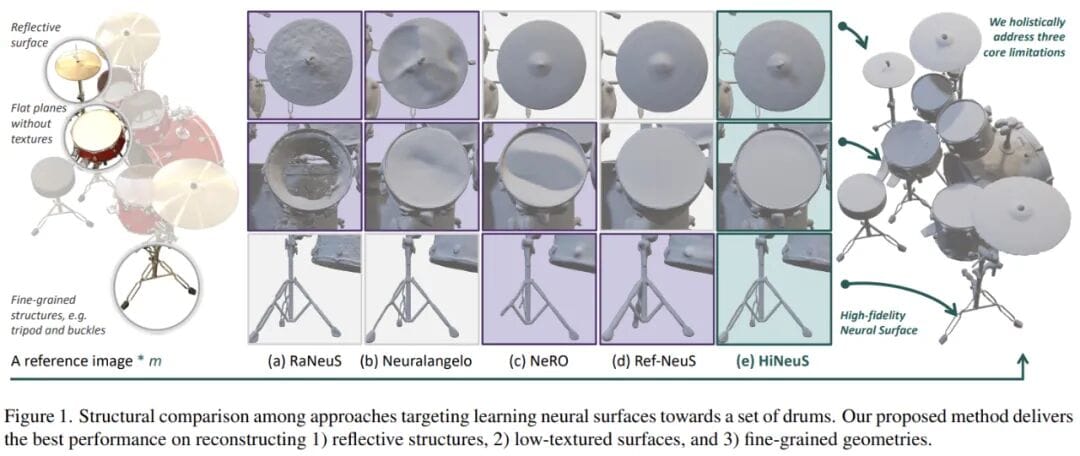

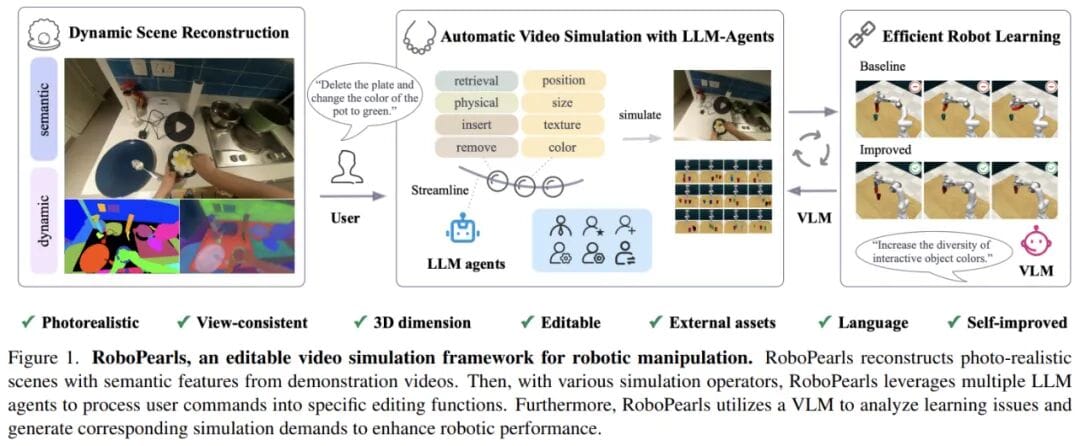

Example Papers

- 3DRealCar – first large-scale automotive RGB-D dataset

  - 2,500 vehicles, 3 lighting conditions, precise 3D scans.

- World4Drive – intention-aware world model for autonomous planning.

- HiNeuS – neural surface reconstruction for low-texture & reflective scenes.

- RoboPearls – editable video simulation for robotics.

---

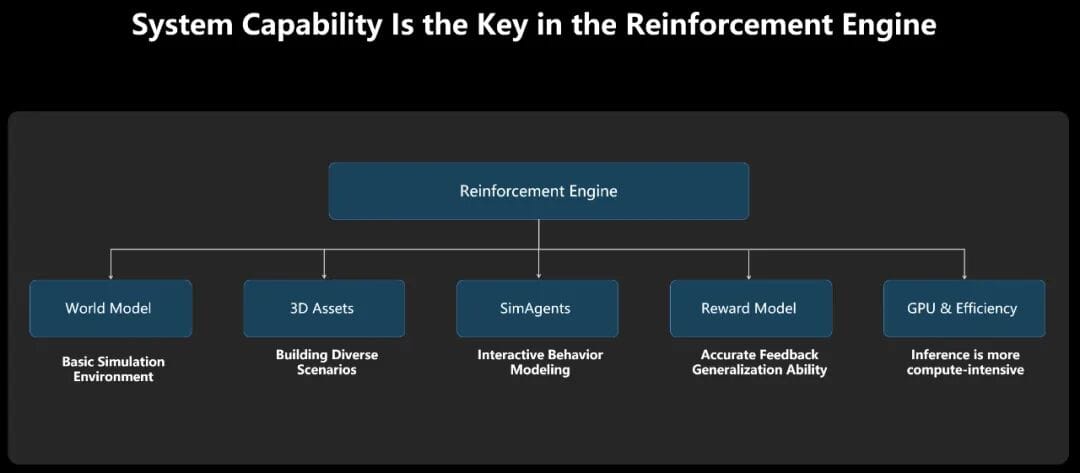

Reinforcement Learning Engine Challenges

Key Factors for Success:

- World models

- 3D assets

- Simulation agents

- Reward models

- Performance optimization

The complexity of interactive simulation agents may exceed that of single-vehicle L4 driving.

Li Auto's upcoming MAD research will address these challenges.
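Reward design is one of the factors listed above. Below is a minimal sketch of a hand-weighted driving reward. It assumes per-step outcome signals for collision, gap to other agents, jerk and route progress. Every field name and weight is hypothetical, chosen only to show how safety terms can dominate comfort and progress.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepOutcome:
    collided: bool
    min_gap_m: float   # closest distance to any other agent during the step, meters
    jerk_mps3: float   # magnitude of longitudinal jerk, m/s^3
    progress_m: float  # distance advanced along the planned route, meters


@dataclass(frozen=True)
class RewardWeights:
    collision: float = -100.0
    near_miss: float = -5.0
    comfort: float = -0.1
    progress: float = 1.0
    safe_gap_m: float = 2.0


def driving_reward(o: StepOutcome, w: RewardWeights = RewardWeights()) -> float:
    """Weighted sum of progress, comfort, and near-miss terms; a collision overrides all."""
    if o.collided:
        return w.collision
    r = w.progress * o.progress_m + w.comfort * o.jerk_mps3
    if o.min_gap_m < w.safe_gap_m:
        # Scale the near-miss penalty by how far inside the safety gap the vehicle got.
        r += w.near_miss * (w.safe_gap_m - o.min_gap_m) / w.safe_gap_m
    return r
```

Returning the collision penalty outright, rather than adding it to the other terms, keeps a fast but unsafe step from ever scoring well. The frozen weights object makes the shared default argument safe.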

---

Driving AI Industry Evolution

- Goal (2023): Become an AI enterprise

- Nearly 50% of R&D spending on AI

- Four AI divisions:

  - Assisted driving, Li Xiang Tong Xue assistant, intelligent manufacturing, intelligent commerce.

- 2025 deliveries: the Li Auto i8 ships with the VLA driver large model.

Li Auto’s open-source efforts have attracted 3,200+ developers, spreading the VLA paradigm across the industry.

---


© THE END

Submit Contributions / Request Coverage: liyazhou@jiqizhixin.com
