RL is the new Fine-Tuning

LoRA, RL, and the Future of AI Model Optimization

Interview with Kyle Corbitt, Founder of OpenPipe (Acquired by CoreWeave)

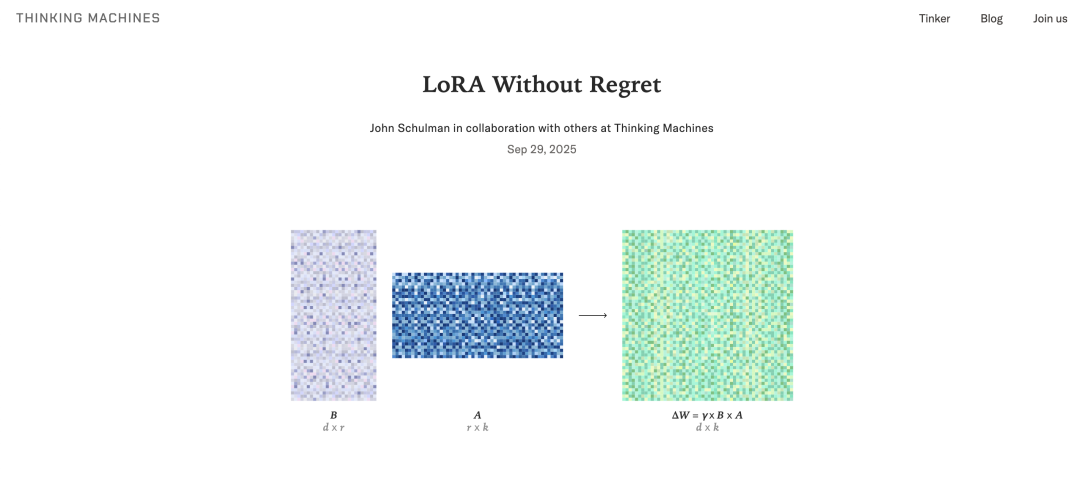

In September 2025, Thinking Machines published “LoRA Without Regret,” a long-form article presenting a set of SFT (Supervised Fine-Tuning) and RL (Reinforcement Learning) experiments. Its conclusion: under certain conditions, LoRA can match the performance of full-parameter fine-tuning while using far fewer computational resources.

The article rekindled interest in LoRA as more than a “budget alternative” to full fine-tuning. Since OpenAI’s o1 put RL at the center of the reasoning-model narrative and DeepSeek’s R1 disclosed how RL was used in its training, however, industry attention has shifted sharply toward RL. OpenAI researcher Yao Shunyu sees this shift as marking AI’s “second half,” because of RL’s stronger generalization abilities.

Below is an excerpted, translated interview from Latent Space with Kyle Corbitt, founder of OpenPipe — a company that began with LoRA fine-tuning tools and later expanded into RL through products like Ruler (a general-purpose reward function). On September 3, 2025, CoreWeave acquired OpenPipe.

---

Key Takeaways

- Parallel LoRA: Multiple LoRA adapters can run in parallel on the same GPU, which makes per-token pricing possible instead of billing for GPU uptime.

- Limited Need for Fine-Tuning: Fine-tuning is only essential when small-parameter models must be used (~10% of cases); otherwise the ROI is low.

- RL as Standard Practice: In future agent deployments, RL will be part of the lifecycle — either before deployment or for ongoing optimization.

- Environment Bottleneck: RL’s biggest obstacle is constructing robust training environments; “World Models” may help solve this problem.

---

1️⃣ LoRA Loses Popularity, Then Returns

The OpenPipe Story

OpenPipe was founded in March 2023, right after GPT‑4’s release. High closed‑model costs and low open‑model performance created a gap: smaller companies needed affordable distillation of GPT‑4 capabilities into compact models.

Core differentiators:

- SDK Compatibility: Works with OpenAI’s SDK, so capturing production data is simple.

- Data-Driven Distillation: Training data comes from real production requests.

- Seamless API Switching: After distillation, customers move to the smaller model with minimal code changes.
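
To make the data-capture and switching points concrete, here is a minimal Python sketch. It is not OpenPipe’s SDK: `capture_completion` and `ModelFn` are hypothetical names, and the sketch assumes every production call can be routed through one wrapper that logs the request and response as a chat-style `{"messages": [...]}` JSONL row (the layout OpenAI’s chat fine-tuning API accepts).

```python
import io
import json
from typing import Callable, Dict, List, TextIO

Message = Dict[str, str]
ModelFn = Callable[[List[Message]], str]  # hypothetical: any function that answers a chat


def capture_completion(call_model: ModelFn, messages: List[Message], log: TextIO) -> str:
    """Serve a request and append the request/response pair to a distillation log.

    `call_model` stands in for the real production client call (hypothetical).
    Each logged line is one chat-style {"messages": [...]} training example.
    """
    reply = call_model(messages)
    row = {"messages": list(messages) + [{"role": "assistant", "content": reply}]}
    log.write(json.dumps(row) + "\n")
    return reply


# Usage with a toy "teacher" standing in for a large hosted model.
log = io.StringIO()
teacher: ModelFn = lambda msgs: "Paris"
capture_completion(teacher, [{"role": "user", "content": "Capital of France?"}], log)
```

Because the call site depends only on the callable, switching from the teacher to the distilled student is a one-argument change, which is the kind of minimal code change the list above describes.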

The release of Mixtral (Apache 2.0 licensed) was a turning point — offering a powerful open-source base for fine-tuning startups.

LoRA Advantages

LoRA freezes the pre-trained weights and trains small low-rank matrices added alongside them, reducing memory use and training time while allowing multiple adapters to share one GPU instance. Demand for fine-tuning itself was low, however, so adoption stayed modest.
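
The mechanism can be sketched in plain Python. The sketch follows the standard LoRA formulation (frozen weight `W`; trainable `A` of shape r × d_in and `B` of shape d_out × r; `B` initialized to zero; update scaled by alpha / r). The class names and the per-tenant adapter dictionary are hypothetical, and a real serving stack would batch requests for different adapters on the GPU rather than loop in Python.

```python
import random
from typing import Dict, List, Optional

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product: each row of m dotted with v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random, std: float = 0.02) -> Matrix:
    return [[rng.gauss(0.0, std) for _ in range(cols)] for _ in range(rows)]


class LoRAAdapter:
    """Trainable low-rank update: delta_W = (alpha / r) * B @ A."""

    def __init__(self, d_in: int, d_out: int, r: int, alpha: float, rng: random.Random):
        self.A = random_matrix(r, d_in, rng)        # r x d_in, small random init
        self.B = [[0.0] * r for _ in range(d_out)]  # d_out x r, zero init: no change at start
        self.scale = alpha / r

    def delta(self, x: Vector) -> Vector:
        # Compute B (A x) directly instead of materializing the d_out x d_in product B A.
        return [self.scale * v for v in matvec(self.B, matvec(self.A, x))]


class MultiLoRALinear:
    """One frozen base weight W shared by many adapters, chosen per request."""

    def __init__(self, W: Matrix):
        self.W = W  # frozen: never updated during fine-tuning
        self.adapters: Dict[str, LoRAAdapter] = {}

    def forward(self, x: Vector, adapter: Optional[str] = None) -> Vector:
        base = matvec(self.W, x)
        if adapter is None:
            return base
        return [b + d for b, d in zip(base, self.adapters[adapter].delta(x))]


def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters per adapted matrix: A has r*d_in, B has d_out*r."""
    return r * (d_in + d_out)


# Usage: one shared base layer, one hypothetical tenant adapter.
rng = random.Random(0)
layer = MultiLoRALinear(random_matrix(3, 4, rng))
layer.adapters["tenant_a"] = LoRAAdapter(d_in=4, d_out=3, r=2, alpha=4.0, rng=rng)
```

For a 4096 × 4096 projection at rank 16, `lora_param_count(4096, 4096, 16)` gives 131,072 trainable parameters versus 16,777,216 for the full matrix, 128 times fewer. Because every adapter reuses the same frozen `W`, serving another customer adds only that customer’s small `A` and `B`.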

Thinking Machines’ research and endorsements have recently improved LoRA’s reputation.

---

2️⃣ The Shift from Fine-Tuning to RL

When to fine-tune:

- A small-parameter model is required (e.g., for real-time applications).

- Compliance-bound deployments in private cloud environments.

Fine-tuning cost components:

- Initial Setup: Several weeks to months of engineering work.

- Ongoing Overhead: Every prompt or context change requires retraining, which slows iteration.

- Monetary Cost: Typically $5–$300 per run, minor compared with engineering time.
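
A back-of-envelope sketch shows why compute is the minor line item. Every input below is a hypothetical assumption chosen for illustration, except that the per-run cost uses the top of the $5–$300 range quoted above.

```python
from dataclasses import dataclass


@dataclass
class FineTuneCostEstimate:
    """Back-of-envelope cost of a fine-tuning project (all inputs are assumptions)."""

    setup_hours: float        # initial engineering: data collection, evals, pipeline
    hours_per_retrain: float  # engineering time consumed by each retraining cycle
    retrains: int             # runs triggered by prompt or context changes
    cost_per_run: float       # compute dollars per training run
    hourly_rate: float        # fully loaded engineering cost per hour

    def compute_cost(self) -> float:
        return self.retrains * self.cost_per_run

    def engineering_cost(self) -> float:
        hours = self.setup_hours + self.retrains * self.hours_per_retrain
        return hours * self.hourly_rate

    def compute_share(self) -> float:
        """Fraction of total spend that goes to GPU compute."""
        total = self.compute_cost() + self.engineering_cost()
        return self.compute_cost() / total if total else 0.0


# Hypothetical project: 4 weeks (160 h) of setup, 10 retrains at $300 each.
example = FineTuneCostEstimate(
    setup_hours=160, hours_per_retrain=8, retrains=10, cost_per_run=300, hourly_rate=100
)
print(f"{example.compute_share():.0%}")  # 11%
```

Under these assumptions compute comes to $3,000 against $24,000 of engineering time, about 11% of the total.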

RL Turning Point

OpenPipe’s pivot came after OpenAI’s o1-preview release and the leaks about the project code-named “Strawberry.” Early experiments showed RL’s value for specialized tasks and agents. By January 2025, OpenPipe had shifted fully to RL, even though the team was only about 25% confident in the direction at the time; that confidence later rose to about 60%.

The belief: All large-scale agents will integrate RL — pre‑launch or post‑launch.

---

3️⃣ RL Deployment: The Environment Challenge

PPO vs GRPO

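- PPO: Needs a separate value (critic) model that estimates how good each state is, serving as the baseline when computing advantages.

- GRPO: Samples a group of responses to the same prompt and scores each one relative to the rest of the group; simpler and more stable, with no value model.

GRPO Advantage: Evaluation becomes easier, because the reward only has to tell which responses in a group are better or worse.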
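
The core of GRPO’s group-relative scoring fits in a few lines. This sketch computes advantages as GRPO describes them (each reward minus the group mean, divided by the group standard deviation). The use of the population standard deviation and the small `eps` guard against division by zero are choices that vary between implementations, and the clipped policy-gradient update itself is omitted.

```python
import statistics
from typing import List


def grpo_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Group-relative advantages for G completions sampled from the same prompt.

    The group mean acts as the baseline that PPO would instead obtain from a
    separately trained value (critic) model; dividing by the group standard
    deviation puts every group on a comparable scale.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

`grpo_advantages([1, 0, 0, 1])` and `grpo_advantages([10, 0, 0, 10])` both come out at roughly `[1, -1, -1, 1]`: only how responses compare within the group matters, not the absolute reward scale. A group in which every response earns the same reward yields zero advantages and contributes no learning signal.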

Key Obstacle: Robust training environments are hard to build, especially for complex systems.

Why Environments Matter

Agents must train on realistic inputs, outputs, errors, and variability in human behavior. Simulators often limit this diversity, leaving models brittle in real-world scenarios.

Example: High-fidelity coding environments are easier to simulate than dynamic human interaction.
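
What an environment has to supply can be sketched as a toy `reset`/`step` loop in the style popularized by OpenAI Gym (this is not Gymnasium’s actual API). The order-lookup task, `FlakyToolEnv`, and its error rate are all hypothetical; the point is that the simulated user varies its wording and the tool fails intermittently, so a policy trained here sees some of the variability described above.

```python
import random
from dataclasses import dataclass


@dataclass
class StepResult:
    observation: str
    reward: float
    done: bool


class FlakyToolEnv:
    """Toy agent environment: look up an order with a tool, then answer the user.

    Hypothetical design: the simulated user words the request differently each
    episode, and the lookup tool fails with probability `error_rate`.
    Call reset() before step().
    """

    PHRASINGS = (
        "Where is order {oid}?",
        "hey any update on {oid}??",
        "Order {oid} still hasn't arrived. What's the status?",
    )

    def __init__(self, error_rate: float = 0.2, max_steps: int = 6, seed: int = 0):
        self.rng = random.Random(seed)
        self.error_rate = error_rate
        self.max_steps = max_steps

    def reset(self) -> str:
        self.order_id = f"A{self.rng.randint(100, 999)}"
        self.status = self.rng.choice(["shipped", "delayed", "delivered"])
        self.looked_up = False
        self.steps = 0
        return self.rng.choice(self.PHRASINGS).format(oid=self.order_id)

    def step(self, action: str) -> StepResult:
        self.steps += 1
        out_of_steps = self.steps >= self.max_steps
        if action == f"lookup {self.order_id}":
            if self.rng.random() < self.error_rate:
                return StepResult("ERROR: lookup timed out", 0.0, out_of_steps)
            self.looked_up = True
            return StepResult(f"status={self.status}", 0.0, out_of_steps)
        if action.startswith("answer "):
            # Reward only answers grounded in a successful lookup.
            correct = self.looked_up and action == f"answer {self.status}"
            return StepResult("episode finished", 1.0 if correct else 0.0, True)
        return StepResult("ERROR: unknown action", 0.0, out_of_steps)
```

Even this toy shows the cost: each new source of real-world variability (another phrasing, another failure mode) has to be written by hand, which is why richer environments, especially ones involving humans, are hard to build.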

---

4️⃣ Prompt Optimization vs Weight Adjustment

Observation: Prompt tuning can yield big gains, but top-notch prompts are rare (≈10% are excellent).

GEPA & DSPy: These prompt-optimization approaches underperformed compared with OpenPipe’s internal methods.

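Challenge: Static datasets lose relevance as prompts evolve, because data collected under an earlier prompt no longer reflects what the current system produces. This is akin to the limitation of off-policy RL, where training data comes from a different policy than the one being improved.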

Future Trend (2026): Move toward online evaluation for continuous improvement.
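
One way to picture online evaluation is a rolling score per prompt version, computed from live traffic. In this sketch, `OnlineEvaluator` and the grading signal that feeds it are hypothetical; because the scores come from outputs the current prompt actually produced, they avoid the off-policy staleness of a static dataset.

```python
from collections import defaultdict, deque
from statistics import fmean
from typing import Deque, Dict, Optional


class OnlineEvaluator:
    """Rolling quality scores from live traffic, tracked per prompt version.

    Scores come from whatever grader runs in production (a hypothetical judge
    model or user-feedback signal). Keeping only the last `window` scores means
    the estimate follows current traffic instead of a frozen dataset.
    """

    def __init__(self, window: int = 200):
        self.scores: Dict[str, Deque[float]] = defaultdict(lambda: deque(maxlen=window))

    def record(self, prompt_version: str, score: float) -> None:
        self.scores[prompt_version].append(score)

    def mean(self, prompt_version: str) -> Optional[float]:
        recent = self.scores.get(prompt_version)
        return fmean(recent) if recent else None

    def better(self, a: str, b: str, min_samples: int = 30) -> Optional[str]:
        """Return the version with the higher rolling mean, or None if data is thin."""
        sa, sb = self.scores.get(a), self.scores.get(b)
        if not sa or not sb or min(len(sa), len(sb)) < min_samples:
            return None
        return a if fmean(sa) >= fmean(sb) else b
```

The mean comparison in `better` is deliberately crude; a production system would want a significance test or confidence interval before promoting one prompt version over another.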

---

5️⃣ Open vs Closed Models: Token Economics

Reality: Only about 5% of tokens today are served by open-source models; that share may grow if their quality matches closed models.

Closed Model Dominance: Subsidies, quality edge, and infra control keep them ahead (esp. in coding).

Concern: Whether these subsidies are sustainable depends on massive GPU investment and high utilization.

---

6️⃣ Ruler: Breakthrough in Reward Functions

Ruler ranks outputs relative to one another instead of scoring each on an absolute scale, which works especially well with GRPO.

Result: According to Corbitt, the problem of assigning rewards is now largely solved.

Key insight: Evaluation models don’t need to be cutting-edge to train top-performing agents.
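
Relative scoring can be sketched as follows. This is not Ruler’s implementation: `rank_fn` is a hypothetical stand-in for an LLM judge that sees the whole group of trajectories at once and returns their indices from best to worst, and mapping ranks to evenly spaced rewards is one simple choice among many.

```python
from typing import Callable, List, Sequence

RankFn = Callable[[Sequence[str]], List[int]]  # hypothetical judge: best-to-worst indices


def rank_to_rewards(order: Sequence[int]) -> List[float]:
    """Map a best-to-worst ordering of group indices to rewards spaced evenly in [0, 1]."""
    n = len(order)
    rewards = [0.0] * n
    for position, idx in enumerate(order):
        rewards[idx] = 1.0 if n == 1 else 1.0 - position / (n - 1)
    return rewards


def relative_rewards(trajectories: Sequence[str], rank_fn: RankFn) -> List[float]:
    """Ask the judge to order the whole group, then score each trajectory by its rank."""
    order = rank_fn(trajectories)
    if sorted(order) != list(range(len(trajectories))):
        raise ValueError("rank_fn must return a permutation of the group's indices")
    return rank_to_rewards(order)


# Toy judge for illustration only: prefers longer trajectories.
def by_length(trajectories: Sequence[str]) -> List[int]:
    return sorted(range(len(trajectories)), key=lambda i: len(trajectories[i]), reverse=True)


print(relative_rewards(["a", "abc", "ab"], by_length))  # [0.0, 1.0, 0.5]
```

Because GRPO normalizes rewards within each group anyway, the judge only has to put the group in the right order, not agree with any absolute scale; that is one plausible reason a judge that is not cutting-edge can still train a strong agent.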

---

7️⃣ World Models as Environment Simulators

World Models simulate system behavior and state changes, potentially solving costly environment setup problems.
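
A learned world model can be caricatured with a count table. This sketch swaps in the simplest possible technique, a tabular estimate of P(next_state | state, action) fitted to logged transitions; real world models would be neural networks or prompted LLMs, and the state and action labels below are hypothetical. Once fitted, `step` can stand in for the real system when generating training rollouts.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, Hashable, Iterable, Optional, Tuple

Transition = Tuple[Hashable, Hashable, Hashable]  # (state, action, next_state)


class TabularWorldModel:
    """Estimates P(next_state | state, action) by counting logged transitions."""

    def __init__(self, seed: int = 0):
        self.counts: Dict[Tuple[Hashable, Hashable], Counter] = defaultdict(Counter)
        self.rng = random.Random(seed)

    def fit(self, transitions: Iterable[Transition]) -> None:
        for state, action, next_state in transitions:
            self.counts[(state, action)][next_state] += 1

    def step(self, state: Hashable, action: Hashable) -> Optional[Hashable]:
        """Sample a simulated next state, or None if the pair never appeared in the logs."""
        seen = self.counts.get((state, action))
        if not seen:
            return None
        outcomes = list(seen)
        return self.rng.choices(outcomes, weights=[seen[o] for o in outcomes], k=1)[0]


# Hypothetical logs from a real system, reused as a simulator.
logs = [
    ("cart_empty", "add_item", "cart_has_item"),
    ("cart_has_item", "checkout", "order_placed"),
    ("cart_has_item", "checkout", "order_placed"),
    ("cart_has_item", "checkout", "payment_failed"),
]
model = TabularWorldModel(seed=0)
model.fit(logs)
print(model.step("cart_empty", "add_item"))  # cart_has_item
```

Returning `None` for unseen pairs makes the main limitation explicit: a world model can only simulate behavior that its training data covers.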

Coding exception: Code can be executed directly — simulation less necessary.
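
For code, the environment can simply run the candidate and check it. In this minimal sketch, `pass_rate_reward` is a hypothetical helper that turns unit-test results into a reward between 0 and 1. It calls `exec` on model output in the current process for brevity; a real setup would sandbox execution with time and memory limits.

```python
from typing import Any, Dict, List, Tuple

TestCase = Tuple[tuple, Any]  # (positional arguments, expected return value)


def pass_rate_reward(source: str, func_name: str, tests: List[TestCase]) -> float:
    """Reward = fraction of test cases the generated function passes.

    WARNING: exec() runs untrusted model output in this process; use a sandbox
    with resource limits in any real training setup.
    """
    namespace: Dict[str, Any] = {}
    try:
        exec(source, namespace)
        fn = namespace[func_name]
    except Exception:
        return 0.0  # code that fails to compile or define the function earns nothing
    passed = 0
    for args, expected in tests:
        try:
            if fn(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash on one test case counts as a failure for that case
    return passed / len(tests) if tests else 0.0


TESTS: List[TestCase] = [((2, 3), 5), ((0, 0), 0), ((-1, 1), 0)]
print(pass_rate_reward("def add(a, b):\n    return a + b\n", "add", TESTS))  # 1.0
```

A syntax error or a missing function scores 0, so the reward also penalizes code that does not run at all.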

Goal: Enable agents to learn from real-world mistakes and adapt continuously, which could unblock most of the proof-of-concept (PoC) projects that are currently stalled.

---

8️⃣ Reward Hacking in Online RL

Reward hacking is typically easy to detect, because once a model finds a loophole it exploits it repeatedly.

Solution: Adjust reward prompts to explicitly punish exploitative behavior.
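
Both halves of that advice can be sketched. The hypothetical `flag_repeated_exploits` flags outputs that dominate the high-reward rollouts, since a loophole tends to show up as the same answer winning again and again; the hypothetical `add_penalty_rule` appends an explicit rule to an LLM judge’s prompt. The thresholds and the example rule text are assumptions, and a flagged output still needs a human to confirm it is actually an exploit.

```python
from collections import Counter
from typing import List, Sequence


def flag_repeated_exploits(
    outputs: Sequence[str],
    rewards: Sequence[float],
    reward_threshold: float = 0.9,
    min_share: float = 0.5,
) -> List[str]:
    """Return normalized outputs that make up at least `min_share` of high-reward rollouts."""
    # Normalize case and whitespace so trivial variants of one exploit are grouped.
    high = [" ".join(o.lower().split()) for o, r in zip(outputs, rewards) if r >= reward_threshold]
    if not high:
        return []
    counts = Counter(high)
    return [o for o, c in counts.items() if c / len(high) >= min_share]


def add_penalty_rule(judge_prompt: str, behavior: str) -> str:
    """Append an explicit rule telling the judge to punish a discovered exploit."""
    return judge_prompt.rstrip() + f"\n- Assign the lowest score to any response that {behavior}."


outputs = ["All tests passed!", "all tests  passed!", "def f(): ...", "All tests passed!"]
rewards = [1.0, 1.0, 0.95, 0.2]
print(flag_repeated_exploits(outputs, rewards))  # ['all tests passed!']
```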

---

References

- Why Fine-Tuning Lost and RL Won, Kyle Corbitt (OpenPipe), Latent Space interview (YouTube)


---

Editor’s Note:

This interview captures a key industry shift — from parameter-efficient fine-tuning (LoRA) to task-optimized reinforcement learning, with infrastructure bottlenecks now being the primary barrier. The emergence of general reward functions like Ruler and simulation tools like World Models may define the next competitive advantage in AI deployment.
