# COLA: Sensor-Free Human–Robot Collaboration

*(Leave it to college students to know how to have fun! doge)*

---

## A Robot Sidekick with Campus Energy

While casually surfing the web, I stumbled upon something fascinating:

A male college student has a **robot teammate** — and it’s *incredibly clingy* (well, sort of~).

**Scenes from their day:**

1. **Morning Supermarket Shift**

   The robot follows him around during his part-time supermarket job. Once the goods are packed, it happily pulls the cart and handles stairs with ease.

2. **Noon Campus Cafeteria**

   It helps push the meal cart and reacts instantly to simple gestures: a pat on the head means *stop*.

3. **Post-Work Workout**

   After a long day, the robot even joins him for exercise.

4. **Vlog Potential**

   Imagine filming from the robot’s perspective: *“A High-Energy Robot’s Day”*.

---

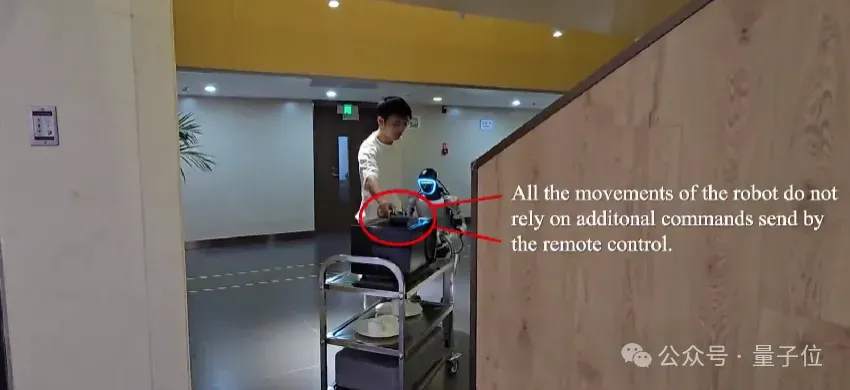

## The Unusual Interaction Method

Did you notice? All interaction is done via **patting its head** or **tugging its body**.

No remote control. **No voice commands**.

---

## From Fun to Research

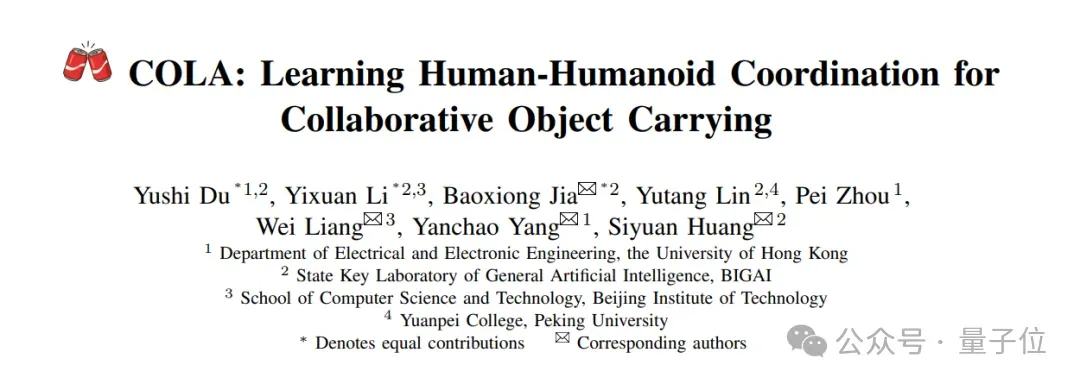

It turns out this is part of a genuine research project, complete with an accompanying academic paper on arXiv.

---

## The Problem They’re Tackling

**Scenario:** *Human–robot collaborative object carrying*

- **Robotic arms:** well researched and validated.

- **Humanoid robots:** still largely unexplored.

**Challenge:**

Humanoids have **complex whole-body dynamics**: they must coordinate torso, limbs, and joints, maintain balance, and cope with diverse environmental factors, all at the same time.

**Goal:**

Enable **seamless human–humanoid collaboration** for moving varied objects.

---

## Their Solution: COLA

### Overview

**COLA** is a novel reinforcement learning method that operates **without cameras, LiDAR, or external sensors**, relying purely on **proprioception**.

---

### Key Design Philosophy

#### 1. Unified Collaboration Policy

- Traditional approaches: separate “robot-leading” and “human-leading” modes, and switching between them causes lag.

- **COLA merges both roles into *one policy***:

  - Steady human force → the robot follows.

  - Human hesitation or instability → the robot leads and stabilizes.

  - No manual input is needed; roles switch fluidly.

- Even tasks like carrying heavy items upstairs become smooth.
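The role switching described above can be made concrete with a deliberately simple, hand-written Python toy. It is *not* COLA’s policy: COLA learns this behavior with reinforcement learning, while the sketch hard-codes it. The function names `steadiness` and `unified_command`, the variance-based steadiness score, and the gain value are all hypothetical.

```python
from statistics import fmean, pstdev

def steadiness(force_history):
    """Score in (0, 1]; 1.0 means the recent human force is perfectly steady.

    Hypothetical measure: low spread in recent force readings = confident guidance.
    """
    return 1.0 / (1.0 + pstdev(force_history))

def unified_command(force_history, own_plan_velocity, gain=0.05):
    """One function for both roles: blend 'follow the human' with 'keep my own plan'."""
    follow_velocity = gain * fmean(force_history)  # move along the human's push/pull
    w = steadiness(force_history)                  # how much to trust the human right now
    return w * follow_velocity + (1.0 - w) * own_plan_velocity

steady = [20.0, 20.0, 20.0, 20.0]  # constant 20 N pull
shaky = [20.0, -5.0, 15.0, -10.0]  # hesitant, inconsistent force
print(unified_command(steady, own_plan_velocity=0.3))  # follows the human's pull
print(unified_command(shaky, own_plan_velocity=0.3))   # stays close to its own plan
```

With a constant pull the output is pure “follow the human”; with a jittery force it stays near the robot’s own planned velocity. The only point is that a single function covers both roles, with no explicit mode switch.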

---

#### 2. Dynamic Closed-Loop Training

- The training environment simulates unpredictable events:

  - Sudden changes in movement.

  - Shifts in object weight.

  - Grip slips.

- Focus: real-world robustness.
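Randomizing such events independently for every episode is a common way to train for robustness. The sketch below shows what a per-episode disturbance schedule might look like. The event types come from the list above, but the class and field names, the value ranges, and the 20% slip probability are made-up placeholders, not values from the paper.

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class EpisodeDisturbances:
    """Perturbations scheduled for one simulated carrying episode (all ranges hypothetical)."""
    command_switch_step: int       # step at which the partner's motion suddenly changes
    new_command_mps: float         # new target walking velocity after the switch, m/s
    mass_shift_step: int           # step at which the object's mass changes
    mass_shift_kg: float           # size of that mass change, kg
    grip_slip_step: Optional[int]  # step at which the grip slips, or None for no slip

def sample_disturbances(rng: random.Random, episode_len: int = 1000,
                        slip_prob: float = 0.2) -> EpisodeDisturbances:
    """Draw a fresh, independent disturbance schedule for the next training episode."""
    return EpisodeDisturbances(
        command_switch_step=rng.randrange(episode_len),
        new_command_mps=rng.uniform(-1.0, 1.0),
        mass_shift_step=rng.randrange(episode_len),
        mass_shift_kg=rng.uniform(-2.0, 2.0),
        grip_slip_step=rng.randrange(episode_len) if rng.random() < slip_prob else None,
    )

rng = random.Random(0)  # fixed seed so the schedules are reproducible
for _ in range(3):
    print(sample_disturbances(rng))
```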

---

#### 3. Continuous Feedback Loops

- The robot’s actions alter the environment, and those changes in turn shape its next decisions.

- This mirrors the dynamics of real human–robot carrying.
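A toy one-dimensional version of this loop, assuming a spring-like object held between human and robot and a simple proportional rule standing in for the learned policy. The function name `rollout` and every constant are hypothetical.

```python
def rollout(steps=200, dt=0.02, human_speed=0.5, stiffness=200.0, gain=0.01, rest_len=1.0):
    """Toy 1-D carry: sensed force -> robot action -> new state -> next sensed force."""
    human, robot = rest_len, 0.0  # start with the object at its rest length
    forces = []
    for _ in range(steps):
        # What the robot "feels" through the object (spring model, N).
        force = stiffness * (human - robot - rest_len)
        forces.append(force)
        robot_velocity = gain * force  # stand-in policy: move with the felt pull
        robot += robot_velocity * dt   # the robot's action changes the environment...
        human += human_speed * dt      # ...while the human keeps walking
    return forces                      # each force depends on all earlier actions

forces = rollout()
print(round(forces[0], 2), round(forces[-1], 2))
```

Because the robot’s motion changes the very force it senses next, the loop settles: the felt pull converges toward `human_speed / gain` (50 N with these defaults), the point at which the robot walks exactly as fast as the human.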

---

## Why COLA’s Approach Is Different

**COLA’s Core Advantage:**

Operates purely through *intrinsic perception* (proprioception), using only internal data:

- Joint angles.

- Actuator force feedback.

- Position and velocity information.
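To illustrate what “purely internal data” means for a policy input, here is a hypothetical observation builder. The class name `ProprioReading`, the field names and units, and the use of a short history of readings are assumptions for this sketch, not COLA’s documented interface. The key property is that nothing in it comes from a camera or LiDAR.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ProprioReading:
    """One timestep of internal sensing (illustrative fields only)."""
    joint_pos: List[float]     # joint angles, rad (joint encoders)
    joint_vel: List[float]     # joint velocities, rad/s
    joint_torque: List[float]  # actuator force/torque feedback, N*m
    base_vel: List[float]      # estimated base velocity components

def build_observation(history: List[ProprioReading]) -> List[float]:
    """Concatenate the last few readings, oldest first, into one flat policy input."""
    obs: List[float] = []
    for reading in history:
        obs.extend(reading.joint_pos)
        obs.extend(reading.joint_vel)
        obs.extend(reading.joint_torque)
        obs.extend(reading.base_vel)
    return obs

# Two-joint toy robot, three timesteps of history.
history = [ProprioReading([0.1, -0.2], [0.0, 0.5], [1.5, -0.3], [0.2, 0.0, 0.0])
           for _ in range(3)]
print(len(build_observation(history)))  # 3 * (2 + 2 + 2 + 3) = 27
```

Stacking several timesteps lets a policy notice *changes* in joint torque, which is one plausible way a partner’s push or pull shows up in proprioception.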

**Benefits:**

- Unaffected by visual conditions such as poor lighting or occlusion.

- No remote control needed.

- Lower hardware cost and system complexity.

---

### Technical Steps

1. **Residual Teacher Fine-Tuning**

   - Train a base locomotion policy.

   - Add a *residual teacher* that learns the adjustments needed for cooperative carrying.

   - Merge the two into a unified collaboration policy.

2. **Simulation Training & Knowledge Distillation**

   - Run millions of safe simulation episodes.

   - Train a strong “teacher” model.

   - Distill its knowledge into a lightweight “student” policy that runs on the real robot.
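The two steps can be sketched in miniature. Everything here is a toy stand-in assumed for illustration: linear functions replace neural networks, the residual weight `w` is taken as already learned (in COLA that learning happens with reinforcement learning), and the student is fit by plain supervised regression on teacher labels (behavior cloning), whereas the real pipeline may use a different distillation scheme. The teacher sees the simulator’s exact human force (privileged information); the student only sees a noisy joint-torque reading `tau`, whose relation to the force (`tau = 0.1 * force`) is invented.

```python
import random

def base_policy(q):
    """Step 1: frozen locomotion policy (toy linear stand-in)."""
    return -0.8 * q

def residual(human_force, w=0.02):
    """Carrying correction learned on top of the frozen base (w assumed already trained)."""
    return w * human_force

def teacher(q, human_force):
    """Unified teacher = base action + residual correction, using privileged force."""
    return base_policy(q) + residual(human_force)

def make_dataset(n, rng):
    """Pair proprioceptive student inputs (q, tau) with teacher action labels."""
    data = []
    for _ in range(n):
        q = rng.uniform(-1.0, 1.0)               # e.g. a joint-angle deviation
        force = rng.uniform(-50.0, 50.0)         # privileged: only the teacher sees this
        tau = 0.1 * force + rng.gauss(0.0, 0.1)  # hypothetical noisy torque reading
        data.append((q, tau, teacher(q, force)))
    return data

def distill(data, lr=0.05, epochs=300):
    """Fit a linear student a*q + b*tau to teacher actions by gradient descent on MSE."""
    a = b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_a = grad_b = 0.0
        for q, tau, target in data:
            err = (a * q + b * tau) - target
            grad_a += 2.0 * err * q / n
            grad_b += 2.0 * err * tau / n
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b

rng = random.Random(0)
a, b = distill(make_dataset(500, rng))
print(f"student: action = {a:.3f} * q + {b:.3f} * tau")  # roughly a = -0.8, b = 0.2
```

The student never sees the force directly, yet it recovers the teacher’s behavior from the torque reading alone, which is the essence of handing privileged simulation knowledge to a deployable policy.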

---

### Predictive Insights

COLA implicitly predicts:

- **Object movement trend** (tilting risk, direction).

- **Human intent signals** (e.g., an upcoming turn or a needed change in force).

**Result:**

Balanced load sharing between robot and human through coordinated trajectory planning.

---

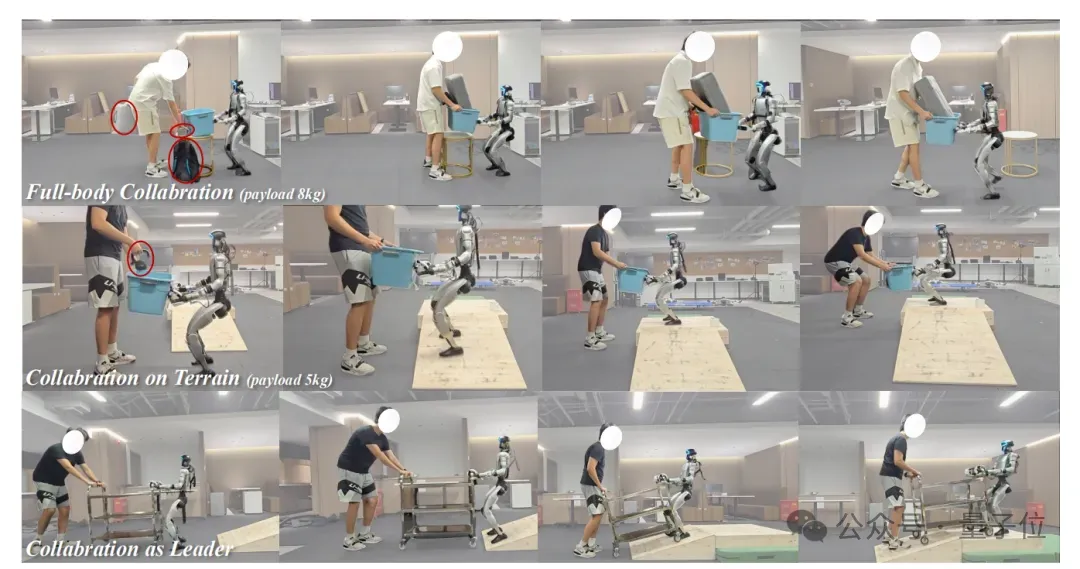

## Experimental Validation

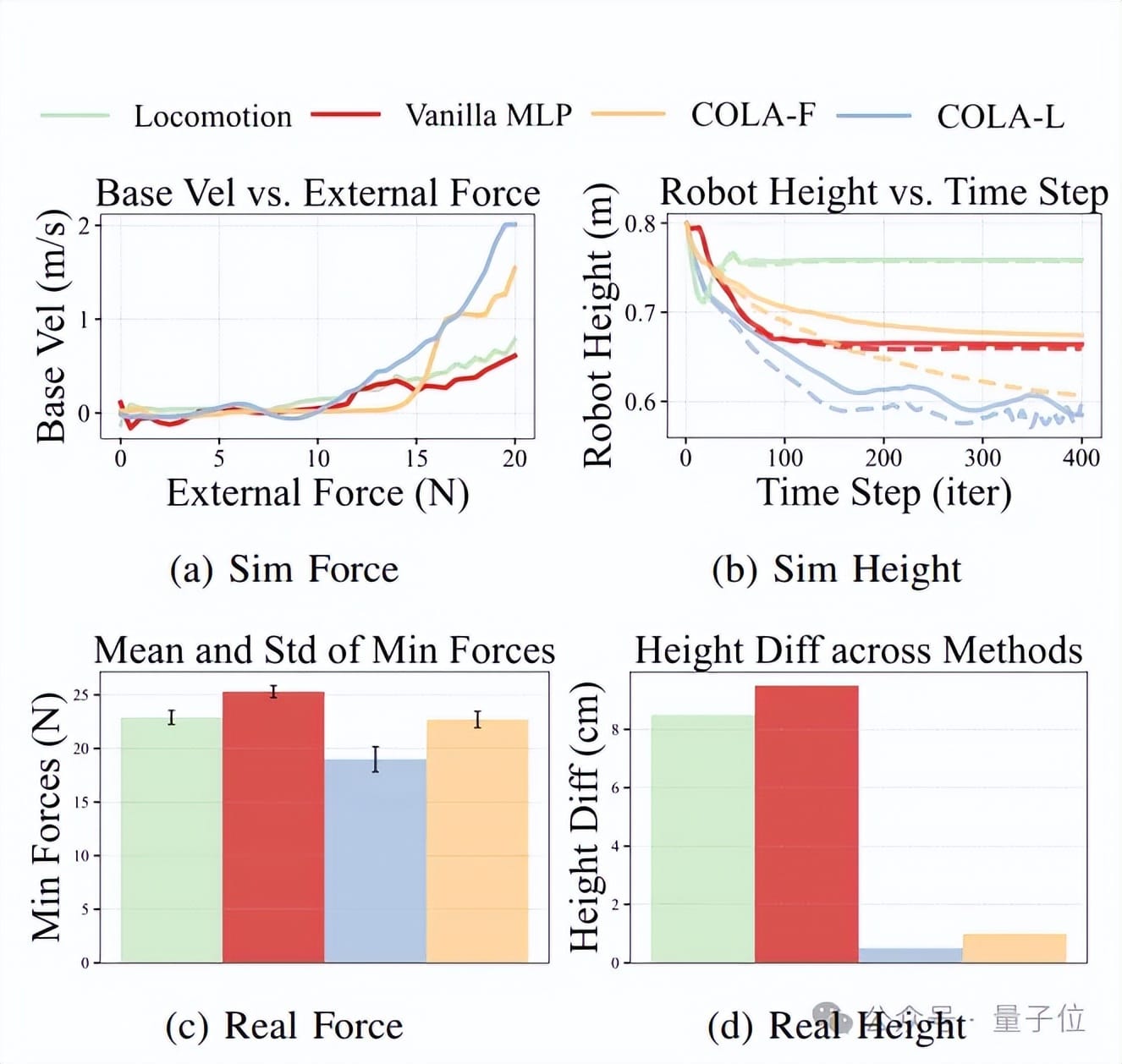

**Simulation Results:**

- Outperformed explicit goal-estimation and Transformer-based baselines in:

  - Movement precision (velocity, angular velocity, and height error).

  - Human effort (lower average external force).

- **Leader-oriented COLA-L** outperformed **follower-oriented COLA-F**.
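Metrics like these are typically computed from logged simulation episodes. A minimal sketch, assuming mean absolute error for each tracking quantity and a per-step scalar force magnitude as the human-effort measure; the log keys and example numbers are hypothetical, and the paper’s exact metric definitions may differ.

```python
from statistics import fmean

def mean_abs_error(target, actual):
    """Average absolute gap between commanded and achieved values."""
    if len(target) != len(actual):
        raise ValueError("target and actual must have the same length")
    return fmean(abs(t - a) for t, a in zip(target, actual))

def evaluate(log):
    """Summarize one episode; lower is better for every entry."""
    return {
        "lin_vel_err": mean_abs_error(log["cmd_vel"], log["vel"]),            # m/s
        "ang_vel_err": mean_abs_error(log["cmd_yaw_rate"], log["yaw_rate"]),  # rad/s
        "height_err": mean_abs_error(log["cmd_height"], log["height"]),       # m
        "avg_ext_force": fmean(abs(f) for f in log["human_force"]),           # N, effort proxy
    }

log = {
    "cmd_vel": [0.5, 0.5, 0.5], "vel": [0.4, 0.5, 0.6],
    "cmd_yaw_rate": [0.0, 0.2, 0.2], "yaw_rate": [0.0, 0.1, 0.2],
    "cmd_height": [0.8, 0.8, 0.8], "height": [0.79, 0.8, 0.82],
    "human_force": [12.0, -8.0, 10.0],
}
print(evaluate(log))
```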

**Real-World Tests:**

- Objects: Boxes, flexible stretchers.

- Patterns: Straight walking, turning.

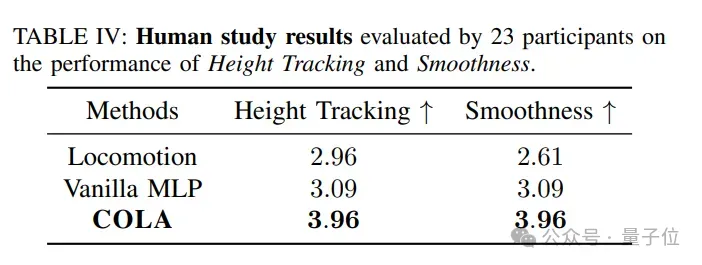

**Human Trials:**

- 23 participants.

- COLA rated **highest in tracking accuracy & smoothness**.

---

## The Team Behind COLA

### Co-First Authors

- **Yushi Du** – Dept. of EEE, University of Hong Kong.

- **Yixuan Li** – Ph.D., Beijing Institute of Technology; interests in humanoid robotics.

---

### Corresponding & Senior Authors

- **Baoxiong Jia** – Research scientist, Beijing Institute for General AI; Peking Univ. & UCLA alumnus; multiple 2025 conference papers.

- **Wei Liang** – Professor, BIT; heads PIE Lab; focuses on computer vision & VR.

- **Yanchao Yang** – Asst. Professor, University of Hong Kong; specializes in embodied intelligence.

- **Siyuan Huang** – Director, Center for Embodied AI & Robotics; teaches at Peking Univ.; worked at DeepMind, Facebook Reality Labs.

---

### Other Contributors

- **Yutang Lin** – Third-year student, Peking University.

- **Pei Zhou** – Ph.D. student, University of Hong Kong.

---

## References

- **Paper:** https://www.arxiv.org/abs/2510.14293

- **Project Homepage:** https://yushi-du.github.io/COLA/

- **Social Reference:** https://x.com/siyuanhuang95/status/1980517755163185642

---

**What do you think?**

---